Ask any SEO what SEO-related task makes them cringe the most. They will likely respond with link building or website migrations. Most everyone aligns on the first one: link building really can be a pain. The second response always surprises me. I am a big fan of website migrations and even wrote about why you should be prioritizing your migration right now.

Why so much angst about website migrations? It’s not without merit. A poor migration without SEO in mind can lead to significant performance drops. Below is an image of just one example that keeps SEOs up at night. I’m not going to write about each step in a successful migration but will go into detail on scaling solutions for one of the bigger pain points: redirects.

This website’s failed migration in 2018 took them nearly 3 years to regain their organic performance

Purpose of Redirects for a Website Migration

In order to avoid catastrophic organic performance drops after a website migration takes place, 301 redirects need to be put in place. The process begins with mapping each and every page on your old website to your new website. This requires a 1:1 mapping. Once the URLs are mapped you can leverage your HTACCESS file to implement a 301-status redirect for each URL. Failure to appropriately map out redirects or use the proper 301 status is often the cause for a decline in organic traffic post-launch.

Mapping your core website pages (homepage, about us, category pages or even articles/blog) is fairly straightforward even if it’s done manually. However, how do you map redirects for an ecommerce website that has thousands, hundreds of thousands or even millions of project pages? Let’s cover two unique approaches.

Approach ONE: Identifying URL Patterns

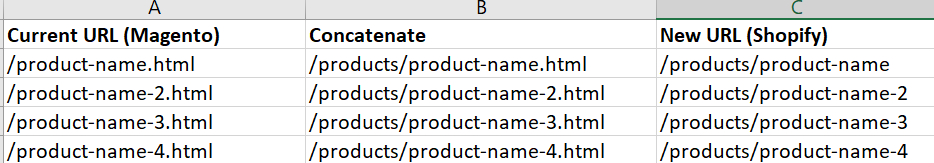

Leveraging your current website and dev environments you will be able to compare a single product. Assuming your migration requires a URL change now is the time to identify any patterns. For instance, if your current website’s CMS is Magento and you’re migrating to Shopify, below are the two different URLs for the same product.

MAGENTO .com/product-name.html

SHOPIFY .com/products/product-name

In this instance we can use some Excel wizardry to scale URL mapping without needing to map each product individually.

Column A – list all your existing site’s product URLs (leverage your favorite crawler to get this)

Column B – Use “concatenate” formula =CONCATENATE(“/products”,A2)

Column C – Delete the last 5 characters (.html) through the LEFT formula =LEFT(B2, LEN(B2)-5)

Run your column C values through your SEO crawler (after appending your dev URL) to ensure all rows result in a 200 status. If this is successful, you now have a scalable solution for all product URLs from the old Magento site to the new Shopify site.

Approach TWO: What if the product URLs are different?

What if your old website product URLs didn’t use the product name or were dynamically generated by a database? I’ve got a tip for this too. It’s going to require identifying patterns and an SEO crawler such as Oncrawl or Screaming Frog SEO Spider.

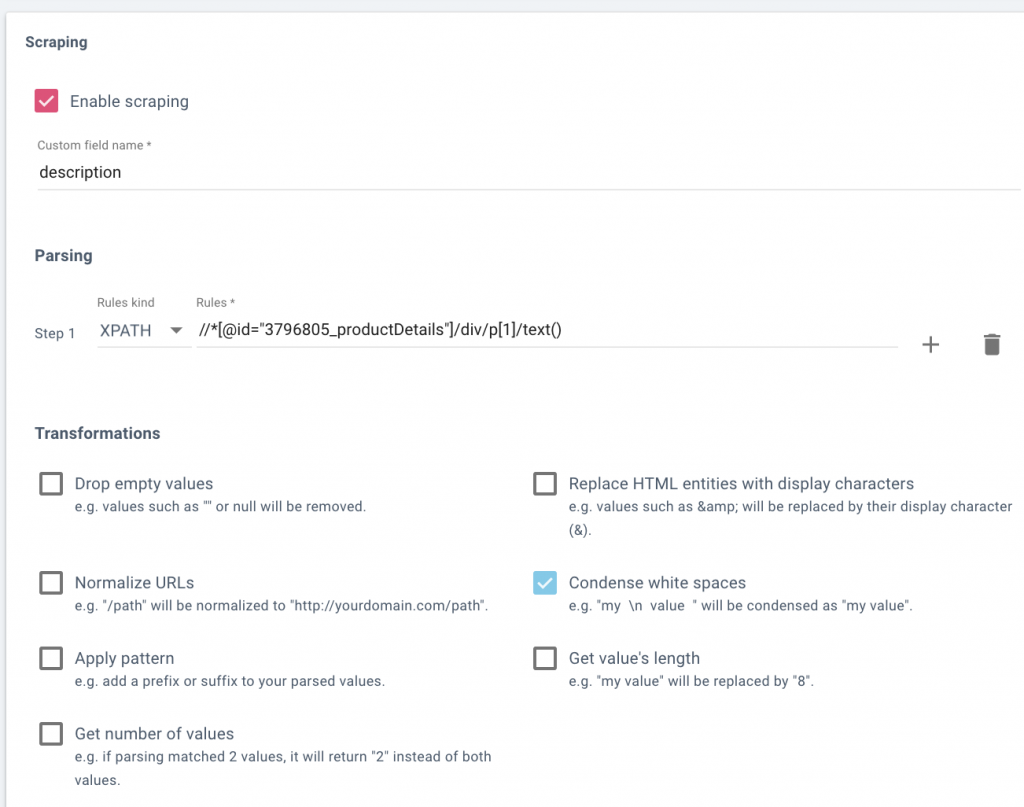

Example: the old website generates product URLs by the product SKU value.

Old URL: .com/product/38472

New URL: /com/product/grey-baseball-cap

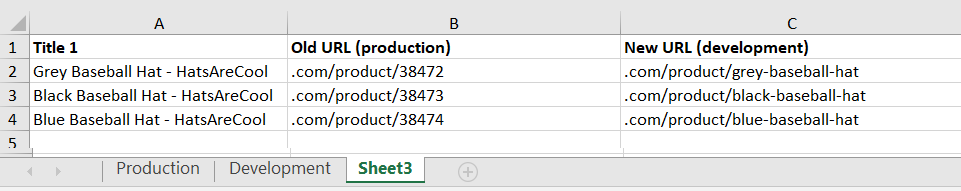

Solution 1: Compare/match Title Tags (VLOOKUP)

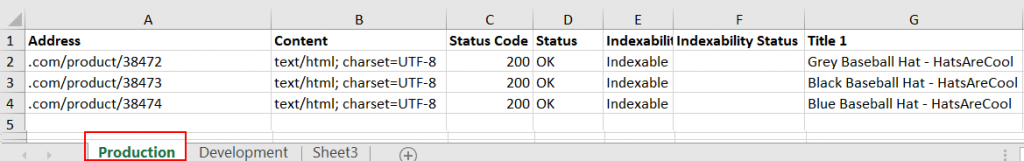

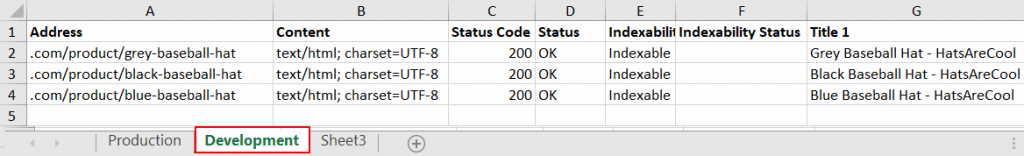

If no easy URL matching solution exists between the two sites, we’ll have to move on to the next solution. Did the production title tag values get pulled over to the new development site? If so, we can leverage crawls to compare both production and the new dev site to find matching values.

Example: No product URL correlation but title tag values match

Step One: Run full crawls of both the current production website as well as the development environment.

Step Two: Export both crawls to one Excel document, each in their own dedicated tab.

Step Two: We’re going to be running a VLOOKUP value and to make this function work properly we need to put the title tag value in front of the URL. After moving column G to Column B each tab will look like this.

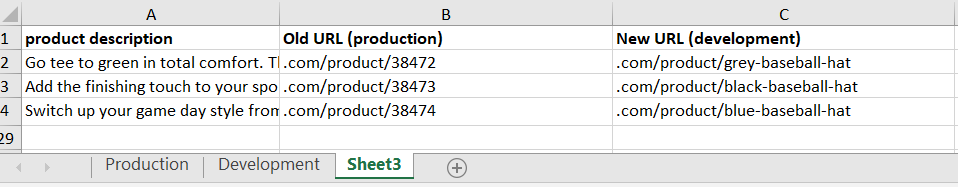

Step Three: Open up a “sheet 3” tab and in column A copy and paste the title tag values from your development tab. Set up column B to list your production URL. Column C will be your new dev URL.

Step Four: Run a VLOOKUP from your sheet3 against your production and development tabs matching the title tag values. If you set up your sheets exactly as how I did this is the VLOOKUP code you’ll need for each value.

=VLOOKUP(A2,Production!$A1:B100000,2,FALSE)

=VLOOKUP(A2,Development!$A1:B100000,2,FALSE)

*note that if you have more than 100,000 values in your spreadsheets then you’ll need to change the B1 value to be larger than the default 100,000 value I put in place.

The final result of running the crawls, organizing the data in the spreadsheet, and running VLOOKUP is a single sheet with your current URLs and your new development sites URL.

Solution 2: Comparing Product Body Copy (XPath/VLOOKUP)

When URLs are completely different and title tags don’t match you might be tempted to roll up your sleeves and start manually matching the URLs. STOP – I’ve got one more tip for you.

We’re going to utilize custom extraction to pull and match body copy of individual product pages. We’ll then leverage the VLOOKUP command we used in the title tag example to match the two URLs.

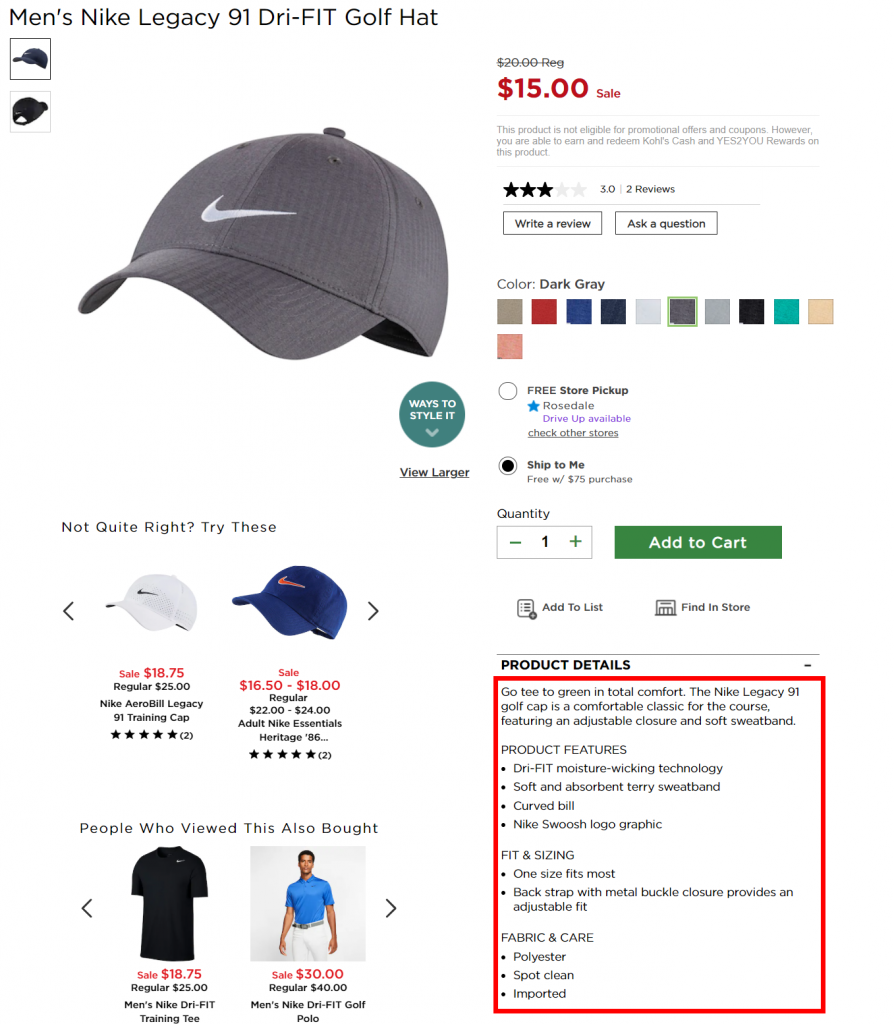

Step One: Open up a matching product page on both production and the development site. Validate that the product descriptions are indeed the same on both sites.

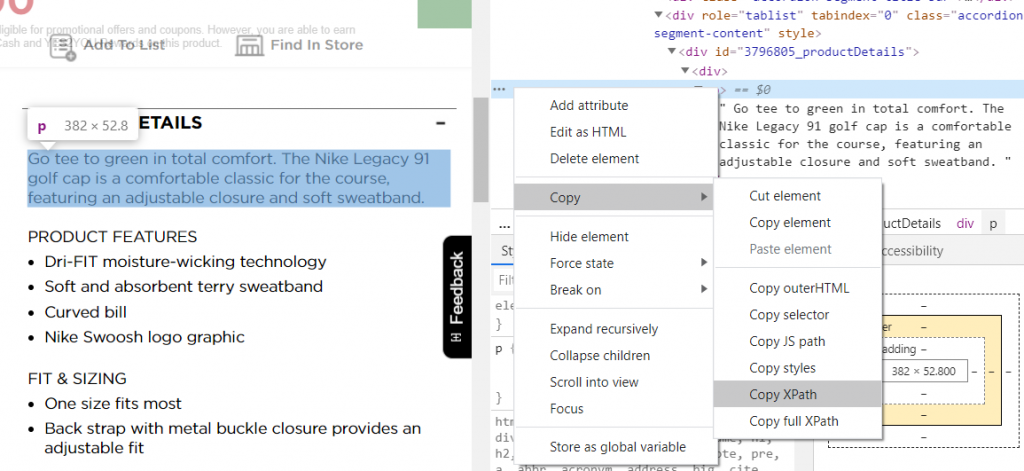

Step Two: In Chrome web browser you can right click on the product description and click “inspect element”. This will open up Chrome dev tools and bring you to the section of code that we’ll be scraping.

Within Chrome dev tools, right click again and select “Copy” and then “Copy XPath”. You’ll get a value similar to this //*[@id=”3796805_productDetails”]/div/p[1]

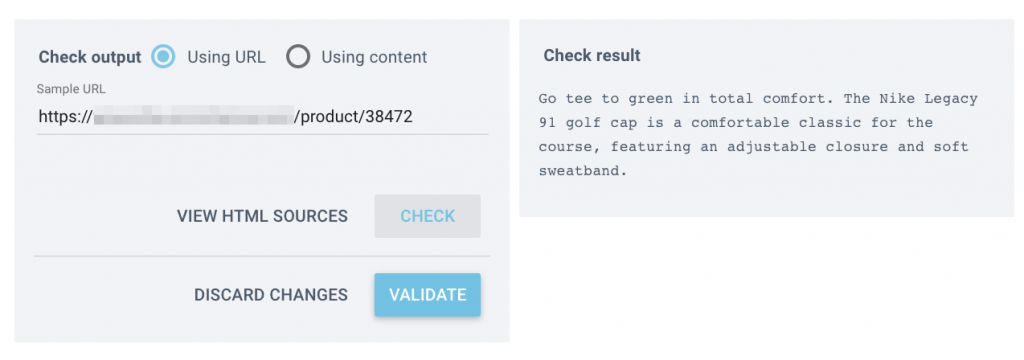

Step Three: In Oncrawl, navigate to Scraping in the crawl profile setup (+ Set up new crawl > Scraping). Enter a name for this field, such as “description” and paste your XPath code you recently copied from Chrome dev tools. You’ll need to add “/text()” at the end to capture the product description text.

In this example, I’ve also checked “Condense whitespace” to avoid any paragraph characters in the description.

Step four: Test your custom extraction logic.

In Oncrawl, before saving your rule, you should enter a few URLs in the “Check output” box at the bottom and make sure that when you click “Check” you see the description in the box at the right:

After validating that the extraction logic is working correctly, go ahead and run all your product URLs. You will be able to export all your extracted descriptions (tied to a URL) after the crawl is complete.

Step five: At this point you will be repeating steps 1-3 for your development site. You will now have two different tabs for each environment where each product URL has an extracted description associated with it.

Step six: Similar to the title tag example, we are going to use VLOOKUP to match product descriptions between production and development sites. If done correctly, you’ll have a list of old and new URLs that you can now use to map your redirects.

URL logic, Title Tags and Description Matching Failed?

Don’t give up. I promise you the last thing you want to do is dedicate the number of hours needed to manually match all of these URLs. Here are a few other tactics I’ve seen used with varying success:

- Identify and match Schema.org markup values

- Identify and match image name and or image alt tags

- Sometimes you’re lucky and an actual SKU will be a part of a product template

- Identify and match product reviews

Last Ditch Effort for Mapping Product Redirects

Sometimes products go through such an overhaul that it’s impossible to not have to manually build a 1:1 mapping. If this is your situation consider using all the tactics above to identify as many as you can. As a last resort consider tasking your summer interns or more junior resources to help solve for the remaining unmatched products.

While the tactics listed above aren’t a fire-proof solution, I’ve found that it solves for a significant amount of work. Even if it solves for 75% of your redirects you’ll be thankful for getting back the time that you would have otherwise spent manually mapping these redirects.