We are pleased to introduce yet another new feature that extends the capabilities of our SEO log analyzer: custom alerts for problems in your log monitoring process.

Whether you are interested in organic traffic to your website or in search engine bot activity on it, you’ll want to be notified as soon as we find a gap in your data. This type of notification is very important in log analysis, because a gap in the data changes how you read the analysis.

The advantage of server logs for SEO is due to two elements:

- First, the data in your logs are the only data that are guaranteed to be complete, unaggregated, and without hidden or “not set” data. Logs give you a much more complete view of activity on your site than the tools made available by Google, such as Google Analytics or Google Search Console.

- Second, logs do not depend on scripts or third-party services: server logs by definition capture all the requests for content on your site.

However, when sections of log data are missing, both of these advantages are lost.

When your monitoring process is working, logs are a reliable source of information that lets you complement your analytics solution, validate the visibility of an entire site to search engines, or even track your crawl budget if your site is large enough that you need to pay special attention to which content is presented to robots as a priority for indexing.

Why monitor your server logs with Oncrawl?

While log analysis can be used by developers to monitor the performance of a web server, Oncrawl’s log analyzer is built specifically to meet SEO needs.

Oncrawl allows you to take server log analysis even further by blending server log data with data from crawls. This type of cross-analysis allows you to discover the impact of technical SEO metrics on the behavior of search engine robots, to understand the correlations between technical SEO optimizations and the pages that attract the most visitors from search engines, and to prioritize SEO projects according to the pages that attract the most SEO traffic.

In addition, recent new features of Oncrawl’s log analyzer make it even more powerful.

Three great (new) reasons to analyze your logs with Oncrawl

1. Alerting for monitoring process problems

Don’t wait until your next login to discover a problem with your monitoring!

In log monitoring, the SEO team is often at the end of the line, or even out of the loop when changes are made to the way log files are collected and transferred.

Whether the implementation of the log transfer process for SEO monitoring is your own responsibility, or whether it was set up for you by someone else, it is important to be able to detect flaws in the procedure as soon as possible:

- A script that hasn’t executed

- A format that has been changed

- A broken component

- An update or a provider change that impacts your logs

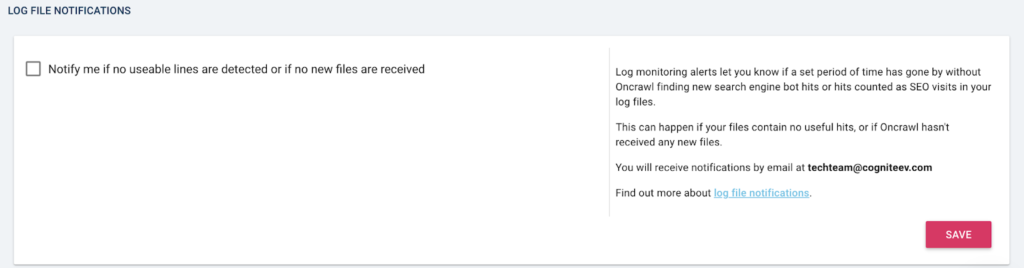

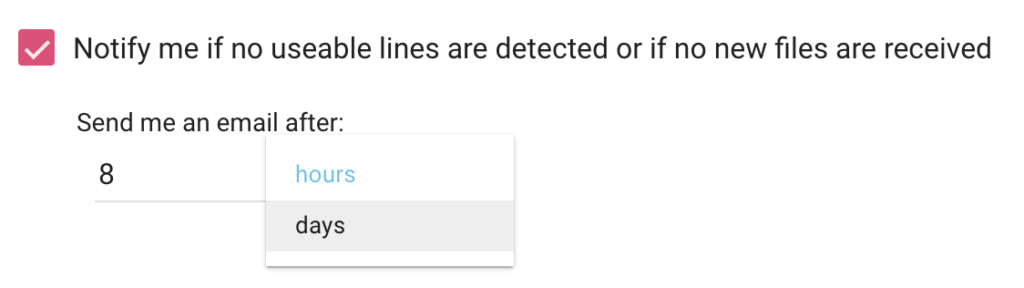

There are two types of problems with your log monitoring data that now trigger an alert:

- Oncrawl has not received any new log files within a defined period of time

- Oncrawl has received new files, but no recent lines showing organic visits or search engine bot visits have been detected.

Alerts can be turned on or off at any time, and you can set or change the threshold of time before an alert is triggered whenever you want.
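
The two conditions above can be pictured as a simple check. The following is a minimal sketch, not Oncrawl’s actual implementation: the function name, its parameters, and the alert labels are hypothetical, and it assumes you already know when the last log file arrived and the timestamp of the most recent line showing an organic visit or a search engine bot visit.

```python
from datetime import datetime, timedelta
from typing import Optional


def check_log_monitoring(
    now: datetime,
    last_file_received: Optional[datetime],
    last_seo_line: Optional[datetime],
    threshold: timedelta,
) -> Optional[str]:
    """Return a hypothetical alert label, or None if monitoring looks healthy.

    last_file_received: when the most recent log file arrived (None if never).
    last_seo_line: timestamp of the most recent organic-visit or bot-visit
        line found in the received files (None if none was found).
    threshold: how long a gap is tolerated before alerting.
    """
    # Case 1: no new log files within the defined period.
    if last_file_received is None or now - last_file_received > threshold:
        return "no_new_files"
    # Case 2: files are arriving, but they contain no recent SEO-relevant lines.
    if last_seo_line is None or now - last_seo_line > threshold:
        return "no_recent_seo_lines"
    return None
```

Checking for missing files first keeps the two alerts distinct: a stalled transfer is reported as such, rather than as an absence of bot or organic activity.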

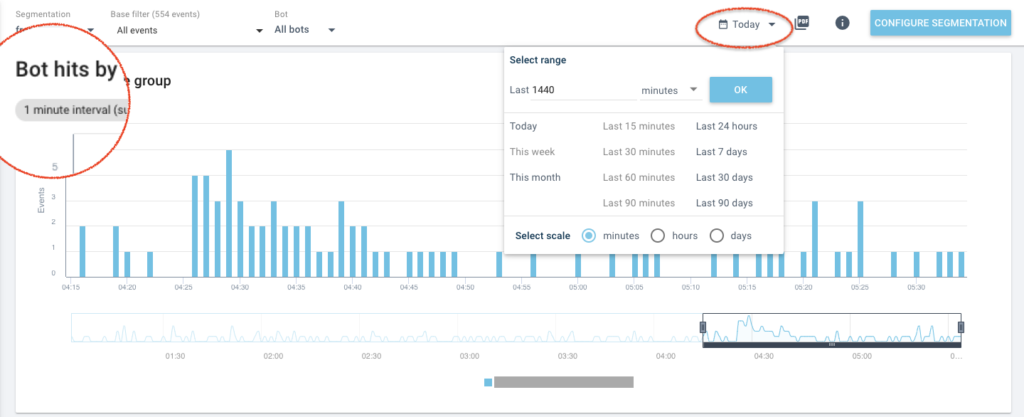

2. Live bot tracking

Oncrawl now allows all log analysis users to monitor the activity on their websites hour by hour or minute by minute.

There are plenty of cases when up-to-the-minute data is mission-critical in SEO, particularly about how Google and organic traffic react to changes on your website. If this sounds like something that might make a difference for you, check out the presentation of how to use this feature, for example in the context of a migration.

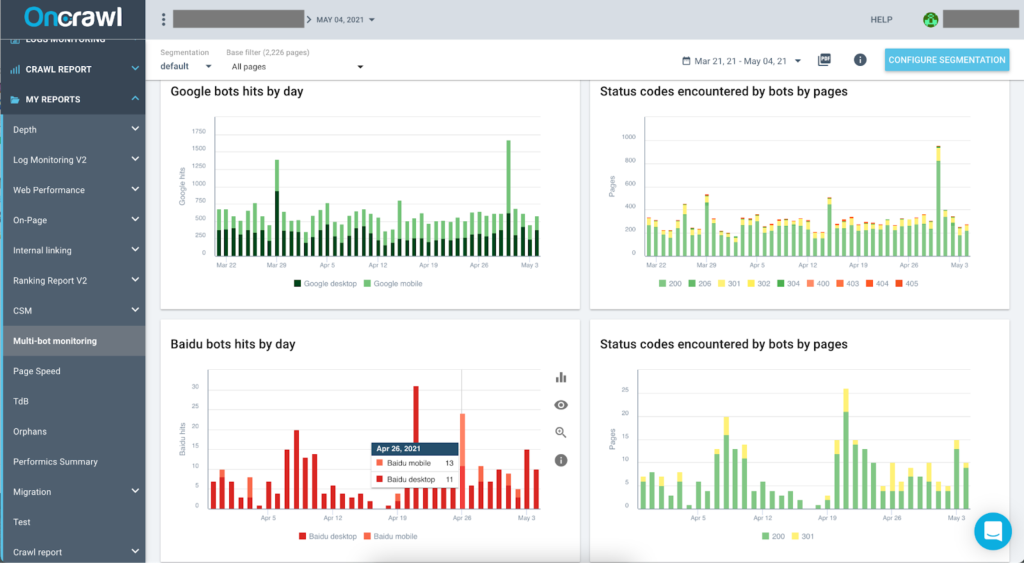

3. Extension of monitoring to the most used search engines

Log monitoring for SEO often focuses on Google: its robots, including the famous Googlebot, and the traffic it brings. But many sites owe an important part of their SEO traffic to other search engines:

- Bing: North American markets

- Yandex: Eastern European markets, Russia or Turkey

- Baidu: Asia Pacific markets, especially China

This is why Oncrawl also lets you monitor bots and SEO traffic from the four most popular search engines.

Depending on your needs, you can track them in the dashboards offered by default in Oncrawl, or even create your own reports.
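
To illustrate what telling these bots apart in raw logs involves, here is a minimal sketch that labels a request by the user-agent token each engine’s main crawler announces. It is an illustration only, not how Oncrawl identifies bots: the function names and the token table are assumptions made for this example, and because user-agent strings can be spoofed, a real pipeline would also verify the requesting IP address.

```python
from collections import Counter
from typing import Iterable, Optional

# Lowercased tokens announced by each engine's main crawler in its
# user-agent string. Illustrative table: other crawlers from the same
# engines (image bots, ad bots, etc.) are not covered here.
BOT_TOKENS = {
    "Google": "googlebot",
    "Bing": "bingbot",
    "Yandex": "yandexbot",
    "Baidu": "baiduspider",
}


def classify_bot(user_agent: str) -> Optional[str]:
    """Return the search engine name for a bot user agent, else None."""
    ua = user_agent.lower()
    for engine, token in BOT_TOKENS.items():
        if token in ua:
            return engine
    return None


def count_bot_hits(user_agents: Iterable[str]) -> Counter:
    """Count hits per search engine, ignoring non-bot user agents."""
    engines = (classify_bot(ua) for ua in user_agents)
    return Counter(engine for engine in engines if engine is not None)
```

Passing the user-agent field of each log line to `count_bot_hits` gives a per-engine hit count, which is the kind of breakdown a bot-activity report is built from.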

How to take advantage of the new alerting

To get the most out of your log monitoring, make sure you’ve enabled notifications for problems in your monitoring process.

Like cross-analysis and live monitoring, alerting is an integral part of Oncrawl’s log analysis offer. It is included in all log analysis plans.

To enable it, log monitoring must already be enabled for your project. From the project’s main page, click on Add data sources and make sure you’re looking at the Log files tab. Alerts can be enabled or disabled, and the threshold can be modified, in the Log file notifications section on this page.