A sitemap is more than a list of pages on a website. In XML format, it also serves as a multipurpose technical SEO tool.

Here are just a few of the things you can accomplish with a sitemap.

What is an XML sitemap?

An XML sitemap is a file, usually placed at the root of a website, that lists pages using the sitemaps.org protocol. This bot-readable protocol was developed to give webmasters a standardized way of listing the pages that search engines should index. A sitemap usually also contains additional data about each page.

This additional data is what makes the XML sitemap useful in so many situations. Like a Swiss army knife, sitemaps have tools to address a broad range of technical SEO issues related to crawling and indexing.

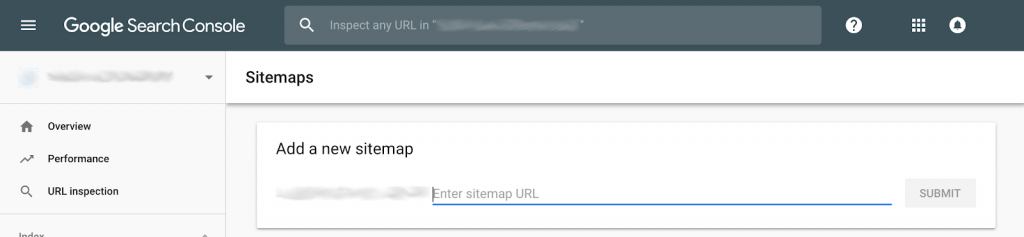
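To make the format concrete, here is a minimal sketch that builds a sitemaps.org-style file with Python's standard library. The function name `build_sitemap` and the example.com URLs are placeholders for illustration, not part of any particular tool.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return sitemap XML (as a string) listing each URL in a <url><loc> entry."""
    # Serialize the sitemap namespace as the default namespace (no prefix).
    ET.register_namespace("", SITEMAP_NS)
    urlset = ET.Element(f"{{{SITEMAP_NS}}}urlset")
    for page_url in urls:
        url = ET.SubElement(urlset, f"{{{SITEMAP_NS}}}url")
        # ElementTree escapes characters such as "&" in the URL text.
        ET.SubElement(url, f"{{{SITEMAP_NS}}}loc").text = page_url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    print(build_sitemap(["https://example.com/", "https://example.com/blog/"]))
```

The optional per-page data mentioned above would be added as further child elements of each `url` entry.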

Submit new URLs to Google

One of the primary, and most obvious, uses of a sitemap is to submit URLs to search engines. Google also allows you to submit a new sitemap when you have added new URLs to your website.

Submitting new URLs in a sitemap has, in fact, become the recommended means of announcing the presence of new pages to Google. This alerts Google to changes on your website “ahead of [Google’s] regular schedule”.

It’s worth noting, however, that although Google is made aware of new pages when you submit a new sitemap that contains them, Google is not required to crawl or index them immediately.

You can also use this method to announce changes to pages by using the `<lastmod>` tag in your sitemap to indicate the date of the update.

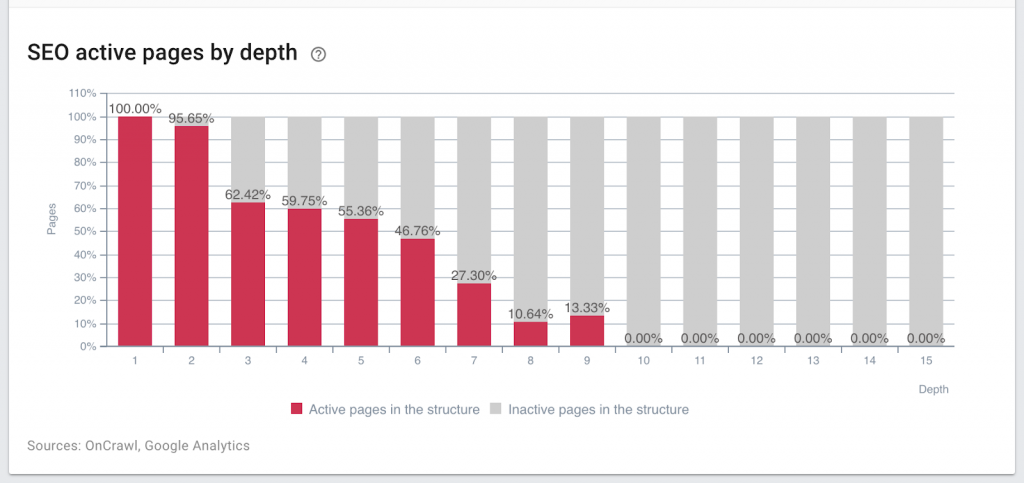
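As a rough sketch of how the update date could be maintained, the helper below sets the `lastmod` value for one page in an existing sitemap. The name `touch_lastmod` is hypothetical, and the sketch assumes the sitemap is small enough to parse in memory.

```python
import xml.etree.ElementTree as ET
from datetime import date

SM_URI = "http://www.sitemaps.org/schemas/sitemap/0.9"
NS = {"sm": SM_URI}


def touch_lastmod(sitemap_xml, page_url, changed_on):
    """Set <lastmod> for page_url to changed_on (a datetime.date) and return the XML."""
    root = ET.fromstring(sitemap_xml)
    for url in root.findall("sm:url", NS):
        if url.findtext("sm:loc", namespaces=NS) != page_url:
            continue
        lastmod = url.find("sm:lastmod", NS)
        if lastmod is None:
            # The page had no date yet: create the element.
            lastmod = ET.SubElement(url, f"{{{SM_URI}}}lastmod")
        # isoformat() yields YYYY-MM-DD, a date form the protocol accepts.
        lastmod.text = changed_on.isoformat()
    ET.register_namespace("", SM_URI)
    return ET.tostring(root, encoding="unicode")
```

Only the entry whose `loc` matches is touched, so dates on unchanged pages stay as they were.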

Mitigate the effects of deep page depth

Crawling is based on links between pages. Your site architecture is therefore important for both bots and users who navigate your site in order to find pages.

Pages that require a large number of clicks to reach from your home page or main landing pages are considered to be located deep in your site architecture. This can have multiple consequences:

- Depth affects ranking through a lower popularity score used in the PageRank algorithm.

- Depth influences the time it takes to get pages crawled.

- Depth can prevent users from visiting pages, or reduce the frequency of user visits.

Influence of depth on activity: the deeper a page is located in the site structure, the less likely that it will receive organic visits. (Source: Oncrawl)

While sitemaps can’t address the “link juice”, popularity, and human visitor issues, you can use a sitemap to provide URLs of pages that are located deep in your site to bots.

Like a Swiss army knife, sitemaps might not be the ideal tool for this job. But when you don’t have the option of reworking your internal linking strategy right away, using XML sitemaps is a quick and dirty way of helping Googlebot find, crawl, and index URLs with excessive page depth.
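One way to pick the URLs worth listing is to compute each page's click depth from the home page and keep the deep ones. The sketch below assumes you already have an internal link graph (for example from a crawl export) as a dictionary. The function names and the depth threshold of 3 are illustrative assumptions, not a recommended value.

```python
from collections import deque


def page_depths(links, home):
    """Breadth-first search: minimum number of clicks from home to each reachable page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def deep_pages(links, home, max_depth=3):
    """Return the pages located deeper than max_depth, sorted for stable output."""
    depths = page_depths(links, home)
    return sorted(url for url, depth in depths.items() if depth > max_depth)


if __name__ == "__main__":
    graph = {"/": ["/a"], "/a": ["/b"], "/b": ["/c"], "/c": ["/d"]}
    print(deep_pages(graph, "/"))
```

Pages that are not reachable at all never appear in the result, since they are orphans rather than deep pages.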

Speed up de-indexing multiple URLs from Google

Usually the fastest way to remove a single page from Google’s index is to use the URL removal tool. However, this can be tedious if you have a large number of URLs to process.

Because sitemaps are a great way to keep Google up to date on the state of your URLs, you can also use them to indicate which URLs should be de-indexed.

Pages that you want removed from Google’s index should appear in your XML sitemap:

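- As “noindex”. The sitemap protocol has no noindex field, so the directive is declared on the page itself, in a robots meta tag or an X-Robots-Tag HTTP header. This indicates the change in indexing status that you’d like Google to take into account.

- With a `<lastmod>` tag indicating the date of the change, as recommended by John Mueller.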
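Before listing a URL for removal, it can help to confirm that the page really carries the directive. The sketch below assumes noindex is declared in a robots meta tag in the page HTML (it does not inspect HTTP headers), and the names `RobotsMetaParser` and `has_noindex` are hypothetical.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Records whether a robots (or googlebot) meta tag contains noindex."""

    def __init__(self):
        super().__init__()
        self.noindex = False

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() not in ("robots", "googlebot"):
            return
        # The content attribute is a comma-separated list of directives.
        directives = [d.strip().lower() for d in attr.get("content", "").split(",")]
        if "noindex" in directives:
            self.noindex = True


def has_noindex(html):
    """Return True if the HTML declares noindex in a robots meta tag."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return parser.noindex
```

URLs that pass this check can then be listed in the sitemap with the date of the change.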

Search for orphan pages

Orphan pages are pages that have become unlinked from your main site structure. Because of this, their SEO performance is limited. Furthermore, when external links draw traffic to these pages, the rest of the site doesn’t profit. These pages should be removed or linked to from pages within your site structure.

The key to managing orphan pages is finding them. One of the best ways to look for orphan pages is to compare the URLs in your sitemap to the URLs that can be found by crawling your site. When crawl comparison with sitemaps is enabled in Oncrawl, all information about orphans in sitemaps is included in standard crawl results.

Orphan pages discovered in sitemaps. (Source: Oncrawl)
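Outside of a dedicated tool, the comparison itself is a set difference. A minimal sketch, assuming you have the sitemap XML and a list of URLs found by crawling (both function names are placeholders):

```python
import xml.etree.ElementTree as ET

NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(sitemap_xml):
    """Extract the set of <loc> values from a sitemap."""
    root = ET.fromstring(sitemap_xml)
    return {loc.text.strip() for loc in root.findall("sm:url/sm:loc", NS) if loc.text}


def find_orphans(sitemap_xml, crawled_urls):
    """URLs listed in the sitemap that the crawl never reached through links."""
    return sorted(sitemap_urls(sitemap_xml) - set(crawled_urls))
```

This only works if both sources use the same URL form, so normalize trailing slashes, protocol, and host before comparing.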

Manage duplicate content

Once you have identified duplicate content, the best ways to resolve it are to differentiate the content or to use canonical declarations to indicate to search engines which of the duplicate pages should be indexed.

The URLs you want to use as canonical URLs can be included in your XML sitemaps: URLs in sitemaps are considered to be suggestions for canonical URLs. When Google needs to choose a canonical URL on its own for a group of URLs with similar content, it will use the URL that appears in your sitemap as a primary candidate. If you’re having problems with Google ignoring your canonical URLs, this can also be a method to reinforce those declarations.

The corollary to this trick is that it’s not a good idea to put non-canonical URLs in a sitemap. In fact, Google advises against it.
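A simple pre-publication check can keep non-canonical URLs out of the file. The sketch below assumes you have a mapping from each page URL to the canonical URL it declares (for example from crawl data). The function name is hypothetical, and treating pages without a declaration as self-canonical is an assumption.

```python
def split_by_canonical(canonicals):
    """Split URLs into (keep, drop) lists for the sitemap.

    canonicals maps each page URL to its declared canonical URL,
    or to None when the page declares nothing.
    """
    keep, drop = [], []
    for url, canonical in sorted(canonicals.items()):
        # Assumption: a page with no canonical declaration points to itself.
        if canonical is None or canonical == url:
            keep.append(url)
        else:
            drop.append(url)
    return keep, drop
```

Only the `keep` list should go into the sitemap. The `drop` list is worth reviewing, since each entry points to a different URL as its canonical.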

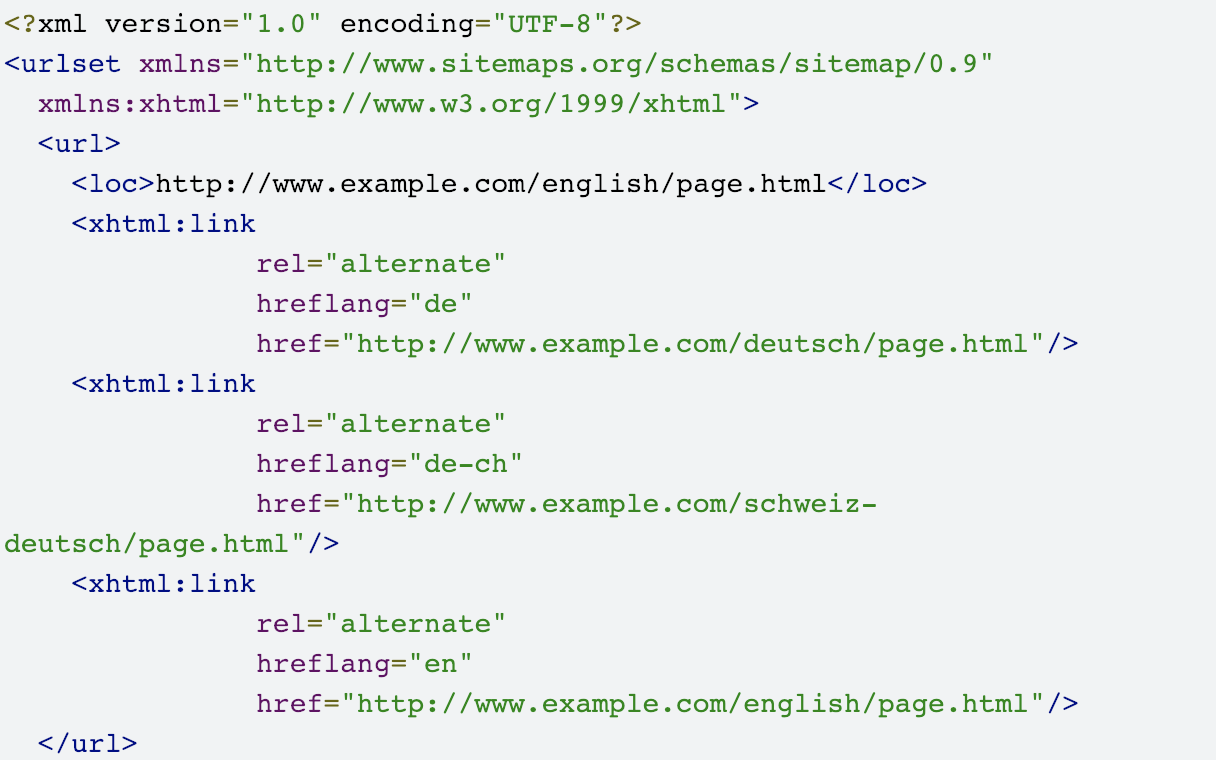

Establish international content

XML sitemaps support hreflang declarations.

There are a number of reasons that you might prefer to declare hreflangs in sitemaps rather than on individual pages. These reasons might include reducing code on pages by keeping it out of page headers, or dealing with a large and frequently changing site.

The best practices for hreflang declarations remain the same:

- Respect the obligatory language and optional region codes

- List all translations of a page, including the page itself

- Don’t direct all pages to the home page

- Use the x-default code for pages that allow users to choose their language or region

If you use hreflang declarations in sitemaps, avoid also using hreflang declarations in page headers or in page HTML.

Example of hreflang declarations in sitemaps (Source: Google)

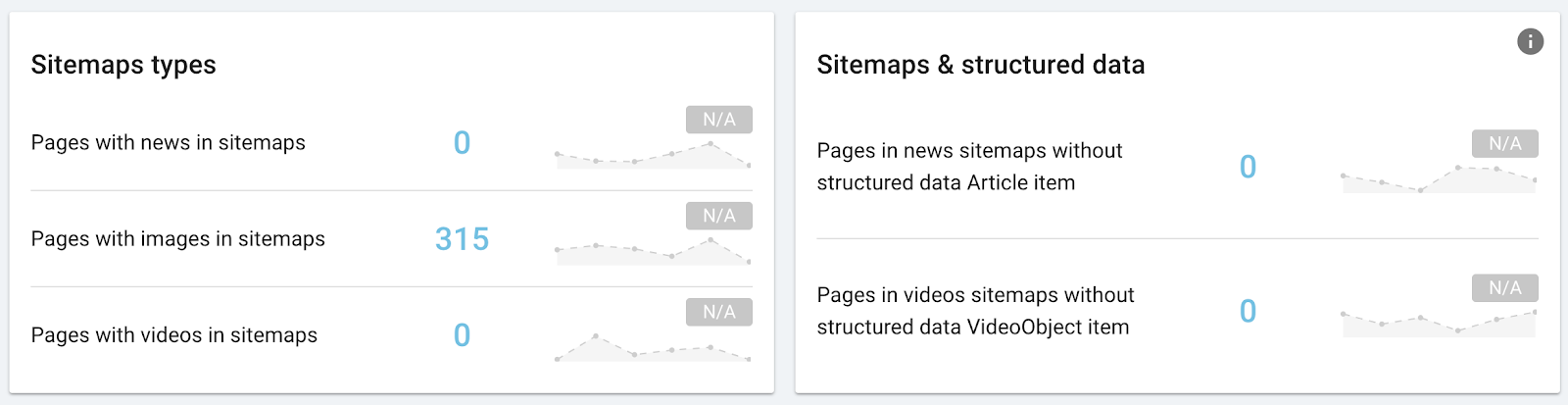

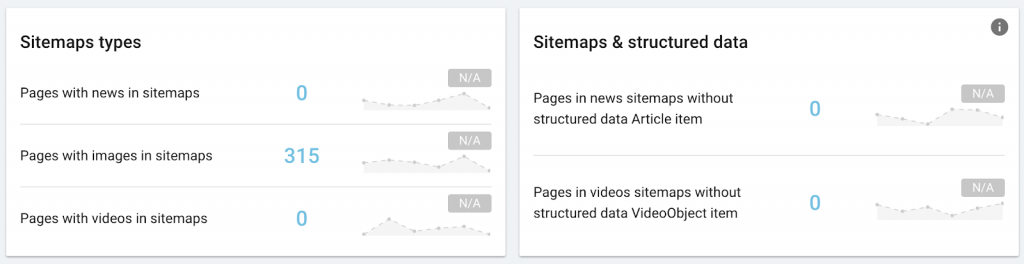
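For large sites, these entries are usually generated rather than written by hand. The following sketch writes one `url` entry per translation, each listing every alternate including itself, as the best practices above require. The function name and the input shape (one dictionary per group of translations, keyed by hreflang code) are assumptions for illustration.

```python
import xml.etree.ElementTree as ET

SM = "http://www.sitemaps.org/schemas/sitemap/0.9"
XHTML = "http://www.w3.org/1999/xhtml"


def hreflang_sitemap(translation_groups):
    """Build a sitemap where every URL lists all alternates of its group."""
    ET.register_namespace("", SM)
    ET.register_namespace("xhtml", XHTML)
    urlset = ET.Element(f"{{{SM}}}urlset")
    for group in translation_groups:
        # x-default may reuse a language URL, so de-duplicate while keeping order.
        for page_url in dict.fromkeys(group.values()):
            url = ET.SubElement(urlset, f"{{{SM}}}url")
            ET.SubElement(url, f"{{{SM}}}loc").text = page_url
            for code, alternate_url in group.items():
                ET.SubElement(
                    url,
                    f"{{{XHTML}}}link",
                    {"rel": "alternate", "hreflang": code, "href": alternate_url},
                )
    return ET.tostring(urlset, encoding="unicode")


if __name__ == "__main__":
    group = {
        "en": "https://example.com/en/",
        "fr": "https://example.com/fr/",
        "x-default": "https://example.com/en/",
    }
    print(hreflang_sitemap([group]))
```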

Present content to Google as Images, Videos and News

Content that you would like to have picked up by Google Images, Google Videos or Google News can be indicated in sitemaps. While this does not help you earn better rankings, it helps your content get discovered faster.

Google recommends also using the corresponding schema.org markup (ImageObject, VideoObject or NewsArticle) on the page, in addition to including images, videos, and news articles in XML sitemaps.

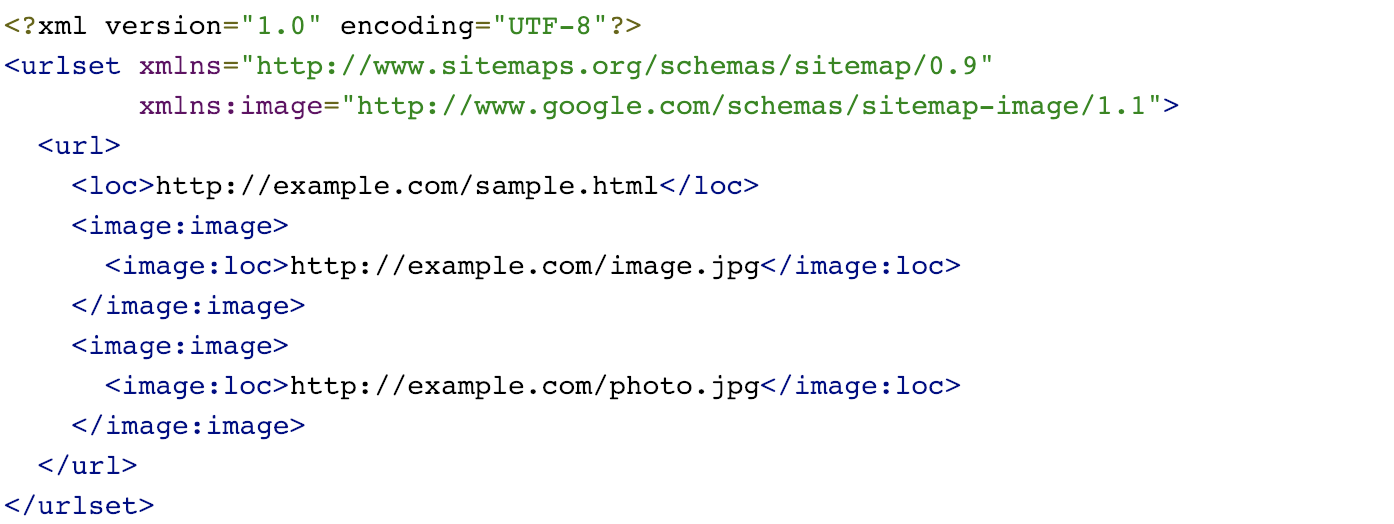

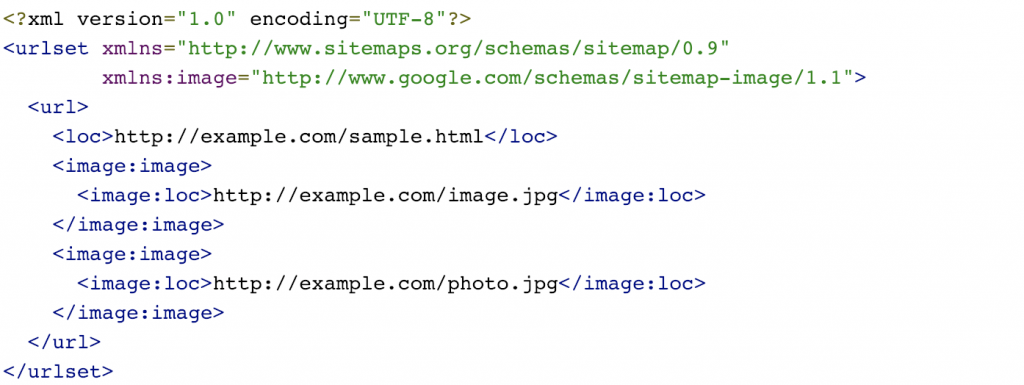

Google Images

Using an image sitemap makes it more likely that your images will be included in Google’s Image Search results.

Image sitemaps list image resources on your website and can contain additional information, such as a caption, geographic location, title, and license information.

Example of image markup for sitemaps (Source: Google)

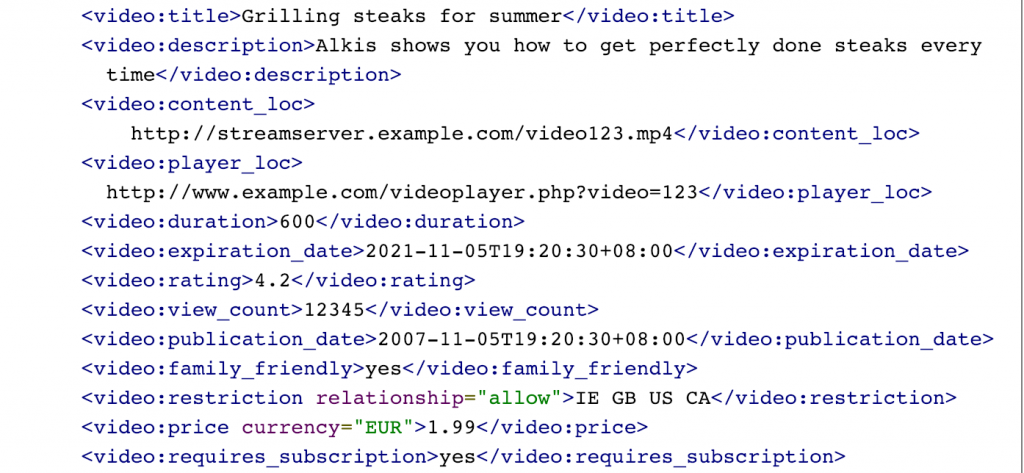
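A minimal sketch of generating such a file follows. It emits only the image location for each image and leaves out the optional fields listed above. The function name and input shape are assumptions, and you should check Google's current documentation for which optional tags are still read.

```python
import xml.etree.ElementTree as ET

SM = "http://www.sitemaps.org/schemas/sitemap/0.9"
IMAGE = "http://www.google.com/schemas/sitemap-image/1.1"


def image_sitemap(pages):
    """pages maps a page URL to the list of image URLs shown on that page."""
    ET.register_namespace("", SM)
    ET.register_namespace("image", IMAGE)
    urlset = ET.Element(f"{{{SM}}}urlset")
    for page_url, image_urls in pages.items():
        url = ET.SubElement(urlset, f"{{{SM}}}url")
        ET.SubElement(url, f"{{{SM}}}loc").text = page_url
        for image_url in image_urls:
            # One <image:image> block per image, nested in the page entry.
            image = ET.SubElement(url, f"{{{IMAGE}}}image")
            ET.SubElement(image, f"{{{IMAGE}}}loc").text = image_url
    return ET.tostring(urlset, encoding="unicode")
```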

Google Videos

Video sitemaps make it easier for Google to find your video content and provide additional information that Google’s bots can’t read in video format. This additional information includes content such as description, duration, player location, rating, view count, location restrictions, price, and more.

This is a Google-specific format. You can find full information about the video sitemap format in Google’s help center.

Example of additional data for videos in sitemaps (Source: Google)

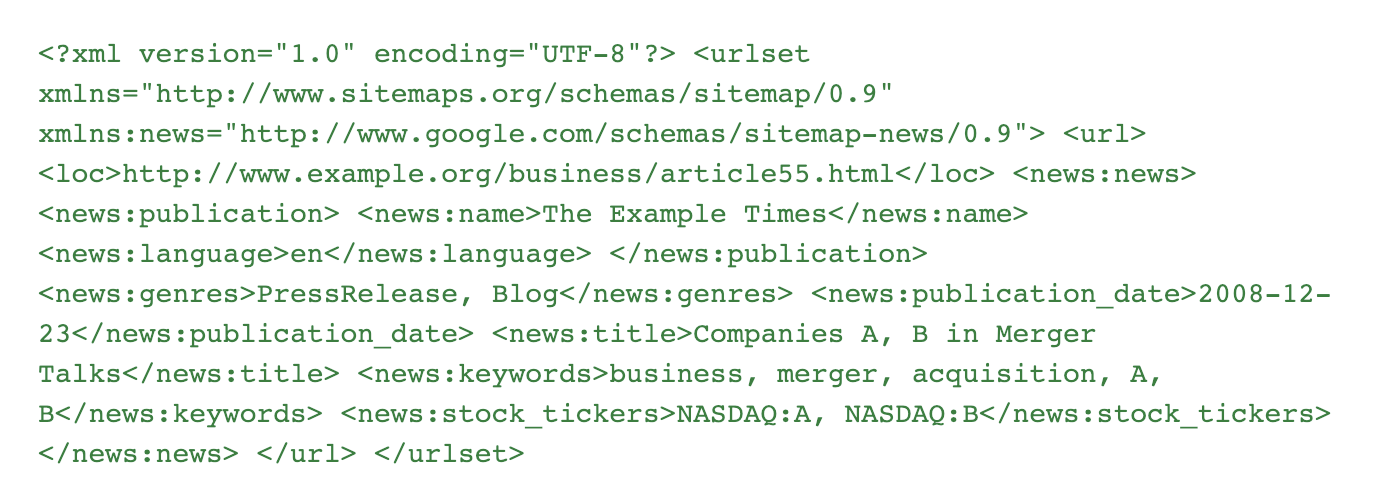

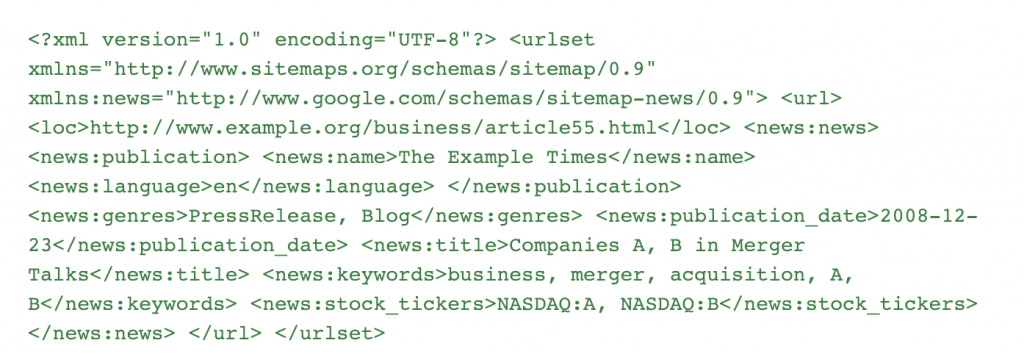
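As a sketch of how this data could be generated, the function below writes a video entry with a thumbnail, title, description, and content location, plus an optional duration in seconds. The function name and the dictionary keys are illustrative assumptions. Other fields from the list above would be added the same way.

```python
import xml.etree.ElementTree as ET

SM = "http://www.sitemaps.org/schemas/sitemap/0.9"
VIDEO = "http://www.google.com/schemas/sitemap-video/1.1"


def video_sitemap(videos):
    """videos is a list of dicts with page_url, thumbnail_loc, title,
    description, content_loc and, optionally, duration (seconds)."""
    ET.register_namespace("", SM)
    ET.register_namespace("video", VIDEO)
    urlset = ET.Element(f"{{{SM}}}urlset")
    for item in videos:
        url = ET.SubElement(urlset, f"{{{SM}}}url")
        ET.SubElement(url, f"{{{SM}}}loc").text = item["page_url"]
        video = ET.SubElement(url, f"{{{VIDEO}}}video")
        for tag in ("thumbnail_loc", "title", "description", "content_loc"):
            ET.SubElement(video, f"{{{VIDEO}}}{tag}").text = item[tag]
        if "duration" in item:
            ET.SubElement(video, f"{{{VIDEO}}}duration").text = str(item["duration"])
    return ET.tostring(urlset, encoding="unicode")
```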

Google News

To use a Google News sitemap, your site needs to be included in Google News.

News sitemaps follow standard sitemap protocol and include tags for URLs that represent news articles.

You can find full information about the news format in Google’s help center.

Example of Google News markup for sitemaps (Source: Google)
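The news-specific tags can be generated in the same way as any other sitemap entry. In this sketch, each article gets a publication name and language, a publication date, and a title. The function name and dictionary keys are hypothetical, and the values shown in the usage example are placeholders.

```python
import xml.etree.ElementTree as ET

SM = "http://www.sitemaps.org/schemas/sitemap/0.9"
NEWS = "http://www.google.com/schemas/sitemap-news/0.9"


def news_sitemap(articles):
    """articles is a list of dicts with url, publication, language,
    published (W3C date string) and title."""
    ET.register_namespace("", SM)
    ET.register_namespace("news", NEWS)
    urlset = ET.Element(f"{{{SM}}}urlset")
    for article in articles:
        url = ET.SubElement(urlset, f"{{{SM}}}url")
        ET.SubElement(url, f"{{{SM}}}loc").text = article["url"]
        news = ET.SubElement(url, f"{{{NEWS}}}news")
        publication = ET.SubElement(news, f"{{{NEWS}}}publication")
        ET.SubElement(publication, f"{{{NEWS}}}name").text = article["publication"]
        ET.SubElement(publication, f"{{{NEWS}}}language").text = article["language"]
        ET.SubElement(news, f"{{{NEWS}}}publication_date").text = article["published"]
        ET.SubElement(news, f"{{{NEWS}}}title").text = article["title"]
    return ET.tostring(urlset, encoding="unicode")


if __name__ == "__main__":
    print(news_sitemap([{
        "url": "https://example.com/news/launch",
        "publication": "Example Times",
        "language": "en",
        "published": "2024-05-17",
        "title": "Example launch announced",
    }]))
```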

Optimize for multiple search engines

Finally, XML sitemaps are supported by multiple search engines, not just by Google. If you have a growing number of visitors from other search engines, using XML sitemaps will help optimize the information you provide to those search engines about the pages in your site.

XML sitemaps are supported by major search engines such as Google, Yandex, Yahoo!, Bing, and Baidu.