Win top visibility in organic channels, page by page or at scale

Your technical SEO strategy shapes how search engines discover, interpret, classify, and present a website in order to attract your audience. Oncrawl’s crawler allows you to collect and analyze thousands of metrics across your site, providing the information you need to increase visibility, traffic, and revenue.

A flexible and scalable source of information about your website

A multi-level crawl strategy that serves your business

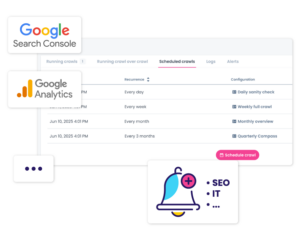

Crawl what you need, when you need it: Oncrawl is built to manage multiple projects, processes, and workflows, freeing you to focus on other important tasks.

– Define the scope, frequency, and notification options for each type of analysis.

– Solve crawl issues faced by other crawlers regarding rendering, speed, site size, access, or complexity.

– Cover use cases ranging from audits and search engine simulation to crawlability and indexability troubleshooting and custom monitoring.

Drive better decision-making based on the how and the why

Your website and your brand are unique. Your digital strategy should reflect how search engines and search users really interact with your digital content, and how that helps you reach your business goals.

– Identify how your site’s content, performance, and structure work together, and use those relationships to prioritize the projects that can have the most impact.

– Remove biases and generalizations: focus on the site sections that are most viewed or highest converting.

– Keep insights relevant by putting them in context with data from other sources: in-house data lakes; web performance, cost, and revenue information; and search performance data from connectors to sources like GA4 or Google Search Console.

With Oncrawl, stay a step ahead

– View your site the way search and answer engines do.

– See how website projects and changes impact the overall health of your site.

– Determine which technical factors are the weakest on your site.

– Incorporate new metrics as search and answer engines evolve.