The Oncrawl interface is designed to be user-friendly, with many relevant charts and visualizations. However, when your data analysis needs become more sophisticated, it is essential to be able to query Oncrawl in a more customized way.

Oncrawl filters, whether Quick or Custom (that is, pre-written or personalized data filters), are the solution for customized, complex analysis of the large amounts of data coming from your projects.

If you have already mastered the Data Explorer and want to go straight to our 17 Custom Filters, skip to the “17 Custom Filters to know” section below.

Oncrawl is a powerful system that compiles and creates a set of SEO metrics. Above all, though, our data platform is built on an API that lets you query all of your project’s compiled data through the Data Explorer.

How does the Data Explorer work?

The Data Explorer is the interface to favor for complex analysis because it lets you browse all your data using OQL (Oncrawl Query Language), our query language.

The “Data Explorer” is available in the “Tools” section at the bottom of the app menu.

In the Data Explorer, you will find “Quick Filters”: filters pre-filled by our team of SEO experts.

They are a good first step toward creating your own filters: the Custom Filters (or Own Filters) that we cover in this article.

What are Quick Filters used for?

The “Quick Filters” have been created to help you access some important SEO metrics.

In the “Quick Filter” field, you already have access to important filters that help you quickly detect SEO quick wins, such as links pointing to 404, 500 or 301/302 pages, and pages that are too slow or too thin.

Here is the complete list:

- 404 errors

- 5xx errors

- Active pages

- Active pages not crawled by Google

- Active pages with status code encountered by Google different than 200

- Canonical not matching

- Canonical not set

- Indexable pages

- No indexable pages

- Orphan active pages

- Orphan pages

- Pages crawled by Google

- Pages crawled by Google and Oncrawl

- Pages in the structure not crawled by Google

- Pages pointing to 3xx errors

- Pages pointing to 4xx errors

- Pages pointing to 5xx errors

- Pages with bad h1

- Pages with bad h2

- Pages with bad metadescription

- Pages with bad title

- Pages with HTML duplication issues

- Pages with less than 10 inlinks

- Redirect 3xx

- Too Heavy Pages

- Too Slow Pages

However, these “Quick Filters”, which are designed to be generic, may not be detailed enough to be relevant for your project. This can also be the case when you want to analyze a precise set of URLs that meet specific conditions: a page group from your segmentation, a particular type of URL, or even a set of pages that receive no Google or SEO visits.

When you choose one of the Quick Filters, the section below it changes to display the filter configuration. The data table below the configuration is also updated. This is when you can start interpreting the data.

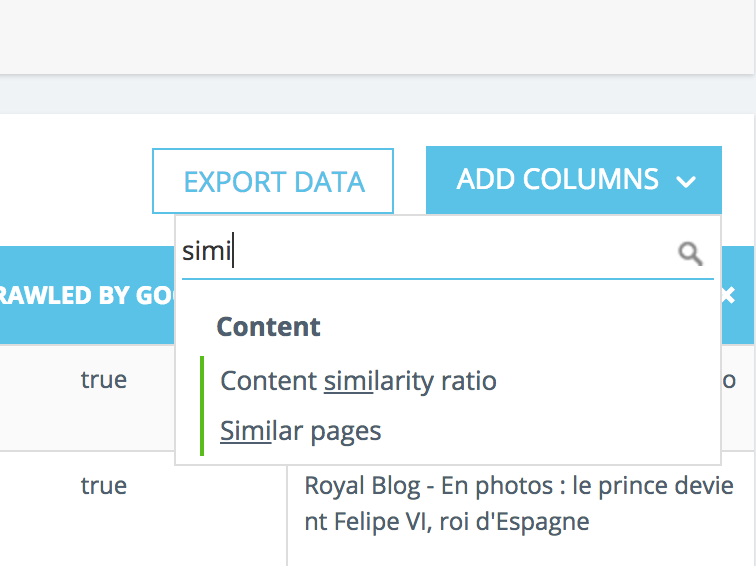

PRO TIP: We advise you to expand your data table by adding columns in accordance with your current analysis.

As a reminder, all the data is available for all URLs; it is up to you to choose your metrics. Once configured, this table can be exported as a CSV file.

Custom Filters are improved Quick Filters

Our “Quick Filters” are powerful, but if you want to go further in the query process and save your own filters, this is where you need Custom Filters, also called Own Filters.

You might have noticed the “Name” field. It lets you name your personalized filter so that you can find it easily at the bottom of the “Quick Filters” list. But first of all, let’s see how filters work.

How do filters work?

A large amount of data is available, and the filter options add a great deal of flexibility.

A filter is composed of three parts:

- The Field: the data being requested

- The Operator: the comparison used to filter the field

- The Value: the value the operator compares the data against
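To make the three parts concrete, here is a minimal Python sketch of a filter as a field/operator/value triple applied to a page. It is only an illustration of the idea, not Oncrawl’s implementation: the `Filter` class, the operator names and the sample pages are all assumptions made for this example.

```python
from dataclasses import dataclass
from operator import eq, gt, lt, ne
from typing import Any, Callable

# Hypothetical operator names; the Data Explorer offers its own list.
OPERATORS: dict[str, Callable[[Any, Any], bool]] = {
    "is": eq,
    "is not": ne,
    "greater than": gt,
    "less than": lt,
    "contains": lambda data, value: value in data,
}


@dataclass(frozen=True)
class Filter:
    field: str     # the data being requested
    operator: str  # the comparison applied to the field
    value: Any     # what the field is compared against

    def matches(self, page: dict[str, Any]) -> bool:
        """Return True when the page's field satisfies the comparison."""
        return OPERATORS[self.operator](page[self.field], self.value)


# Made-up pages standing in for crawl data.
pages = [
    {"url": "/a", "status_code": 200, "title": "Red shoes"},
    {"url": "/b", "status_code": 404, "title": "Not found"},
]

not_found = Filter("status_code", "is", 404)
matching_urls = [page["url"] for page in pages if not_found.matches(page)]
print(matching_urls)
```

Swapping the field, operator or value changes the selection without touching the rest of the code, which is the same flexibility the filter lines give you in the interface.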

NB: string fields offer two extra options: making the match case-sensitive or using regular expressions in the filter. We will not cover RegEx in this article.

You may have noticed that filter lines can be combined in two ways: AND and OR. This makes it possible to build inclusive or exclusive sets in your data query.

By default, the logical operator between filters is AND, but you can also use OR, which lets you combine URLs from different sets. It is up to you to judge which type of combination is most relevant for your data.

This applies between every line of the filter, but you have probably also noticed that lines can be grouped into blocks. AND and OR become particularly useful in this context.
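The way lines and blocks combine can be sketched as a small recursive evaluation. This is a simplified model written for this article, not the OQL syntax itself: the nested dictionary layout, the `evaluate` function and the field names are assumptions, and each line only tests equality.

```python
from typing import Any

Page = dict[str, Any]


def evaluate(node: Any, page: Page) -> bool:
    """Evaluate a filter line or a block of lines against one page."""
    if isinstance(node, tuple):
        # A single line: (field, expected value), equality only in this sketch.
        field, expected = node
        return page[field] == expected
    # A block: {"logic": "AND" or "OR", "lines": [lines or nested blocks]}.
    results = [evaluate(child, page) for child in node["lines"]]
    if node["logic"] == "AND":
        return all(results)
    if node["logic"] == "OR":
        return any(results)
    raise ValueError(f"Unknown logic: {node['logic']!r}")


# HTML pages AND (status code 404 OR status code 500).
block = {
    "logic": "AND",
    "lines": [
        ("is_html", True),
        {"logic": "OR", "lines": [("status_code", 404), ("status_code", 500)]},
    ],
}

# Made-up pages standing in for crawl data.
pages = [
    {"url": "/a", "is_html": True, "status_code": 404},
    {"url": "/b", "is_html": True, "status_code": 200},
    {"url": "/c", "is_html": False, "status_code": 500},
    {"url": "/d", "is_html": True, "status_code": 500},
]

selected = [page["url"] for page in pages if evaluate(block, page)]
print(selected)
```

The inner OR block widens the selection to two status codes, while the outer AND still requires every selected page to be HTML.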

How to create a Custom Filter from scratch?

Let’s take a concrete example: select all duplicated pages that are crawled by Google and whose title includes a particular expression.

This means creating a filter in 3 steps:

- “Pages with duplicate content issues” is true

- “Crawled by Google” is true

- “Title” contains [my expression]

This simple example shows how data can be filtered. You should already be excited about cross-referencing metrics!
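For readers who prefer code, the three steps above can be sketched in Python as three lines joined by AND. The field names (`duplicate_content_issues`, `crawled_by_google`, `title`), the expression “sale” and the sample pages are hypothetical and chosen only for this illustration.

```python
from typing import Any


def line_matches(page: dict[str, Any], field: str, operator: str, value: Any) -> bool:
    """Apply one filter line to a page; only two operators are modeled here."""
    data = page[field]
    if operator == "is":
        return data == value
    if operator == "contains":
        return value in data
    raise ValueError(f"Unsupported operator: {operator!r}")


# The three steps of the example, combined with AND.
custom_filter = [
    ("duplicate_content_issues", "is", True),
    ("crawled_by_google", "is", True),
    ("title", "contains", "sale"),  # "sale" stands in for [my expression]
]

# Made-up pages standing in for crawl data.
pages = [
    {"url": "/summer-sale", "duplicate_content_issues": True,
     "crawled_by_google": True, "title": "Summer sale"},
    {"url": "/winter-sale", "duplicate_content_issues": True,
     "crawled_by_google": False, "title": "Winter sale"},
    {"url": "/about", "duplicate_content_issues": False,
     "crawled_by_google": True, "title": "About us"},
]

selected = [
    page["url"]
    for page in pages
    if all(line_matches(page, *line) for line in custom_filter)
]
print(selected)
```

Only the page that satisfies all three lines is kept, which mirrors the default AND behavior between filter lines.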

How to create Custom Filters faster?

You can start from one of the Quick Filters to create your “Own Filter”: add conditions to the filter, then save it so you can quickly find it again on your next visit.

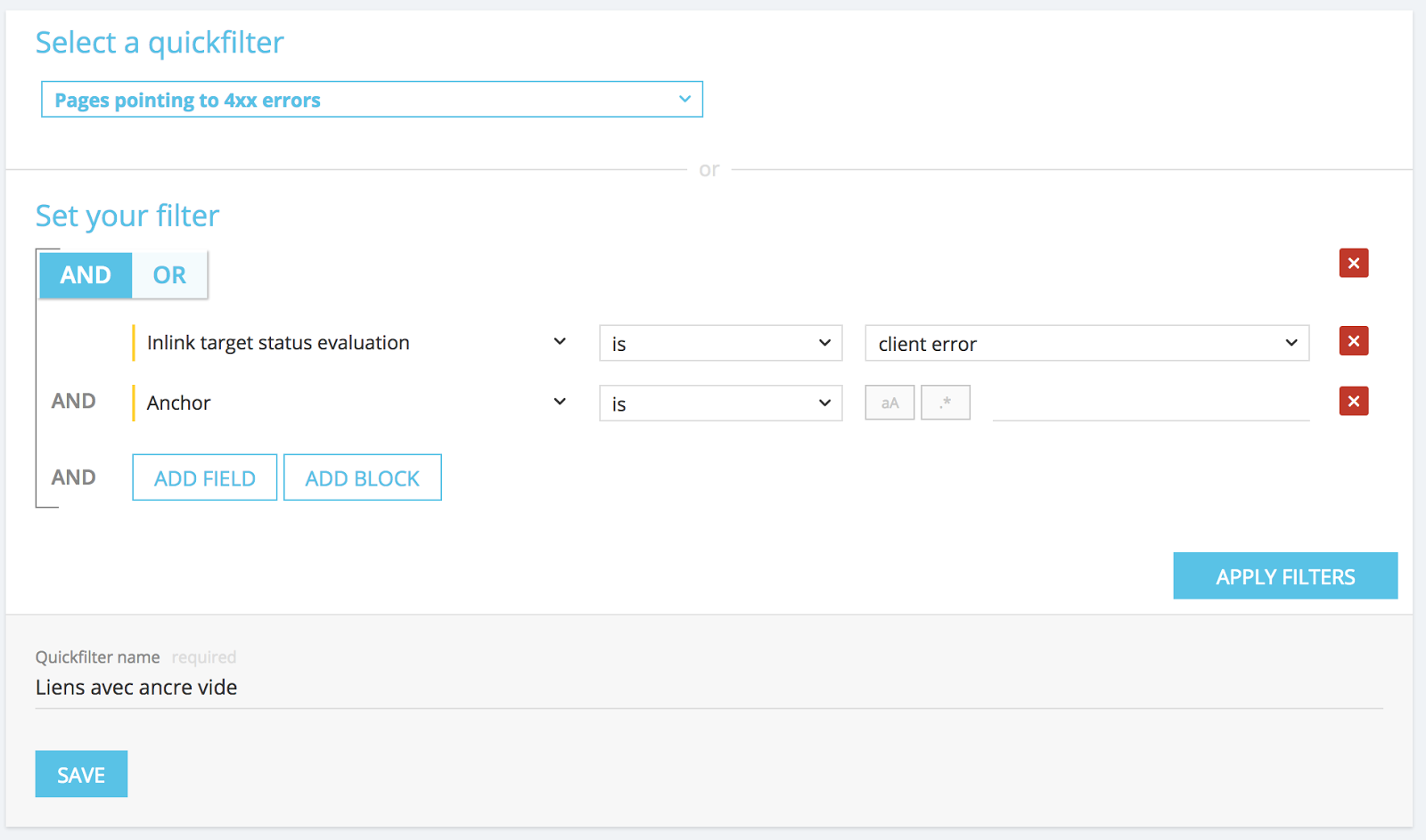

For instance, starting from links pointing to a 4xx page, you can choose to filter the links with an empty anchor: “Anchor” / “is” / “”. Then name this filter in the “Quick Filter Name” block that follows and click “Save”, as in the screenshot below.

Once saved, it can be modified freely and re-saved under the same name, if needed.

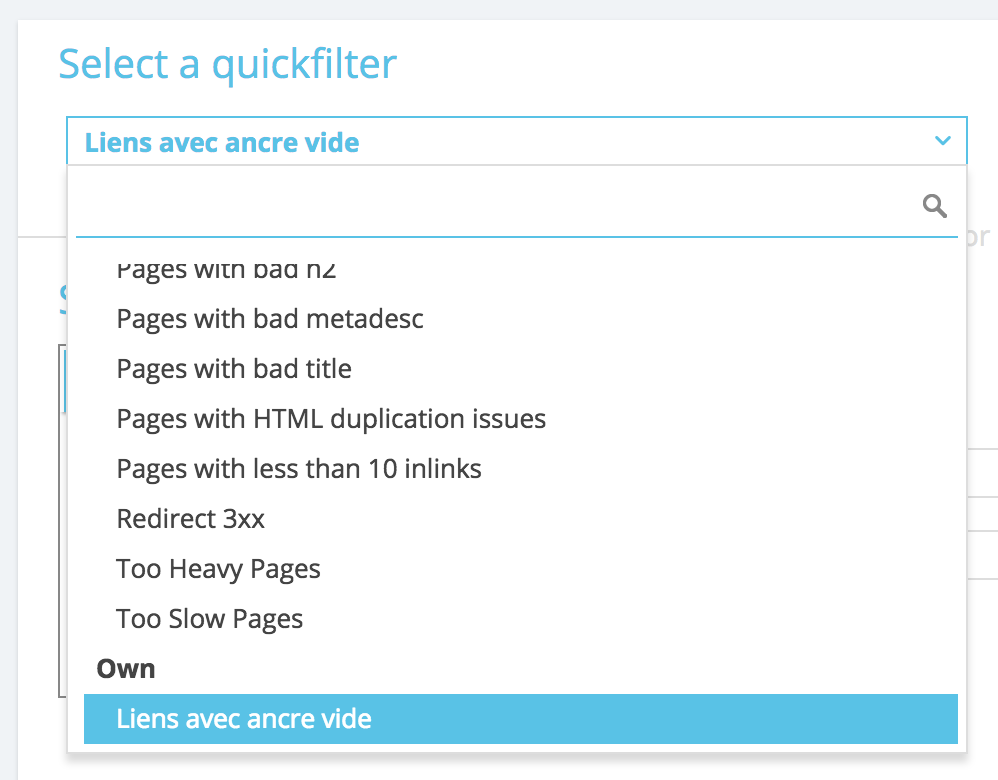

You now have access to this particular “Quick Filter” directly in the “Select a Quick Filter” list, at the bottom of the “Own” section, as in the screenshot below.

17 Custom Filters to know

After all these explanations, you might want to know the 17 Custom Filters that our power users appreciate most. The suspense is over: here they are.

They are split into two groups: filters for users with Basic projects and filters for users with Advanced projects (with log analysis and cross-data reports).

Basic Custom Filters

Checking Important Indexability

- Pages which can’t be indexed or crawled.

It is important to verify that none of your important pages are in this list.

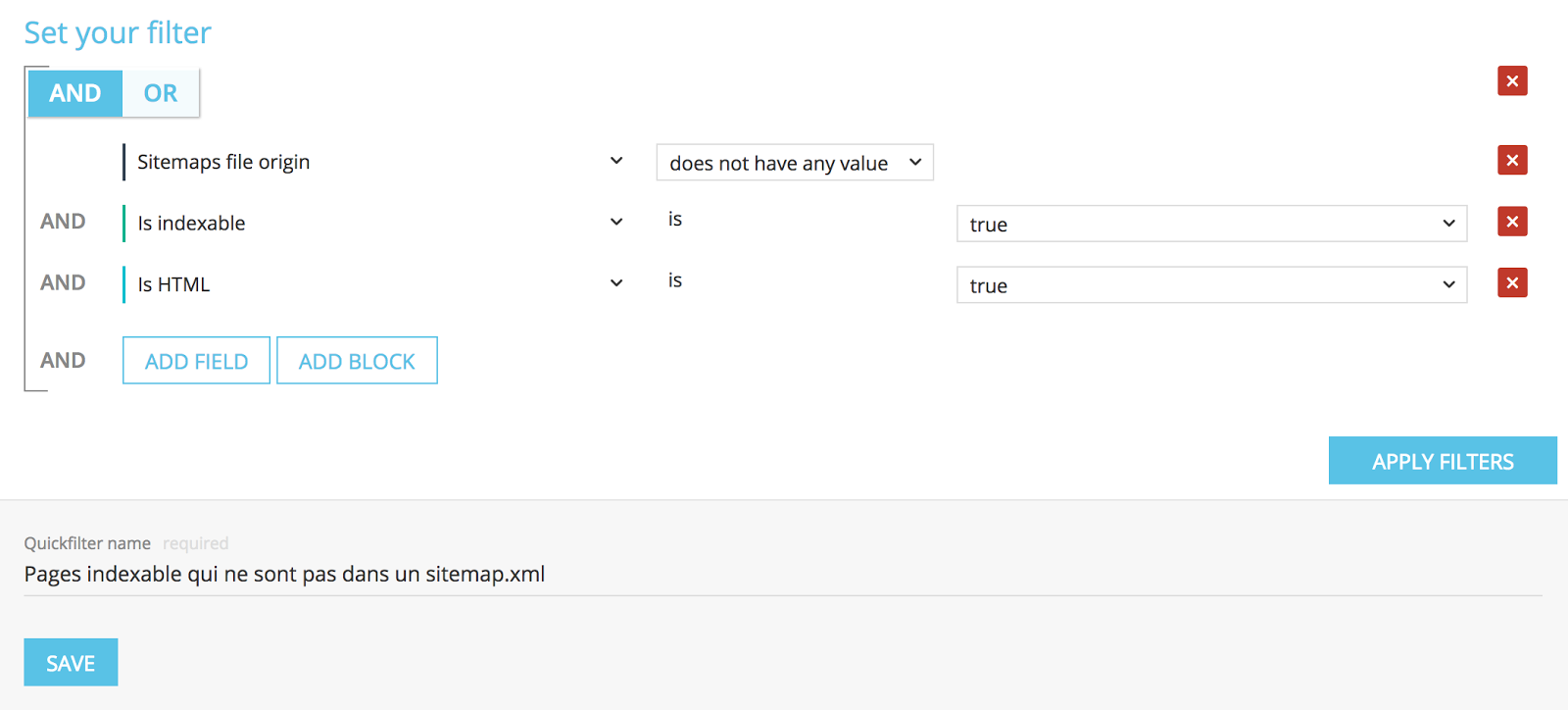

- Indexable pages which are not in a sitemap.xml.

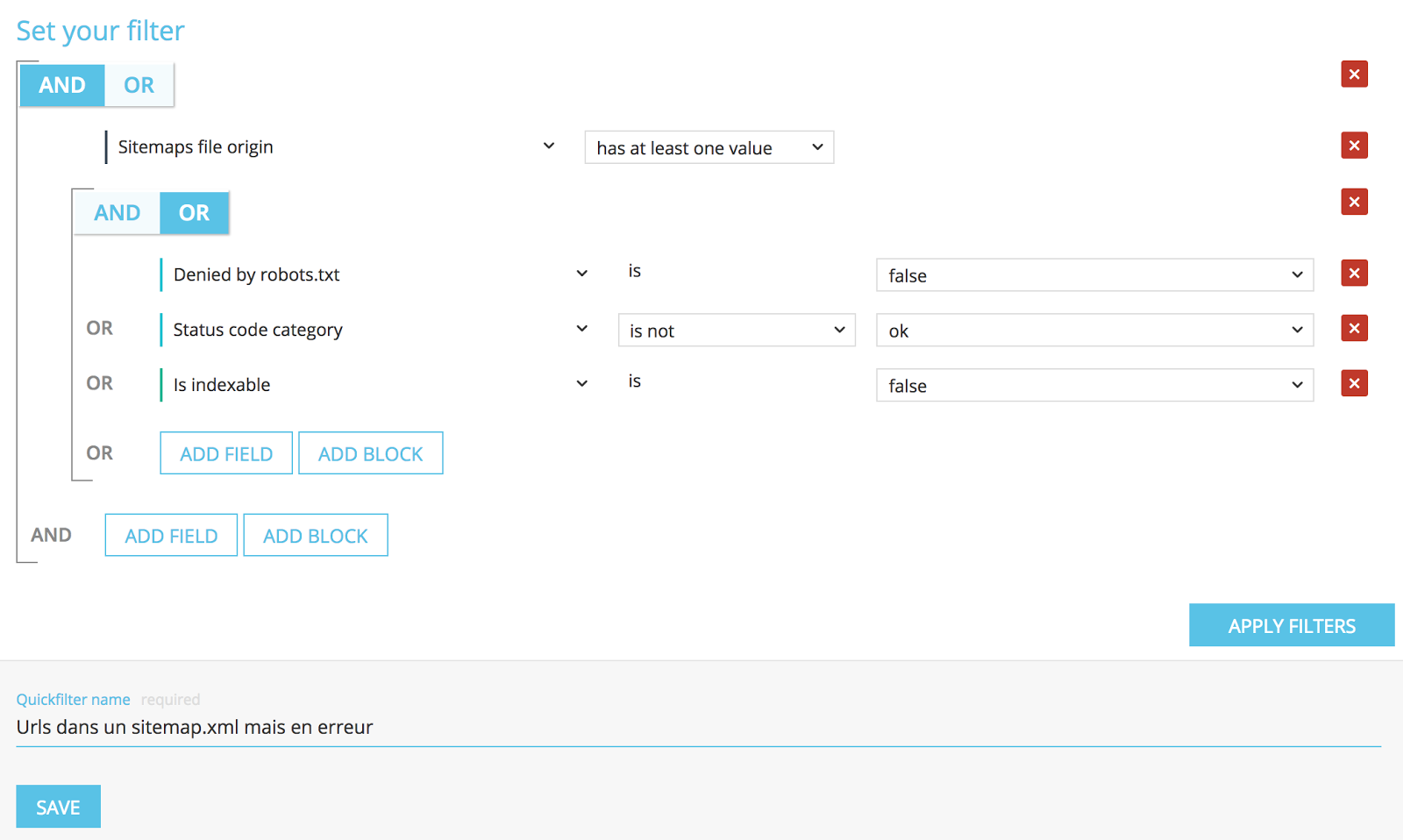

- Pages in a sitemap.xml but pointing towards error pages.

Poor Content, Duplicates and Canonicals: Objective, counteract Google Panda

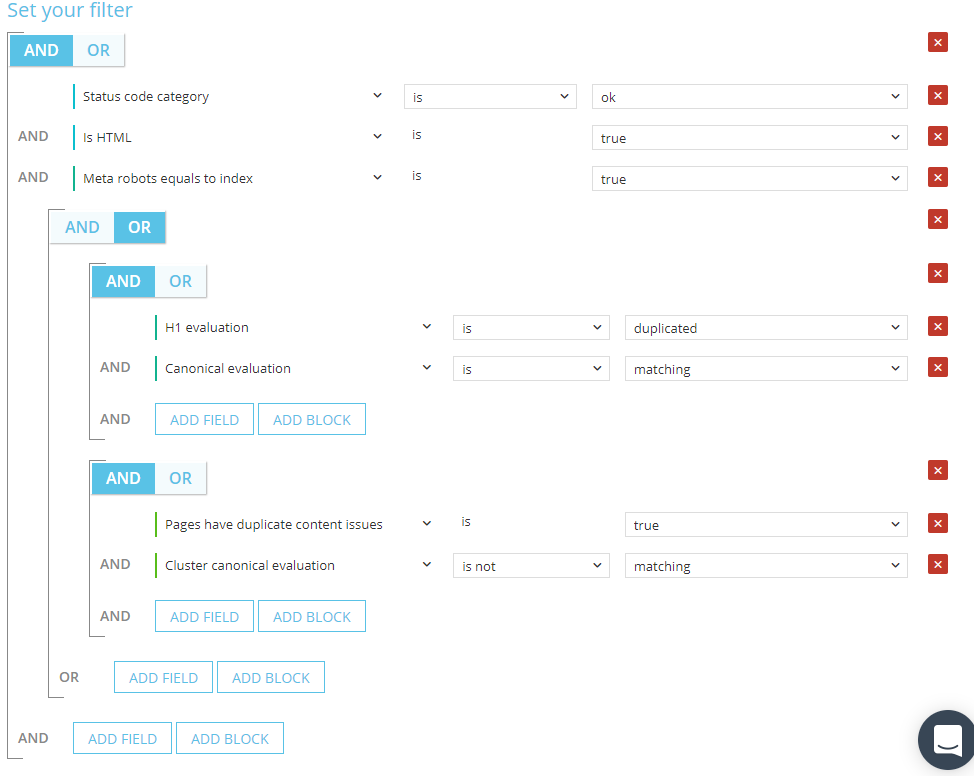

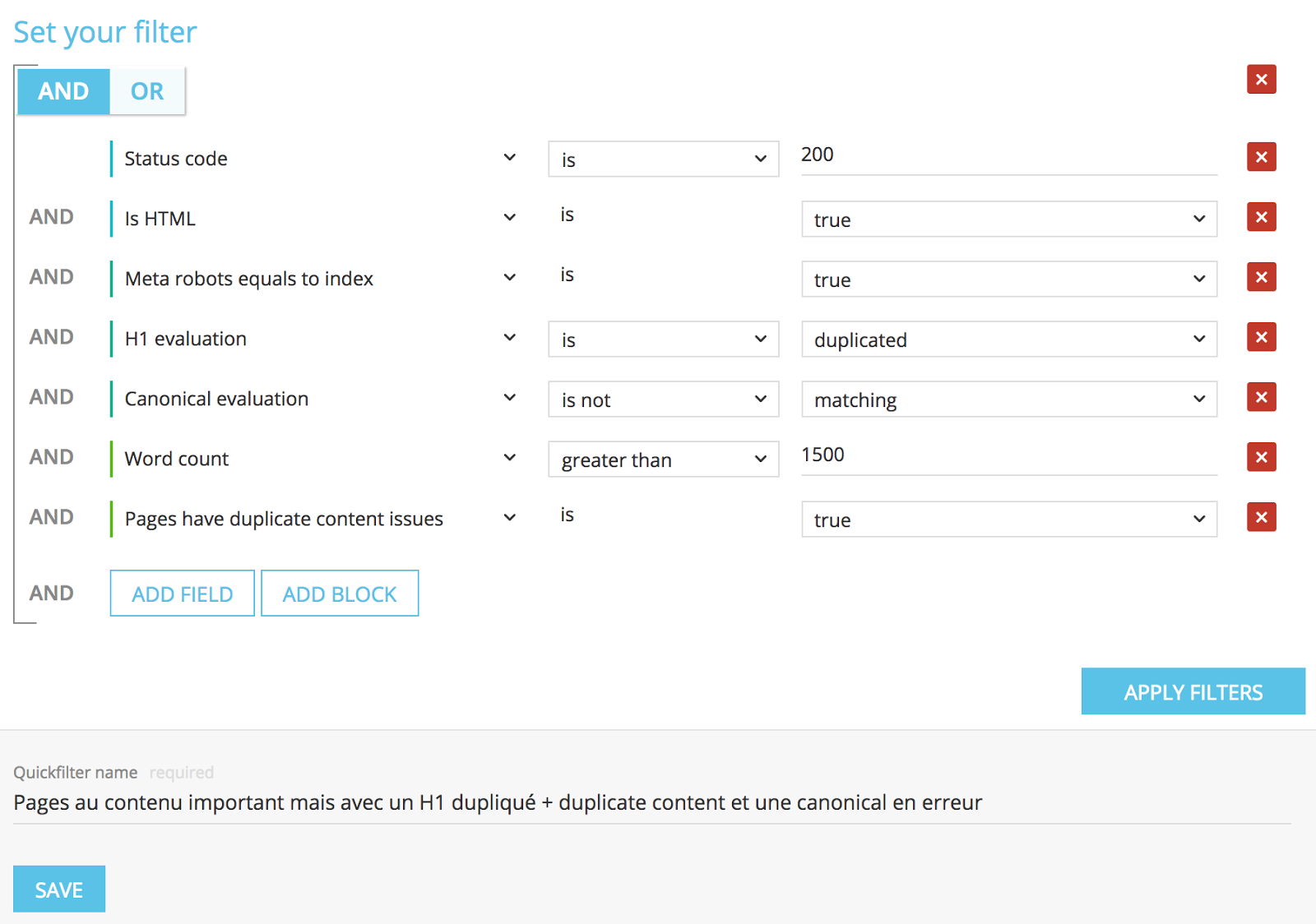

- Pages including important content but with a duplicated H1, duplicated content and canonicals in error.

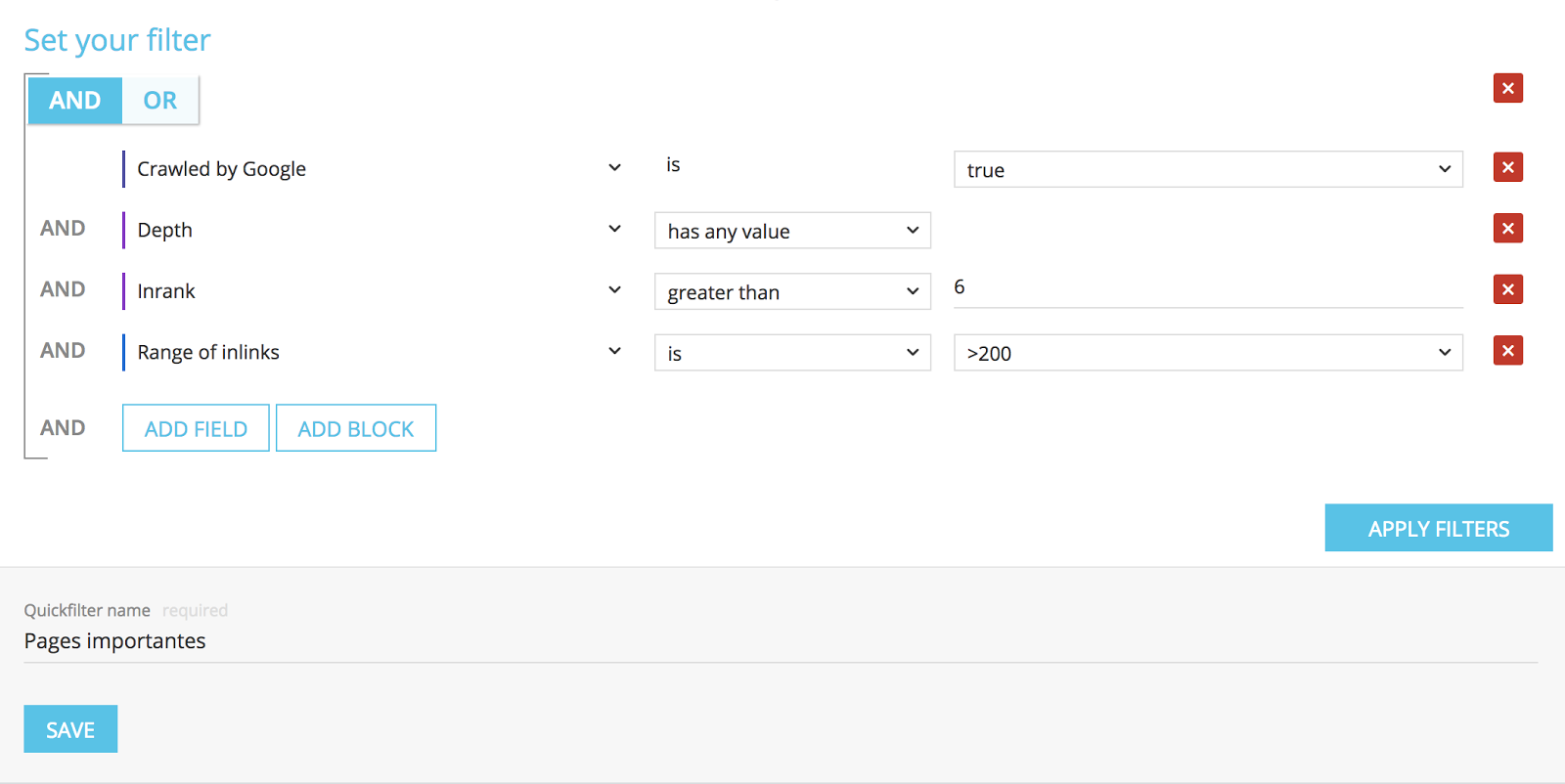

As you can see, the “Status code is 200” + “is HTML is true” + “Meta robots equals to index is true” filters are useful for focusing on your indexable pages.

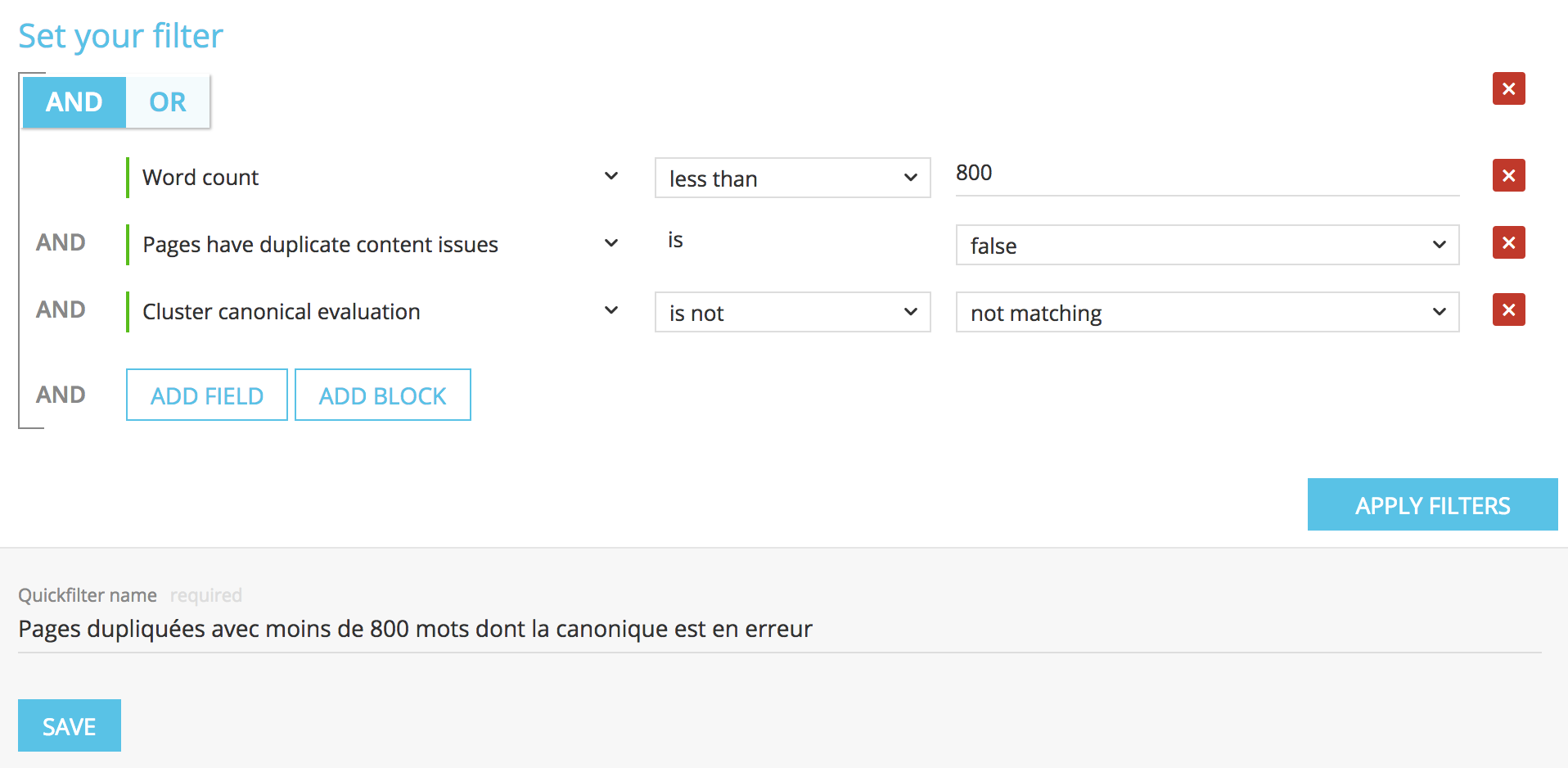

- Duplicated pages with fewer than 800 words whose canonical is in error.

Be very careful with the order of logical operators when you combine AND and OR.

Pro Tip: add the Ngrams and Similar Pages columns for a more sophisticated analysis.
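To see why the grouping of AND and OR matters, the short Python sketch below applies the same three conditions with two different groupings. The pages and field names are invented for the illustration and do not come from a real crawl.

```python
# Made-up pages standing in for crawl data.
pages = [
    {"url": "/x", "duplicated": False, "word_count": 1200, "canonical_error": True},
    {"url": "/y", "duplicated": True, "word_count": 300, "canonical_error": False},
    {"url": "/z", "duplicated": True, "word_count": 950, "canonical_error": False},
]


def urls(predicate):
    """Return the URLs of the pages accepted by the predicate."""
    return [page["url"] for page in pages if predicate(page)]


# duplicated AND (fewer than 800 words OR canonical in error)
or_grouped = urls(
    lambda p: p["duplicated"] and (p["word_count"] < 800 or p["canonical_error"])
)

# (duplicated AND fewer than 800 words) OR canonical in error
and_grouped = urls(
    lambda p: (p["duplicated"] and p["word_count"] < 800) or p["canonical_error"]
)

print(or_grouped)
print(and_grouped)
```

With this sample, the first grouping keeps only `/y`, while the second also lets `/x` through because its canonical error alone is enough. The same conditions return different pages depending on how the blocks are arranged.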

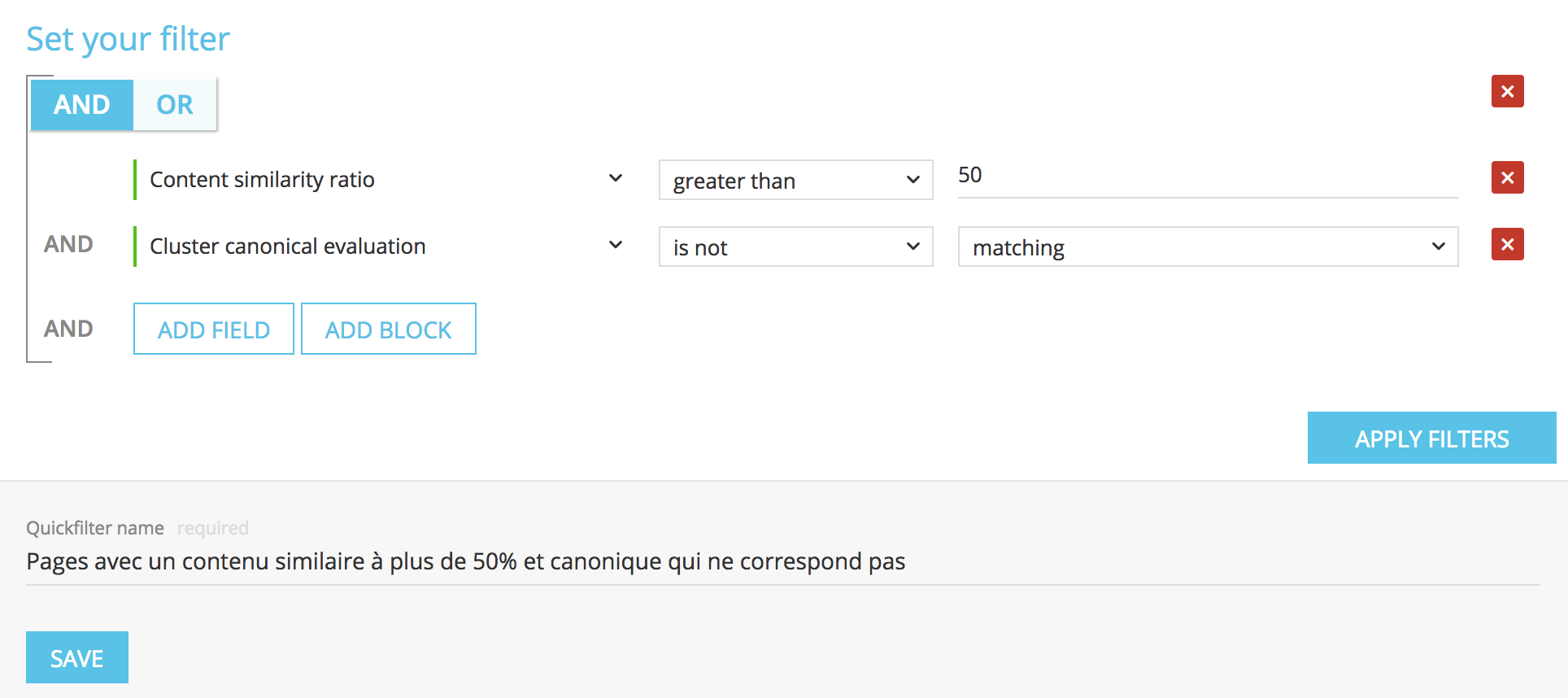

- Pages whose content is more than 50% similar and whose canonical doesn’t respond.

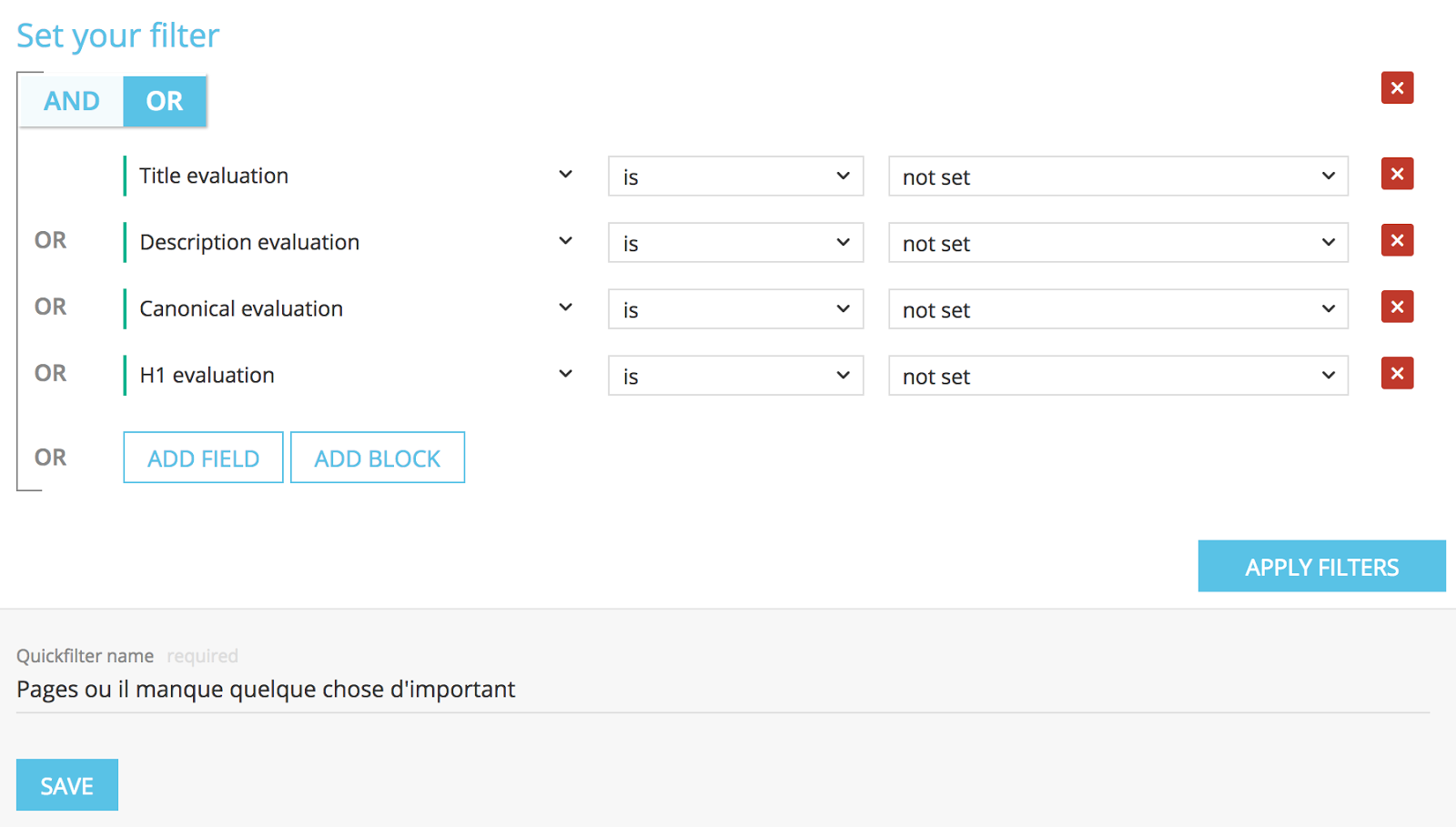

- Pages where important SEO tags are missing or empty.

Internal Linking: Objective, counteract Google Penguin

Internal linking affects your ability to spread the popularity of your pages to other essential pages and to support their ranking. Remember to run some basic checks.

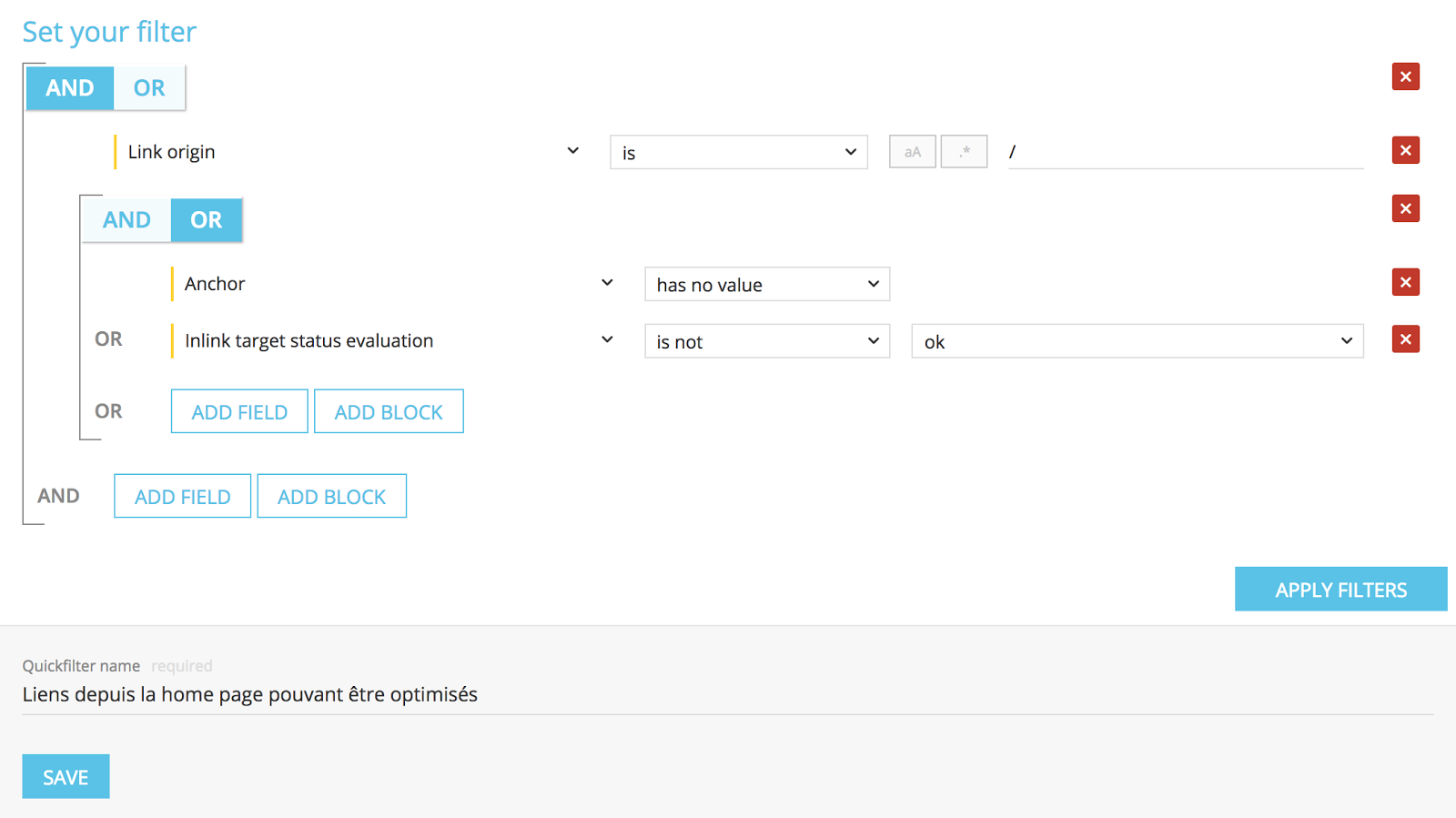

- Links from the homepage which can be optimized.

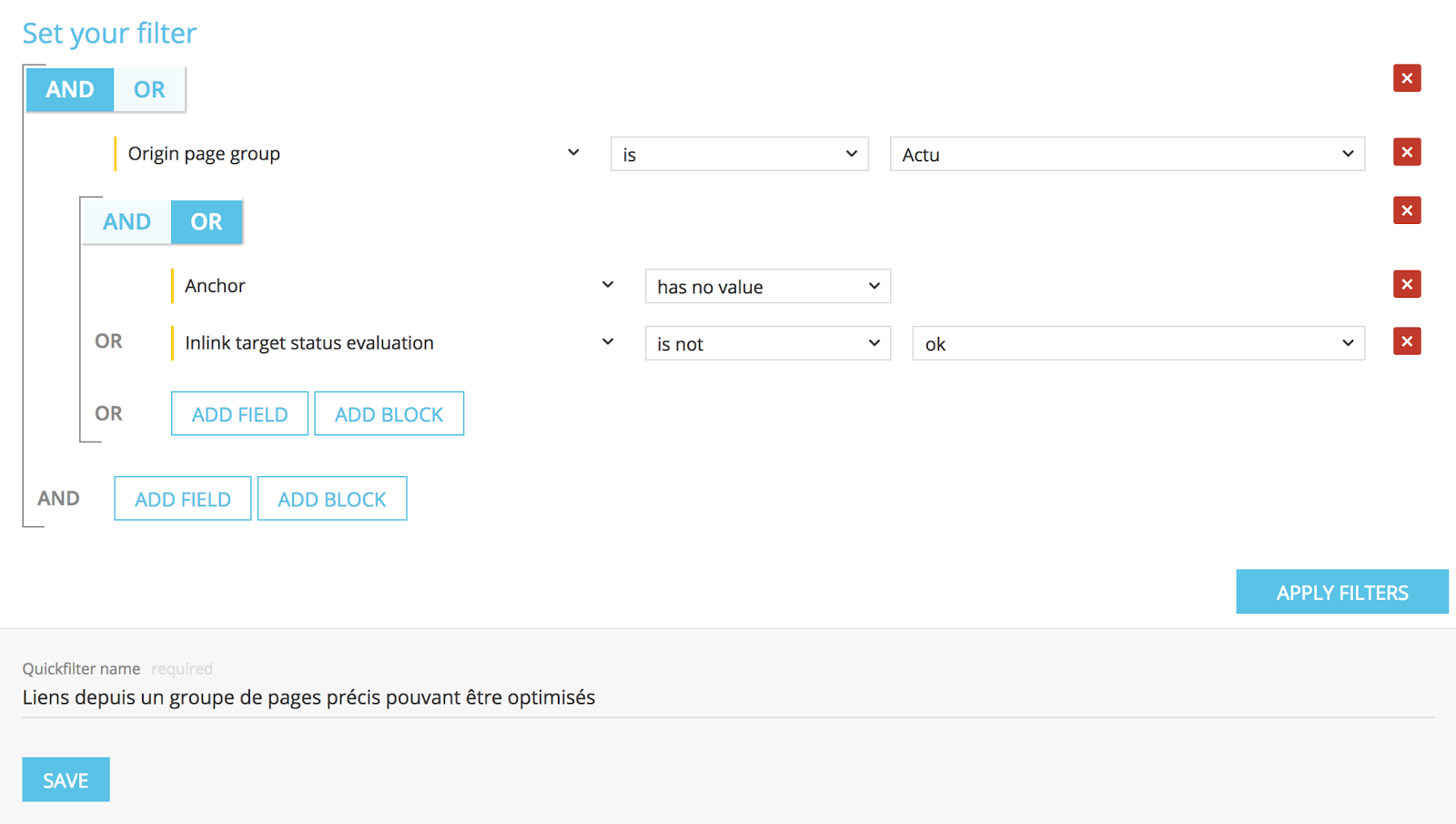

- Links which can be optimized from a specific page group.

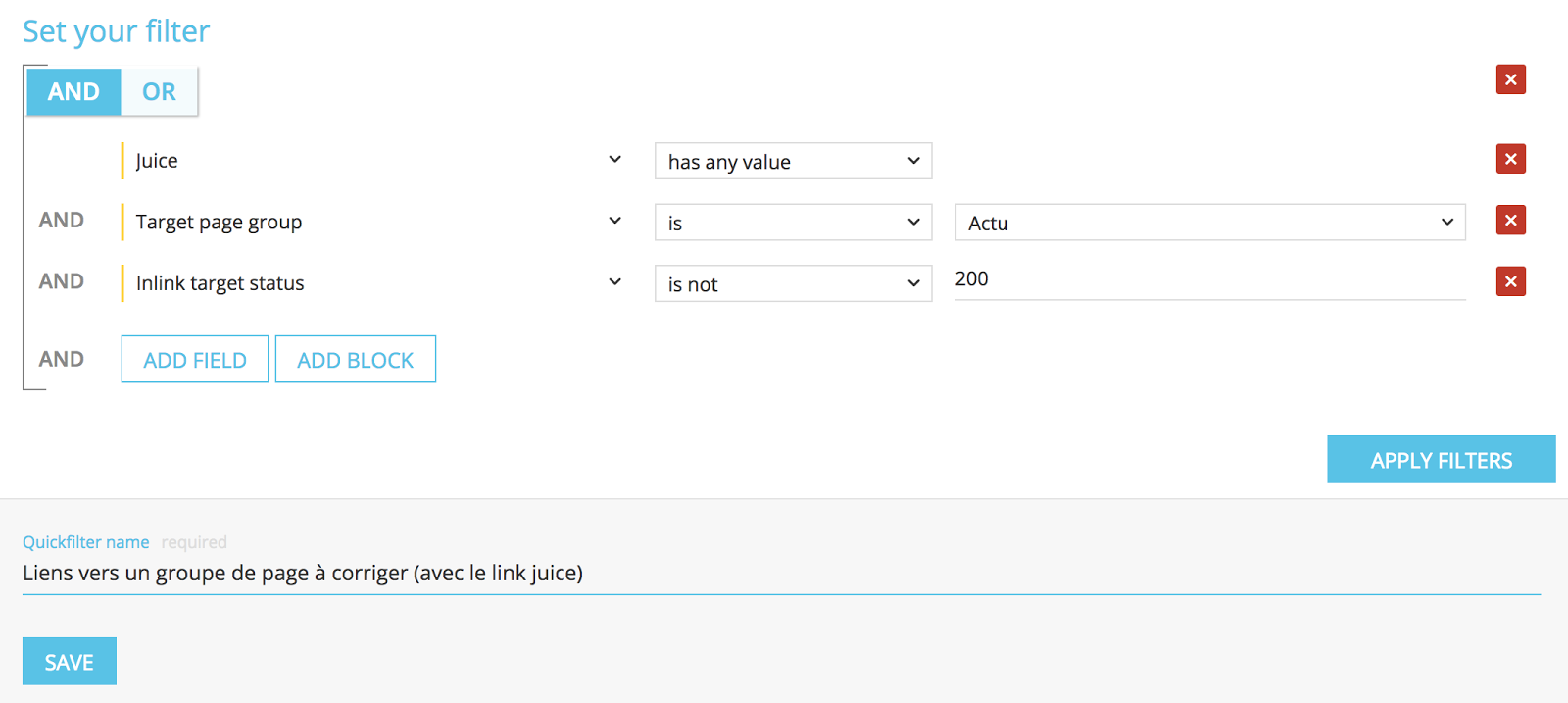

- Links towards a page group which has to be corrected (with link juice).

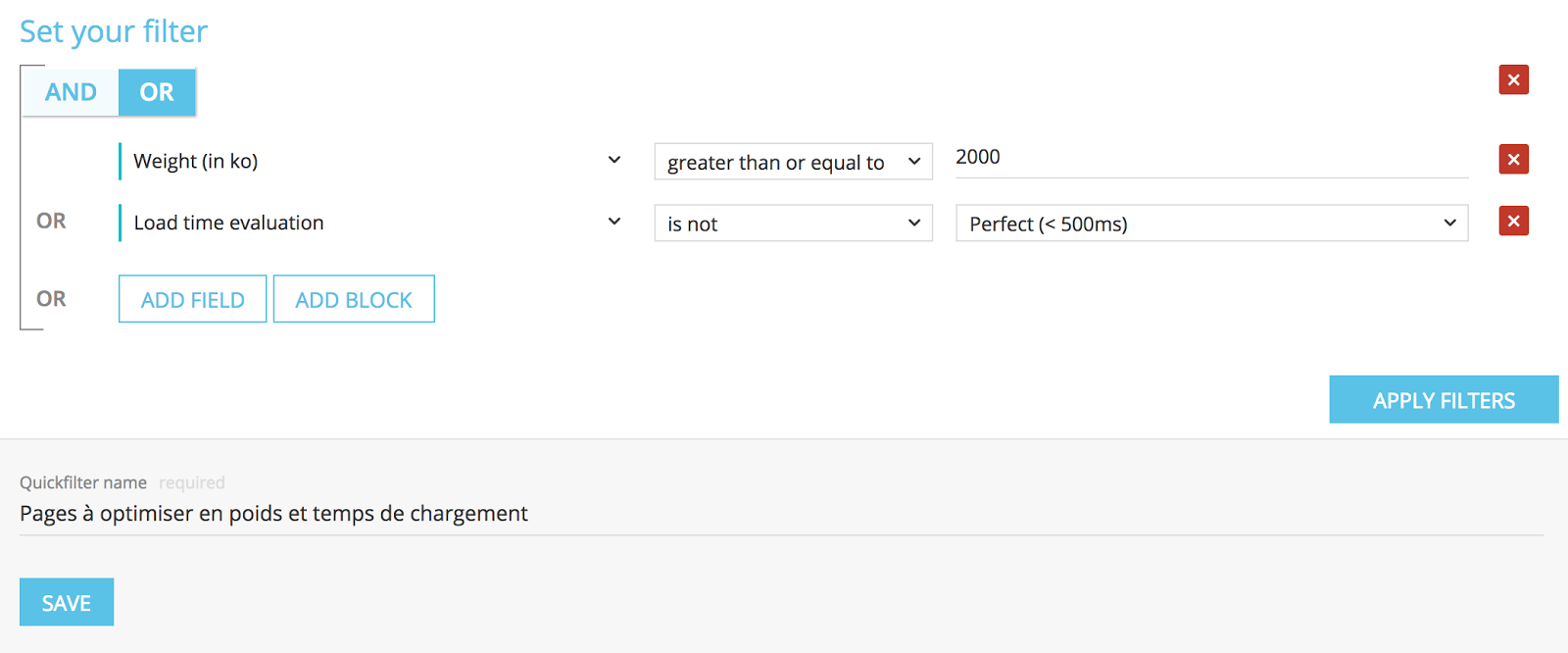

- Pages to optimize in terms of weight and load time.

Advanced Custom Filters

The advantage of Advanced projects is that you can combine log data to refine your analysis and explore important page segments: those with a high visit rate or, on the contrary, those with too low a crawl frequency.

Here are the most popular Oncrawl Custom Filters for this type of project:

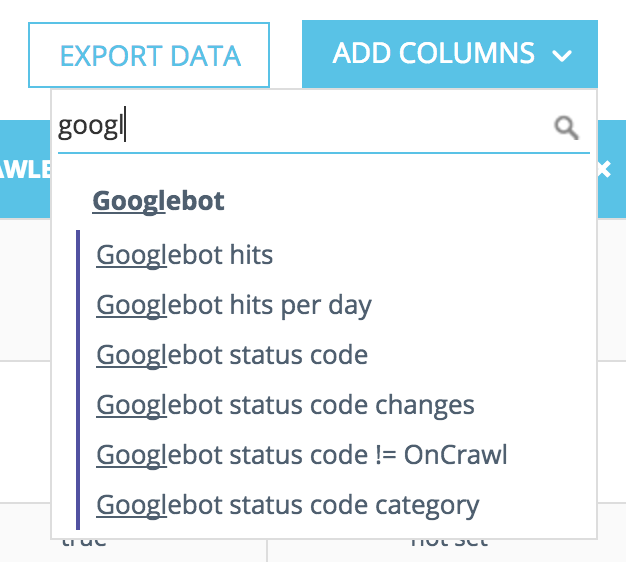

PRO TIP: for the crawl budget part, we recommend always displaying the columns related to “Google” in the Data Explorer.

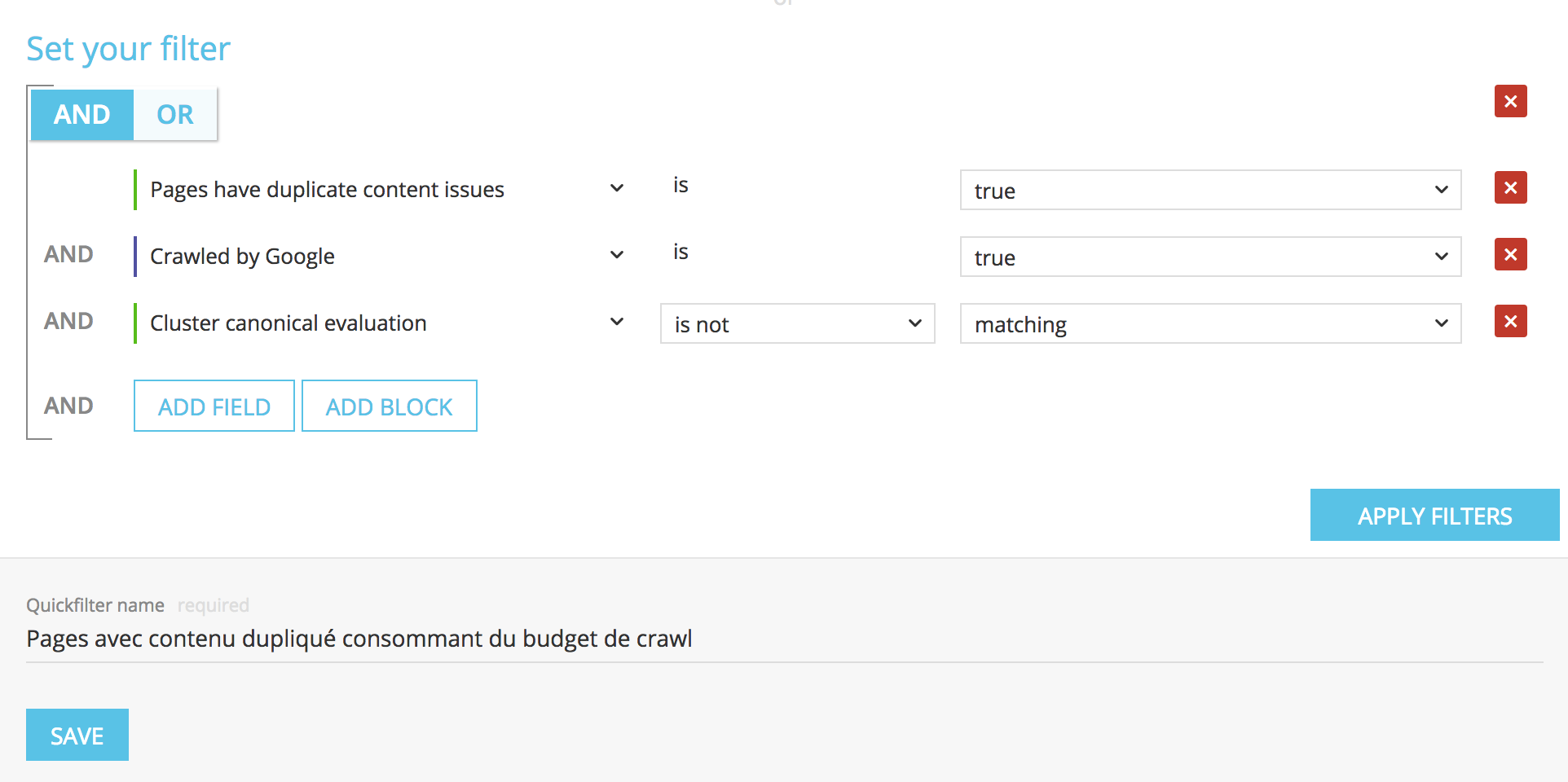

- Pages with duplicated content that consume crawl budget.

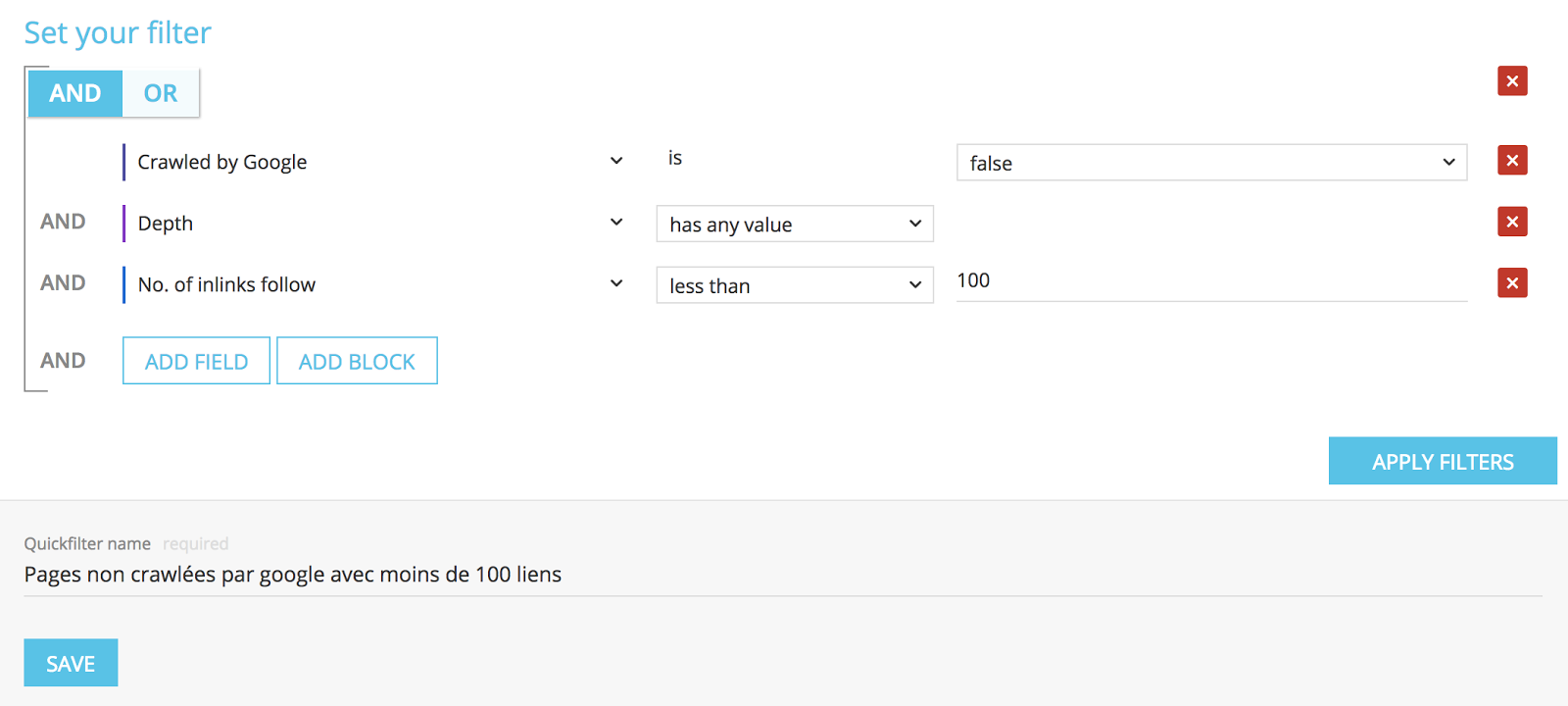

- Pages not crawled by Google with fewer than 100 links.

It is up to you to adapt the link-count threshold to your analysis.

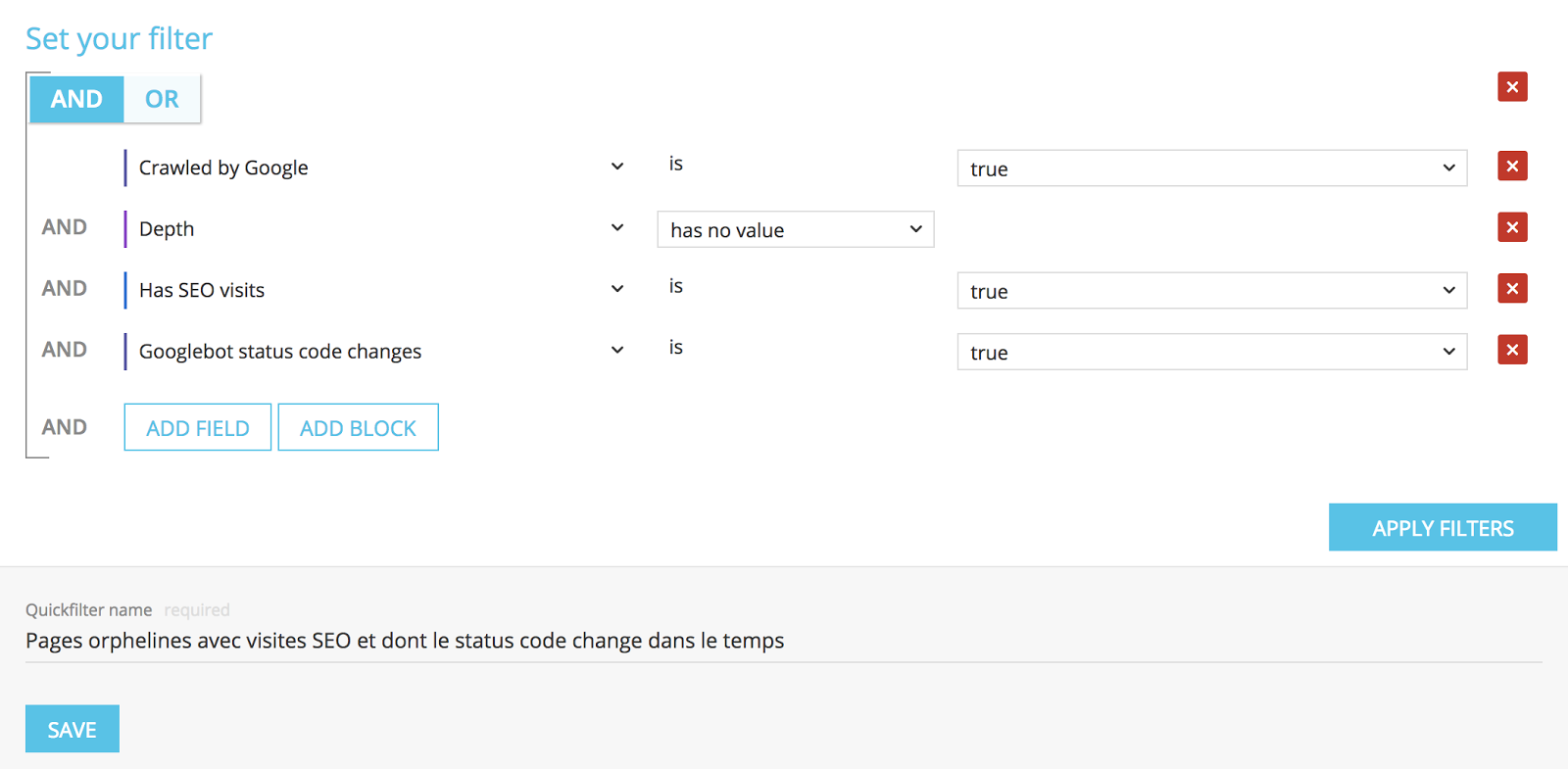

- Orphan pages with SEO visits whose status codes change.

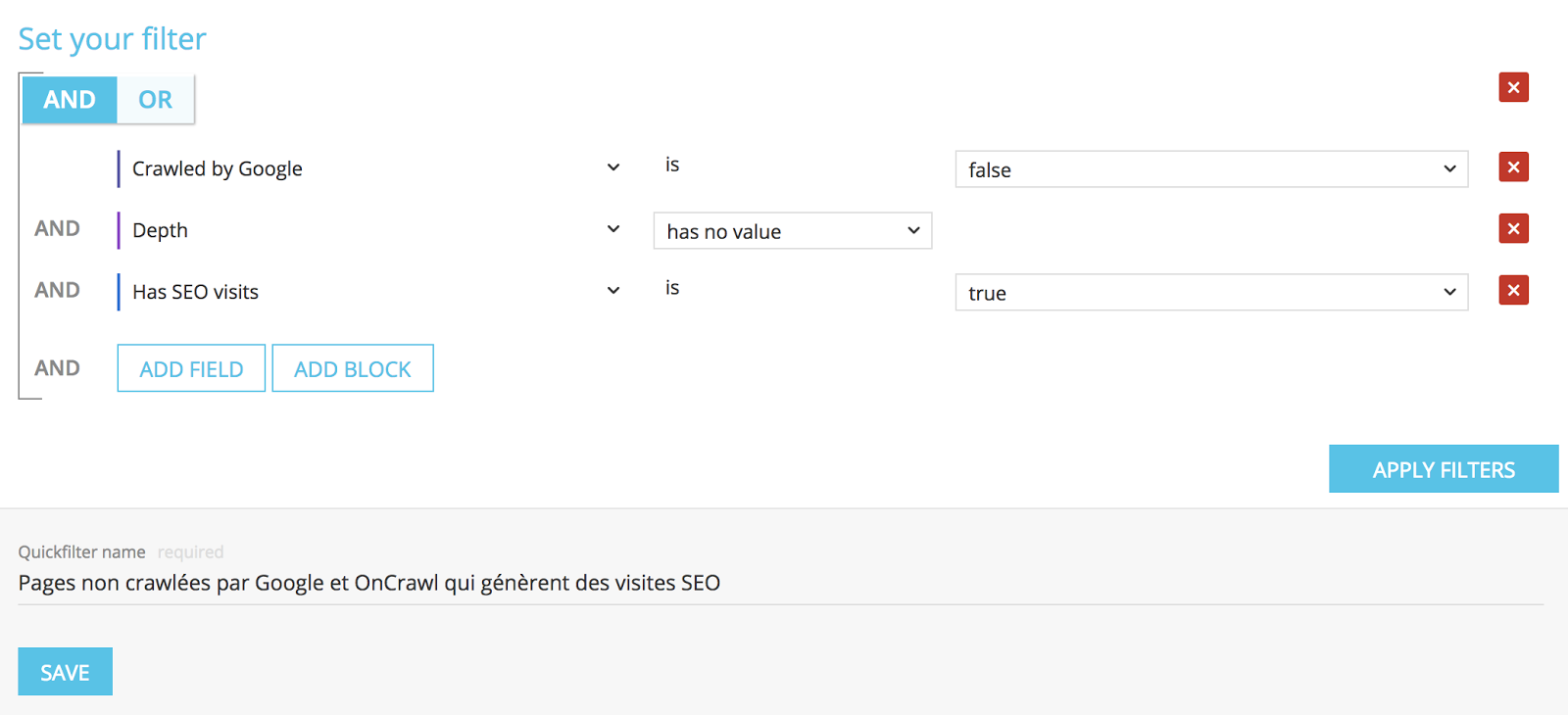

- Orphan pages which are not crawled by Google or Oncrawl but still generate SEO visits (yes, it is possible).

Pro Tip: make sure to separate the pages with and without SEO visits by displaying the “Has SEO Visits” column coming from Google, and sort them by their Google visits (the first one is frequently the homepage). These are your best pages for building powerful internal linking.

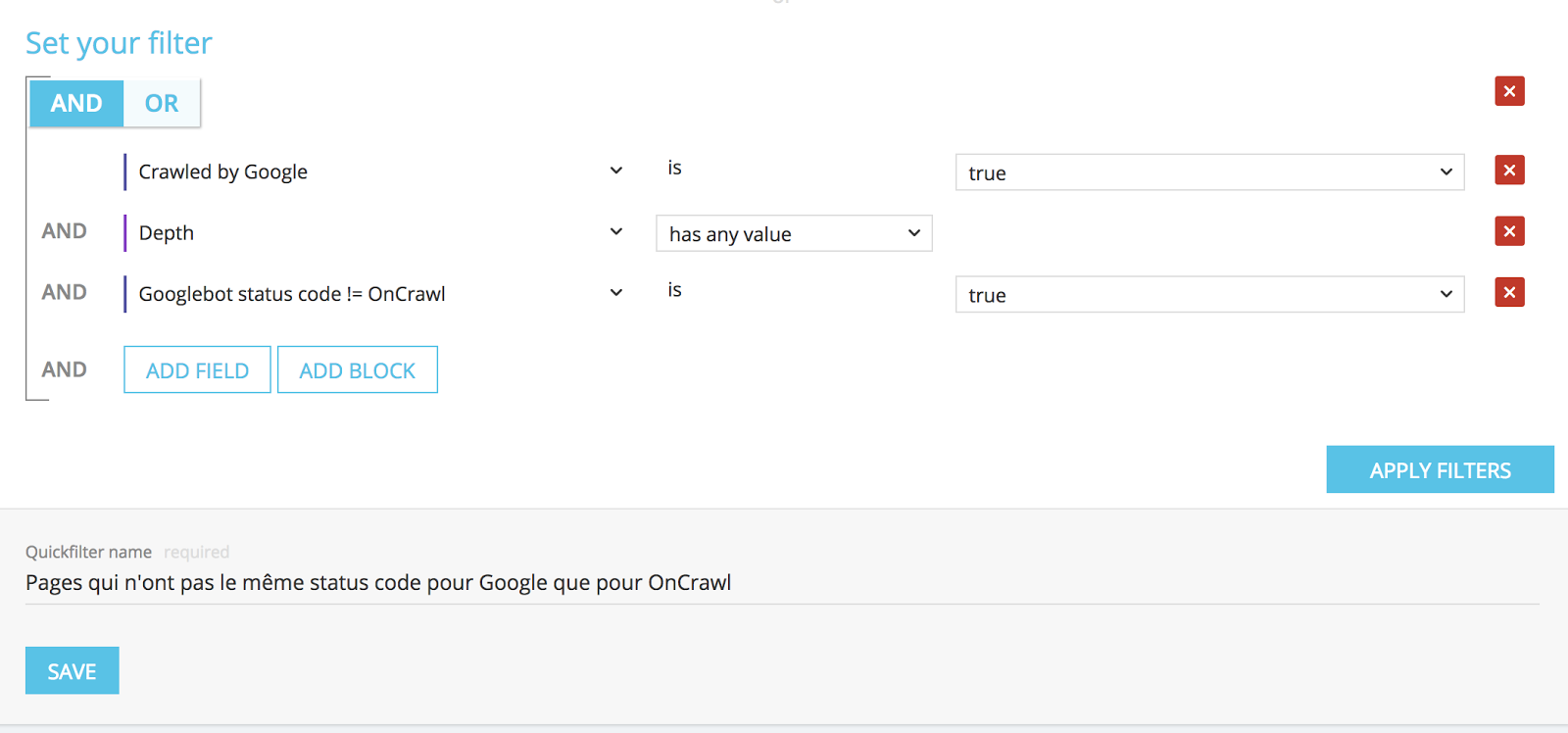

- Pages which don’t have the same status codes for Google as for Oncrawl.

These examples are only a small part of what is possible. They complement and enrich the filters and charts that Oncrawl generates as you navigate the app and the Quick Filters.

Conclusion

The Oncrawl Custom Filters are extremely powerful and appreciated by our users because, thanks to the CSV export, they let you create data sets that can be processed in other tools such as RStudio, spreadsheets, Excel and many others.
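As an illustration of what processing an export in another tool can look like, here is a minimal Python sketch using only the standard library. The column names (`url`, `status_code`, `word_count`), the comma delimiter and the sample rows are assumptions; a real export will contain the columns you chose in your data table.

```python
import csv
import io
from collections import Counter

# Stand-in for an exported file. With a real export, replace this with
# open("your-export.csv", newline="") and check the delimiter it uses.
export = io.StringIO(
    "url,status_code,word_count\n"
    "/a,200,950\n"
    "/b,404,0\n"
    "/c,200,120\n"
)

rows = list(csv.DictReader(export))

# Count pages per status code (values are read as strings).
status_counts = Counter(row["status_code"] for row in rows)

# List pages under an arbitrary 300-word threshold.
thin_pages = [row["url"] for row in rows if int(row["word_count"]) < 300]

print(status_counts)
print(thin_pages)
```

From there, the same rows can be grouped, joined with other data sources or charted in the tool of your choice.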

This is also a first step toward integrating our API, because you will be working with OQL, our query language. We already have Google Spreadsheet connectors, for example.

The possibilities are almost limitless: it is up to you to create filters that match your needs. Don’t hesitate to share them with us and the Oncrawl community. We will be pleased to add them to future versions of the app if they are relevant.

Using the Data Explorer and its filters can be complex for a new user. But don’t worry, you just need a little practice to use Oncrawl filters efficiently.

Our Customer Success Managers are here to support you if needed. You can contact them through the chat in the app.

Now it is your turn!