The purpose of a technical SEO audit is to identify as many issues as possible that have a technical cause and affect search engine performance. This includes factors such as the website’s structure, speed, and mobile-friendliness, as well as its use of keywords, meta tags, and other elements that can affect how search engines crawl and index the site. A technical SEO audit lets you run an in-depth checkup of the website and explore the different ways in which it may be underperforming because of an inefficient technical setup.

It is important to note that the technical SEO audit does not concern the content of the site, only its structure and technical elements. These elements can nonetheless have a significant impact on your site’s SEO, so it is essential to take them into account in your SEO strategy.

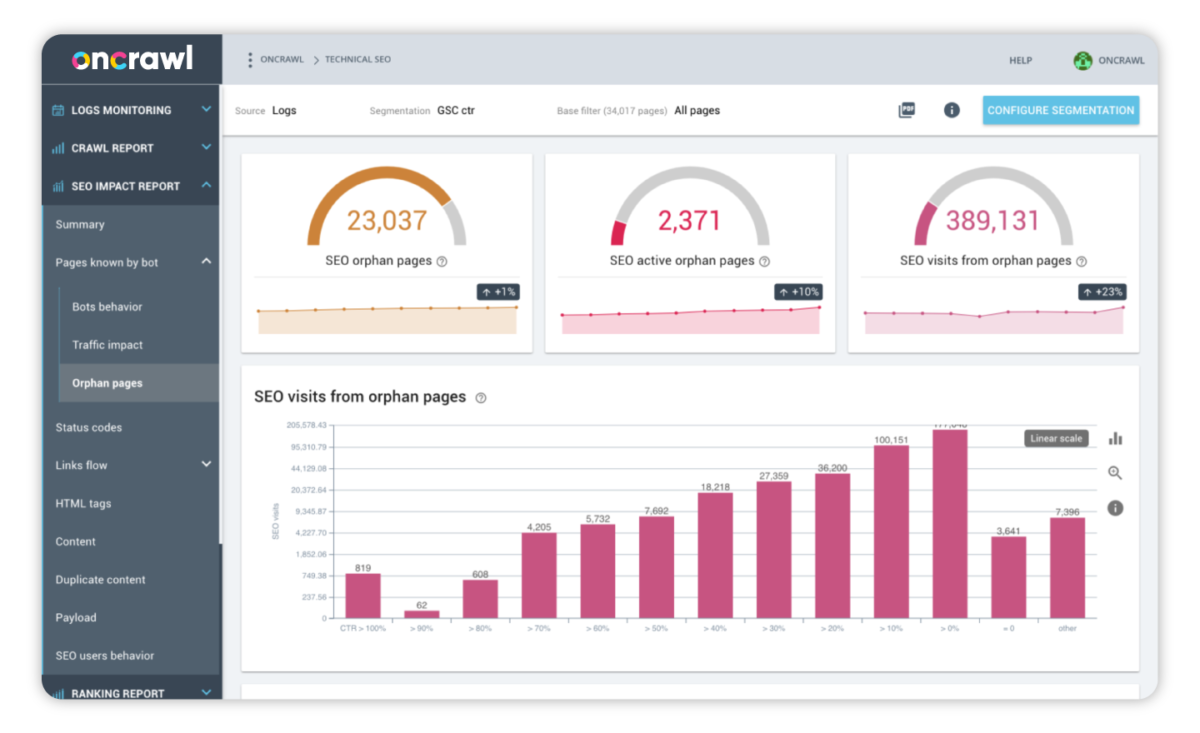

Whether or not you work from an SEO checklist, you will need to gather technical information about all of your website’s pages and analyze the results. The most efficient way to do this is to run a crawl with an SEO crawler, which will carry out many of the basic analyses for you. For example, Oncrawl’s SEO platform produces ready-to-use dashboards on different technical issues to support recommendations: if you’re examining HTTP status code errors, you can see the distribution and origin of errors for your entire site in a single view.
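
To make the idea of "distribution and origin of errors" concrete, here is a minimal Python sketch of the kind of aggregation such a view relies on. It is not Oncrawl's implementation or API: the `CRAWL_RESULTS` records, the function names, and the `example.com` URLs are all hypothetical, and a real crawl export would contain far more fields.

```python
from collections import Counter, defaultdict

# Hypothetical crawl records (assumed format, not a real crawler export):
# (crawled URL, HTTP status code, page that linked to it).
CRAWL_RESULTS = [
    ("https://example.com/", 200, None),
    ("https://example.com/blog", 200, "https://example.com/"),
    ("https://example.com/old-page", 404, "https://example.com/blog"),
    ("https://example.com/promo", 301, "https://example.com/"),
    ("https://example.com/cart", 500, "https://example.com/blog"),
]


def status_distribution(results):
    """Count crawled URLs per status class (2xx, 3xx, 4xx, 5xx)."""
    return Counter(f"{status // 100}xx" for _, status, _ in results)


def error_origins(results):
    """Map each linking page to the error URLs (4xx/5xx) it points to."""
    origins = defaultdict(list)
    for url, status, source in results:
        if status >= 400 and source is not None:
            origins[source].append((url, status))
    return dict(origins)


if __name__ == "__main__":
    print(status_distribution(CRAWL_RESULTS))
    for source, errors in error_origins(CRAWL_RESULTS).items():
        print(source, "links to", errors)
```

Grouping errors by the page that links to them, rather than only listing the broken URLs, points to where the fix should be made: in this sample data, both error URLs are linked from the same page.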