The Oncrawl API lets you scale your SEO data science, helping you generate more creative yet precise technical recommendations that make your website content more discoverable and meaningful to search engines. Before any data-driven SEO can take place, you need to connect to the API and extract data, which we show how to do below using Python.

The objective is to extract data from the crawls you run, giving you a basis for data science that leads to actionable, data-driven recommendations. In this article, we will walk you through how to set up and use Oncrawl’s API.

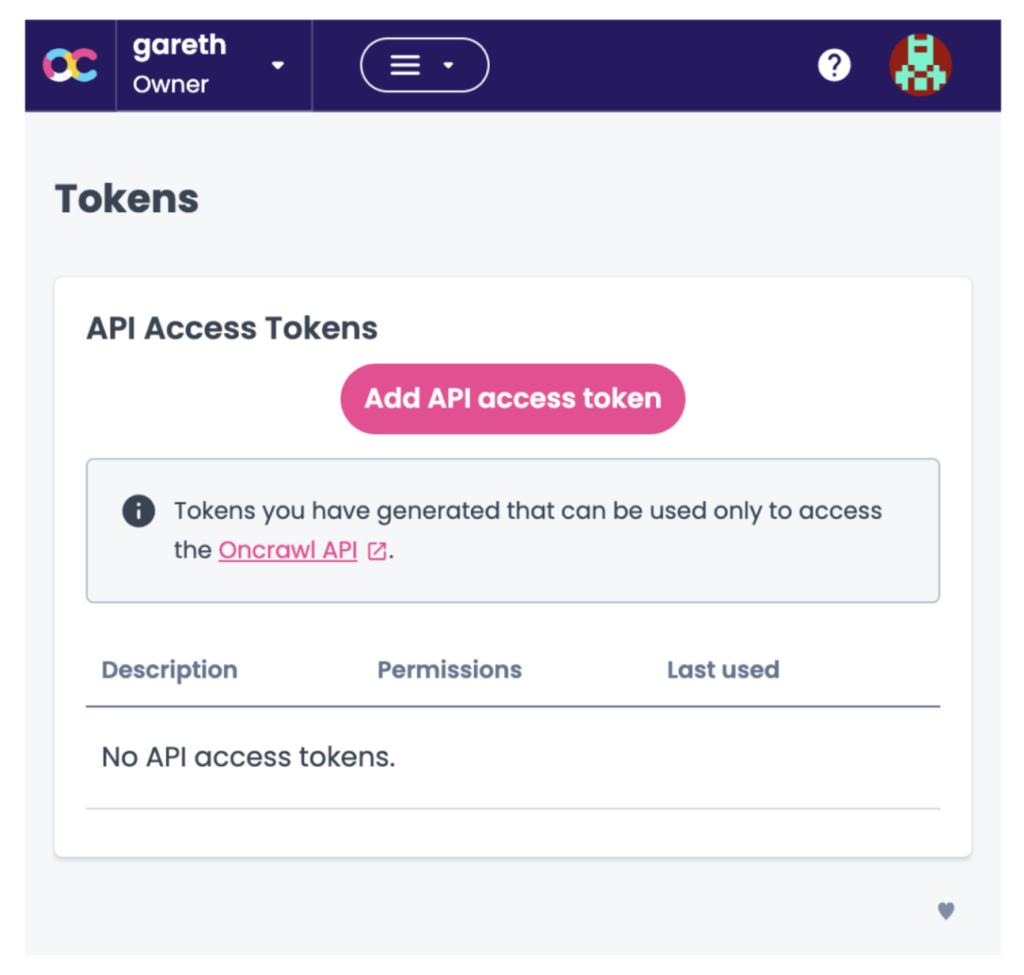

Get a token

Before you do any coding, you’ll need an API token. This can be obtained from the tokens page.

The next step is to click Add API access token and note your API token.

Import libraries and set parameters

Now you can start coding! Start a new iPython notebook in Jupyter and import the libraries as below.

import requests
import pandas as pd
import numpy as np
import json

Requests allows you to make API calls.

Pandas allows you to manipulate data frames (think tabular data); ‘pd’ is the conventional abbreviation used when calling its functions.

NumPy provides functions for numerical data manipulation and is abbreviated as ‘np’.

The json module allows for the manipulation of data in JSON format, which is common in API responses.

api_token = 'your api key'

This is the API token obtained earlier (see above).

base_api = 'https://app.oncrawl.com/api/v2/'

Set the base API URL to save your fingers from retyping as you’ll be calling the API a few times.
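To avoid pasting the token into the notebook, it can be read from an environment variable instead. The sketch below assumes a variable named ONCRAWL_API_TOKEN (a hypothetical name chosen for this example) and adds two small helpers, auth_headers and api_url, which are illustrative and not part of the Oncrawl API.

```python
import os

base_api = 'https://app.oncrawl.com/api/v2/'

# ONCRAWL_API_TOKEN is a hypothetical variable name; fall back to a placeholder.
api_token = os.environ.get('ONCRAWL_API_TOKEN', 'your api key')


def auth_headers(token):
    """Return the Authorization header used by the calls in this article."""
    return {'Authorization': 'Bearer ' + token}


def api_url(*parts):
    """Join path segments onto base_api without doubling or dropping slashes."""
    return base_api + '/'.join(part.strip('/') for part in parts)


print(api_url('projects'))
print(api_url('data/crawl/', 'abc123', 'pages/fields'))
```

Building URLs through one helper keeps the slash handling in a single place, which matters once the crawl identifier and data type are concatenated in later calls.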

List your projects

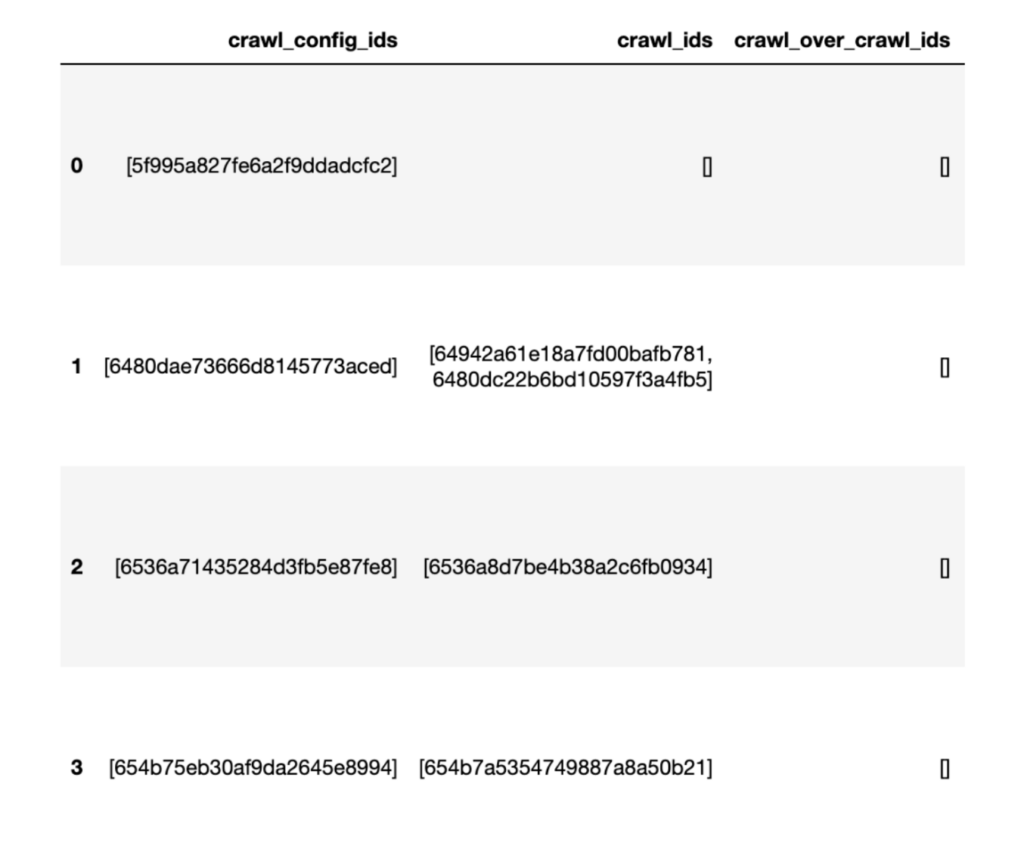

Before you can extract data from your crawl, you need to know the crawl identifier for a later API call. We’ll extract these by querying the projects endpoint.

endpoint = 'projects'

Next, we’ll make the API call using the ‘get’ function from the requests library imported earlier.

As you’ll see, we pass in the API call URL, which is built from base_api and the endpoint. The other argument is the headers dictionary, which carries the API token to authorize the call.

p_response = requests.get(base_api + endpoint,
                          headers={'Authorization': 'Bearer ' + api_token})

The results of the API call are stored in p_response. We can now read the response using its json() method, extract the ‘projects’ part of the API response and convert it to a data frame, which is more user friendly.

p_data = p_response.json()
projects_data = p_data['projects']
projects_df = pd.DataFrame(projects_data)
display(projects_df)

The data frame lists all the projects in the Oncrawl account along with their crawl identifiers (crawl_ids), which will be used to get our crawl data.
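Before relying on the response, it can help to fail early on an HTTP error and to flatten the crawl identifiers into a simple list. The sketch below assumes each project entry carries a crawl_ids list, as described above, and a name key (an assumption not confirmed in this article). Both helper names are hypothetical.

```python
def projects_from_response(response):
    """Raise on HTTP errors, then return the 'projects' list from the JSON body."""
    response.raise_for_status()  # requests raises HTTPError for 4xx/5xx responses
    return response.json()['projects']


def list_crawl_ids(projects):
    """Return (project name, crawl id) pairs, one per crawl."""
    pairs = []
    for project in projects:
        # 'name' is an assumed key; .get() returns None if it is absent.
        name = project.get('name')
        for crawl_id in project.get('crawl_ids', []):
            pairs.append((name, crawl_id))
    return pairs
```

With the p_response object from the call above, list_crawl_ids(projects_from_response(p_response)) would give one pair per crawl, which makes it easier to copy the identifier needed in the next step.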

Get fields

Having selected the crawl we want data from, we’ll set three values: the crawl identifier, the data type (pages/fields, which describes the data available for each URL) and the crawl data endpoint.

crawl_id = '654b7a5354749887a8a50b21'
data_type = 'pages/fields'
endpoint = 'data/crawl/'

Of course, before we get the actual data, we need to know what fields are available so we’ll grab these first.

These values are passed into the requests get function, and the response is parsed as JSON and saved into the f_response variable:

f_response = requests.get(base_api + endpoint + crawl_id + "/" + data_type,
                          headers={'Authorization': 'Bearer ' + api_token}).json()

Once run, the output will look like this:

{'fields': [{'actions': ['has_no_value',
                         'startswith',
                         'contains',
                         'endswith',
                         'not_equals',
                         'equals',
                         'not_startswith',
                         'has_value',
                         'not_endswith',
                         'not_contains'],
             'agg_dimension': True,
             'agg_metric_methods': ['value_count', 'cardinality'],
             'arity': 'one',
             'can_display': True,
             'can_filter': True,
             'can_sort': True,
             'category': 'HTML Quality',
             'name': 'alt_evaluation',
             'type': 'string',
             'user_select': True},
            {'actions': ['not_equals', 'equals'],
             'agg_dimension': True,
…

The output is incredibly long, considering that all we want from it is the actual field names (the value of each ‘name’ key, such as ‘alt_evaluation’ above), so we know what data to extract from our selected site crawl.

To obtain the field names that are available in the Oncrawl API (i.e. the data features of the URLs), we’ll iterate through the JSON output produced by the API.


This uses a Python coding technique known as a list comprehension (think of it as a one-line for loop) that picks out the name of each field and stores it in a new list called api_fields.

api_fields = [field['name'] for field in f_response['fields']]
print(len(api_fields))
api_fields

809
['alt_evaluation', 'canonical_evaluation', 'canonicals', 'cluster_canonical_status', 'clusters', 'depth', 'description_evaluation', 'description_hash', 'description_is_optimized', 'description_length', 'description_length_range', 'duplicate_description_status', 'duplicate_evaluation', 'duplicate_h1_status', …

The output shows there are over 800 fields, i.e. data features, that we can pull for each URL in our crawl. Some will be used for filtering, and others will be used for actual analysis.
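With this many fields, grouping the names by their category key can make the list easier to browse. The sketch below uses a trimmed stand-in for the API response. The first entry mirrors the output shown earlier, while the second entry is hypothetical and included only to show the grouping. It also assumes that entries expose category and can_filter keys, as the first entry does.

```python
from collections import defaultdict

# Trimmed stand-in for the fields response; the second entry is hypothetical.
sample_response = {
    'fields': [
        {'name': 'alt_evaluation', 'category': 'HTML Quality', 'can_filter': True},
        {'name': 'example_field', 'category': 'Example category', 'can_filter': False},
    ]
}

# Map each category to the list of field names it contains.
fields_by_category = defaultdict(list)
for field in sample_response['fields']:
    fields_by_category[field.get('category', 'uncategorised')].append(field['name'])

# Names of the fields the response marks as usable in filters.
filterable = [f['name'] for f in sample_response['fields'] if f.get('can_filter')]

print(dict(fields_by_category))
print(filterable)
```

Replacing sample_response with the real f_response would apply the same grouping to all of the fields returned for the crawl.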

Obtain crawl data

With the crawl id (which lets us request the correct crawl) and the list of available fields, we’re now in a position to start getting data for some SEO science.

As an SEO scientist, I personally want unlimited data because I like having the autonomy to decide which data fields to include, and which rows to include or reject when examining audit data. So we’ll be adding ?export=true to the API call URL to remove the standard 1,000 row limit.

Doing so means the API call will output the data as a CSV file, although there are also options to stream the data to a cloud storage facility such as AWS or GCP.

We’ll set the data type to that effect.

data_type = 'pages?export=true'

Then make the call, storing the output in a variable called c_response.

c_response = requests.post(
    base_api + endpoint + crawl_id + "/" + data_type,
    headers={'Authorization': 'Bearer {}'.format(api_token)},
    json={
        "fields": ['url', 'indexable', 'inrank', 'nb_inlinks', 'status_code', 'title']
    }
)
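Before sending a request like the one above, it can be worth checking that every requested field name actually appears in the api_fields list built earlier, since a typo is easy to make among 800+ names. The helper below is a minimal sketch; missing_fields is a hypothetical name and the shortened list stands in for the real api_fields.

```python
def missing_fields(requested, available):
    """Return the requested field names that are not in the available list, in order."""
    available_set = set(available)
    return [name for name in requested if name not in available_set]


# Shortened stand-in for the api_fields list built earlier.
api_fields_sample = ['url', 'indexable', 'inrank', 'nb_inlinks', 'status_code', 'title']
wanted = ['url', 'status_code', 'not_a_real_field']

print(missing_fields(wanted, api_fields_sample))  # ['not_a_real_field']
```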

Once run, we’ll need to save the output locally as a CSV file:

with open('oncrawl_pages_data.csv', 'w', encoding='utf-8', newline='') as f:
    f.write(c_response.text)
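For large crawls, holding the whole export in memory via c_response.text may be impractical. The requests library can stream a response when the call is made with stream=True, and the body can then be written to disk in chunks with iter_content. The helper below is a sketch of that approach. save_export is a hypothetical name and the chunk size is an arbitrary choice.

```python
def save_export(response, path, chunk_size=65536):
    """Write a streamed requests response to disk in chunks; return bytes written."""
    response.raise_for_status()  # stop early on 4xx/5xx responses
    written = 0
    with open(path, 'wb') as f:
        for chunk in response.iter_content(chunk_size=chunk_size):
            if chunk:  # skip empty keep-alive chunks
                f.write(chunk)
                written += len(chunk)
    return written
```

It would be used by adding stream=True to the requests.post call shown earlier and passing the resulting response, together with 'oncrawl_pages_data.csv', to save_export.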

Ready for SEO Science

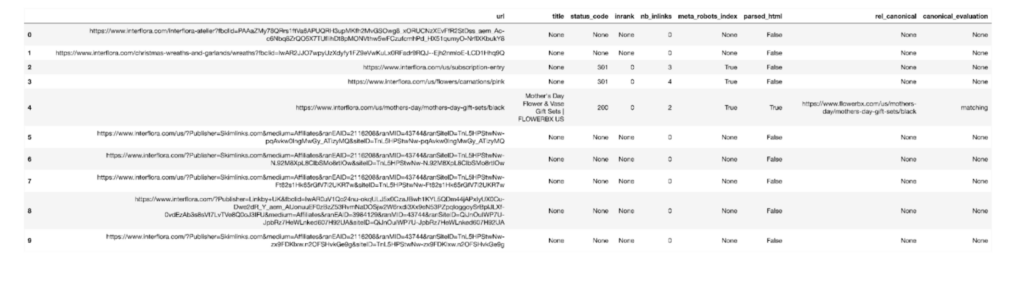

Now you’re ready for SEO science. To see the data simply use the Pandas (abbreviated as pd) read_csv function:

oncrawl_pages_df = pd.read_csv('oncrawl_pages_data.csv', delimiter=';')

oncrawl_pages_df

An extract of the API crawl data is shown below.

Of course, there are a few data steps that must be carried out before meaningful analysis and insights can be derived from the data, and these will form the basis of our next article.
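One quick check that can be run before any deeper analysis is a count of URLs per HTTP status code. The sketch below uses a small hypothetical sample in place of oncrawl_pages_data.csv, and assumes the exported column names match the field names requested in the API call.

```python
import io

import pandas as pd

# Hypothetical three-row sample standing in for oncrawl_pages_data.csv.
sample_csv = (
    "url;status_code;nb_inlinks\n"
    "https://example.com/;200;12\n"
    "https://example.com/old;301;3\n"
    "https://example.com/blog;200;7\n"
)

pages_df = pd.read_csv(io.StringIO(sample_csv), delimiter=';')

# Count how many URLs returned each HTTP status code.
status_counts = pages_df['status_code'].value_counts()
print(status_counts)
```

Swapping the StringIO object for the saved file name would run the same count on the real export.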

In the next article, I will apply data science techniques to examine the audit data, which will support science-driven technical recommendations for SEO.