This article is a follow-up to the first part about the automatic extraction of keywords from a text. The previous article dealt with the so-called “traditional” approaches to extracting keywords from a text: statistics-based or graph-based.

Here, we will explore methods with a more semantic approach. First, we will introduce word embeddings and transformers, i.e. contextual embeddings. Then we will share an example based on the BERT model, using the same text about John Coltrane’s album Impressions (source: francemusique.fr). Finally, we will present some evaluation methods, such as P@k and f1-score, as well as benchmarks from recent state-of-the-art research.

Unlike the traditional approach, the semantic approach enables you to link words that belong to a similar lexical field, even if they are different words. The idea is to move away from counting how often words occur in the text and instead extract the words that make the most sense, in other words those that provide the most relevant information in relation to the text.

Word Embeddings

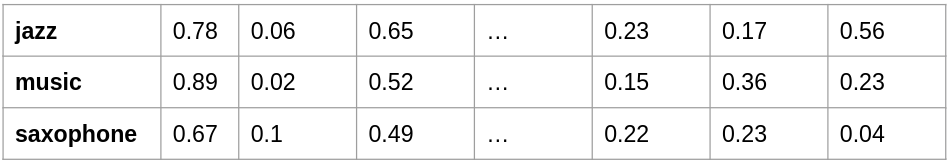

This method dates back to 2013 with the introduction of a model called word2vec. It is a “simple” artificial neural network architecture, unlike so-called “Deep Learning” architectures, which represents each word of a vocabulary as a vector. In other words, each word is represented by a list of numbers. Together, these vectors capture the semantic field of the vocabulary, and they can be compared with each other: by measuring a distance between two vectors, we can tell whether the corresponding words are semantically similar. This representation of words as arrays of numbers is called embeddings.


Once you have the vector representation of your vocabulary, you can compare words with each other. Two words have a close meaning if their vectors form a very small angle. This is usually measured with the cosine similarity, a value between -1 and 1: the closer it is to 1, the more “similar” the meanings of the words. A value close to -1 indicates vectors pointing in opposite directions. Note, however, that antonyms such as “good” and “bad” tend to appear in the same contexts, so word2vec often gives them fairly similar vectors.
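The angle-based comparison described above is commonly computed as the cosine similarity. Here is a minimal sketch in pure Python. The three-dimensional vectors are made up for illustration and do not come from a trained model; real word2vec embeddings usually have a few hundred dimensions.

```python
import math


def cosine_similarity(u: list[float], v: list[float]) -> float:
    """Cosine of the angle between two vectors, a value in [-1, 1]."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


# Hypothetical toy vectors, not real embeddings.
jazz = [0.9, 0.1, 0.3]
rock = [0.8, 0.2, 0.4]
radio = [-0.1, 0.9, 0.0]

print(round(cosine_similarity(jazz, rock), 3))   # close to 1: similar meaning
print(round(cosine_similarity(jazz, radio), 3))  # close to 0: unrelated
```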

The objective of this model is to “manufacture” these famous embeddings from a large quantity of text documents. Let’s say you have a few hundred thousand texts: the model will learn to predict a word based on the words that surround it, i.e. its context.

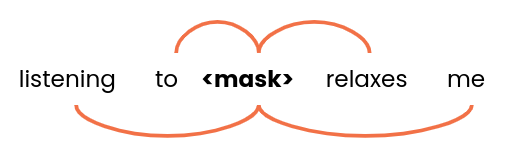


The model takes as input groups of words (of size 5, for example) and tries to find the best candidates to replace <mask>. If your corpus contains similar phrases or groups of words, the model will find words such as “jazz”, “rock”, “classical” or “reggae” in that position. All these words will therefore end up with quite similar vectors. This is how the model brings together concepts and words that belong to a similar lexical field.
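The training examples described above can be sketched as (context, target) pairs. This resembles word2vec’s CBOW variant, where the model predicts the masked target from its context; the skip-gram variant does the reverse. The snippet below only builds the pairs and does not train the shallow network itself. The sentence is made up. With `window=2`, each group spans 5 words: 2 on each side of the target. In practice, a library such as gensim (its `Word2Vec` class) handles both the windowing and the training.

```python
def context_target_pairs(tokens: list[str], window: int = 2) -> list[tuple[list[str], str]]:
    """Build (context, target) pairs: the words around position i predict tokens[i]."""
    pairs = []
    for i, target in enumerate(tokens):
        left = tokens[max(0, i - window):i]      # up to `window` words before the target
        right = tokens[i + 1:i + 1 + window]     # up to `window` words after the target
        pairs.append((left + right, target))
    return pairs


sentence = "we listened to jazz music all night".split()
for context, target in context_target_pairs(sentence):
    print(context, "->", target)
```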


On the other hand, ambiguities can arise for words that are spelled the same but have different meanings depending on the context: these are homographs.

For example:

- He sat down beside the Seine river bank.

- She deposited the money at the Chase bank.

This is a limitation of this type of model: the word will have the same vector representation regardless of the context.

Transformers

A more recent approach emerged in 2017 with Google’s paper “Attention Is All You Need”. Word2vec methods struggled to represent two identical words with different meanings. Transformer models, by contrast, can distinguish the meaning of a word based on its context. Note that there are also NLP models capable of taking sequences of words into account, such as RNNs or LSTMs, but we will not address them in this article.

Transformers popularized a key concept in neural network architecture: the attention mechanism. It enables the model to find, within a sequence of words (e.g. a sentence), how each word is related to the others.
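The attention mechanism used in Transformers is scaled dot-product attention, softmax(QKᵀ/√d)V. Here is a minimal sketch in pure Python. The 2-dimensional vectors are hypothetical, and the same vectors serve as queries, keys and values for simplicity. A real Transformer derives Q, K and V from learned projections of the embeddings and runs several attention heads in parallel.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V.

    weights[i][j] is how much token i attends to token j; each output
    vector is the weighted average of the value vectors.
    """
    d = len(keys[0])
    weights = [
        softmax([sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in keys])
        for q in queries
    ]
    outputs = [
        [sum(w * v[dim] for w, v in zip(row, values)) for dim in range(len(values[0]))]
        for row in weights
    ]
    return outputs, weights


# Hypothetical toy vectors for a 3-token sequence.
tokens = ["deposited", "money", "bank"]
x = [[1.0, 0.0], [0.7, 0.7], [0.9, 0.3]]
outputs, weights = attention(x, x, x)
for token, row in zip(tokens, weights):
    print(token, [round(w, 2) for w in row])
```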

The BERT model, created by Google in 2018, is a powerful and popular model. The advantage of such language models is that they are pre-trained, i.e. they have already been trained on a large amount of text in English, French, etc. They can then be used as is to compare texts, generate answers to questions or perform sentiment analysis, or they can be fine-tuned on a more specific corpus.

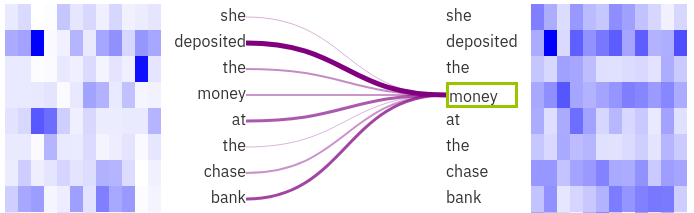

Attention Visualization

The image was generated with the exBERT visualization tool. We can see that the selected word “money” is strongly linked to “deposited” and “bank”. Thanks to this context, it is easier for the model to disambiguate the word “bank”.

KeyBERT, presented below, relies on a model derived from BERT called Sentence-BERT, which makes it easy to compare sentences semantically.

If you want to find out more about the open-source, pre-trained models available, you can visit the Hugging Face Hub. It specializes in storing and sharing NLP datasets and models, and more recently audio and image ones as well.

Keyword extraction with KeyBERT

So, how can we use transformers to extract relevant keywords from a text? There are several projects, including KeyBERT (“Minimal keyword extraction with BERT”), an open-source Python library that uses the BERT model.

By default, KeyBERT uses BERT-based sentence-transformers to represent a sentence, rather than a single word, as a vector. This is a very efficient way to compare sentences or groups of words. The principle of the KeyBERT algorithm is quite simple. Given a text:

- The code will extract all the unique words from the text. In this step, stopwords are often eliminated.

- The embeddings of the entire text and of each word are calculated.

- We can then calculate the semantic distance between the vectors coming from the words and the vector which represents the text in its entirety.

Once all of the distances have been calculated, we can extract the words that are closely related to the overall meaning of the text: these will be the keywords selected by the algorithm.
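The three steps above can be sketched as follows. This rests on assumptions that differ from the real library. `STOPWORDS`, `TOY_VECTORS` and `embed` are hypothetical stand-ins for a real stopword list and for Sentence-BERT. Here, the text embedding is simply the mean of the word vectors, whereas KeyBERT embeds the whole document with the model. Candidates are also restricted to single words known to the toy vocabulary. This is not KeyBERT’s implementation; with the actual library, the equivalent call is `KeyBERT().extract_keywords(doc)`.

```python
import math

# Hypothetical stopword list and toy 3-dimensional word vectors, standing in
# for a real stopword list and a real embedding model such as Sentence-BERT.
STOPWORDS = {"the", "and", "his", "in", "one", "with", "on"}
TOY_VECTORS = {
    "coltrane": [0.9, 0.8, 0.1],
    "saxophone": [0.8, 0.9, 0.0],
    "quartet": [0.7, 0.7, 0.2],
    "album": [0.5, 0.6, 0.3],
    "recorded": [0.2, 0.3, 0.6],
    "night": [0.0, 0.1, 0.9],
}


def embed(words: list[str]) -> list[float]:
    """Toy embedding: mean of the vectors of the words the vocabulary knows."""
    known = [TOY_VECTORS[w] for w in words if w in TOY_VECTORS]
    return [sum(column) / len(known) for column in zip(*known)]


def cosine(u: list[float], v: list[float]) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def extract_keywords(text: str, top_n: int = 3) -> list[tuple[str, float]]:
    tokens = [t.strip(".,").lower() for t in text.split()]
    # Step 1: unique candidate words, stopwords removed (and, because the
    # vectors are toys, only words the toy vocabulary can embed).
    candidates = sorted({t for t in tokens if t not in STOPWORDS and t in TOY_VECTORS})
    # Step 2: embed the whole text and each candidate.
    text_vec = embed(tokens)
    # Step 3: rank candidates by cosine similarity with the text embedding.
    scored = [(c, cosine(embed([c]), text_vec)) for c in candidates]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:top_n]


text = "Coltrane and his quartet recorded the album in one night, with Coltrane on saxophone."
for keyword, score in extract_keywords(text):
    print(f"{keyword}: {score:.3f}")
```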

Moreover, KeyBERT lets you choose the length of the keyphrases: single words, pairs or even triples of words. It also implements the “Maximal Marginal Relevance” (MMR) method to increase the diversity of the results. This helps you avoid repeating a term that may be relevant to the content of the document but appears too often in the results, for example: “jazz concert”, “jazz music”, “jazz style”, etc.
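The MMR idea can be sketched as a greedy selection. The first keyword is the most relevant candidate. Each following pick maximizes relevance to the document minus similarity to the keywords already chosen, weighted by a `diversity` parameter. This follows the common MMR formulation. KeyBERT exposes the same trade-off through the `use_mmr=True` and `diversity` arguments of `extract_keywords`, but the code below is not the library’s implementation. The vectors are hypothetical toys.

```python
import math


def cosine(u: list[float], v: list[float]) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def mmr(doc_vec, cand_vecs, candidates, top_n=3, diversity=0.5):
    """Maximal Marginal Relevance selection.

    Each step picks the candidate maximizing
        (1 - diversity) * sim(candidate, document)
        - diversity * max sim(candidate, already selected keyword).
    diversity=0 ranks by relevance only; higher values favour variety.
    """
    relevance = [cosine(v, doc_vec) for v in cand_vecs]
    remaining = list(range(len(candidates)))
    selected = [max(remaining, key=lambda i: relevance[i])]  # most relevant first
    remaining.remove(selected[0])
    while remaining and len(selected) < top_n:
        def score(i: int) -> float:
            redundancy = max(cosine(cand_vecs[i], cand_vecs[j]) for j in selected)
            return (1 - diversity) * relevance[i] - diversity * redundancy
        best = max(remaining, key=score)
        selected.append(best)
        remaining.remove(best)
    return [candidates[i] for i in selected]


# Hypothetical toy vectors for a document and four candidate keyphrases.
doc_vec = [1.0, 0.0, 0.0]
candidates = ["jazz concert", "jazz music", "jazz style", "tenor saxophone"]
cand_vecs = [[0.95, 0.30, 0.0], [0.90, 0.40, 0.0], [0.85, 0.50, 0.0], [0.80, 0.0, 0.60]]

print(mmr(doc_vec, cand_vecs, candidates, diversity=0.0))
print(mmr(doc_vec, cand_vecs, candidates, diversity=0.7))
```

With `diversity=0.0`, the three “jazz …” phrases win on relevance alone. With a higher value, the less redundant “tenor saxophone” is promoted.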

Finally, KeyBERT can also use embedding models from other NLP libraries, such as spaCy or Flair.

As in the previous article, here is an example of the keywords extracted by KeyBERT from the same article about John Coltrane’s album, Impressions:

“coltrane impressions”, “music quartet”, “jazz treasure”, “john concert”, “pearl sequence”, “graz repertoire”, “tenor saxophones”

Evaluation methods

In this article and the previous one, we presented several methods used to extract keywords from a document: RAKE, Yake, TextRank and KeyBERT. However, it is important to be able to choose the right one according to your context.

It is therefore useful to look at evaluation methods in order to compare the results of one method with another. Using (document, keywords) data where the keywords have been defined and validated by humans, it is possible to compare the results of a model with this “ground truth”. Metrics often used in classification problems, such as precision, recall and f1-score, can be applied here. We build a confusion matrix by checking whether each keyword extracted by the model is indeed in the list of relevant keywords.

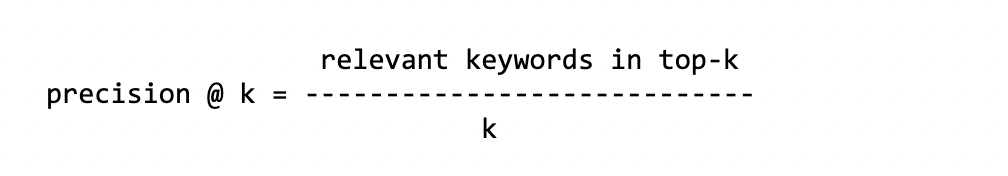

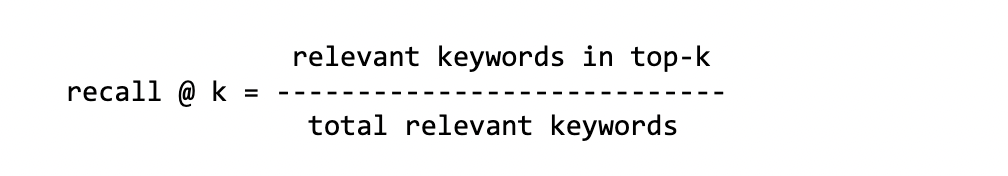

As models can extract a whole series of keywords, we often compare the first k keywords with the ground truth. Since the results can vary with the number of keywords compared, we evaluate the model several times for different values of k. These metrics carry an @k suffix. For example, Precision@k (P@k) means “the precision computed on the top-k keywords”.


Precision

This is the number of correct keywords in the top-k divided by k, the number of predicted keywords considered.

Recall

This is the number of correct keywords in the top-k divided by the total number of ground truth keywords.

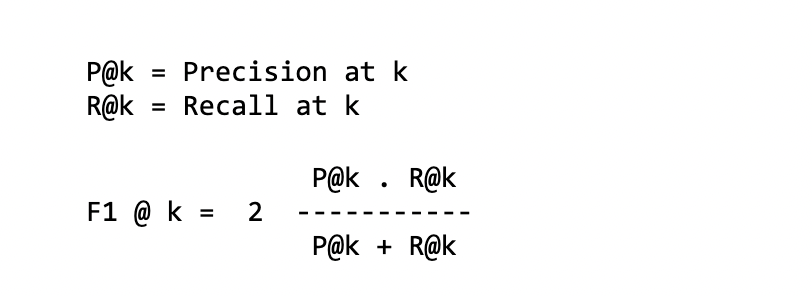

f1-score

It combines the two previous metrics: it is the harmonic mean of precision and recall, where R@k denotes recall@k: $F_1@k = \frac{2 \cdot P@k \cdot R@k}{P@k + R@k}$
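These three metrics can be sketched in a few lines of Python. The predicted keywords and the ground truth below are made up for illustration. This sketch divides P@k by k even when the model returns fewer than k keywords, which is one common convention.

```python
def precision_at_k(predicted: list[str], relevant: set[str], k: int) -> float:
    """Share of the top-k predicted keywords that appear in the ground truth."""
    hits = sum(1 for kw in predicted[:k] if kw in relevant)
    return hits / k


def recall_at_k(predicted: list[str], relevant: set[str], k: int) -> float:
    """Share of the ground-truth keywords found in the top-k predictions."""
    hits = sum(1 for kw in predicted[:k] if kw in relevant)
    return hits / len(relevant)


def f1_at_k(predicted: list[str], relevant: set[str], k: int) -> float:
    """Harmonic mean of P@k and R@k (0 when both are 0)."""
    p = precision_at_k(predicted, relevant, k)
    r = recall_at_k(predicted, relevant, k)
    return 0.0 if p + r == 0 else 2 * p * r / (p + r)


# Made-up example: ranked model output and a human-validated ground truth.
predicted = ["jazz", "concert", "music", "saxophone", "radio"]
relevant = {"jazz", "music", "saxophone", "coltrane"}
for metric in (precision_at_k, recall_at_k, f1_at_k):
    print(metric.__name__, round(metric(predicted, relevant, k=5), 3))
```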

MAP@k

Mean Average Precision

The first step in calculating the MAP is to calculate the Average Precision (AP). We take the keywords found by the model and calculate P@i at each rank i where a relevant keyword appears. The AP is the average of these values. As an example, here is how to calculate AP@5 with 3 relevant keywords out of the 5 proposed:

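| Rank | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| Keyword | jazz | concert | music | saxophone | radio |
| Relevant | 1 | 0 | 1 | 1 | 0 |
| P@k | 1/1 | | 2/3 | 3/4 | |

$AP@5 = \frac{1}{3}\left(1 + \frac{2}{3} + \frac{3}{4}\right) \approx 0.81$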

Note that the position of the relevant keywords matters. If the three relevant keywords were ranked at positions 3 to 5, the AP would drop to about 0.48. If they were ranked at positions 1 to 3, it would reach 1.

Finally, the MAP is the mean of the APs computed over all the (document, keywords) pairs of the dataset.
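AP and MAP can be sketched as follows, following the worked example above. AP here averages P@i over the ranks of the relevant keywords actually retrieved. Be aware that some definitions divide instead by the number of ground-truth keywords, or by min(k, number of ground-truth keywords), which gives different values. Relevance is given as hypothetical 0/1 flags in rank order.

```python
def average_precision_at_k(relevance: list[int], k: int) -> float:
    """AP@k as in the example above: the mean of P@i over the ranks i <= k
    where a relevant keyword appears. `relevance` holds 0/1 flags in rank order."""
    hits = 0
    precisions = []
    for i, is_relevant in enumerate(relevance[:k], start=1):
        if is_relevant:
            hits += 1
            precisions.append(hits / i)  # P@i at this relevant rank
    return sum(precisions) / len(precisions) if precisions else 0.0


def mean_average_precision_at_k(all_relevance: list[list[int]], k: int) -> float:
    """MAP@k: the mean of AP@k over all (document, keywords) pairs."""
    return sum(average_precision_at_k(r, k) for r in all_relevance) / len(all_relevance)


print(round(average_precision_at_k([1, 0, 1, 1, 0], k=5), 3))  # 0.806
print(round(mean_average_precision_at_k([[1, 0, 1, 1, 0], [0, 0, 1, 1, 1]], k=5), 3))
```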

Note that these metrics are also widely used to evaluate ranking models and recommender systems.

One small criticism of some of these metrics concerns the number of relevant ground truth keywords, which can vary per dataset and per document.

- For recall@k, the score can depend heavily on the number of relevant ground truth keywords. Suppose a document has 20 relevant keywords: recall@10 compares the first 10 predicted keywords with this set of 20. Even in the best case, where all the keywords proposed by the model are relevant, the recall score will not exceed 0.5.

- Conversely, if the number of relevant ground truth keywords is very low compared with the number proposed by the model, you will not be able to obtain good precision@k scores. With five relevant ground truth keywords, precision@10 will at best reach 0.5.
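Both ceilings follow from the same arithmetic: at most min(k, number of ground-truth keywords) of the top-k predictions can be correct. Here is a small sketch. The function name is hypothetical.

```python
def best_case_scores(k: int, n_truth: int) -> tuple[float, float]:
    """Highest P@k and R@k reachable for one document, assuming the model's
    top-k contains as many ground-truth keywords as possible."""
    max_hits = min(k, n_truth)
    return max_hits / k, max_hits / n_truth


print(best_case_scores(10, 20))  # (1.0, 0.5): recall@10 capped at 0.5
print(best_case_scores(10, 5))   # (0.5, 1.0): precision@10 capped at 0.5
```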

Whatever metrics are used to evaluate the results, you need a (text, keywords) dataset where the keywords have been defined beforehand by people: the authors of the texts, readers, editors, etc. There are several such datasets, mainly in English and on different subjects: scientific articles, abstracts, news, IT, etc. For example, the KeywordExtractor-Datasets repository on GitHub lists about twenty datasets.

Benchmarks

Beyond this presentation of the various methods of evaluation, I’d also like to provide you with a “digest” of some research papers that propose benchmarks.

The first one is by the researchers who designed the Yake! method, in the paper “YAKE! Keyword extraction from single documents using multiple local features”. They evaluated their method on about twenty different datasets, the best-known being PubMed, WikiNews, SemEval2010 and KDD. They used most of the metrics described above: P@k, recall@k, etc. Yake! is compared with about ten other methods, including Rake and TextRank. In terms of precision and recall across all the datasets, the Yake! method is either better than or very close to the state-of-the-art methods. For instance, for F1@10:

| Dataset | Yake! | Best other method |
|---|---|---|
| WikiNews | 0.450 | 0.337 |
| KDD | 0.156 | 0.115 |
| Inspec | 0.316 | 0.378 |

On some datasets, Yake! is up to 50-100% better than the other methods. On the others, it remains close to the leading method.

In another, more recent paper, “TNT-KID: Transformer-based neural tagger for keyword identification”, the authors also compare traditional and Transformer-based methods. The Transformer-based extraction methods perform better. Be careful, though: these are so-called “supervised” methods. Rake, KeyBERT and Yake, by contrast, do not need to be trained on a body of data to produce results.

Finally, let us take a look at two other benchmarks:

- Simple Unsupervised Keyphrase Extraction using Sentence Embeddings uses word embeddings and performs well in comparison to TextRank on datasets such as Inspec and DUC.

- A blog post that compares 7 algorithms (in Python): Keyword Extraction — A Benchmark of 7 Algorithms in Python. The author compares performance in terms of computation time across the KeyBERT, RAKE, Yake methods as well as the number of relevant words that each method can extract.

Conclusion

As you have seen throughout these two articles, there are many very different ways to extract relevant keywords from a text. Looking at the different benchmarks, you will have noticed that no method clearly outperforms all the others in terms of metrics: it depends on the text and the context. Moreover, as the author of the blog post on the 7 extraction algorithms pointed out, processing time can also be a deciding factor when dealing with long texts.

Processing time will certainly be a factor when it comes to putting an algorithm into production and processing hundreds of thousands of documents. In the case of KeyBERT, you should consider an infrastructure with GPUs to drastically reduce processing time. This method relies on Transformers, a Deep Learning architecture that requires a lot of computation.

If I had to make a choice, it would surely be between KeyBERT and Yake: the former for its Transformer-based, semantic approach, the latter for its efficiency and speed of execution.