At Oncrawl’s R&D department, we are increasingly looking to enhance the semantic content of your Web pages. Using Machine Learning models for natural language processing (NLP), we can compare the content of your pages in detail, create automatic summaries, complete or correct the tags of your articles, optimize the content according to your Google Search Console data, etc.

In a previous article, we talked about extracting text content from HTML pages. This time, we would like to talk about the automatic extraction of keywords from a text. This topic will be divided into two posts:

- the first one will cover the context and the so-called “traditional” methods with several concrete examples

- the second one, coming soon, will deal with more semantic approaches based on transformers, and with evaluation methods for benchmarking these different approaches

Context

Beyond a title or an abstract, what better way to identify the content of a text, a scientific paper or a web page than a few keywords? It is a simple and very effective way to identify the topic and concepts of a much longer text. It can also be a good way to categorize a series of texts: identify them and group them by keywords. Sites that offer scientific articles such as PubMed or arxiv.org can offer categories and recommendations based on these keywords.

Keywords are also very useful for indexing very large documents and for information retrieval, a field of expertise well known by search engines 😉

The lack of keywords is a recurrent problem in the automatic categorization of scientific articles [1]: many articles do not have assigned keywords. Therefore, methods must be found to automatically extract concepts and keywords from a text. To evaluate the relevance of an automatically extracted set of keywords, evaluations often compare the keywords extracted by an algorithm with the keywords chosen by several humans for the same documents.
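As a rough illustration of such a comparison, here is a minimal sketch that computes exact-match precision, recall and F1 between two keyword lists. The `keyword_prf` helper and both lists are hypothetical; real benchmarks may also apply stemming or partial matching.

```python
def keyword_prf(extracted, reference):
    """Exact-match precision, recall and F1 between two keyword lists."""
    # Normalize case and whitespace so "John Coltrane" matches "john coltrane".
    extracted_set = {k.strip().lower() for k in extracted}
    reference_set = {k.strip().lower() for k in reference}
    matches = extracted_set & reference_set
    precision = len(matches) / len(extracted_set) if extracted_set else 0.0
    recall = len(matches) / len(reference_set) if reference_set else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Hypothetical lists, for illustration only.
algorithm_keywords = ["John Coltrane", "Graz", "novembre", "tenor saxophone"]
human_keywords = ["john coltrane", "graz", "jazz", "tenor saxophone", "quintet"]
precision, recall, f1 = keyword_prf(algorithm_keywords, human_keywords)
```

Precision tells us how many of the extracted keywords were also chosen by humans, and recall how many of the human keywords the algorithm found.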

As you can imagine, this is a problem shared by search engines when categorizing web pages. A better understanding of automated keyword extraction helps you understand why a web page ranks for a given keyword. It can also reveal semantic gaps that prevent the page from ranking well for the keyword you have targeted.

There are obviously several ways to extract keywords from a text or a paragraph. In this first post, we will describe the so-called “classic” approaches.


Constraints

Nevertheless, the choice of an algorithm is subject to some limitations and prerequisites:

- The method must be able to extract keywords from a single document. Some methods require a complete corpus, i.e. several hundred or even thousands of documents. Although these methods can be used by search engines, they will not be useful for a single document.

- We are in an unsupervised Machine Learning setting. We do not have an annotated dataset in French, English or other languages at hand. In other words, we do not have thousands of documents with keywords already extracted.

- The method must be independent of the domain / lexical field of the document. We want to be able to extract keywords from any type of document: news articles, web pages, etc. Note that the datasets that already have keywords extracted for each document are often domain-specific: medicine, computer science, etc.

- Some methods are based on POS-tagging models, i.e. the ability of an NLP model to identify words in a sentence by their grammatical type: a verb, a noun, a determiner. Knowing that a candidate keyword is a noun rather than a determiner clearly helps when judging its importance. However, depending on the language, POS-tagging models are sometimes of very uneven quality.

About traditional methods

We differentiate between the so-called “traditional” methods and the more recent ones that use NLP (Natural Language Processing) techniques such as word embeddings and contextual embeddings. The latter will be covered in a future post. But first, let’s go back to the classical approaches. We distinguish two of them:

- the statistics approach

- the graph approach

The statistics approach will mainly rely on word frequencies and their co-occurrence. We start with simple hypotheses to build heuristics and extract important words: a very frequent word, a series of consecutive words that appear several times, etc. The graph-based methods will build a graph where each node can correspond to a word, group of words or sentence. Then each arc can represent the probability (or frequency) of observing these words together.
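To give an idea of the kind of heuristics the statistical approach starts from, here is a minimal sketch that counts frequent words and repeated pairs of consecutive words. The helper names, the toy text and the tiny stopword list are assumptions made for illustration; this is not one of the methods presented below.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and return its words (sentence limits are ignored)."""
    return re.findall(r"[\w']+", text.lower())


def frequent_words(tokens, stopwords, top=3):
    """Most frequent words once stopwords are removed."""
    counts = Counter(t for t in tokens if t not in stopwords)
    return counts.most_common(top)


def repeated_bigrams(tokens, stopwords, min_count=2):
    """Pairs of consecutive non-stopwords that occur at least min_count times."""
    pairs = Counter(
        (a, b)
        for a, b in zip(tokens, tokens[1:])
        if a not in stopwords and b not in stopwords
    )
    return {pair: n for pair, n in pairs.items() if n >= min_count}


# Toy text and stopword list, for illustration only.
sample = (
    "John Coltrane plays the tenor saxophone. "
    "The tenor saxophone of John Coltrane fills the room."
)
toy_stopwords = {"the", "of"}
tokens = tokenize(sample)
top_words = frequent_words(tokens, toy_stopwords)
bigrams = repeated_bigrams(tokens, toy_stopwords)
```

On this toy text, the only pairs that come back are the two that appear twice, which is exactly the "series of consecutive words that appear several times" heuristic.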

Here are a few methods.

All examples given use text taken from this web page: Jazz au Trésor : John Coltrane – Impressions Graz 1962.

Statistics approach

We will introduce two methods: RAKE and YAKE. In an SEO context, you may have heard of the TF-IDF method, but as it requires a corpus of documents, we will not deal with it here.

RAKE

RAKE stands for Rapid Automatic Keyword Extraction. There are several implementations of this method in Python, including rake-nltk. The score of each keyword, also called a keyphrase because it can contain several words, is based on two elements: the frequency of the words and the sum of their co-occurrences. Building the candidate keyphrases is very simple:

- cut the text into sentences

- cut each sentence into keyphrases

In the following sentence, we will take all the word groups separated by punctuation elements or stopwords:

Just before, Coltrane was leading a quintet, with Eric Dolphy at his side and Reggie Workman on double bass.

This could result in the following keyphrases:

"Just before", "Coltrane", "leading", "quintet", "Eric Dolphy", "side", "Reggie Workman", "double bass".

Note that stopwords are a series of very frequent words such as “the”, “in”, “and” or “it”. As classical methods are often based on word frequencies, it is important to choose your stopwords carefully. Most of the time, we do not want words like “to”, “the” or “of” in our keyphrase proposals. Indeed, these stopwords are not associated with a specific lexical field and are therefore much less relevant than words such as “jazz” or “saxophone”.
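To make the splitting step concrete, here is a minimal sketch in plain Python. It is not the rake-nltk implementation: the `candidate_keyphrases` helper and the tiny `TOY_STOPWORDS` list are assumptions, hand-picked for the example sentence.

```python
import re

# Hand-picked stopwords for this one sentence (an assumption, not a real list).
TOY_STOPWORDS = {"was", "a", "with", "at", "his", "and", "on"}


def candidate_keyphrases(text, stopwords):
    """Split text into word groups delimited by punctuation or stopwords."""
    candidates = []
    # First cut on punctuation, then cut each fragment on stopwords.
    for fragment in re.split(r"[.,;:!?()]", text):
        current = []
        for word in fragment.split():
            if word.lower() in stopwords:
                if current:
                    candidates.append(" ".join(current))
                current = []
            else:
                current.append(word)
        if current:
            candidates.append(" ".join(current))
    return candidates


sentence = (
    "Just before, Coltrane was leading a quintet, with Eric Dolphy "
    "at his side and Reggie Workman on double bass."
)
print(candidate_keyphrases(sentence, TOY_STOPWORDS))
```

Adding or removing a single word in the stopword list changes where the sentence is cut, which is why the choice of stopwords matters so much for this method.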

Once we have isolated several candidate keyphrases, we give them a score according to the frequency of the words and the co-occurrences. The higher the score, the more relevant the keyphrases are supposed to be.
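The scoring step can be sketched as follows, assuming the degree-to-frequency ratio described in the RAKE paper [2]: each word gets its degree (co-occurrences with the words of the candidates it appears in, itself included) divided by its frequency, and a keyphrase sums the scores of its words. The `rake_scores` helper and the candidates are hypothetical, and a given library may use a different metric.

```python
from collections import defaultdict


def rake_scores(candidates):
    """Score candidate keyphrases with a degree / frequency word score."""
    frequency = defaultdict(int)  # how many times each word appears
    degree = defaultdict(int)     # co-occurrences of each word, itself included
    tokenized = [phrase.lower().split() for phrase in candidates]
    for words in tokenized:
        for word in words:
            frequency[word] += 1
            degree[word] += len(words)
    word_score = {w: degree[w] / frequency[w] for w in frequency}
    # A keyphrase's score is the sum of the scores of its words.
    return {" ".join(words): sum(word_score[w] for w in words) for words in tokenized}


# Toy candidates, for illustration only.
toy_candidates = [
    "tenor saxophone",
    "saxophone",
    "john coltrane tenor saxophone",
    "quintet",
]
scores = rake_scores(toy_candidates)
ranked = sorted(scores, key=scores.get, reverse=True)
```

In this toy example the four-word candidate comes first, simply because it sums four word scores and each of its words co-occurs with three others.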

Let’s try quickly with the text from the article about John Coltrane.

    # Python snippet for RAKE
    from rake_nltk import Rake

    # Assumes the article is already loaded in the `text` variable, that
    # FRENCH_STOPWORDS is a list of French stopwords and that TOP is the
    # number of keyphrases to keep.
    rake = Rake(stopwords=FRENCH_STOPWORDS, max_length=4)
    rake.extract_keywords_from_text(text)
    rake_keyphrases = rake.get_ranked_phrases_with_scores()[:TOP]

Here are the first 5 keyphrases:

“austrian national public radio”, “lyrical peaks more heavenly”, “graz has two peculiarities”, “john coltrane tenor saxophone”, “only recorded version”

There are a few drawbacks to this method. The first one is the importance of the choice of stopwords, because they are used to split a sentence into candidate keyphrases. The second is that long keyphrases often get a higher score, because of the co-occurrence of the many words they contain. To limit the length of the keyphrases, we set max_length=4.

YAKE

YAKE stands for Yet Another Keyword Extractor. This method is based on the article YAKE! Keyword extraction from single documents using multiple local features, which dates from 2020. It is more recent than RAKE, and its authors provide a Python implementation available on GitHub.

As with RAKE, YAKE relies on word frequency and co-occurrence. The authors also add some interesting heuristics:

- we distinguish between words in lower case and words in upper case (either the first letter or the whole word). We assume here that words that start with a capital letter (except at the beginning of a sentence) are more relevant than others: names of people, cities, countries, brands. The same principle applies to words written entirely in capitals.

- the score of each candidate keyphrase depends on its position in the text. Candidate keyphrases that appear at the beginning of the text get a better score than those that appear at the end. For example, news articles often mention important concepts at the beginning of the article.
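To illustrate these two heuristics, here is a deliberately simplified sketch. It does not reproduce YAKE's actual formulas: the `casing_and_position` helper is hypothetical and only computes, for each word, the share of its occurrences that are capitalized away from a sentence start, and the median index of the sentences where it appears.

```python
import re
from statistics import median


def casing_and_position(text):
    """For each word, return (share of capitalized uses, median sentence index)."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    capitalized = {}
    total = {}
    positions = {}
    for index, sentence in enumerate(sentences):
        words = re.findall(r"\w+", sentence)
        for rank, word in enumerate(words):
            key = word.lower()
            total[key] = total.get(key, 0) + 1
            positions.setdefault(key, []).append(index)
            # A capital at the start of a sentence carries no information.
            if rank > 0 and word[0].isupper():
                capitalized[key] = capitalized.get(key, 0) + 1
    return {
        key: (capitalized.get(key, 0) / total[key], median(positions[key]))
        for key in total
    }


# Toy text, for illustration only.
sample = (
    "The concert was recorded in Graz. "
    "The quintet played for an hour. "
    "Critics still praise the Graz concert."
)
features = casing_and_position(sample)
```

Here "graz" is always capitalized inside a sentence while "the" and "critics" only carry a capital at a sentence start, so only "graz" gets a non-zero casing share.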

    # Python snippet for YAKE
    from yake import KeywordExtractor as Yake

    # Assumes `text` holds the article and FRENCH_STOPWORDS is a list of
    # French stopwords.
    yake = Yake(lan="fr", stopwords=FRENCH_STOPWORDS)
    yake_keyphrases = yake.extract_keywords(text)

As with RAKE, here are the top 5 results:

“Treasure Jazz”, “John Coltrane”, “Impressions Graz”, “Graz”, “Coltrane”

Despite some duplications of certain words in some keyphrases, this method seems quite interesting.
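One possible way to reduce these duplications is a small post-processing step, sketched below. This is an assumption on our side and not part of YAKE: the hypothetical `drop_nested_keyphrases` helper drops a keyphrase when all of its words already appear in a higher-ranked one. The yake package also exposes its own deduplication threshold (the `dedupLim` parameter), which we did not tune here.

```python
def drop_nested_keyphrases(ranked_keyphrases):
    """Keep a keyphrase only if its words are not all in a higher-ranked one."""
    kept = []
    for phrase in ranked_keyphrases:
        words = set(phrase.lower().split())
        # Skip the phrase if a phrase kept earlier already contains its words.
        if any(words <= set(other.lower().split()) for other in kept):
            continue
        kept.append(phrase.strip())
    return kept


# The five keyphrases listed above, in ranked order.
yake_top5 = ["Treasure Jazz", "John Coltrane", "Impressions Graz", "Graz", "Coltrane"]
print(drop_nested_keyphrases(yake_top5))
```

The trade-off is that a shorter keyphrase such as “Coltrane” disappears even though it might be the better label on its own.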

Graph approach

This type of approach is not far from the statistical approach, in the sense that we will also calculate word co-occurrences. The Rank suffix in method names such as TextRank refers to the PageRank algorithm, which computes the popularity of each page from its incoming and outgoing links.


TextRank

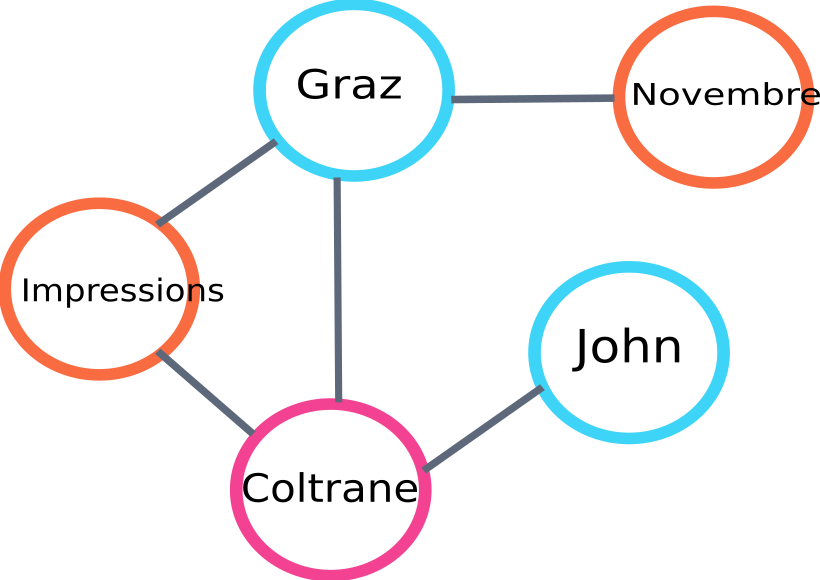

This algorithm comes from the paper TextRank: Bringing Order into Texts from 2004 and is based on the same principles as the PageRank algorithm. However, instead of building a graph with pages and links, we will build a graph with words. Each word will be linked with other words according to their co-occurrence.
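The principle can be sketched in a few lines of plain Python: link words that appear next to each other, then run a PageRank-style iteration on the resulting graph. This is a simplified illustration, not the pytextrank implementation; the helper names, the window size, the damping factor and the toy word list are all assumptions.

```python
from collections import defaultdict


def cooccurrence_graph(words, window=2):
    """Link each word to the words that follow it within the window."""
    graph = defaultdict(set)
    for i, word in enumerate(words):
        for other in words[i + 1 : i + window]:
            if other != word:
                graph[word].add(other)
                graph[other].add(word)
    return graph


def pagerank(graph, damping=0.85, iterations=50):
    """Plain PageRank by power iteration on an undirected, unweighted graph."""
    rank = {node: 1.0 / len(graph) for node in graph}
    for _ in range(iterations):
        rank = {
            node: (1 - damping) / len(graph)
            + damping * sum(rank[n] / len(graph[n]) for n in graph[node])
            for node in graph
        }
    return rank


# Toy list of already-filtered words, for illustration only.
words = ["coltrane", "quintet", "coltrane", "saxophone", "coltrane", "graz", "concert"]
ranks = pagerank(cooccurrence_graph(words))
best = max(ranks, key=ranks.get)
```

In this toy graph, "coltrane" is connected to three other words and ends up with the highest rank, just as a page with many incoming links would in PageRank.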

There are several implementations in Python. In this article, we will use pytextrank. This breaks one of our constraints, the one about POS-tagging: when building the graph, we do not include all words as nodes. Only verbs and nouns are taken into account. Where the previous methods use stopwords to filter out irrelevant candidates, the TextRank algorithm uses the grammatical type of words.

Here is an example of part of the graph that the algorithm will build:

[Figure: TextRank graph example]

Here is an example of use in Python. Note that this implementation uses the pipeline mechanism of the spaCy library. It is this library that is able to do POS-tagging.

    # Python snippet for pytextrank
    import spacy
    import pytextrank  # registers the "textrank" pipeline component

    # Load a French model
    nlp = spacy.load("fr_core_news_sm")

    # Add pytextrank to the pipeline
    nlp.add_pipe("textrank")

    doc = nlp(text)
    textrank_keyphrases = doc._.phrases

Here are the top 5 results:

“Copenhague”, “novembre”, “Impressions Graz”, “Graz”, “John Coltrane”

In addition to extracting keyphrases, TextRank also extracts sentences. This can be very useful for making so-called “extractive summaries” – this aspect will not be covered in this article.

Conclusions

Among the three methods tested here, the last two seem to us to be quite relevant to the subject of the text. In order to better compare these approaches, we would obviously have to evaluate these different models on a larger number of examples. There are indeed metrics to measure the relevance of these keyword extraction models.

The lists of keywords produced by these so-called traditional models provide an excellent basis for checking that your pages are well targeted. In addition, they give a first approximation of how a search engine might understand and classify the content.

On the other hand, other methods using pre-trained NLP models like BERT can also be used to extract concepts from a document. Unlike the so-called classical approaches, these methods usually capture semantics better.

The different evaluation methods, contextual embeddings and transformers will be presented in a second article to come on the subject!

Here is the list of keywords extracted from this article with one of the three methods mentioned:

“methods”, “keywords”, “keyphrases”, “text”, “extracted keywords”, “Natural Language Processing”

Bibliographical references

- [1] Improved Automatic Keyword Extraction Given More Linguistic Knowledge, Anette Hulth, 2003

- [2] Automatic Keyword Extraction from Individual Documents, Stuart Rose et al., 2010

- [3] YAKE! Keyword extraction from single documents using multiple local features, Ricardo Campos et al., 2020

- [4] TextRank: Bringing Order into Texts, Rada Mihalcea et al., 2004