JavaScript SEO has become one of the most important aspects of modern technical SEO and answer engine optimization (AEO). If you have ever disabled JavaScript in your browser and been met with the message “Please enable JavaScript to access this webpage”, you have seen first-hand how much of a site’s content can go unseen.

The message itself isn’t the problem, but it’s important to understand the SEO implications it can have.

JavaScript, a programming language known for creating interactive websites, is powerful, but if left unchecked it can create serious SEO challenges that silently and negatively affect a site’s indexation potential. This is a problem when:

- Important metadata (like titles, descriptions, or canonicals) is not “seen” because it is injected with JavaScript.

- URLs can’t be discovered without JavaScript rendering.

- Heavy JavaScript files slow load time and limit link discovery.

This is why JavaScript SEO is essential: it ensures JavaScript-heavy sites remain crawlable, discoverable, and indexable.
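One rough way to check the first risk in the list above is to inspect the HTML exactly as it arrives, before any script runs. The sketch below uses Python's standard `html.parser`; the `csr_shell` markup is a made-up example of a client-side rendered page, and the helper names are illustrative rather than part of any tool mentioned here.

```python
from html.parser import HTMLParser


class SeoTagParser(HTMLParser):
    """Collects the title, meta description, and canonical URL from raw HTML."""

    def __init__(self):
        super().__init__()
        self.found = {"title": None, "description": None, "canonical": None}
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.found["description"] = attrs.get("content")
        elif tag == "link" and (attrs.get("rel") or "").lower() == "canonical":
            self.found["canonical"] = attrs.get("href")

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        # Only text inside <title> counts as the page title.
        if self._in_title and data.strip():
            self.found["title"] = data.strip()


def missing_seo_tags(raw_html):
    """Return the names of SEO tags absent from the unrendered HTML."""
    parser = SeoTagParser()
    parser.feed(raw_html)
    return sorted(name for name, value in parser.found.items() if not value)


# Hypothetical response body of a client-side rendered page, before scripts run.
csr_shell = (
    "<html><head><script src='/app.js'></script></head>"
    "<body><div id='root'></div></body></html>"
)
print(missing_seo_tags(csr_shell))  # ['canonical', 'description', 'title']
```

If a tag shows up in this list but is present in the rendered page, it is being injected by JavaScript and is at risk of being missed by bots that do not render.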

An SEO crawler like Oncrawl, which can render JavaScript for performance monitoring, shows exactly how content and links appear to search engines when JavaScript is enabled or disabled.

To complement this, pre-rendering can act as a bridge, transforming your JavaScript content into search engine-friendly content that is accessible and indexable.

These strategies help tackle both traditional SEO challenges and the rising complexity of AI search and AEO. But before we go any further, let’s take a look at what these challenges are.

The SEO and AEO challenges with JavaScript

Search engines need to be able to understand your site’s indexing and crawling directives. With the evolution of AI search, understanding how AI bots “see” your content is critical to ensure your content is discoverable and indexable.

To date, most AI bots do not execute JavaScript. Gemini is the exception because it leverages Google’s rendering stack, but this should be seen as experimental rather than a permanent standard.


CSR and SSR rendering solutions

In terms of rendering methods, there is Client Side Rendering (CSR), where the JavaScript code is executed by the browser. While the process is seamless for users, with the magic happening behind the scenes, it is resource-heavy and costly for Google. The main downside of CSR is that the time spent rendering eats into a site’s crawl budget, which can delay the indexing of important pages.

An alternative to CSR is Server Side Rendering (SSR), where content is rendered at the server level and then served fully rendered.

This approach is particularly relevant for Single Page Applications (SPAs). SPAs are widely used across popular sites to provide a smooth user experience; the page is loaded once and subsequently, content is dynamically updated as users interact with the site. JavaScript presents one of the biggest challenges for SPAs.

SPAs create a fluid user experience, such as adding a product to a cart or expanding a product description, but this also means key content and links are only generated dynamically. For search engines, this makes crawling and indexing more complex: they can miss the content or discover it late, which in turn can lead to a lag in indexing.

Traditional search engines have long acknowledged the need to render JavaScript; however, both CSR and SSR have their cons. While CSR challenges remain relevant, moving to SSR is both expensive and resource-heavy. This is where alternatives like pre-rendering can be extremely useful.

What is pre-rendering and how does it work?

Pre-rendering is essentially rendering in advance: a static HTML version of your page is generated when a bot first requests it, cached, and then served depending on the requesting user agent. This ensures that search engines and other crawlers, which cannot always process JavaScript, receive a fully rendered version of the content.
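To make the "served depending on the requesting user agent" part concrete, here is a minimal sketch of the routing decision. The token list and function names are assumptions for illustration; a real deployment would maintain its own list of crawlers and would usually make this decision in middleware or at the CDN.

```python
# Illustrative substrings only (an assumption, not an official list).
BOT_TOKENS = ("googlebot", "bingbot", "gptbot", "perplexitybot")


def is_crawler(user_agent):
    """Return True when the User-Agent header contains a known bot token."""
    ua = (user_agent or "").lower()
    return any(token in ua for token in BOT_TOKENS)


def choose_response(user_agent, snapshot_html, app_shell_html):
    """Serve the static snapshot to crawlers and the JavaScript app to everyone else."""
    return snapshot_html if is_crawler(user_agent) else app_shell_html


googlebot_ua = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
browser_ua = "Mozilla/5.0 (Windows NT 10.0; Win64; x64) Chrome/120.0 Safari/537.36"

print(choose_response(googlebot_ua, "snapshot", "app shell"))  # snapshot
print(choose_response(browser_ua, "snapshot", "app shell"))    # app shell
```

Matching on the user agent alone is easy to spoof, so this only decides which version to serve; it should not be treated as proof that a request really comes from a given bot.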

In comparison, SSR builds the HTML on-demand for each request at the time of the request. While effective, we’ve already established that SSR is resource-heavy, complex to maintain, and can be expensive to scale. Pre-rendering offers a middle ground that is easier to implement and more cost-effective while still addressing the core SEO challenges associated with JavaScript rendering.

Benefits of pre-rendering include:

- Delivery of the fully rendered HTML snapshots to search engine bots and AI crawlers. This ensures your content is “seen,” crawled, and indexed.

- Prevention of page timeouts, so key content like SEO tags isn’t missed.

- Cost efficiency, making it an effective interim or long-term solution.

- Limited need for significant, ongoing maintenance.

Pre-rendering solutions

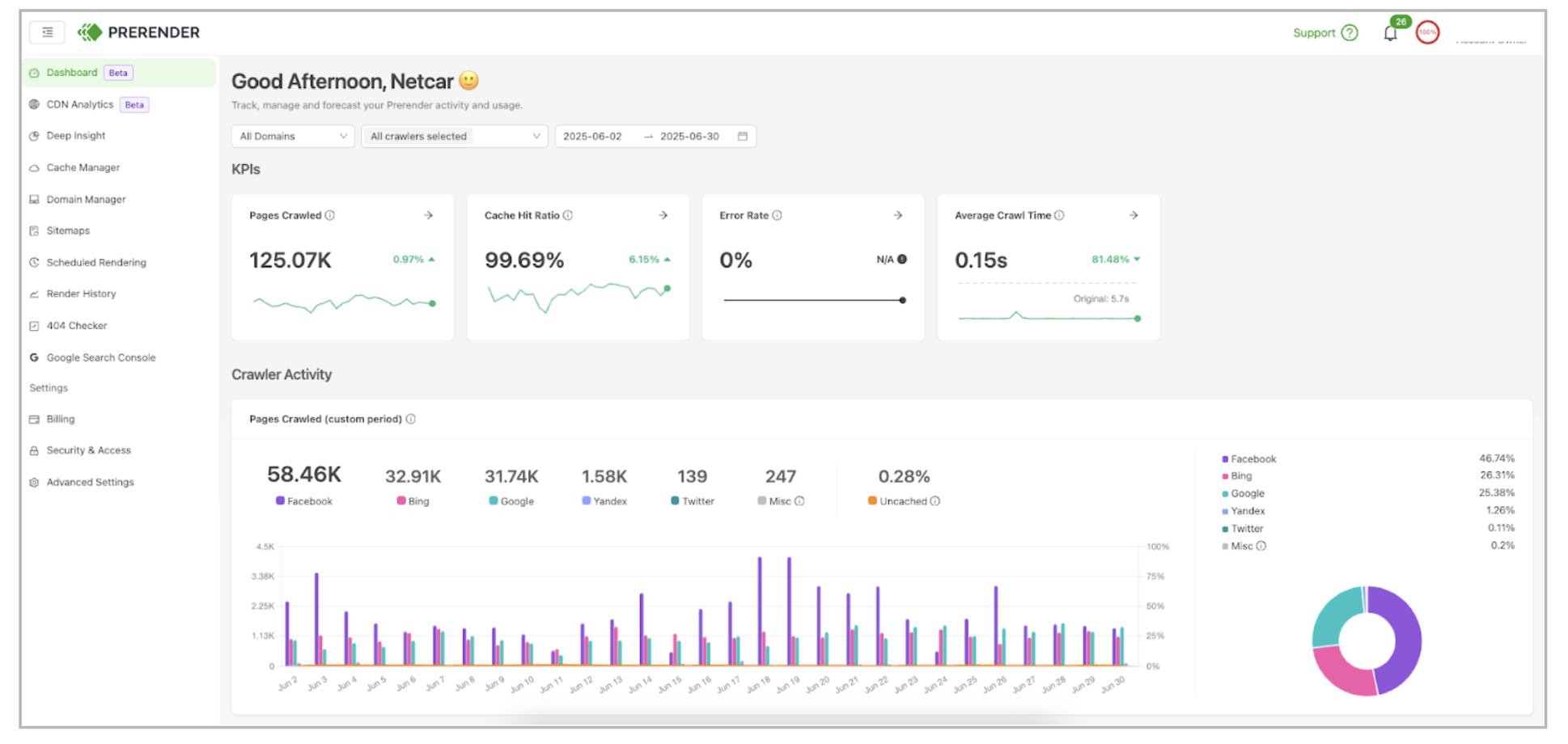

Tools like Prerender.io provide a scalable solution for delivering content that’s both search engine–ready and AI-friendly. By serving cached HTML snapshots of your pages to crawlers, Prerender.io ensures your content is visible, processable, and primed to appear in top Google SERPs and AI search results.

After you integrate your site with Prerender.io, any time a crawler requests a page, it is served the pre-rendered version. If a page isn’t cached yet, the tool renders and stores it so that it can be delivered instantly the next time.
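The render, store, and deliver cycle described above follows a familiar caching pattern. The sketch below is a generic illustration of that pattern and not Prerender.io's actual implementation or API: `SnapshotCache`, `render_page`, and the one-day `max_age_seconds` default are all hypothetical.

```python
import time


class SnapshotCache:
    """Keeps rendered HTML per URL and re-renders once a snapshot is too old."""

    def __init__(self, render, max_age_seconds=86400, clock=time.time):
        self._render = render          # callable that turns a URL into HTML
        self._max_age = max_age_seconds
        self._clock = clock            # injectable so the cache can be tested
        self._store = {}               # url -> (rendered_at, html)

    def get(self, url):
        entry = self._store.get(url)
        if entry is not None and self._clock() - entry[0] < self._max_age:
            return entry[1]            # cache hit: deliver the stored snapshot
        html = self._render(url)       # cache miss or stale: render again
        self._store[url] = (self._clock(), html)
        return html


def render_page(url):
    # Placeholder for a real renderer such as a headless browser.
    return f"<html><body>Rendered {url}</body></html>"


cache = SnapshotCache(render_page)
first = cache.get("/products/shoes")   # rendered and stored
second = cache.get("/products/shoes")  # served from the store
```

The expiry time is the lever for freshness: a shorter value keeps snapshots closer to the live page at the cost of more rendering work.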

You can also monitor what crawlers and AI bots visit your site on Prerender.io’s dashboard. It tracks visits from Googlebot, GPTBot, Perplexity Bot, and more, giving you the insights you need to fine-tune both your SEO and AEO strategies.

Using log analysis to validate and monitor indexing

While Prerender.io is a great tool for making your content easily discoverable both on Google SERPs and in AI search results, pairing it with log analysis strengthens your technical SEO efforts.

Server logs are one of the most reliable sources for understanding how bots behave and interact with your site. Log files can reveal key information such as:

- Bot behavior: which bots are visiting your site and how often.

- Crawl frequency and depth: how deep crawlers actually go to reach content and which sections are prioritized over others.

- Rendering vs indexing signals: log files show the date and time a page was requested, and they can also help you understand whether rendering issues may have prevented a page from being indexed.
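As a small illustration of the first point, the sketch below counts hits per bot from access log lines. It assumes the common "combined" log format; the bot tokens, IP addresses, and sample lines are hypothetical, and the user agent strings are simplified.

```python
import re
from collections import Counter

# Matches the "combined" access log format; adjust if your server logs differently.
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)

# Illustrative tokens only (an assumption, not a complete list).
BOTS = {"googlebot": "Googlebot", "gptbot": "GPTBot", "perplexitybot": "PerplexityBot"}


def bot_name(agent):
    """Map a User-Agent string to a bot label, or None for other traffic."""
    agent = agent.lower()
    for token, name in BOTS.items():
        if token in agent:
            return name
    return None


def hits_per_bot(lines):
    """Count log entries per bot, skipping lines that do not parse."""
    counts = Counter()
    for line in lines:
        match = LOG_PATTERN.match(line)
        if not match:
            continue
        name = bot_name(match.group("agent"))
        if name:
            counts[name] += 1
    return counts


# Hypothetical log lines for illustration.
sample = [
    '192.0.2.10 - - [10/Mar/2025:06:12:01 +0000] "GET /products/shoes HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '192.0.2.10 - - [10/Mar/2025:06:12:09 +0000] "GET /products/boots HTTP/1.1" 200 4980 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '192.0.2.44 - - [10/Mar/2025:07:30:45 +0000] "GET /blog/js-seo HTTP/1.1" 200 8300 "-" "Mozilla/5.0 (compatible; GPTBot/1.0)"',
    '192.0.2.77 - - [10/Mar/2025:08:01:13 +0000] "GET / HTTP/1.1" 200 2048 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) Chrome/120.0 Safari/537.36"',
]
print(hits_per_bot(sample).most_common())  # [('Googlebot', 2), ('GPTBot', 1)]
```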

The right tooling for leveraging your log files is key, especially when using the data to make actionable decisions at scale. This is where Oncrawl’s log analyzer steps in: its reporting offers insights that help you identify and fix your site’s issues.

- Detect crawl anomalies: highlight which bots are crawling heavy JavaScript resources and what the status code and response time are.

- Identify underperforming pages: cross-analysis reports that use crawl and log data enable you to see if important content is missing from rendered pages and if they are crawled less often.

- Measure the impact of pre-rendering: compare the crawl frequency of content before and after pre-render deployment.
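The before-and-after comparison can be reduced to a simple calculation once you have bot hits per day. This is a minimal sketch that assumes you have already aggregated daily counts from your logs; the dates, counts, and function names are made up for illustration.

```python
from datetime import date


def average_daily_hits(daily_hits, start, end):
    """Mean bot hits per day for dates in [start, end); missing days count as zero."""
    days = (end - start).days
    if days <= 0:
        raise ValueError("end must be after start")
    total = sum(count for day, count in daily_hits.items() if start <= day < end)
    return total / days


def crawl_change(daily_hits, window_start, deploy_day, window_end):
    """Return (average before deployment, average from deployment day onward)."""
    before = average_daily_hits(daily_hits, window_start, deploy_day)
    after = average_daily_hits(daily_hits, deploy_day, window_end)
    return before, after


# Hypothetical daily bot hits on one template; pre-rendering deployed on 4 March.
daily_hits = {
    date(2025, 3, 1): 10,
    date(2025, 3, 2): 12,
    date(2025, 3, 3): 8,
    date(2025, 3, 4): 20,
    date(2025, 3, 5): 22,
    date(2025, 3, 6): 18,
}
before, after = crawl_change(
    daily_hits, date(2025, 3, 1), date(2025, 3, 4), date(2025, 3, 7)
)
print(before, after)  # 10.0 20.0
```

In practice you would want windows of several weeks on each side, since crawl activity fluctuates from day to day for reasons unrelated to rendering.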

Once pre-rendering is in place, log analysis becomes indispensable for validating its effectiveness and identifying ongoing issues.


Combining pre-rendering and log analysis for better SEO

How can you identify JavaScript issues and use your log files to investigate and apply a fix? Within your log monitoring reports you might uncover the following:

- Unusual crawl frequency on certain templates.

- Higher response times on JavaScript-heavy pages.

- Googlebot, or any other search or AI bot you monitor, not returning to JavaScript-heavy pages as often.

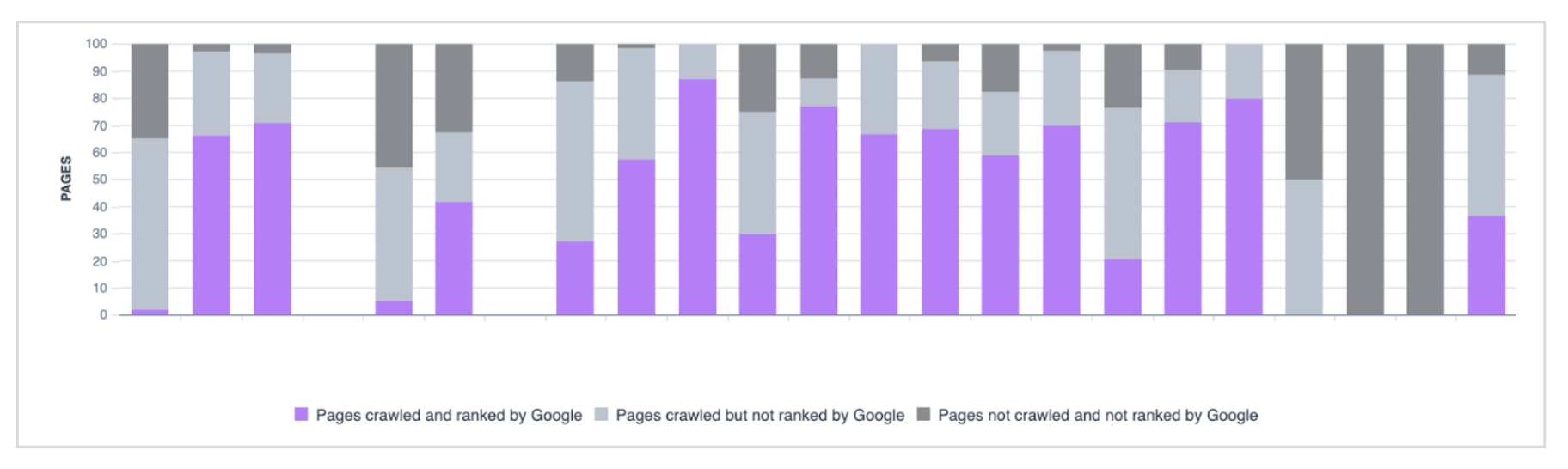

Additionally, by cross-analyzing log data with Oncrawl’s crawl data and Google Search Console metrics, you can:

- Identify pages crawled and ranking.

- Detect pages crawled but not ranking.

- Highlight pages that are not crawled and not ranking.

These insights often reveal hidden internal links or critical content missed due to rendering issues. If those links aren’t rendered, bots can’t see or follow them.
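The three segments above are essentially set operations across your data sources. The sketch below is a generic illustration, not how Oncrawl computes its reports: it assumes you have exported a list of known URLs from a crawl, the URLs bots requested from your logs, and the URLs with rankings from Google Search Console. All names and sample URLs are hypothetical.

```python
def segment_pages(all_pages, crawled_by_bots, ranking):
    """Split known pages into the three crawl/ranking segments."""
    all_pages = set(all_pages)
    crawled = set(crawled_by_bots)
    ranking = set(ranking)
    return {
        "crawled_and_ranking": crawled & ranking,
        "crawled_not_ranking": crawled - ranking,
        "not_crawled_not_ranking": all_pages - crawled - ranking,
    }


# Hypothetical exports from a crawl, server logs, and Google Search Console.
segments = segment_pages(
    all_pages={"/", "/shoes", "/boots", "/sale"},
    crawled_by_bots={"/", "/shoes", "/boots"},
    ranking={"/", "/shoes"},
)
for name, pages in segments.items():
    print(name, sorted(pages))
```

Pages that land in the last segment are good candidates for checking whether the links pointing to them only exist after JavaScript runs.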

Additionally, extending log analysis over a 3–6 month period provides indexing signals by showing crawl frequency trends. A decrease in crawl activity on important pages clearly indicates that those pages need to be investigated.

Failed resource responses are another signal. Repeated 4xx/5xx errors on critical resources, such as JavaScript files, CSS, images, or SEO tags generated client-side, can block discovery and delay indexing.
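A quick way to surface this signal is to count error responses on static resources. This sketch assumes log entries have already been parsed into `(path, status)` pairs; the default extensions, function name, and sample entries are illustrative.

```python
from collections import Counter
from urllib.parse import urlsplit


def failed_resources(entries, extensions=(".js", ".css")):
    """Count 4xx/5xx responses per (resource path, status), ignoring query strings."""
    failures = Counter()
    for path, status in entries:
        clean_path = urlsplit(path).path
        if clean_path.lower().endswith(extensions) and status >= 400:
            failures[(clean_path, status)] += 1
    return failures


# Hypothetical (path, status) pairs taken from bot requests in the logs.
entries = [
    ("/static/app.js?v=3", 404),
    ("/static/app.js?v=3", 404),
    ("/static/site.css", 200),
    ("/products/shoes", 500),
    ("/static/vendor.js", 503),
]
for (path, status), count in failed_resources(entries).most_common():
    print(path, status, count)
```

Page URLs such as `/products/shoes` are deliberately left out here, since the goal is to isolate the resources a page needs in order to render; extend `extensions` to cover images or other file types as needed.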

Once you’ve identified the red flags and implemented pre-rendering on the affected templates, you can use your logs to confirm the impact. Look for the following signs:

- Increase in crawl frequency to newly pre‑rendered content.

- Reduction in 4xx/5xx on JavaScript resources and pages.

- Increase in volume of newly crawled pages.

- Increase in indexation and coverage for those pages.

JavaScript SEO best practices and pitfalls to avoid

When implementing pre-rendering as part of a JavaScript SEO strategy, success depends on how well it is implemented and maintained.

Knowing the common traps helps you avoid them. When adopting pre-rendering, it is important to:

- Understand when pre-rendering is (and isn’t) necessary. Consider focusing on JavaScript-heavy pages first, and make sure that you aren’t pre-rendering 404 pages.

- Ensure the freshness and consistency of pre-rendered content.

- Continuously monitor using logs.

Building on the last point, continuing to monitor pre-rendered content with your log files is a great way to make sure your pre-rendered content remains effective and up-to-date.

It also confirms that Google, other search engines, and AI search platforms are seeing and crawling your site. Key practices include:

- Reviewing the ratio of bot hits on pre-rendered content to all bot hits in general.

- Monitoring crawl frequency per day on critical templates. A drop could be a signal to investigate if any technical issues are occurring or if the content isn’t updated or fresh.

- Checking status codes to confirm bots consistently receive 200 responses. Unexpected 3xx redirects or 4xx/5xx errors are another signal that the content isn’t being seen or that there is a misconfiguration.

- Using segmentation to ensure the bots you are monitoring are reaching and crawling all the pre-rendered pages.

- Reviewing load time to ensure fast delivery.
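Two of the checks above, the share of bot hits served pre-rendered content and the status codes bots receive, can be computed together. The sketch below assumes each bot hit has been reduced to a `(served_prerendered, status)` pair; how you know whether a hit was pre-rendered (a response header, a log field, a separate log source) depends on your setup and is an assumption here.

```python
def prerender_report(bot_hits):
    """Summarise bot hits given as (served_prerendered, status) pairs."""
    hits = list(bot_hits)
    if not hits:
        return {"prerendered_ratio": 0.0, "non_200": 0}
    prerendered = sum(1 for served, _ in hits if served)
    non_200 = sum(1 for _, status in hits if status != 200)
    return {"prerendered_ratio": prerendered / len(hits), "non_200": non_200}


# Hypothetical bot hits: three served from the pre-render cache, one redirect.
bot_hits = [(True, 200), (True, 200), (True, 301), (False, 200)]
print(prerender_report(bot_hits))  # {'prerendered_ratio': 0.75, 'non_200': 1}
```

Tracking these two numbers per template over time makes it easier to spot when a template silently falls out of the pre-render setup.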

Closing thoughts

There are several options to consider for handling a site that is JavaScript-dependent or has heavy JavaScript elements. The right approach depends on your site type, available resources, and budget.

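Google’s documentation describes serving pre-rendered HTML to crawlers (which it calls dynamic rendering) as a workaround for rendering issues rather than a long-term solution, which makes it a practical option when SSR is out of reach. With the evolution of search, making your content available to AI bots is also important.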

Combined with insights from your log files, you’ll have a clear view of how bots interact with your site and how pre-rendered content can improve crawl behavior and indexation of your most important pages.