This article reviews some of the best resources on SEO for JavaScript and describes a test to understand how search engines (Google, Bing and Yandex) behave when they have to crawl and index pages rendered through JS. We will also look at some considerations for SEO experts and developers.

JavaScript is a major player on today’s web, and as John Mueller said, “It won’t go away easily.”

JavaScript is often vilified by the SEO community, but I believe this is due to a lack of technical skills in JavaScript development and web page rendering, as well as to the difficulty of communication between SEO experts and development teams.

This post is divided into two parts: the first (short) offers some resources to look at the topic in more detail; in the second (more in-depth) we look at a very simple experiment that tests search engines on a web page rendered using JavaScript.

Some resources that introduce SEO for JavaScript

In the following video Martin Splitt, referring to Google, introduces the topic, explaining the importance of thinking from an SEO perspective when developing projects in JavaScript. In particular, he refers to pages that render critical content in JS and to single-page apps.

“If you want assets to be indexed as soon as possible, make sure the most important content is in the page source (rendered server side).”

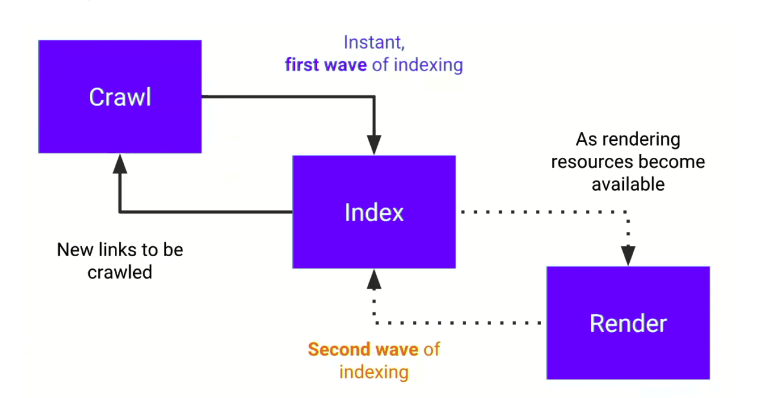

Why? Because we must always keep in mind the following scheme: the two waves of indexing.

The flow of crawling, indexing, rendering and reconsideration. And the two waves of processing.

Content generated by JavaScript is considered in the second wave of indexing, because it has to wait for the rendering phase.

The following video, in turn, explains basic SEO tips for projects developed in JavaScript.

“We are moving towards extraordinary user experiences, but we are also headed towards the need for ever greater technical skills.”

A simple test to clarify how search engines treat JavaScript

I will describe a test that I performed to retrace the steps described in the Google documentation (crawling, indexing, rendering, reconsideration). The test, however, also extends beyond the Mountain View search engine.

Note: it is a fairly “extreme” test in terms of the technical solution applied, and it was made using simple JavaScript functions, without any particular framework. The aim is solely to understand how search engines behave; it is not an exercise in style.

1) The web page

First, I created a web page with the following characteristics:

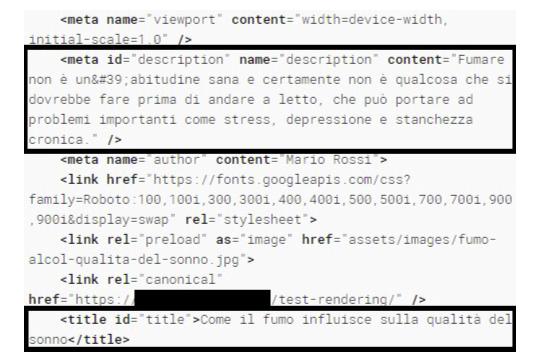

- An HTML page containing the main structural tags, with no text except the content of the <title> tag, a subtitle in an <h4> tag and a string in the footer.

- The content, available in the source HTML, has a specific purpose: to give search engines data immediately upon first processing.

- A JavaScript function, once the DOM (Document Object Model) is complete, takes care of rendering all the content (a complete post generated using GPT-3).

$(document).ready(function () {
  hydration();
});

- The content rendered by the function is not present on the page in JavaScript or JSON strings, but is obtained through remote calls (AJAX).

- Structured data is also “injected” via JavaScript, which obtains the JSON string through a remote call.

- I purposely made sure that the JavaScript function overrides the content of the <title> tag, generates the content of the <h1> tag and creates the description. The reason is the following: if search engines take the data rendered by JS into account, the snippet of the result in the SERP changes completely.

- One of the two images rendered via JS is referenced in the page <head> with a <link> tag (rel=“preload”), to understand whether this can speed up the indexing of the resource.
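The characteristics listed above can be sketched roughly as follows. This is a minimal illustration, not the code used in the test: the endpoint paths, the payload field names and the `#content` container are all hypothetical. Only the `hydration()` name and the document-ready trigger come from the snippet shown earlier.

```javascript
// Hypothetical endpoints; the original test does not disclose its URLs.
const POST_URL = "/api/post.json";
const SCHEMA_URL = "/api/structured-data.json";

// Apply the remotely fetched post to a document: override <title>,
// create the meta description, the <h1> and the article body.
function applyPost(doc, post) {
  doc.title = post.title;

  const meta = doc.createElement("meta");
  meta.setAttribute("name", "description");
  meta.setAttribute("content", post.description);
  doc.head.appendChild(meta);

  const container = doc.querySelector("#content"); // assumed container id
  const h1 = doc.createElement("h1");
  h1.textContent = post.heading;
  container.appendChild(h1);

  const body = doc.createElement("div");
  body.innerHTML = post.bodyHtml;
  container.appendChild(body);
}

// Inject structured data as a JSON-LD <script> element.
function injectStructuredData(doc, schema) {
  const script = doc.createElement("script");
  script.setAttribute("type", "application/ld+json");
  script.textContent = JSON.stringify(schema);
  doc.head.appendChild(script);
}

// Fetch both payloads through remote calls and render them.
async function hydration(doc = document, fetchImpl = fetch) {
  const [post, schema] = await Promise.all([
    fetchImpl(POST_URL).then((r) => r.json()),
    fetchImpl(SCHEMA_URL).then((r) => r.json()),
  ]);
  applyPost(doc, post);
  injectStructuredData(doc, schema);
}

// In the browser, run once the DOM is complete (roughly what $(document).ready does).
if (typeof document !== "undefined") {
  document.addEventListener("DOMContentLoaded", () => hydration());
}
```

Because nothing here is present in the initial HTML, a crawler that does not execute JavaScript sees only the original <title>, the <h4> subtitle and the footer string.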

2) Submission of the page to search engines and testing

After I published the page, I reported it to the search engines: to Google (through the URL Inspection tool in Search Console), to Bing (through “Submit URL” in Webmaster Tools) and to Yandex (through “Reindex pages” in Webmaster Tools).

At this stage, I ran some tests.

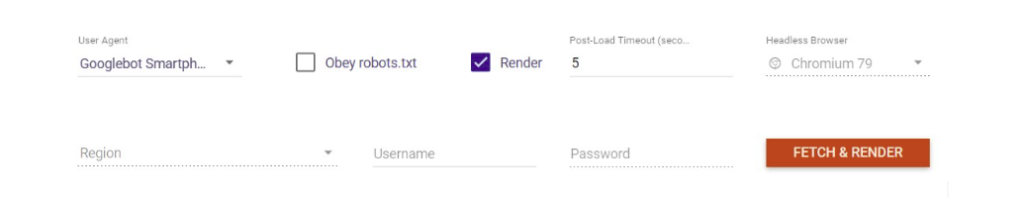

- Merkle’s “Fetch & Render” tool, which I usually use for rendering tests, generated the page correctly. The tool simulates Googlebot using headless Chromium 79.

Note: among the Merkle tools there is also a Pre-rendering Tester, which allows you to verify the HTML response based on the user-agent making the request.

Merkle Fetch & Render: rendering test tool
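The kind of check that the Pre-rendering Tester performs can be approximated with a few lines of Node.js. This is only a sketch of the idea, not how Merkle's tool is implemented: the function names, the sample user-agent strings and the `marker` text are illustrative assumptions, and it relies on the global `fetch` available in recent Node.js versions.

```javascript
// Sample user-agent strings (illustrative; real crawler strings change over time).
const USER_AGENTS = {
  browser: "Mozilla/5.0 (X11; Linux x86_64) Chrome/120.0 Safari/537.36",
  googlebot: "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)",
  bingbot: "Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)",
};

// Request the raw HTML (no JavaScript execution) as a given client.
async function fetchSourceAs(url, userAgent, fetchImpl = fetch) {
  const response = await fetchImpl(url, { headers: { "User-Agent": userAgent } });
  return response.text();
}

// For each client, report whether a piece of critical text is in the page source.
async function checkSource(url, marker, fetchImpl = fetch) {
  const report = {};
  for (const [name, userAgent] of Object.entries(USER_AGENTS)) {
    const html = await fetchSourceAs(url, userAgent, fetchImpl);
    report[name] = html.includes(marker);
  }
  return report;
}
```

A call such as `checkSource("https://example.com/post", "text of the h1").then(console.log)` prints one boolean per client. If the values differ, the server is returning different HTML depending on the user-agent.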

- Google’s Rich Results Test successfully generated the DOM and screenshot of the page. The tool also correctly detected the structured data, identifying the entity of type “Article”.

The DOM generated by the Google Rich Results Test

- The Search Console URL Inspection tool initially showed imperfect rendering at times, but I think the cause was the execution time.

3) Indexing

At this stage, all search engines behaved the same: after a few hours, the result was present in all SERPs.

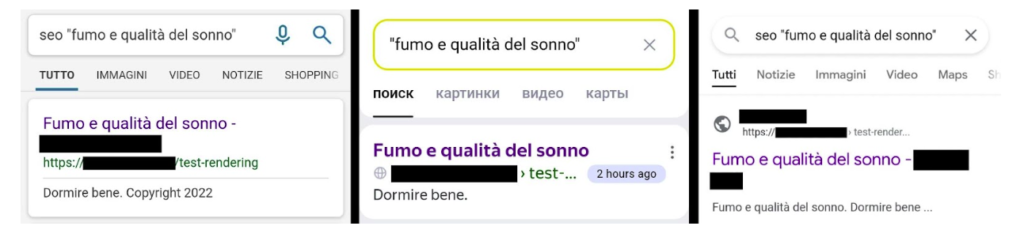

The snippet generated in the first phase on Bing, Yandex and Google

The snippets, as seen in the images, are composed of the content of the <title> tag and a description obtained from the data available in the main content.

No images on the page have been indexed.

4) Editing operations, sitemap, Google indexing API

The next day, the snippets were the same. I then took some actions to send signals to the search engines.

- I changed the HTML of the page, adding the favicon, the name of the author of the post and the date of publication. The goal was to figure out whether search engines would update the snippet before a potential analysis of the rendered content.

- At the same time I updated the JSON of the structured data, changing the modification date (“dateModified”).

- I sent the sitemap containing the URL of the web page to the webmaster tools of the different search engines.

- I reported to the search engines that the page had been modified and, for Google in particular, I did so through the Indexing API.
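The last two actions can be sketched as follows. The sitemap format comes from the public sitemap protocol, and the endpoint and `URL_UPDATED` notification type come from Google's Indexing API documentation, which describes the API as intended for job posting and livestream pages. The function names, the example values and the `accessToken` parameter (an OAuth 2.0 token obtained separately) are assumptions made for illustration, not the code used in the test.

```javascript
// Escape characters that are not allowed as-is inside XML text.
function escapeXml(text) {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&apos;");
}

// Build a minimal XML sitemap; <lastmod> signals when each page last changed.
function buildSitemap(entries) {
  const urls = entries
    .map((e) => `  <url><loc>${escapeXml(e.loc)}</loc><lastmod>${e.lastmod}</lastmod></url>`)
    .join("\n");
  return (
    '<?xml version="1.0" encoding="UTF-8"?>\n' +
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">\n' +
    urls +
    "\n</urlset>"
  );
}

// Describe the HTTP request that notifies Google that a URL was updated.
function buildIndexingRequest(pageUrl, accessToken) {
  return {
    endpoint: "https://indexing.googleapis.com/v3/urlNotifications:publish",
    options: {
      method: "POST",
      headers: {
        "Content-Type": "application/json",
        Authorization: `Bearer ${accessToken}`, // token acquisition is out of scope here
      },
      body: JSON.stringify({ url: pageUrl, type: "URL_UPDATED" }),
    },
  };
}
```

The object returned by `buildIndexingRequest` can be passed straight to `fetch(endpoint, options)`. Keeping request construction separate from sending makes the payload easy to inspect before anything is submitted.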


The results

What happened after a few days?

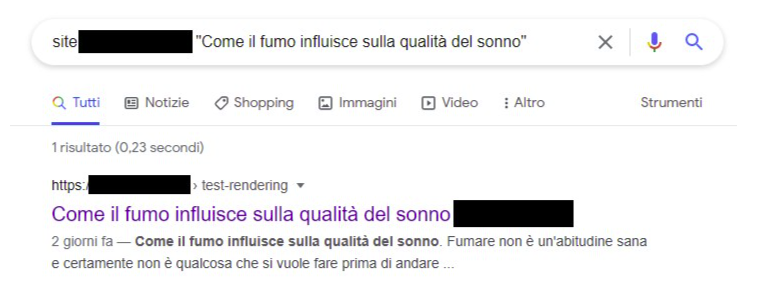

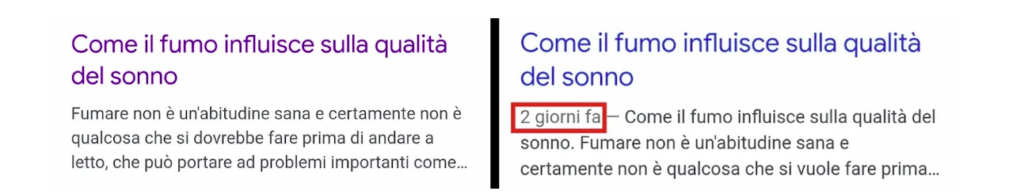

Google rendered and reworked the snippet

1) Google rendered the content by processing the JavaScript, then reworked the result on the SERP using the content correctly.

Google has indexed the rendered content using JavaScript

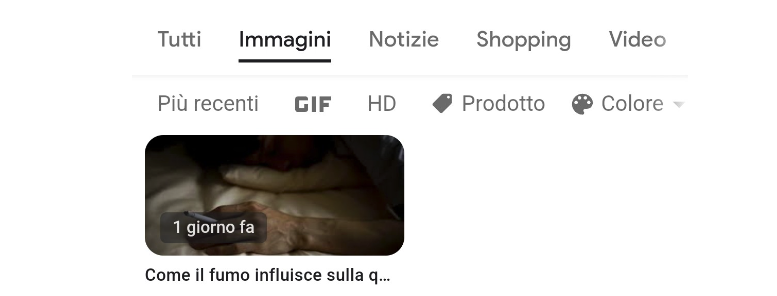

2) The first image of the post was also indexed and appeared on Google Images.

The post image, rendered via JavaScript, appears on Google Images

3) The snippets on Bing and Yandex have not changed. This means that in the days following the publication they did not consider the final DOM.

4) The image referenced in the preload <link> tag was not treated more favorably than the other one.

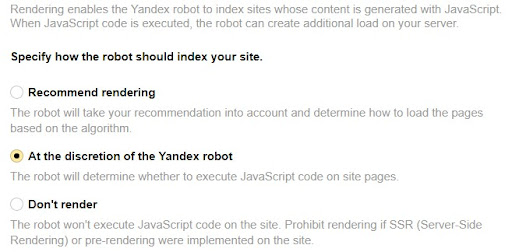

JavaScript page rendering (β) by Yandex

In the Yandex webmaster tools there is a “beta” feature that allows you to specify the behavior of the robot when it crawls pages.

The Yandex rendering configuration panel

You can suggest running the JavaScript to complete the rendering, or not running it. The default option gives the algorithm the “freedom” to choose the best action.

When almost all of the content is generated on the client side, as in this test, and more generally for sites built with a JavaScript framework, it is advisable to force the rendering.

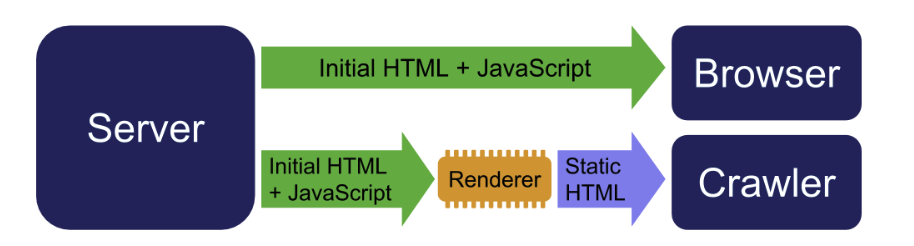

Dynamic rendering

In the last phase of testing, I implemented a dynamic rendering solution for Bing and Yandex. This solution makes it possible to differentiate the response that is sent to the client so that:

- Users’ browsers receive the response containing the HTML and JavaScript necessary to complete the rendering;

- Search engine crawlers receive a static version of the page, containing all critical content in the source.

How dynamic rendering works
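A minimal sketch of this kind of user-agent switch in Node.js might look like the following. The crawler pattern, the two HTML bodies and the function names are assumptions for illustration, and in a real setup the static version would typically come from a pre-renderer or a cache rather than a constant.

```javascript
// Crawlers that should receive the static version (assumed list; extend as needed).
const CRAWLER_PATTERN = /bingbot|yandexbot/i;

function isCrawler(userAgent) {
  return CRAWLER_PATTERN.test(userAgent || "");
}

// Hypothetical page bodies for the two audiences.
const CLIENT_HTML = '<div id="content"></div><script src="/hydrate.js"></script>';
const STATIC_HTML = '<div id="content"><h1>Post title</h1><p>Full post text</p></div>';

function selectHtml(userAgent) {
  return isCrawler(userAgent) ? STATIC_HTML : CLIENT_HTML;
}

// Node.js request handler: same URL, different body depending on the client.
function handleRequest(req, res) {
  res.writeHead(200, {
    "Content-Type": "text/html; charset=utf-8",
    Vary: "User-Agent", // tells caches that the response depends on the User-Agent header
  });
  res.end(selectHtml(req.headers["user-agent"]));
}
```

The handler can be mounted with `require("http").createServer(handleRequest).listen(3000)`. The content served to crawlers should match what users eventually see after rendering, otherwise the technique drifts towards cloaking.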

After completing the implementation, I requested indexing again through the Bing and Yandex tools.

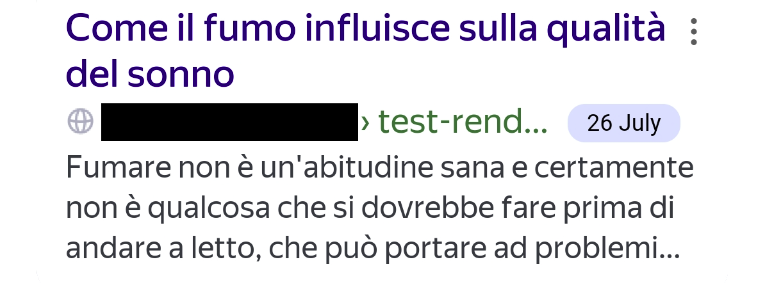

Within a few hours, Yandex rebuilt the snippet in the SERP as follows, which means it took into account the new version rendered server side.

The Yandex snippet after dynamic rendering

Bing did not change the result in the time available for the test, but presumably (or at least I hope) it would have updated it in the following days.

Conclusions

This simple test demonstrates that Google works as the documentation specifies, respecting the two phases of the scheme seen in the initial part of the post. In the first phase, in fact, it indexed the content present in the page source almost instantly (the HTML generated on the server side); in the second, in a couple of days, it indexed the parts rendered via JavaScript.

Bing and Yandex, on the other hand, do not seem to detect content generated via JavaScript, at least in the days following publication.

These elements highlight some JavaScript-related aspects that I’ll try to explain in a little more detail below.

- We must keep our target markets in mind: if we address an audience that uses search engines other than Google, we risk not being as visible as we would like.

- Even if users use Google, we must consider the time it takes to get the complete information in the SERP. The rendering phase, in fact, is very expensive in terms of resources: in the case of the test, with only one page online, the snippet was obtained correctly in a few days. But what would happen if we were working on a very large website? The “budget” that the search engine makes available to us and the performance of the website become decisive.

- I believe that JavaScript allows us to offer users superior user experiences, but the skills required to achieve this are many and expensive. As SEO experts, we need to evolve to propose the best solutions. Pre-rendering or dynamic rendering are still useful techniques for generating critical content on projects that need to ensure global visibility.

- Many modern frameworks and static site generators associated with the Jamstack architecture, such as Astro, Gatsby, Jekyll, Hugo, Nuxt and Next.js, can render content on the server or at build time in order to provide a static version of the pages. Furthermore, Next.js, which in my opinion is the most advanced, supports a “hybrid” rendering mode called “Incremental Static Regeneration” (ISR). ISR allows static pages to be generated or regenerated incrementally in the background, without rebuilding the whole site. This technique allows very high navigation performance.

“The question is: is it worth introducing such “complexity” into a project? The answer is simple: yes, if we are going to produce an amazing user experience!”

Additional Notes

- We must pay attention to the difference between the “document ready” and “window loaded” events: the former fires once the DOM has been built (the native DOMContentLoaded event), while the latter fires only when the DOM has been built and the resources, such as images and stylesheets, have also been loaded (the native load event).

- Clearly, for tests like this, it makes no sense to analyze Google’s cached copy of the page: when the cached page is opened, the JS is executed again in the browser, so it does not show which content Google actually took into consideration.
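The difference between the two events mentioned in the first note can be observed with a small sketch like the one below. The `traceLifecycle` function name and the log messages are illustrative; the event names `DOMContentLoaded` and `load` are the standard browser ones.

```javascript
// Record when each of the two page lifecycle events fires.
function traceLifecycle(doc, win, log = console.log) {
  // "document ready": the HTML has been parsed and the DOM built.
  doc.addEventListener("DOMContentLoaded", () => log("DOM ready"));
  // "window loaded": images, stylesheets and other resources have also finished loading.
  win.addEventListener("load", () => log("window loaded"));
}

// In the browser, attach the listeners to the real document and window.
if (typeof document !== "undefined" && typeof window !== "undefined") {
  traceLifecycle(document, window);
}
```

On a page with large images, “DOM ready” is logged well before “window loaded”, so a rendering function bound to the first event starts earlier than one bound to the second.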

Good JavaScript, and good SEO!