In a world full of digital marketing myths, we believe practical solutions to everyday problems are what we need.

At PEMAVOR, we always share our expertise and knowledge to fulfill the needs of digital marketing enthusiasts. So, we often post complimentary Python scripts to help you boost your ROI.

Our SEO Keyword Clustering with Python script paved the way towards gaining new insights for big SEO projects, in fewer than 50 lines of Python code.

The idea behind this script was to allow you to group keywords without paying ‘exaggerated fees’ to… well, we know who… 😊

But we realized this script is not enough on its own. You need another script to deepen your understanding of your keywords: one that can “group keywords by meaning and semantic relationships.”

Now, it’s time to take Python for SEO one step further.

The traditional way of semantic clustering

As you know, the traditional method for semantics is to build up word2vec models, then cluster keywords with Word Mover’s Distance.

But these models take a lot of time and effort to build and train. So, we’d like to offer you a more straightforward solution.

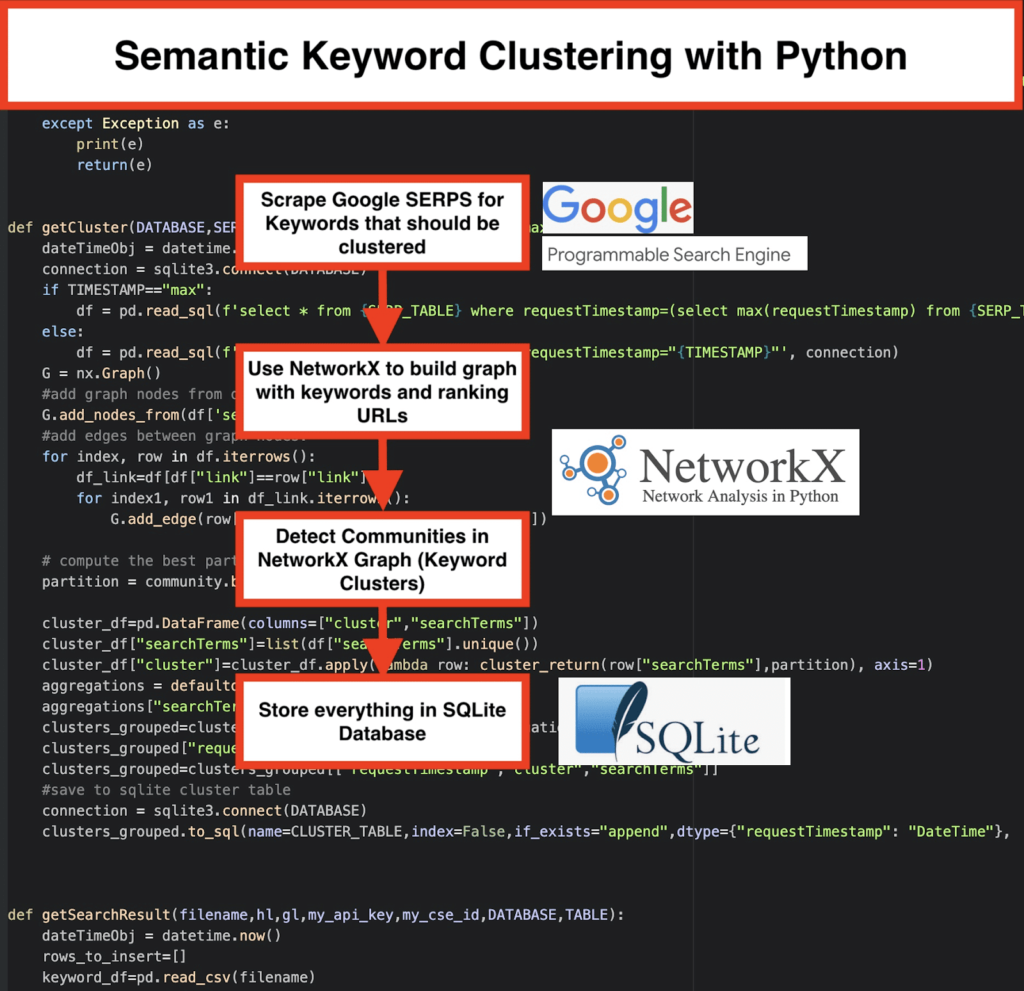

Google SERP results and discovering semantics

Google makes use of NLP models to offer the best search results. It works like a black box: we don’t know exactly what happens inside.

However, rather than building our own models, we can use this box to group keywords by their semantics and meaning.

Here’s how we do it:

✔️ First, come up with a list of keywords for a topic.

✔️ Then, scrape the SERP data for each keyword.

✔️ Next, build a graph of the relationships between ranking pages and keywords.

✔️ When the same pages rank for different keywords, those keywords are related. This is the core principle behind creating semantic keyword clusters.
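The principle above can be sketched in a few lines of plain Python. This is only a minimal illustration, not the script itself: the `serps` dictionary and its URLs are made-up sample data, `cluster_by_shared_urls` and its `min_shared` parameter are hypothetical, and the sketch groups keywords with simple connected components instead of the community detection used in the full script.

```python
from collections import defaultdict
from itertools import combinations


def cluster_by_shared_urls(serps, min_shared=1):
    """Group keywords that share at least `min_shared` ranking URLs.

    `serps` maps each keyword to the list of URLs ranking for it.
    """
    # Invert the mapping: for each URL, which keywords does it rank for?
    keywords_by_url = defaultdict(set)
    for keyword, urls in serps.items():
        for url in set(urls):
            keywords_by_url[url].add(keyword)

    # Count shared URLs per keyword pair (the edge weights of the graph).
    shared = defaultdict(int)
    for keywords in keywords_by_url.values():
        for a, b in combinations(sorted(keywords), 2):
            shared[(a, b)] += 1

    # Union-find: merge keywords connected by a strong enough edge.
    parent = {keyword: keyword for keyword in serps}

    def find(node):
        while parent[node] != node:
            parent[node] = parent[parent[node]]  # path halving
            node = parent[node]
        return node

    for (a, b), count in shared.items():
        if count >= min_shared:
            parent[find(a)] = find(b)

    clusters = defaultdict(list)
    for keyword in serps:
        clusters[find(keyword)].append(keyword)
    return sorted(sorted(group) for group in clusters.values())


# Made-up sample data for illustration only.
serps = {
    "running shoes": ["https://example.com/shoes", "https://example.org/run"],
    "best running shoes": ["https://example.com/shoes", "https://example.net/top"],
    "trail running shoes": ["https://example.org/run"],
    "python tutorial": ["https://example.com/python"],
    "learn python": ["https://example.com/python", "https://example.net/learn"],
}

clusters = cluster_by_shared_urls(serps)
for group in clusters:
    print(" | ".join(group))
```

Raising `min_shared` makes the grouping stricter, since two keywords then need more ranking pages in common before they land in the same cluster.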

Time to put everything together in Python

The Python script offers the following functions:

- It downloads the SERPs for your keyword list through Google’s Custom Search Engine and saves the data to an SQLite database. For this, you need to set up the Custom Search API.

- You can make use of the free quota of 100 requests daily. There is also a paid plan for $5 per 1,000 requests if you don’t want to wait or if you have big datasets.

- It’s better to go with the SQLite solution if you aren’t in a hurry: SERP results will be appended to the table on each run. (Simply take a new series of 100 keywords when you have quota again the next day.)

- Meanwhile, you need to set up these variables in the Python script:

- CSV_FILE = "keywords.csv" => store your keywords here

- LANGUAGE = "en"

- COUNTRY = "en"

- API_KEY = "xxxxxxx"

- CSE_ID = "xxxxxxx"

- Running getSearchResult(CSV_FILE,LANGUAGE,COUNTRY,API_KEY,CSE_ID,DATABASE,SERP_TABLE) will write the SERP results to the database.

- The clustering is done by networkx and the community detection module. The data is fetched from the SQLite database, and the clustering is called with getCluster(DATABASE,SERP_TABLE,CLUSTER_TABLE,TIMESTAMP).

- The clustering results can be found in the SQLite table, which is named “keyword_clusters” by default unless you change it.
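Because the free quota covers 100 requests per day, it can help to pick only the keywords that are not in the SERP table yet. The following is a minimal sketch under assumptions: `next_batch` is a hypothetical helper that is not part of the script, and it assumes the SERP table has the `searchTerms` column that the script writes.

```python
import sqlite3


def next_batch(keywords, database, serp_table, batch_size=100):
    """Return up to `batch_size` keywords not yet stored in `serp_table`."""
    connection = sqlite3.connect(database)
    try:
        # The table does not exist before the first run, so check for it.
        table_exists = connection.execute(
            "SELECT 1 FROM sqlite_master WHERE type = 'table' AND name = ?",
            (serp_table,),
        ).fetchone()
        done = set()
        if table_exists:
            # Table names cannot be bound as parameters, so quote it manually.
            rows = connection.execute(
                f'SELECT DISTINCT searchTerms FROM "{serp_table}"'
            )
            done = {row[0] for row in rows}
    finally:
        connection.close()

    # Keep the original keyword order and cap the list at the daily quota.
    pending = [keyword for keyword in keywords if keyword not in done]
    return pending[:batch_size]
```

The returned list could then be written to `keywords.csv` before the next daily run, so each run only spends quota on new keywords.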

Below, you’ll see the full code:

# Semantic Keyword Clustering by Pemavor.com
# Author: Stefan Neefischer (stefan.neefischer@gmail.com)
from googleapiclient.discovery import build
import pandas as pd
import Levenshtein
from datetime import datetime
from fuzzywuzzy import fuzz
from urllib.parse import urlparse
from tld import get_tld
import langid
import json
import numpy as np
import networkx as nx
import community  # provided by the python-louvain package
import sqlite3
import math
import io
from collections import defaultdict

def cluster_return(searchTerm,partition):
    # look up the cluster id assigned to a search term
    return partition[searchTerm]

def language_detection(str_lan):
    # langid.classify returns (language, score); keep the language code only
    lan=langid.classify(str_lan)
    return lan[0]

def extract_domain(url, remove_http=True):
    uri = urlparse(url)
    if remove_http:
        domain_name = f"{uri.netloc}"
    else:
        # keep the scheme (http/https) in front of the host name
        domain_name = f"{uri.scheme}://{uri.netloc}"
    return domain_name

def extract_mainDomain(url):
    res = get_tld(url, as_object=True)
    return res.fld

def fuzzy_ratio(str1,str2):
    return fuzz.ratio(str1,str2)

def fuzzy_token_set_ratio(str1,str2):
    return fuzz.token_set_ratio(str1,str2)

def google_search(search_term, api_key, cse_id,hl,gl, **kwargs):
    # query the custom search API with an explicit interface language (hl)
    try:
        service = build("customsearch", "v1", developerKey=api_key,cache_discovery=False)
        res = service.cse().list(q=search_term,hl=hl,gl=gl,fields='queries(request(totalResults,searchTerms,hl,gl)),items(title,displayLink,link,snippet)',num=10, cx=cse_id, **kwargs).execute()
        return res
    except Exception as e:
        print(e)
        # return an empty result so the caller can still test for "items"
        return {}

def google_search_default_language(search_term, api_key, cse_id,gl, **kwargs):
    # same query without hl, so the default language is used
    try:
        service = build("customsearch", "v1", developerKey=api_key,cache_discovery=False)
        res = service.cse().list(q=search_term,gl=gl,fields='queries(request(totalResults,searchTerms,hl,gl)),items(title,displayLink,link,snippet)',num=10, cx=cse_id, **kwargs).execute()
        return res
    except Exception as e:
        print(e)
        # return an empty result so the caller can still test for "items"
        return {}

def getCluster(DATABASE,SERP_TABLE,CLUSTER_TABLE,TIMESTAMP="max"):
    dateTimeObj = datetime.now()
    connection = sqlite3.connect(DATABASE)
    if TIMESTAMP=="max":
        df = pd.read_sql(f'select * from {SERP_TABLE} where requestTimestamp=(select max(requestTimestamp) from {SERP_TABLE})', connection)
    else:
        df = pd.read_sql(f'select * from {SERP_TABLE} where requestTimestamp="{TIMESTAMP}"', connection)
    G = nx.Graph()
    #add graph nodes from dataframe column
    G.add_nodes_from(df['searchTerms'])
    #add edges between graph nodes:
    for index, row in df.iterrows():
        df_link=df[df["link"]==row["link"]]
        for index1, row1 in df_link.iterrows():
            G.add_edge(row["searchTerms"], row1['searchTerms'])
    # compute the best partition for community (clusters)
    partition = community.best_partition(G)
    cluster_df=pd.DataFrame(columns=["cluster","searchTerms"])
    cluster_df["searchTerms"]=list(df["searchTerms"].unique())
    cluster_df["cluster"]=cluster_df.apply(lambda row: cluster_return(row["searchTerms"],partition), axis=1)
    aggregations = defaultdict()
    aggregations["searchTerms"]=' | '.join
    clusters_grouped=cluster_df.groupby("cluster").agg(aggregations).reset_index()
    clusters_grouped["requestTimestamp"]=dateTimeObj
    clusters_grouped=clusters_grouped[["requestTimestamp","cluster","searchTerms"]]
    #save to sqlite cluster table
    connection = sqlite3.connect(DATABASE)
    clusters_grouped.to_sql(name=CLUSTER_TABLE,index=False,if_exists="append",dtype={"requestTimestamp": "DateTime"}, con=connection)

def getSearchResult(filename,hl,gl,my_api_key,my_cse_id,DATABASE,TABLE):
    dateTimeObj = datetime.now()
    rows_to_insert=[]
    keyword_df=pd.read_csv(filename)
    keywords=keyword_df.iloc[:,0].tolist()
    for query in keywords:
        if hl=="default":
            result = google_search_default_language(query, my_api_key, my_cse_id,gl)
        else:
            result = google_search(query, my_api_key, my_cse_id,hl,gl)
        if "items" in result and "queries" in result :
            for position in range(0,len(result["items"])):
                result["items"][position]["position"]=position+1
                result["items"][position]["main_domain"]= extract_mainDomain(result["items"][position]["link"])
                result["items"][position]["title_matchScore_token"]=fuzzy_token_set_ratio(result["items"][position]["title"],query)
                result["items"][position]["snippet_matchScore_token"]=fuzzy_token_set_ratio(result["items"][position]["snippet"],query)
                result["items"][position]["title_matchScore_order"]=fuzzy_ratio(result["items"][position]["title"],query)
                result["items"][position]["snippet_matchScore_order"]=fuzzy_ratio(result["items"][position]["snippet"],query)
                result["items"][position]["snipped_language"]=language_detection(result["items"][position]["snippet"])
            for position in range(0,len(result["items"])):
                rows_to_insert.append({"requestTimestamp":dateTimeObj,"searchTerms":query,"gl":gl,"hl":hl,
                    "totalResults":result["queries"]["request"][0]["totalResults"],"link":result["items"][position]["link"],
                    "displayLink":result["items"][position]["displayLink"],"main_domain":result["items"][position]["main_domain"],
                    "position":result["items"][position]["position"],"snippet":result["items"][position]["snippet"],
                    "snipped_language":result["items"][position]["snipped_language"],"snippet_matchScore_order":result["items"][position]["snippet_matchScore_order"],
                    "snippet_matchScore_token":result["items"][position]["snippet_matchScore_token"],"title":result["items"][position]["title"],
                    "title_matchScore_order":result["items"][position]["title_matchScore_order"],"title_matchScore_token":result["items"][position]["title_matchScore_token"],
                })
    df=pd.DataFrame(rows_to_insert)
    #save serp results to sqlite database
    connection = sqlite3.connect(DATABASE)
    df.to_sql(name=TABLE,index=False,if_exists="append",dtype={"requestTimestamp": "DateTime"}, con=connection)

##############################################################################
# Read Me:
##############################################################################
# 1- You need to set up a Google custom search engine.
#    Please provide the API key and the search engine ID.
#    Also set the country and language for which you want to monitor SERP results.
#    If you don't have an API key and search engine ID yet,
#    you can follow the steps under the Prerequisites section on this page:
#    https://developers.google.com/custom-search/v1/overview#prerequisites
#
# 2- You also need to enter the database, SERP table and cluster table names
#    to be used for saving results.
#
# 3- Enter the CSV file name or full path that contains the keywords that will be used for SERP.
#
# 4- For keyword clustering, enter the timestamp of the SERP results that will be used for clustering.
#    If you need to cluster the last SERP results, enter "max" for the timestamp,
#    or you can enter a specific timestamp like "2021-02-18 17:18:05.195321".
#
# 5- Browse the results with the DB Browser for SQLite program.
##############################################################################

#csv file name that has the keywords for serp
CSV_FILE="keywords.csv"
# determine language
LANGUAGE = "en"
# determine country (passed to the API as the gl parameter, which expects a two-letter country code)
COUNTRY = "en"
#google custom search json api key
API_KEY="ENTER KEY HERE"
#Search engine ID
CSE_ID="ENTER ID HERE"
#sqlite database name
DATABASE="keywords.db"
#table name to save serp results to it
SERP_TABLE="keywords_serps"
# run serp for keywords
getSearchResult(CSV_FILE,LANGUAGE,COUNTRY,API_KEY,CSE_ID,DATABASE,SERP_TABLE)

#table name that cluster results will be saved to
CLUSTER_TABLE="keyword_clusters"
#Please enter timestamp, if you want to make clusters for specific timestamp
#If you need to make clusters for the last serp result, send it with "max" value
#TIMESTAMP="2021-02-18 17:18:05.195321"
TIMESTAMP="max"
#run keyword clusters according to networks and community algorithms
getCluster(DATABASE,SERP_TABLE,CLUSTER_TABLE,TIMESTAMP)
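Once the script has run, the clusters can also be read back with Python instead of a database browser. Here is a minimal sketch, assuming the default table name `keyword_clusters` and the columns the script writes (`requestTimestamp`, `cluster`, `searchTerms`, with the keywords of a cluster joined by ` | `). `load_clusters` is a hypothetical helper, not part of the script.

```python
import sqlite3


def load_clusters(database, cluster_table="keyword_clusters"):
    """Return {cluster_id: [keywords]} for the most recent clustering run."""
    connection = sqlite3.connect(database)
    try:
        # Table names cannot be bound as parameters, so quote it manually.
        rows = connection.execute(
            f'SELECT cluster, searchTerms FROM "{cluster_table}" '
            f"WHERE requestTimestamp = "
            f'(SELECT MAX(requestTimestamp) FROM "{cluster_table}")'
        ).fetchall()
    finally:
        connection.close()
    # The script stores each cluster's keywords as one " | "-joined string.
    return {cluster: terms.split(" | ") for cluster, terms in rows}
```

For example, `load_clusters("keywords.db")` would return one list of keywords per cluster ID from the latest run, ready for further processing.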


We hope you’ve enjoyed this script with its shortcut to grouping your keywords into semantic clusters without relying on semantic models. Since these models are often both complex and expensive, it’s important to look at other ways to identify keywords that share semantic properties.

By treating semantically related keywords together, you can better cover a subject, better link the articles on your site to one another, and improve your website’s ranking for a given topic.