Hey everybody! It’s the future. Oh no! Skynet, where’s my flying car, jetpacks, etc.

Everybody’s been talking about AI recently, mostly about ChatGPT and the search chatbots based on ChatGPT. This is one application of Natural Language Processing (NLP). NLP (not Neuro-Linguistic Programming) is a branch of Artificial Intelligence (AI) focused on the understanding, manipulation, and generation of human language.

But did you know you don’t have to use generative pre-trained transformers (GPT) to use NLP for SEO? And that NLP is kind of the core of SEO?

This article is going to go over NLP, explaining some of the tricky bits. Then we’re going to go through some ways you can use Python, Colab, and NLP to automate SEO tasks and find insights. We’ll then go over some theory based on what we practiced. Finally, we’ll talk about pitfalls, problems, and possibilities of using NLP in this line of work.

Let’s get cracking!

What is Natural Language Processing (NLP)?

NLP is the intersection of statistics, linguistics, ML/AI, and computers. It can cover generative tasks like responding to questions, a la GPT, or tasks like analyzing sentiment and understanding natural language.

How does it relate to SEO?

So, pop quiz; how does Google use NLP? Well, as far as we know, it’s all of the above.

SEOs are usually familiar with Google’s crawl cycle: crawl, render, index, and rank. NLP often falls under that last step: ranking. Google uses NLP to process the content of a page and match it up with the query of a user.

We, as SEOs on the other hand, can look at the way Google uses NLP and apply it to our own work.

For example, we know BERT, the language model released in 2018, provides Google with a much better understanding of natural language inputs. Neural matching allows Google to make better inferences about synonyms. And MUM (Multitask Unified Model) allows Google to better understand the contextual meaning of words.

So how can we apply this to our SEO?

Technical stuff for nerds

I want to take a second to talk about some of the more technical elements of ML algorithms, but you can skip over this if terms like “vector analysis” make your eyes glaze over.

Remember y = mx + b from Algebra 1? Well, the most basic versions of ML are just that: using linear regression to predict outcomes. More complex ML algorithms like Word2Vec and GloVe use vector analysis to better understand the meaning of words.

The most basic ML algorithms for NLP are based on the idea of “word embeddings.” This means that each word is represented as a vector, or a set of numbers that describe its relationship to other words and its context in a sentence. This allows us to better identify related topics and sentiment in natural language. Essentially, they measure the relationships between words to create a numeric representation of a word. This helps Google better understand the context of a search query, instead of just looking for a specific keyword.

Vectors look a bit like this: [1.2, -0.3, 0.8, 0.2].

You can also use vector analysis to compare two documents, or two terms, and see how similar they are. For example, this can be helpful for understanding the semantic intent of a query and for identifying related topics.
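A common way to compare two vectors is cosine similarity, which measures whether they point in the same direction. Here is a minimal sketch using only the standard library. The three vectors are made-up numbers for illustration, not output from a real embedding model, and the variable names are hypothetical.

```python
import math

def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # an all-zero vector has no direction to compare
    return dot / (norm_a * norm_b)

# Made-up vectors for illustration only
running_shoes = [1.2, -0.3, 0.8, 0.2]
trail_sneakers = [1.0, -0.2, 0.9, 0.1]
tax_advice = [-0.7, 0.9, -0.1, 0.4]

similar_score = cosine_similarity(running_shoes, trail_sneakers)
different_score = cosine_similarity(running_shoes, tax_advice)
print(round(similar_score, 3), round(different_score, 3))
```

Scores near 1 mean the two items point the same way (similar), scores near 0 mean they are unrelated, and negative scores mean they point in opposite directions.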

Another term you’ll probably hear a lot with more advanced NLP algos is “transformer.” A transformer is a deep learning model that uses self-attention, differentially weighting the significance of each part of the input data. Now, what does this mean? I’m going to use a metaphor to explain:

Imagine that you’re a solo sandwich vendor on a busy night. You have a lot of orders to fill and they all need to be done quickly. So, you have to figure out which ingredients to prioritize. You do this by differentially weighing the importance of each ingredient. Every sandwich uses butter, and next to none uses ham salad. Basically, you’re using self-attention, like a transformer does.

As they say: attention is all you need!

To really simplify, ML algorithms turn words into numbers and these numbers are used to create a model of the relationships between words. Transformers help machines better understand natural language by differentially weighting the importance of each input. Cool? Cool!
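If you want to see the "differentially weighting" part in code, here is a heavily simplified sketch of self-attention. It assumes each word vector acts as its own query, key, and value; real transformers learn separate projection matrices for those and stack many layers, so treat this as a toy. The tokens and 2-d vectors are made up.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Simplified self-attention: each vector is its own query, key, and value."""
    dim = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # Score every token against the current one (scaled dot product)
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        weights = softmax(scores)
        # Blend all token vectors according to their weights
        blended = [
            sum(w * vec[i] for w, vec in zip(weights, vectors))
            for i in range(dim)
        ]
        outputs.append(blended)
        all_weights.append(weights)
    return outputs, all_weights

tokens = ["buy", "cheap", "shoes"]
toy_vectors = [[1.0, 0.0], [0.8, 0.3], [0.1, 1.0]]  # made-up 2-d embeddings
outputs, attention_weights = self_attention(toy_vectors)
for token, weights in zip(tokens, attention_weights):
    print(token, [round(w, 2) for w in weights])
```

Each printed row shows how much one token "pays attention" to every token in the input, including itself.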

Now that we’ve got the technical stuff out of the way, let’s talk about how to use NLP in SEO.


Common uses of NLP for SEO

As NLP has developed over the years, there have been a few common uses for which it has become the go-to in SEO. Let’s take a look at some of the more popular ones.

Analyzing sentiment and sentiment changes

One of the most basic tasks in NLP (not basic as in “easy” but as in “one of the first ones new devs play around with”) is sentiment analysis. Sentiment analysis is basically working out how a text reflects the feelings of the person who wrote it. In some cases, the text can be very easy to analyze.

Meanie2004: “I HATE YOU! THIS SUCKS AND IS BAD!”

nicegirl: “Wow, this is fantastic! <3”

And in other cases, it’s more difficult.

Meanie2004: “This isn’t great, but I guess it could be worse.”

This is where NLP comes into play. Using algorithms, we can analyze the sentiment of a text by looking at the words used, the syntax (grammar, etc), and context.

A practical way to use this for SEO is to catch, and prepare for, a sudden rush of bad reviews. You can use the Python code below to check the sentiment of a text:

```
from textblob import TextBlob

text = 'This is an amazing experience!'

# create a TextBlob object
text_blob = TextBlob(text)

# returns sentiment polarity, from -1.0 (negative) to 1.0 (positive)
print(text_blob.sentiment.polarity)
```

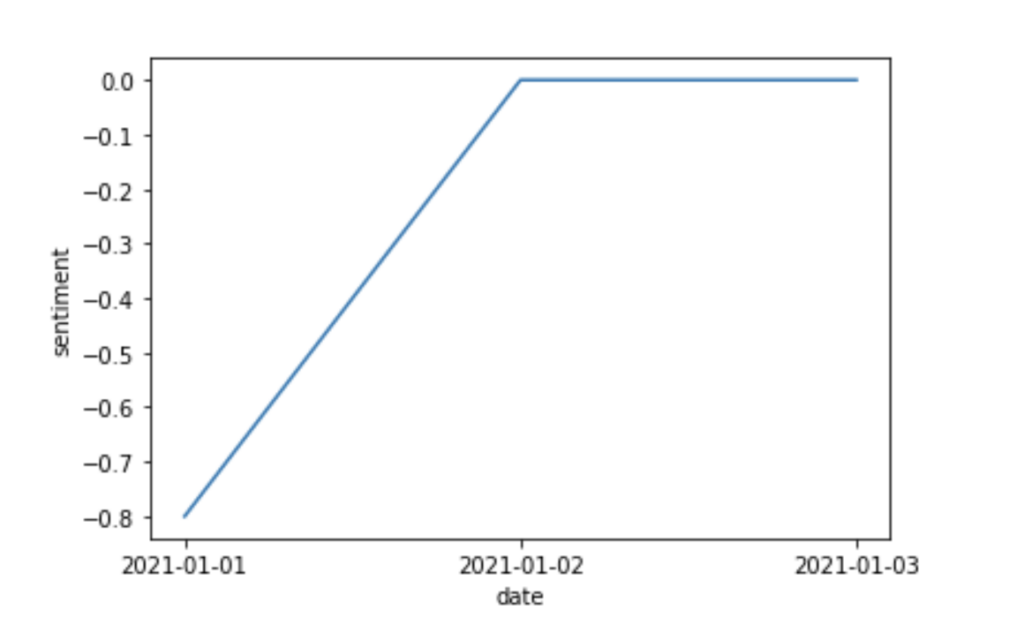

If you plug this into Seaborn, or another visualizer, you can see how your reviews change over time.

Result! Yours will probably look a little less simple than this, though (hopefully). Unless you only have three reviews…

If you have a big set of dates and comments, you can look at how people are responding over time. If there are dips, you can look at what you did at that point that might have frustrated people. You could even use this on blog comments; which blogs made people super excited and/or happy?
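As a rough sketch of the "over time" part: once every comment has a polarity score, you can average the scores per month and flag the dips. The dates and scores below are hypothetical, and the function names are mine. The code assumes you already computed polarity (for example with TextBlob) and only needs the standard library.

```python
from collections import defaultdict
from datetime import date
from statistics import mean

def average_polarity_by_month(reviews):
    """Group (date, polarity) pairs by calendar month and average each group."""
    buckets = defaultdict(list)
    for review_date, polarity in reviews:
        buckets[(review_date.year, review_date.month)].append(polarity)
    return {month: mean(scores) for month, scores in sorted(buckets.items())}

def find_dips(monthly, threshold=0.0):
    """Return the months whose average polarity falls below the threshold."""
    return [month for month, score in monthly.items() if score < threshold]

# Hypothetical data: polarity scores in [-1, 1]
sample_reviews = [
    (date(2023, 1, 5), 0.6),
    (date(2023, 1, 20), 0.4),
    (date(2023, 2, 3), -0.5),
    (date(2023, 2, 17), -0.1),
    (date(2023, 3, 9), 0.3),
]
monthly_polarity = average_polarity_by_month(sample_reviews)
dips = find_dips(monthly_polarity)
print(monthly_polarity)
print(dips)
```

The resulting dictionary is easy to hand to a plotting library, and the list of dips tells you which months to investigate first.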

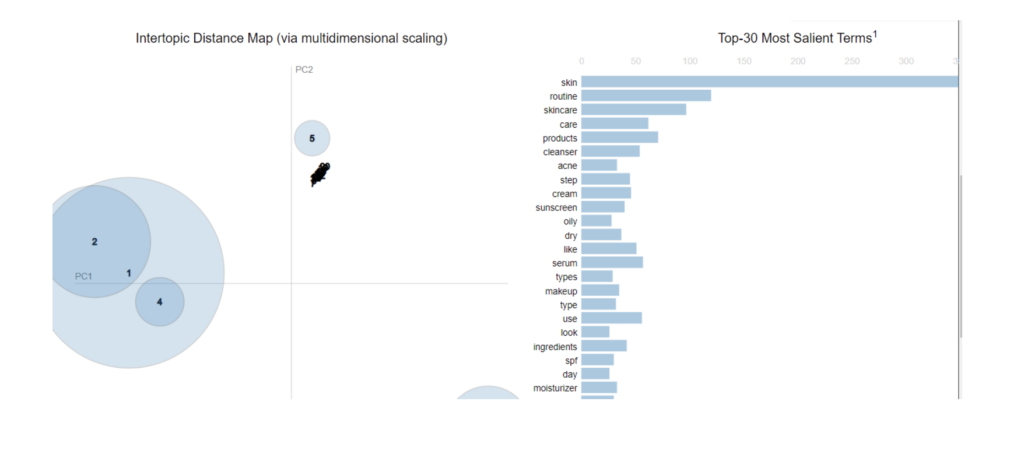

Topic Modeling

One thing that both Google and people do is “topic modeling.” Topic modeling is a way to identify groups of similar words within a corpus. It helps uncover the most common themes and topics in which people are interested.

If you work on SEO, you could use this to analyze conversations about topics, to better tailor your content, or look at what competitors focus on in their high-ranking content. It can help you identify key words and phrases that might be missing in your content.

Keyword extraction

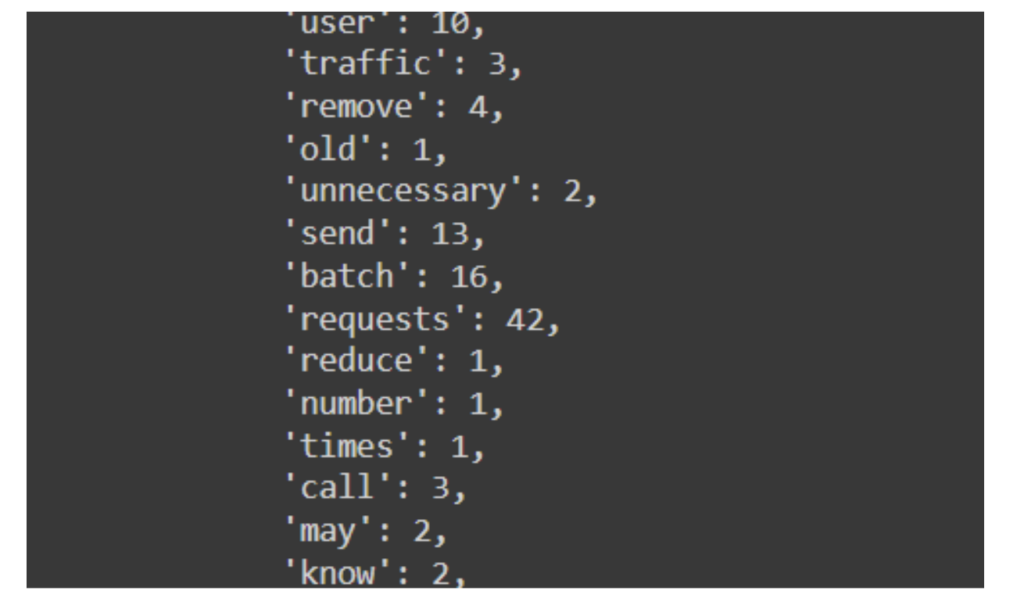

Sometimes you need a list of keywords, and your usual tools aren’t pulling out what you want. In this case, you can use libraries to pull out a long list of keywords, either from your content (compare to what it’s ranking for!) or from highly ranking competitors.
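To give a feel for what those libraries do, here is a bare-bones frequency-based extractor. It is a sketch, not a replacement for a proper keyword library: the stop-word list is tiny, it ignores sentence boundaries, and the sample text is invented.

```python
import re
from collections import Counter

# A tiny stop-word list for illustration; real projects use a fuller one
STOP_WORDS = {"a", "an", "and", "are", "for", "in", "is", "of", "on",
              "the", "to", "with", "your"}

def extract_keywords(text, top_n=5):
    """Return the most frequent one- and two-word phrases without stop words."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    singles = [w for w in words if w not in STOP_WORDS]
    # Adjacent word pairs, skipping any pair that touches a stop word
    pairs = [
        f"{first} {second}"
        for first, second in zip(words, words[1:])
        if first not in STOP_WORDS and second not in STOP_WORDS
    ]
    return Counter(singles + pairs).most_common(top_n)

sample_text = (
    "Trail running shoes are built for rough ground. The best trail running "
    "shoes grip well in mud, and trail running shoes with rock plates "
    "protect your feet."
)
keywords = extract_keywords(sample_text)
print(keywords)
```

Run it on your own page, then on a high-ranking competitor page, and compare the two lists.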

Text summarization

Text summarization has two popular methods: abstractive and extractive. It also has a lot of uses, whether summarizing large amounts of text when you’re doing research, seeing what the most salient or important topics seem to be in your text, creating meta descriptions, or recapping TL;DR paragraphs. Basically, if you don’t have time to read a whole document or article, summarizing can help you save time.
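Extractive summarization is the easier of the two to sketch: score each sentence, then keep the best ones word for word. The version below scores sentences by how frequent their words are across the whole text. It is a minimal illustration with a hand-picked stop-word list and invented sample text. Abstractive summarization, which writes new sentences, needs a trained language model and is not shown.

```python
import re
from collections import Counter

STOP_WORDS = {"a", "an", "and", "the", "in", "of", "to", "can", "also",
              "my", "only", "few", "off", "is", "it"}

def content_words(sentence):
    """Lowercase the sentence and drop stop words."""
    return [w for w in re.findall(r"[a-z']+", sentence.lower())
            if w not in STOP_WORDS]

def summarize(text, max_sentences=2):
    """Extractive summary: keep the sentences with the most frequent words."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    frequencies = Counter(w for s in sentences for w in content_words(s))

    def score(sentence):
        words = content_words(sentence)
        # Average frequency, so long sentences are not automatically favored
        return sum(frequencies[w] for w in words) / len(words) if words else 0.0

    chosen = sorted(sentences, key=score, reverse=True)[:max_sentences]
    # Return the chosen sentences in their original order
    return " ".join(s for s in sentences if s in chosen)

article = (
    "Structured data helps search engines understand a page. "
    "Structured data can also earn rich results in search. "
    "My cat knocked a mug off the desk. "
    "Adding structured data takes only a few minutes."
)
summary = summarize(article)
print(summary)
```

The off-topic sentence about the cat scores lowest, so it is the first to be dropped.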

Example of text summarization

Intent classification

Intent classification is a super useful task in SEO; being able to tag intent on a wide level can really help with understanding what people want to achieve or take away from your website.

Semantic intent is the goal behind a query: what a user is really trying to do when they enter a search query.

Let’s say, for example, someone searches for “buy iPhone 11 pro”. They’re probably very close to the action phase of the purchasing funnel.

But if someone searches for “iPhone 11 pro review”, they may not be ready to buy, and instead, they are trying to find out what other people think about the product – closer to the interest level of the funnel.

Using NLP and ML algorithms, you can better identify the intent behind a query and adjust your content accordingly. You could use code similar to that above, or we could get spicy and use a Kaggle dataset to train and label our own data!
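Before training anything, it can help to have a dumb baseline to compare against. The sketch below tags intent with simple cue words. This is not the BERT approach, just a rule-based stand-in, and the label names and cue lists are my own assumptions rather than any standard taxonomy.

```python
import re

# Hypothetical cue words per intent label; tune these for your own niche
INTENT_CUES = {
    "transactional": ["buy", "price", "cheap", "deal", "order", "coupon"],
    "commercial": ["review", "reviews", "best", "vs", "compare", "top"],
    "informational": ["how", "what", "why", "guide", "tutorial"],
}

def classify_intent(query):
    """Return the intent whose cue words appear most often in the query."""
    words = re.findall(r"[a-z0-9]+", query.lower())
    scores = {
        intent: sum(word in cues for word in words)
        for intent, cues in INTENT_CUES.items()
    }
    best_intent = max(scores, key=scores.get)
    # No cue word at all means we should not guess
    return best_intent if scores[best_intent] > 0 else "unknown"

for query in ["buy iPhone 11 pro", "iPhone 11 pro review", "iPhone 11 pro"]:
    print(query, "->", classify_intent(query))
```

If a trained classifier can’t beat this on your own queries, that is worth knowing before you spend the RAM.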

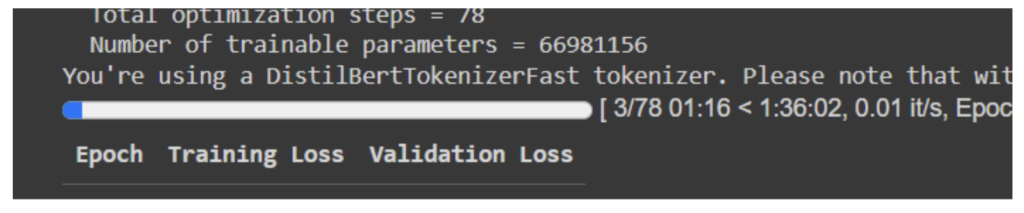

I love the example from this code here: Intent identification with BERT | Kaggle. Using DistilBERT and my own data, I tried to make my own intent classifier (warning: this might use a lot of RAM).

```
# load the data - get from your SEO data source of choice
```

Or use:

https://app.surgehq.ai/datasets/search-queries-and-intents

Or even use this Google sheet I put together. Make it a data frame (see colab).

Once you get to the end, there is a classifier you can use to see why people are coming to certain pages on your site. You can then develop your content to better understand the sales funnel and the way your content guides people down that purchasing funnel.

Intent is only one option: the same approach works for classifying text by topic, sentiment, or any other label you have examples for.

Less common uses of NLP for SEO

So far, we’ve stayed mostly in the realm of more common SEO things. Now let’s do some weird stuff– stuff that might be useful in a different way.

Image text extraction

This might not strictly be an NLP task, but it can be incorporated into many NLP projects. If you have images that have text in them, that text should also be in the alt text, and it should also be salient to the page it’s on! While the code in my colab is mostly about text extraction from an image, you can use image processing libraries like OpenCV to do image recognition, then you can use other NLP techniques to make sure your images are relevant.

Additionally, you can do competitor research this way; if every competitor has a relevant image that involves x, and you only have images that involve y, maybe try something different.

Python actually has a few ways you can extract image data – as I mentioned, OpenCV – but you could train your own image recognition engine with TensorFlow, or use an API from Amazon or Google. The world is your oyster!

Keyword research

Keyword research: I don’t like doing it, so let’s get bots to do it for us!

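Let’s use topic modeling again, this time with LDA (Latent Dirichlet Allocation, covered in the theory section below), and pull out the topics from our competitors’ websites. Once you have this data, you can see what they cover that you don’t and vice versa.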

A common way to do this is something called TF-IDF, or term frequency-inverse document frequency. It’s statistics again: this time, a method to reflect how important a word is to one document within a collection of documents. One thing you can do with TF-IDF and LDA is see what topics are important in a ranking document versus lower ranking documents: do the higher ranking docs cover subjects or topics that lower ranking documents don’t?

Luckily, for the lazy developers amongst us (naming no names [it’s me]), scikit-learn has a TF-IDF vectorizer you can use super easily. This lets you pull out content and keywords in competitor text you might want to focus on.
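If you are curious what a TF-IDF vectorizer is doing under the hood, here is a from-scratch sketch of the plain textbook formula (term frequency times the log of inverse document frequency). Note that scikit-learn’s vectorizer applies smoothing and normalization by default, so its numbers will differ from these. The three page texts are invented.

```python
import math
import re
from collections import Counter

def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def tf_idf(documents):
    """Return one {term: weight} dict per document (textbook TF-IDF)."""
    tokenized = [tokenize(doc) for doc in documents]
    doc_count = len(tokenized)
    # Document frequency: in how many documents does each term appear?
    doc_freq = Counter(term for tokens in tokenized for term in set(tokens))
    weights = []
    for tokens in tokenized:
        counts = Counter(tokens)
        weights.append({
            term: (count / len(tokens)) * math.log(doc_count / doc_freq[term])
            for term, count in counts.items()
        })
    return weights

pages = [
    "waterproof hiking boots with ankle support and waterproof lining",  # competitor
    "hiking boots for summer trails",                                    # your page
    "hiking boots size guide",                                           # another page
]
page_weights = tf_idf(pages)
top_competitor_term = max(page_weights[0], key=page_weights[0].get)
print(top_competitor_term, round(page_weights[0][top_competitor_term], 3))
```

Words that appear on every page (here, “hiking” and “boots”) get a weight of zero, which is exactly the point: the terms that float to the top are what makes a page different from the others.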

Other automated content analysis

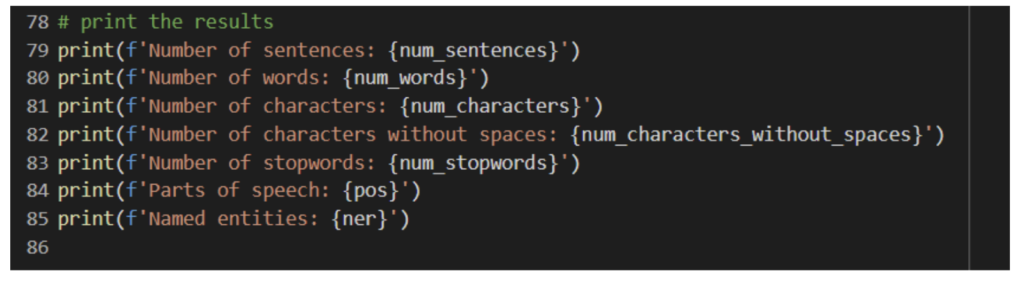

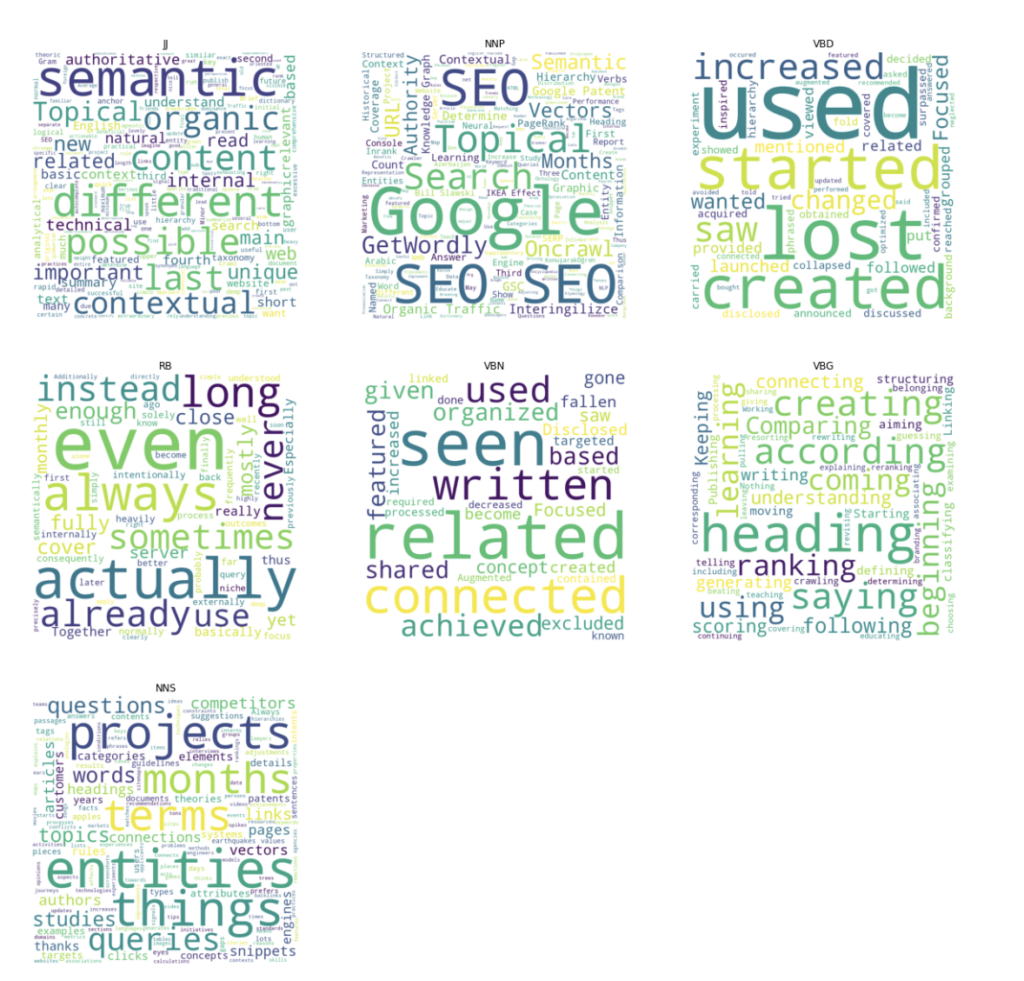

Some other automated content analysis you can do includes a ton of stuff: not just basics like number of words, but you can get named entities (something Google seems to care about!), reading level, parts of speech, and tons of other information. Again, this can be useful for competitor research, but it can be useful too to match with your customer data.

If your pages that have lots of verbs and other action-oriented parts of speech do better than pages that don’t, that tells you something. Do your customers crave calls to action, descriptions, or just want plain language? Do they prefer 8th grade or college level talk? NLP gives us ways to get that information in bulk, letting us A/B test en masse and see the shape of how our clients and customers interact with text.
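Reading level is one of the easier metrics to compute yourself. The sketch below uses the Flesch-Kincaid grade formula with a very rough syllable counter (counting vowel groups), so expect the grades to be approximate. The two sample texts are invented.

```python
import re

def count_syllables(word):
    """Rough heuristic: count vowel groups, ignoring a trailing silent 'e'."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)

def flesch_kincaid_grade(text):
    """Approximate US school grade needed to read the text."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        return 0.0
    syllables = sum(count_syllables(w) for w in words)
    return (0.39 * len(words) / len(sentences)
            + 11.8 * syllables / len(words)
            - 15.59)

plain_copy = "We sell shoes. They fit well. Buy a pair today."
dense_copy = ("Our organization manufactures exceptionally comfortable footwear "
              "utilizing sustainable materials and innovative engineering.")
print(round(flesch_kincaid_grade(plain_copy), 1))
print(round(flesch_kincaid_grade(dense_copy), 1))
```

Score every page in a section, line the grades up against conversions or time on page, and you have the start of that bulk comparison.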

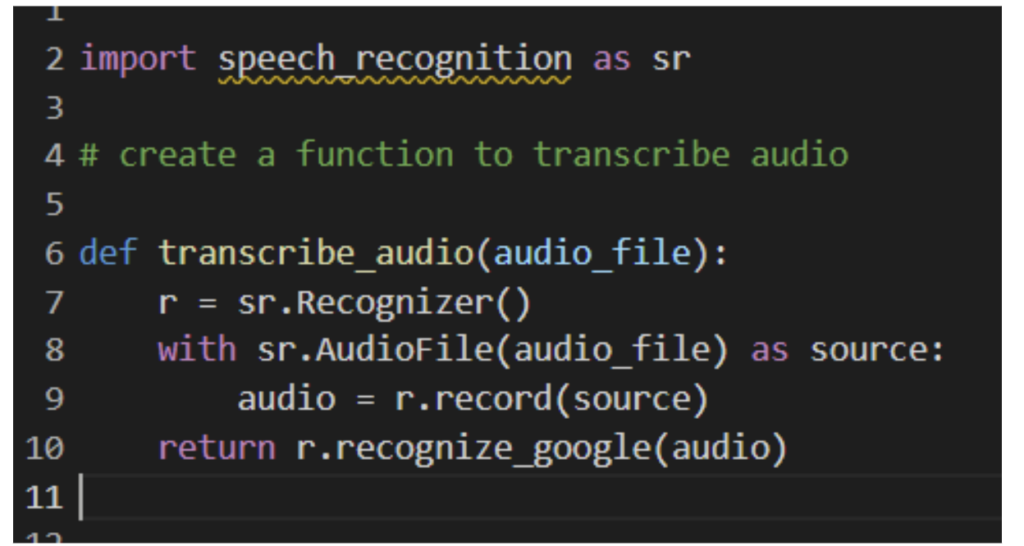

Video and audio transcription

If you work with video and audio, you must have transcripts. If you are a small studio or a one person media creation machine, video and audio transcripts can be burdensome. I will caution you against fully relying on speech recognition to make transcripts, but it can give you the bulk of your transcript. You can then go through and correct or fix any issues, saving yourself plenty of time while making sure your audience can access your content, even if they hate your voice.

Some tips for audio/video: do not transcribe foreign languages with a blanket expression such as “[foreign speaking]”; if someone who speaks Spanish and English would understand that text in an audio medium, they should be able to read it as well.

Also, don’t censor your subtitles! It might be tempting in a bid to please the ever-present GOOG, but transcripts and subtitles are supposed to truly reflect the content involved. Doing it any other way is a disservice to your audience!

Schema opportunities

I put this one together because I saw a tweet from Lidia Infante:

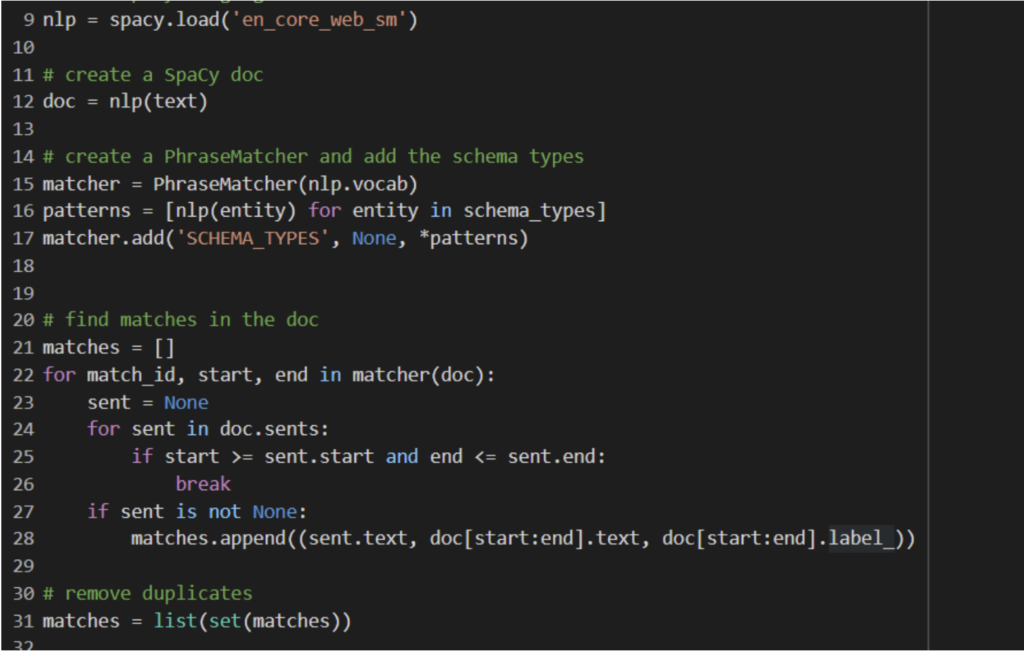

It got me thinking: how could you do that? The colab has a rough implementation, using spaCy.

Jess, you might say, please don’t show me code like this, it makes me scared.

It’s pretty simple, my friend. I’m using spaCy “PhraseMatcher”, which is an industry solution to efficiently match large terminology lists. Here, I’m using NLP to create a list of entities plus schema, and then looking for entities in the text that might match those schema entities. You could definitely make this a neater solution; adding a few more PhraseMatcher rules could make a totally streamlined schema machine.
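To show the idea without any dependencies, here is a plain-Python stand-in for the phrase matching step. It is not spaCy’s PhraseMatcher and it is not the colab code, just the same concept in miniature: a terminology list mapped to schema.org types, checked against page text. The phrase list is hypothetical.

```python
import re

# Hypothetical terminology list: phrase -> schema.org type it might suggest
SCHEMA_HINTS = {
    "frequently asked questions": "FAQPage",
    "recipe": "Recipe",
    "opening hours": "LocalBusiness",
    "step by step": "HowTo",
    "customer reviews": "Review",
}

def find_schema_opportunities(text, hints=SCHEMA_HINTS):
    """Return {schema_type: [matched phrases]} for every hint found in the text."""
    normalized = re.sub(r"\s+", " ", text.lower())
    found = {}
    for phrase, schema_type in hints.items():
        # Word boundaries stop 'recipe' from matching inside a longer word
        if re.search(rf"\b{re.escape(phrase)}\b", normalized):
            found.setdefault(schema_type, []).append(phrase)
    return found

page_text = "Our step by step guide includes customer reviews and a recipe card."
opportunities = find_schema_opportunities(page_text)
print(opportunities)
```

Exact string matching like this misses plurals and rewordings, which is one reason to reach for a proper matcher once the list grows.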

Content calendar

One thing I think is a fun way to use NLP is to create your content calendar.

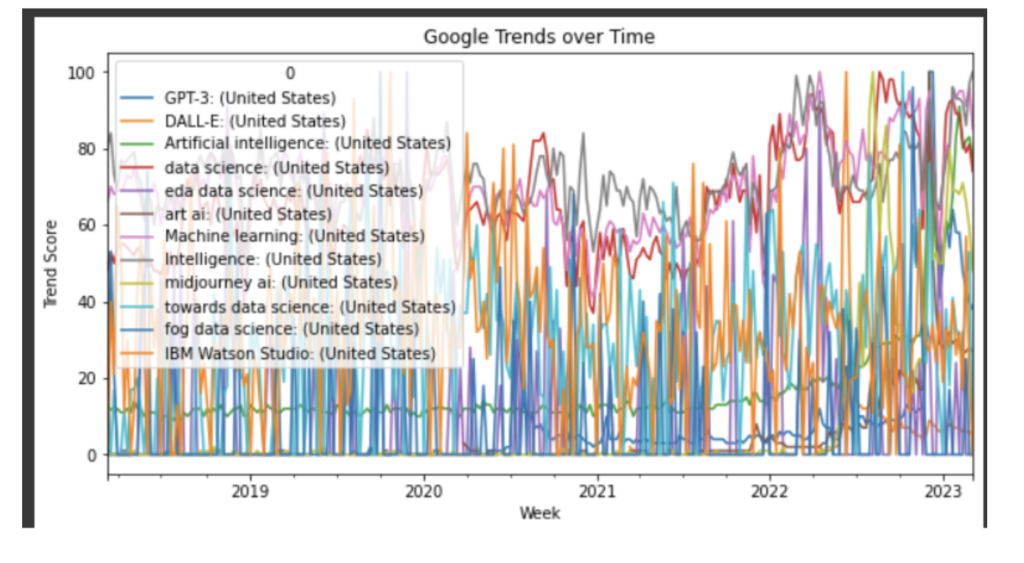

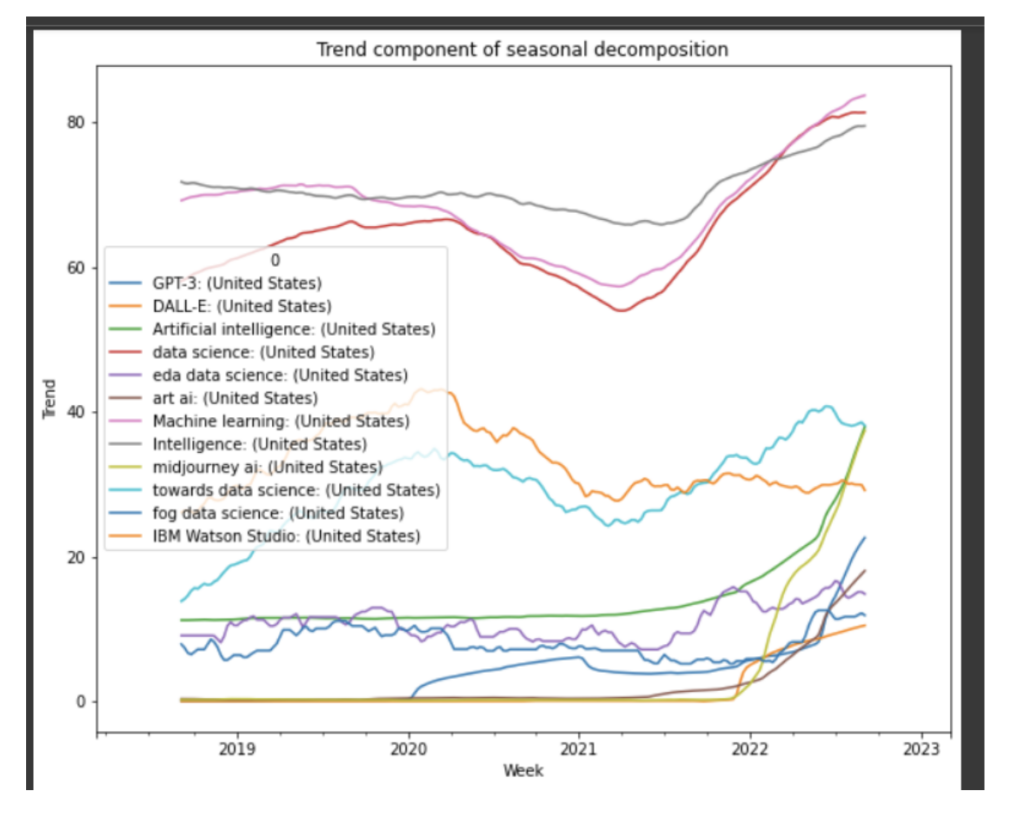

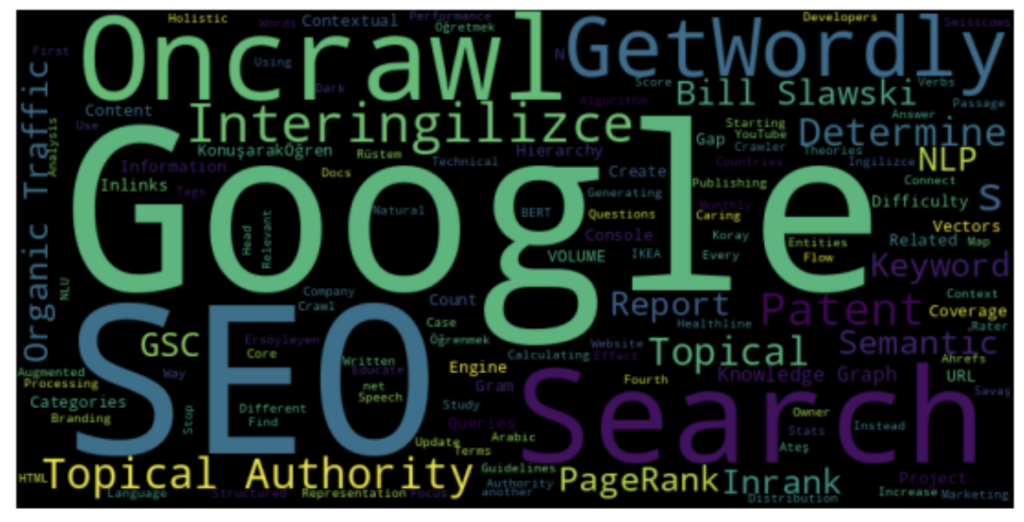

For this, I pulled trends data for a trend over time, then disambiguated it out to get other trends. Google’s Trends API is very finicky, so to save us all a headache, I saved these weekly trends in a Google sheet.

You can then use that to track trends over time and track seasonal decomposition. This can let you see when different trends spike; if certain ones always seem to spike in spring, maybe that’s a good time to put out your own content on that topic.

So, where does NLP come into this?

Well, if you use the topic code I suggested up there, you can look at search trends for your own site or even competitor sites: you could pull in data from social media and chart what topics tend to spike over seasons. If you get popular content at certain times of the year and extract the NLP info, you can create a content calendar that is based on data and one that might include topics you hadn’t thought of before!
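Here is a small sketch of the seasonal part, assuming you already have weekly interest numbers for one topic (for example from that saved sheet). It averages interest per calendar month across all years and divides by the overall average, so anything above 1.0 is a month where the topic runs hot. The data points are invented, and this is a simple index rather than a full seasonal decomposition.

```python
from collections import defaultdict
from datetime import date

def seasonal_index(weekly_interest):
    """Map each calendar month to its average interest / overall average."""
    by_month = defaultdict(list)
    for week_start, interest in weekly_interest:
        by_month[week_start.month].append(interest)
    overall = sum(value for _, value in weekly_interest) / len(weekly_interest)
    return {
        month: (sum(values) / len(values)) / overall
        for month, values in sorted(by_month.items())
    }

# Hypothetical weekly interest for one topic across two years
garden_furniture = [
    (date(2021, 4, 5), 80), (date(2021, 7, 5), 60), (date(2021, 11, 1), 20),
    (date(2022, 4, 4), 90), (date(2022, 7, 4), 50), (date(2022, 11, 7), 30),
]
index = seasonal_index(garden_furniture)
peak_month = max(index, key=index.get)
print({month: round(value, 2) for month, value in index.items()})
print("Peak month:", peak_month)
```

Do this per topic, and the peak months become the skeleton of the calendar: plan to publish a little before each peak.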

Keyword mapping

Keyword mapping is a technique used to identify the most important keywords in a document and then map them to a specific topic. Keyword mapping libraries typically use NLP techniques including named entity recognition, part-of-speech tagging, and TF-IDF/LDA to pull out this content. This is a way you can identify the most valuable content based on the content itself, rather than external data.

Keyword mapping can be pretty accurate at identifying important keywords, but it’s important to remember that it’s not a perfect science.

Theory for nerds

One thing I’ve found extremely useful when going through ML is getting a grasp of some of the theory. Especially for folks who are less STEM-adjacent, understanding at least some of the how and why of things can help. Often people will ask “why did GPT do that? Why can’t Midjourney do hands?” Understanding some of the theory can help answer those questions. This next section will go through some stuff about ML in a hopefully interesting enough way!

Machine learning and neural networks

At this point, we’ve done some machine learning. But what is it? And why won’t people shut up about it?

Traditionally, to make a computer do something, you have to tell the computer to do said thing. Machine learning is a way to make computers do things without explicitly telling them “Do Thing.”

There are two main ways to do machine learning: supervised and unsupervised.


Supervised learning is like having a micromanager, or really, it’s like BEING a micromanager. You provide the computer with a set of labeled data and it learns from those examples to predict the labels of new data. It’s like Pavlovian learning: if you ring a bell, the machine will expect to eat.

Imagine you want to train a computer to recognize different types of screams. You would provide the computer with a labeled dataset of screams, with each scream labeled by type of scream. The computer would learn from these labeled examples and use that knowledge to predict the type of scream in new, unlabeled audio clips. But, if you present it with a dog woofing, it probably won’t know what to do with that.

As the micromanaging supervisor, you then have a choice: do you expand your app to understand dogs’ woofing? That would involve labelling and uploading a wide dataset of dog noises. Or do you decide that dog noises are out of scope?

Unsupervised learning, on the other hand, is like trying to learn to swim for the first time after being dropped in the middle of the ocean. A digital ocean. You provide the computer with a bunch of unlabeled data and hope it finds patterns and clues in that data.

Think about it like this: you analyze the dialogue of characters in a TV show to find patterns in their speech. You provide the computer with an unlabeled dataset of transcripts from the show and let it find similarities and differences between the characters’ speech patterns. The computer might discover that one character says “bazinga” more than others, etc.

There are different types of machine learning algorithms, such as classification algorithms and regression algorithms.

Classification algorithms are used to sort data into different categories or classes, while regression algorithms are used to predict continuous numerical values.

For example, say you have a dataset of customer complaints, with each complaint labeled as either a product defect, a customer service issue, or a delivery problem. A classification algorithm would look at the input features of each complaint (such as keywords or phrases) and use that information to predict to which category each complaint belongs.

Popular classification algorithms include Naive Bayes algorithms and Support Vector Machines. Naive Bayes algorithms use Bayesian statistics to figure out the probability that each class is the correct label, while Support Vector Machines draw lines (hyperplanes) to separate different classes as much as possible.
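To make the Naive Bayes part concrete, here is a tiny from-scratch classifier for the complaint example. It is a teaching sketch (multinomial Naive Bayes with add-one smoothing) trained on six invented complaints, so don’t expect it to generalize. In real work you would use a library implementation and far more data.

```python
import math
import re
from collections import Counter, defaultdict

class NaiveBayesTextClassifier:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, texts, labels):
        self.word_counts = defaultdict(Counter)  # label -> word -> count
        self.label_counts = Counter(labels)
        for text, label in zip(texts, labels):
            self.word_counts[label].update(self._tokenize(text))
        self.vocabulary = {w for c in self.word_counts.values() for w in c}
        return self

    def predict(self, text):
        # Words never seen in training carry no information, so skip them
        tokens = [t for t in self._tokenize(text) if t in self.vocabulary]
        total_docs = sum(self.label_counts.values())
        best_label, best_score = None, -math.inf
        for label, doc_count in self.label_counts.items():
            counts = self.word_counts[label]
            denominator = sum(counts.values()) + len(self.vocabulary)
            # Log prior plus summed log likelihoods avoids tiny-number underflow
            score = math.log(doc_count / total_docs)
            score += sum(math.log((counts[t] + 1) / denominator) for t in tokens)
            if score > best_score:
                best_label, best_score = label, score
        return best_label

    @staticmethod
    def _tokenize(text):
        return re.findall(r"[a-z']+", text.lower())

# Invented training data for illustration
complaints = [
    ("the zipper broke after one day", "product defect"),
    ("screen arrived cracked and broken", "product defect"),
    ("support agent was rude on the phone", "customer service"),
    ("nobody answered my support email", "customer service"),
    ("package arrived two weeks late", "delivery"),
    ("courier left the package at the wrong address", "delivery"),
]
texts, labels = zip(*complaints)
classifier = NaiveBayesTextClassifier().fit(texts, labels)
print(classifier.predict("my package is late"))
```

The “naive” part is the assumption that every word counts independently of the others, which is wrong but works surprisingly well for short texts.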

Regression algorithms, such as linear regression, are commonly used to predict numeric values, including future ones. For example, they can be used to predict stock prices or the cost of a house. (Logistic regression, despite the name, is normally used for classification: it predicts the probability that something belongs to a class.) When building a regression model, it’s important to select the right input features to accurately predict the output value. Techniques like correlation analysis, principal component analysis (PCA), and regularization can help with this.

To evaluate the accuracy of a regression model, metrics like mean squared error (MSE), mean absolute error (MAE), and R-squared are commonly used. These metrics help to assess the accuracy of the model and determine whether it’s performing well on the test data.
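These three metrics are short enough to write out by hand, which makes it easier to see what each one measures. The house prices below are invented, in thousands, and the function names simply mirror the metric names.

```python
def mean_squared_error(actual, predicted):
    """Average of squared errors; punishes big misses more than small ones."""
    return sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)

def mean_absolute_error(actual, predicted):
    """Average size of the error, in the same units as the data."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)

def r_squared(actual, predicted):
    """Share of the variation in the data that the model explains."""
    mean_actual = sum(actual) / len(actual)
    residual = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    total = sum((a - mean_actual) ** 2 for a in actual)
    return 1 - residual / total  # undefined if every actual value is identical

# Invented house prices, in thousands
actual_prices = [100, 150, 200, 250]
predicted_prices = [110, 140, 210, 240]

mse = mean_squared_error(actual_prices, predicted_prices)
mae = mean_absolute_error(actual_prices, predicted_prices)
r2 = r_squared(actual_prices, predicted_prices)
print(mse, mae, round(r2, 3))  # 100.0 10.0 0.968
```

Every prediction here is off by 10, so MAE is 10 and MSE is 10 squared. An R-squared of 0.968 says the predictions capture most of the spread in prices.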

Neural networks are another type of machine learning algorithm that are inspired by the structure and function of the human brain. They are often used for tasks such as image recognition, speech recognition and natural language processing. There are different types of neural networks, such as feedforward neural networks, convolutional neural networks, and recurrent neural networks. Each type is used for a specific purpose, such as image recognition or sequential data analysis.

Word embeddings and vectorization

In NLP, one of the biggest challenges is to represent words in a way that a computer can understand. This is where word embeddings and vectorization come in.

The bag-of-words model is one way to represent text data in a vectorized format. This model counts the occurrence of words in a document and creates a vector that represents the frequency of each word. However, this model doesn’t capture the relationships between words, which can limit its usefulness in certain NLP tasks.

Let’s say we have a sentence: “The quick brown fox jumps over the lazy dog.” The bag-of-words model would represent this sentence as a set of words, like this:

{“the”, “quick”, “brown”, “fox”, “jumps”, “over”, “lazy”, “dog”}

We can also represent the frequency of each word in the sentence using a vector, with one entry per word in the order above:

[2, 1, 1, 1, 1, 1, 1, 1]

This vector tells us that “the” appears twice (once as “The”) and every other word appears once.
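Here is a short sketch of a bag-of-words count using the standard library. It lowercases the text first, so “The” and “the” are counted as the same word, which means “the” ends up with a count of 2.

```python
import re
from collections import Counter

def bag_of_words(text):
    """Lowercase the text, split it into words, and count each word."""
    tokens = re.findall(r"[a-z']+", text.lower())
    return Counter(tokens)

sentence = "The quick brown fox jumps over the lazy dog."
counts = bag_of_words(sentence)
vocabulary = list(counts)  # words in order of first appearance
vector = [counts[word] for word in vocabulary]
print(vocabulary)
print(vector)
```

Notice what gets thrown away: word order. “Dog jumps over the fox” would produce an almost identical bag, which is the limitation mentioned above.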

Word2Vec and GloVe algorithms are two popular approaches to creating word embeddings, which are vector representations of words that capture the relationships between them. Word2Vec creates embeddings by predicting the context of a word, while GloVe creates embeddings by considering the co-occurrence statistics of words in a corpus.

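Here’s a simplified, illustrative example of what a Word2Vec embedding for the word “king” might look like (real embeddings usually have hundreds of dimensions):

[0.2, 0.1, -0.3, 0.5, 0.8, -0.2, -0.1, 0.4, 0.6, 0.2]

On its own, this vector doesn’t say much. Its meaning comes from comparison: in a trained model, the vector for “king” sits close to the vectors for words like “queen,” “prince,” and “monarch,” which tells us they are similar in meaning and context.

A GloVe embedding for “king” has the same shape, although a real model would produce different numbers than Word2Vec does:

[0.2, 0.1, -0.3, 0.5, 0.8, -0.2, -0.1, 0.4, 0.6, 0.2]

This vector is learned from the co-occurrence statistics of “king” with other words in a corpus, so “king” ends up near words it frequently co-occurs with, like “queen,” “prince,” and “monarch.”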

The GloVe algorithm uses matrix factorization to find embeddings that capture these co-occurrence statistics, resulting in a vector representation that captures both the semantic and syntactic relationships between words.

Term frequency-inverse document frequency (tf-idf) weighting is another technique used in vectorization. This method weighs the importance of each word in a document based on its frequency in that document and its rarity in the corpus as a whole.

Here’s an example of tf-idf weights for a set of documents:

| Document | Word | tf-idf weight |
|---|---|---|
| 1 | dog | 0.2 |
| 1 | cat | 0.1 |
| 2 | dog | 0.3 |
| 2 | cat | 0.3 |
| 3 | dog | 0.1 |
| 3 | cat | 0.4 |

This table tells us that the word “cat” is more important in document 3, while the word “dog” is more important in document 2.

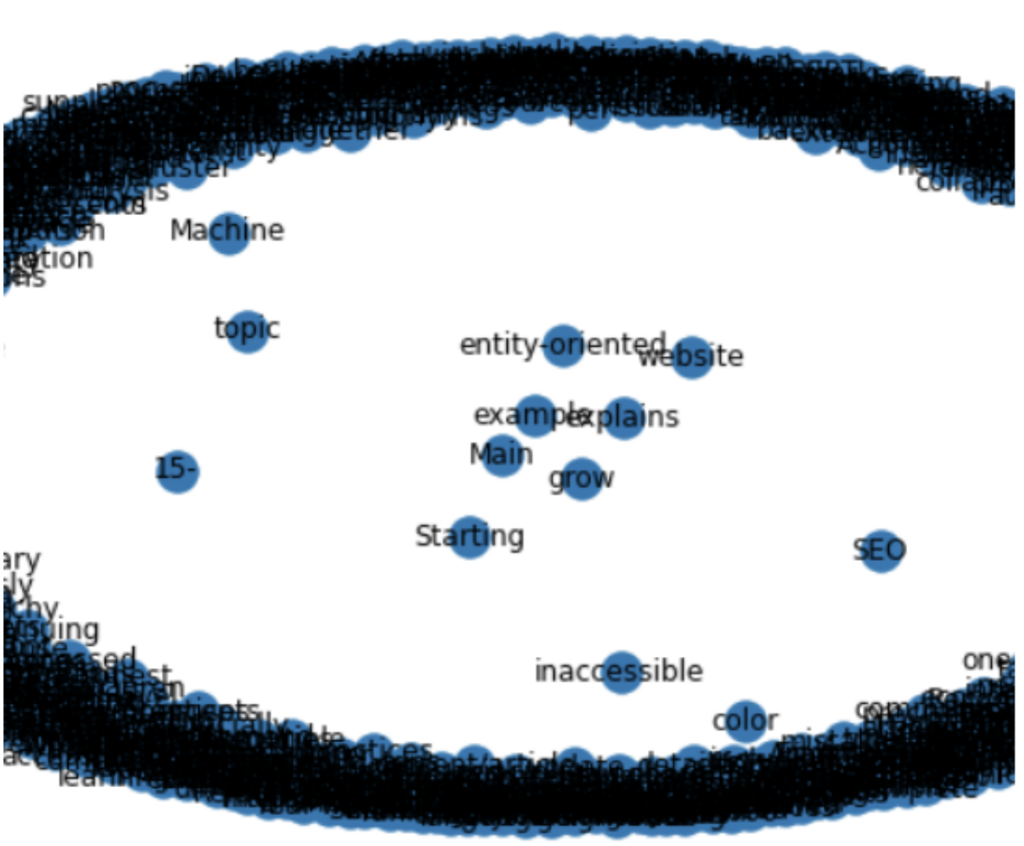

Dimensionality reduction techniques like principal component analysis (PCA) can be used to reduce the dimensionality of the vectorized data. This can be useful for visualizing the data and improving the performance of machine learning models that are trained on the data. By reducing the number of dimensions, the data becomes more manageable while still preserving the most important information.

Semantic analysis and topic modeling

Now, let’s dive into some more technical details and talk about semantic analysis and topic modeling! This is how you figure out what people are actually talking about with all this NLP stuff.

First up, we have Latent Semantic Analysis (LSA). This is like a language detective who can figure out the hidden meaning behind words.

LSA uses a mathematical technique called singular value decomposition to identify patterns and relationships between words in a large corpus of text.

Think of it this way: imagine you’re reading a bunch of book reviews. Some people might use the word “amazing” to describe a book, while others might say “incredible.”

LSA looks for patterns in these reviews and tries to group together similar words that are used to describe the same thing. So, it would group “amazing” and “incredible” together because they both mean something is really good.

“Singular value decomposition” breaks down the relationships between words in a text into smaller pieces, like building blocks. These pieces are then analyzed to identify the most important ones that define a particular topic or concept. LSA rearranges these important pieces to create a summary or representation of the text that captures its underlying meaning.

Next, we have Latent Dirichlet Allocation (LDA). This is like a party planner who can help us figure out what topics are most likely to be popular given a group or “text”. LDA works by assuming that each document (or piece of text) is made up of a combination of different topics and then tries to figure out what those topics actually are.

In a collection of, say, news articles, LDA could help us identify that one article is mostly about politics, another is about sports, and yet another is about celebrity gossip. This can help us quickly sort and categorize large volumes of text data.

Thirdly, we have Non-negative Matrix Factorization (NMF). This is like a DJ who can remix words and phrases to create new, more meaningful combinations. NMF takes a matrix of words and their frequencies in a corpus, and then breaks it down into two smaller matrices that represent different “topics.” These topics can then be combined to create new, more informative representations of the text.

For instance, let’s say we have a dataset of customer reviews for a restaurant. NMF could help us identify that one topic is related to the food, another is related to the service, and a third is related to the ambiance. We could then combine these topics in different ways to create more informative summaries of the reviews.

Finally, we have word sense disambiguation. This is like a language referee who can make sure everyone is on the same page and using words correctly. Word sense disambiguation helps us figure out which meaning of a word is being used in a given context. For example, the word “bank” could refer to a financial institution or the side of a river. By analyzing the surrounding words and context, we can figure out which meaning is being used.

Some examples of words that change meaning based on context:

- Bat (Baseball, animal)

- Bear (mammal, slang, endure something)

- Slug (mollusc, boxing)

- Rock (mineral, music, Dwayne)
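One classic way to do this is the Lesk approach: pick the sense whose description shares the most words with the surrounding sentence. Below is a simplified sketch of that idea. The mini sense inventory is hand-written and hypothetical; real systems rely on a lexical resource such as WordNet or on contextual embeddings.

```python
import re

# Hypothetical mini sense inventory: word -> sense -> cue words
SENSES = {
    "bank": {
        "financial institution": "money account loan deposit cash savings interest",
        "side of a river": "river water shore fishing mud stream boat",
    },
    "bat": {
        "baseball equipment": "baseball swing hit ball pitcher game",
        "flying animal": "cave wings night fly nocturnal animal",
    },
}

def disambiguate(word, sentence, senses=SENSES):
    """Pick the sense whose cue words overlap most with the sentence."""
    context = set(re.findall(r"[a-z]+", sentence.lower())) - {word}
    overlaps = {
        sense: len(context & set(cues.split()))
        for sense, cues in senses[word].items()
    }
    # On a tie, max() keeps the first sense listed
    return max(overlaps, key=overlaps.get)

print(disambiguate("bank", "She opened a savings account at the bank"))
print(disambiguate("bank", "We went fishing on the bank of the river"))
print(disambiguate("bat", "The bat flew out of the cave at night"))
```

The same word gets a different answer depending on its neighbors, which is the whole trick.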

Natural language processing algorithms

NLP work tends to lean on a core set of tasks, described below.

Part-of-speech tagging is when you assign each word in a sentence a part of speech, such as noun, verb, adjective, etc. This helps us understand the grammatical structure of text and make more sense of it. For example, if we know that a word is a verb, we know that it expresses an action or state of being.

Named entity recognition is when you scan text to find and classify named entities like people, organizations, and locations. This helps us better understand the context and meaning of the text. For example, if we know that a particular word refers to a person, we can make assumptions about their role in the narrative.

Sentiment analysis is a mood ring for text. We analyze the overall sentiment or emotional tone of a piece of text, whether it’s positive, negative, or neutral. This helps us understand how people feel about a particular topic, product, or service. (It’s also sometimes just as accurate as a mood ring.)

Dependency parsing is about identifying the relationships between words in a sentence and trying to figure out how they fit together to build meaning. By identifying the dependencies between words, we can build more accurate models and perform more advanced NLP tasks like semantic analysis and topic modeling. For example, if we know that one word is the subject of a sentence and another is the object, we can infer that the first word is performing an action on the second word.

Common pitfalls and issues

With any technical process, or any process really, there is the possibility of making a mistake. It happens to the best of us. Some of the common ones are detailed below.

Over-optimizing for keywords

Keyword optimization is a thing in SEO but it’s also a thing in NLP. And a common pitfall for both fields is over-optimizing for those keywords. While it can be useful, it can create misleading and inaccurate results. If you focus on some specific areas, you might miss others. For example, if you filter only for “food”-based reviews, you might miss issues or concepts that are just as important elsewhere.

You can also get biased results that way: if you focus on one area, you don’t pick up on another, and you don’t know what you might be missing. I know my client does x and y, but using NLP might help me see the z I’m missing.

Poorly trained AI models/using the wrong model

Another issue you might run into is using poorly trained models or using the wrong model altogether. In my example training data for intent analysis, I focused on purchase intent keywords. But, if I was looking for intent for patient reviews, that data and that model wouldn’t be advisable.

Using a model that isn’t adapted for a specific purpose can really, uh, make things go bad. Like using a language model as an information retrieval model, and that kind of thing.

Lack of diversity in data

Your model is biased. Some of this might be on purpose (biased towards a result, biased towards a field), but likely some of it is inadvertent. Some of these biases might not even matter, but they’re going to be there. If the training data is biased, it will do weird things to the results of the data. Probably, even if you don’t have…

Poorly written content

If you’re writing something using NLP tools, you might not end up with good results. If the input is poorly written, it can be difficult for NLP to accurately analyze it. And, the results don’t just have to be comprehensible to machines, they have to be comprehensible to people too.

Conclusion

Fundamentally, one of the good things about using NLP for SEO is that Google is also using NLP (also for SEO, but in reverse).

The thing about the web, in general, is this:

A searcher (a person) uses Google (a collection of algorithms) to find sites (machine code) written by marketers (people, probably). NLP is how you make all these elements work together; understanding it helps you understand how search engines look at your site.

Additionally, we are collectively in a new era of Talking About Words and Computers. Hopefully, this guide has given you some new tools to understand the what, how, and why of how these algorithms work.