Generative AI (GenAI) is reshaping how we create and consume content. It’s a powerful tool, but when something consumes the internet, it will reflect the good, the bad and the biases. These biases are not technical glitches. They reflect our societal issues, including outdated SEO practices that prioritize certain narratives over others.

Think of GenAI as a puppy that was trained by someone else and has learned from its environment. This puppy can learn to like and dislike the same people its trainer likes or dislikes. As a society, we accept that you can train a puppy to become a guide dog or a guard dog. We need to take the same approach when using GenAI, being conscious of how it learns and deliberate in how we use it to shape our content and narratives.

Understanding GenAI bias

Like a puppy, GenAI learns from its environment. The content it consumes, often a mirror of what’s available online, shapes its behavior. To understand how content from the internet reflects our bad SEO habits, we first need to look at the different types of bias that exist.

Data bias

Data bias occurs when AI training datasets don’t accurately reflect the diversity of real-world populations. This lack of diversity in the training data shapes how AI behaves and decides. It can lead to AI systems that are ill-equipped to understand or represent the experiences of many groups, setting the stage for further bias in how these systems operate.

As a real-world example of data bias, Dr. Joy Buolamwini’s research from 2016 showed that facial recognition technologies often work poorly on darker-skinned women compared to lighter-skinned men. The cause is a disparity in the training data used for these systems: the data includes fewer images of darker-skinned individuals, leading to inaccurate results.

Furthermore, research estimated only 27% of training data used for ChatGPT-3 was authored by women. Consequently, AI might unintentionally prioritize male perspectives simply because they were more prevalent in the data.

Algorithmic bias

We unknowingly interact with algorithms in most aspects of our lives, whether we are browsing social media, searching for information online or even using GPS navigation. Algorithmic bias occurs when these systems process information in ways that unfairly favor or disadvantage certain groups.

In her book Algorithms of Oppression, Safiya Umoja Noble explores how search algorithms can reinforce existing biases, affecting what information is shown and to whom.

For instance, a search for a specific profession might show images that predominantly feature one gender or race, thereby reinforcing stereotypes rather than reflecting reality.

This type of bias is particularly tricky to spot because these systems often operate as a “black box”; their decision-making processes aren’t easily understood, even by their developers. The lack of transparency makes it challenging to identify where and why certain decisions are being made, let alone correct them.

The problem deepens with more complex models like GenAI, which run on Large Language Models (LLMs). These systems don’t just reflect the biases in their training data; they can amplify and expand them in unexpected ways.

User interaction bias

User interaction bias arises when the prompts and responses reflect existing societal prejudices. The model is influenced by how users interact with it, so by using these GenAI tools, we actively take part in the training.

And when we say “we actively take part”, it’s important to note that full access to GenAI tools typically sits behind a paywall. The “we” is predominantly limited to those who can afford it, giving them disproportionate influence over the model’s behavior.

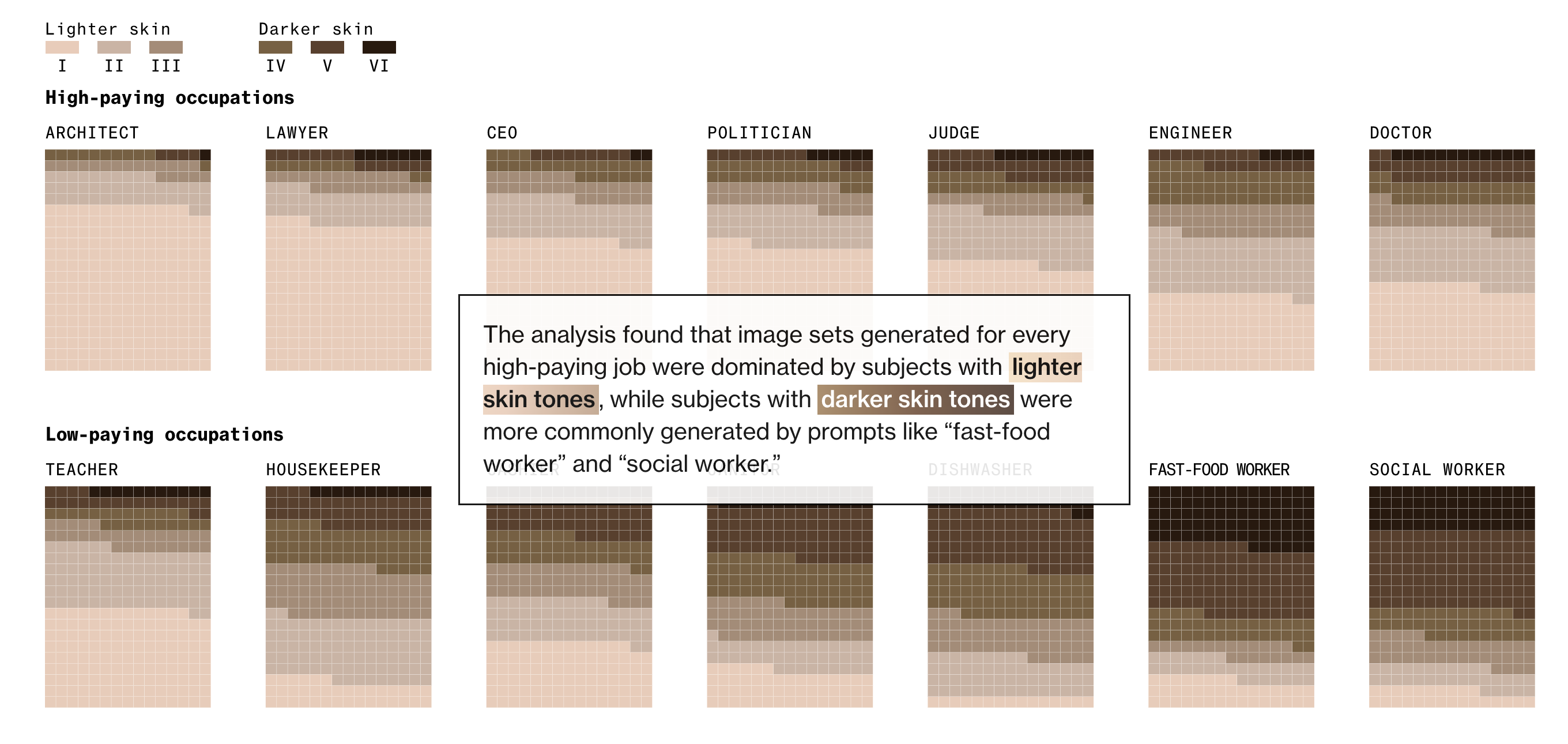

A Bloomberg study found that when generating content, GenAI tools tend to mirror prevailing stereotypes. For example, prompts related to professional roles often resulted in gendered or racially biased imagery and descriptions, reflecting the unequal representation in the training data.

Source: Bloomberg

When users consistently favor these types of responses, the AI learns to amplify these patterns, creating a feedback loop where biases become embedded and intensified over time.

Without careful oversight, GenAI can exclude underrepresented voices and perpetuate the very inequities it might otherwise help address.
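To make the feedback loop described above concrete, here is a minimal toy sketch in Python. It is not a model of how any real GenAI system is trained. The function name, the `preference` weight and the update rule are all assumptions chosen for illustration: each round, the share of stereotyped outputs is reweighted by how much more often users favor them.

```python
def simulate_feedback_loop(initial_share, preference, rounds):
    """Return the share of stereotyped outputs after each round.

    initial_share: starting fraction of stereotyped outputs (between 0 and 1).
    preference: hypothetical weight for how much more often users favor a
        stereotyped output than any other output (1.0 means no preference).
    rounds: number of feedback rounds to simulate.
    """
    shares = [initial_share]
    share = initial_share
    for _ in range(rounds):
        favored_stereotyped = share * preference
        favored_other = 1.0 - share
        # The next round's share mirrors what users favored in this round.
        share = favored_stereotyped / (favored_stereotyped + favored_other)
        shares.append(share)
    return shares


history = simulate_feedback_loop(initial_share=0.6, preference=1.5, rounds=5)
for round_number, share in enumerate(history):
    print(f"round {round_number}: {share:.1%} stereotyped")
```

In this toy setup, any `preference` above 1.0 pushes the share upward every round, while a value of exactly 1.0 leaves it unchanged. The point is only that a mild, consistent preference can compound over time.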

What does AI think a technical SEO specialist looks like?

When prompted to generate images of SEO professionals, GenAI tools produced stereotyped representations. These AI-generated images often default to narrow demographics, typically depicting technical SEO specialists as predominantly male, predominantly from one ethnic background, and usually shown in generic high-tech office settings with laptops and analytics dashboards.

Source: Gemini

Prompt: Technical SEO Director

These images are a stark reminder that GenAI doesn’t create from a neutral perspective. It creates from patterns in existing content, and when that content has historically underrepresented people outside those narrow demographics in technical roles, the AI perpetuates that erasure.

What the AI “thinks” an SEO professional looks like is simply a reflection of whose voices and faces have dominated the content it learned from. And as more users generate and share these stereotyped images, the feedback loop continues.

What impact does GenAI bias have on SEO?

The ultimate goal in SEO is to ensure our content reaches those who want, need or are looking for that information. While GenAI tends to be associated with the creation of content, it also has direct implications for how that content performs and who it reaches.

Content quality and audience reach

GenAI is currently used to analyze existing content and identify reproducible patterns. Your aim is to produce high-quality content that ranks well in Google, but if AI is trained on content that perpetuates stereotypes or biases, it will generate new content following these same patterns.

A UNESCO study examining major GenAI tools found alarming evidence of regressive gender stereotypes being baked into AI outputs.

As the study notes:

“Every day more and more people are using Large Language Models in their work, their studies and at home. These new AI applications have the power to subtly shape the perceptions of millions of people, so even small gender biases in their content can significantly amplify inequalities in the real world.”

Biased AI can alienate parts of your intended audience, reducing engagement and eroding trust. When users don’t see themselves represented in content, or worse, encounter stereotyped portrayals, they’re more likely to bounce, spend less time on site, and avoid returning. An inclusive approach enhances user experience and broadens market reach.

Personalization limitations

Generative AI can analyze user behavior data – such as browsing history, preferences, demographics – to create personalized content experiences. While this can be great in terms of user experience, it doesn’t account for constantly changing preferences. By the time AI catches up and notices a shift in your behavior, your preferences may have already evolved again.

It also creates filter bubbles that limit what users see. Your socio-economic status or cultural background doesn’t always play a role in how you search, but AI isn’t yet sensitive enough to make that distinction. That is where human oversight will always be necessary.

Accessibility and inclusivity

Is AI recreating accessible content? If not correctly implemented, AI-generated accessibility features can actually hurt your SEO. Flaws like excessive alt text or improper heading hierarchies could be analyzed and reproduced by AI unless we specifically train it otherwise.

The same concern applies to image generation tools like DALL-E, Midjourney, and Generative AI by Getty. If their training sources contain biased representations, those biases transfer directly into the images they create.
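The heading hierarchy and alt text problems mentioned in this section can be caught with a simple automated check before AI-generated pages go live. The sketch below uses only Python's standard `html.parser` module. The class name, the `audit_html` helper and the 125-character alt text limit are assumptions for illustration, not an official threshold.

```python
from html.parser import HTMLParser

MAX_ALT_LENGTH = 125  # assumed limit; adjust to your own guidelines


class AccessibilityAudit(HTMLParser):
    """Collect heading-level jumps and alt text problems from an HTML string."""

    def __init__(self):
        super().__init__()
        self.issues = []
        self.last_heading_level = 0  # 0 means no heading seen yet

    def handle_starttag(self, tag, attrs):
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            level = int(tag[1])
            if self.last_heading_level == 0 and level != 1:
                self.issues.append(f"first heading is h{level}, expected h1")
            elif level > self.last_heading_level + 1:
                self.issues.append(
                    f"heading jumps from h{self.last_heading_level} to h{level}"
                )
            self.last_heading_level = level
        elif tag == "img":
            alt = dict(attrs).get("alt")
            if alt is None:
                self.issues.append("img is missing an alt attribute")
            elif len(alt) > MAX_ALT_LENGTH:
                self.issues.append(f"alt text is {len(alt)} characters long")


def audit_html(html):
    """Return a list of issue descriptions for the given HTML."""
    parser = AccessibilityAudit()
    parser.feed(html)
    parser.close()
    return parser.issues


sample = '<h1>Guide</h1><h3>Setup</h3><img src="a.png"><img src="b.png" alt="Chart">'
for issue in audit_html(sample):
    print(issue)
```

An empty `alt=""` is deliberately not flagged, since it is a valid way to mark decorative images. A check like this only finds structural problems; whether the alt text is accurate and free of stereotypes still needs human review.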

Identifying and mitigating GenAI bias

Now that we know these hurdles exist, what can we do to address them?

Stay aware

Regular audits of AI outputs are essential to catch bias before it reaches your audience. You’ll need to systematically check for stereotypes, exclusionary language, and gaps in representation.

Leveraging diverse datasets and including varied user feedback during the training phases can help identify biases that might not be immediately apparent.

If you’re using third-party GenAI tools where you can’t control the training data, focus on what you can control: your prompts and your human review process.
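As one possible starting point for such an audit, the sketch below tallies gendered terms across a batch of AI-generated drafts. The word lists, function names and sample drafts are illustrative assumptions; a real audit would need broader, carefully reviewed lists and would cover more than gender.

```python
import re
from collections import Counter

# Illustrative word lists only, not a complete or authoritative set.
GENDERED_TERMS = {
    "masculine": {"he", "him", "his", "man", "men"},
    "feminine": {"she", "her", "hers", "woman", "women"},
}


def count_gendered_terms(text):
    """Count masculine and feminine terms in a single piece of text."""
    words = re.findall(r"[a-z']+", text.lower())
    counts = Counter()
    for word in words:
        for label, terms in GENDERED_TERMS.items():
            if word in terms:
                counts[label] += 1
    return counts


def audit_outputs(outputs):
    """Sum the gendered-term counts over a batch of AI-generated texts."""
    totals = Counter()
    for text in outputs:
        totals.update(count_gendered_terms(text))
    return totals


# Hypothetical drafts standing in for real AI outputs.
sample_outputs = [
    "The developer opened his laptop and he fixed the crawl errors.",
    "Our SEO director shared her audit with the client.",
    "The analyst said he would review his report.",
]
totals = audit_outputs(sample_outputs)
print(dict(totals))
```

A skewed tally is not proof of bias on its own, but it is a useful signal for deciding which batches of content deserve a closer human review.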

Keep a human in the loop

To break the cycle of bias in GenAI, we as marketers need to do what we know how to do: focus on genuine user needs through an inclusive lens. Every piece of AI-generated content should pass through human review that specifically looks for bias, exclusion, and accuracy. This means:

- Maintain transparency. Be clear with your audience about when and how you’re using AI, and take accountability for the content it produces.

- Seek diverse perspectives. Engage with stakeholders from underrepresented groups. Their insights will reveal blind spots you might otherwise miss.

- Establish ethical guidelines. Create clear standards for acceptable AI-generated content and enforce them consistently across your team.

Leverage bias-detection tools

Consider using specialized tools, like InClued.ai, which are designed to scan content for biased language, stereotypes and exclusionary terminology. These platforms can flag issues that might slip past human review, particularly in high-volume content creation.
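To give a rough idea of what automated flagging can look like, here is a minimal watchlist scanner in Python. It does not represent how InClued.ai or any other product works. The watchlist entries, suggested alternatives and function name are hypothetical examples.

```python
import re

# Hypothetical watchlist mapping a term to a suggested alternative.
WATCHLIST = {
    "manpower": "workforce",
    "chairman": "chair",
    "guys": "everyone",
    "whitelist": "allowlist",
    "blacklist": "blocklist",
}


def flag_terms(text):
    """Return (line_number, term, suggestion) for each watchlist hit."""
    findings = []
    for line_number, line in enumerate(text.splitlines(), start=1):
        for term, suggestion in WATCHLIST.items():
            # Match whole words only, ignoring case.
            if re.search(rf"\b{re.escape(term)}\b", line, flags=re.IGNORECASE):
                findings.append((line_number, term, suggestion))
    return findings


draft = (
    "We need more manpower for link building.\n"
    "Hey guys, add the domain to the whitelist."
)
for line_number, term, suggestion in flag_terms(draft):
    print(f"line {line_number}: consider replacing '{term}' with '{suggestion}'")
```

A fixed word list misses context and will produce false positives, which is why a scanner like this works best as a first pass ahead of human review rather than a replacement for it.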

Closing thoughts

The responsibility for mitigating AI bias doesn’t rest with developers alone; it sits with everyone using these tools.

As SEO professionals and marketers, we should all be training our own puppies. We are shaping the content that will influence how AI systems understand and represent our industries and audiences.

Eliminating bias entirely may not be immediately achievable, but that doesn’t mean we can’t make significant progress. It starts with staying vigilant, recognizing the potential for bias, implementing human oversight and making deliberate choices about how we use these tools.

The biases baked into GenAI won’t disappear on their own, but with consistent auditing, diverse perspectives and a commitment to inclusive practices, we can ensure our AI-generated content reaches and resonates with the audiences who need it most.