A deep dive into the real challenges, environmental impact, and practical solutions for using LLMs in SEO without breaking the bank or the planet.

The reality check we all need

Let’s be honest, we’re all experimenting with AI in SEO. Whether it’s ChatGPT for content ideation, Claude for technical analysis, or any number of other LLMs for scaling our processes, AI has become the shiny new toy everyone wants to play with.

But scaling with AI isn’t just about business impact anymore. It’s about understanding the real-world consequences of our choices and finding ways to grow responsibly, even when budgets are tight.

Full warning: this is going to be a bit depressing, but very much needed! It’s also not intended as a sanctimonious sermon. I’m ‘guilty’ as much as the next person. In fact, the first draft of this piece was AI-generated based on my Women in Technical SEO Fest presentation.

The aim is not to throw stones. It’s to encourage us all to think about using AI more responsibly.

The uncomfortable truth about LLM impact

Let’s start with what we all know: it’s risky for a business to just leave it all to AI.

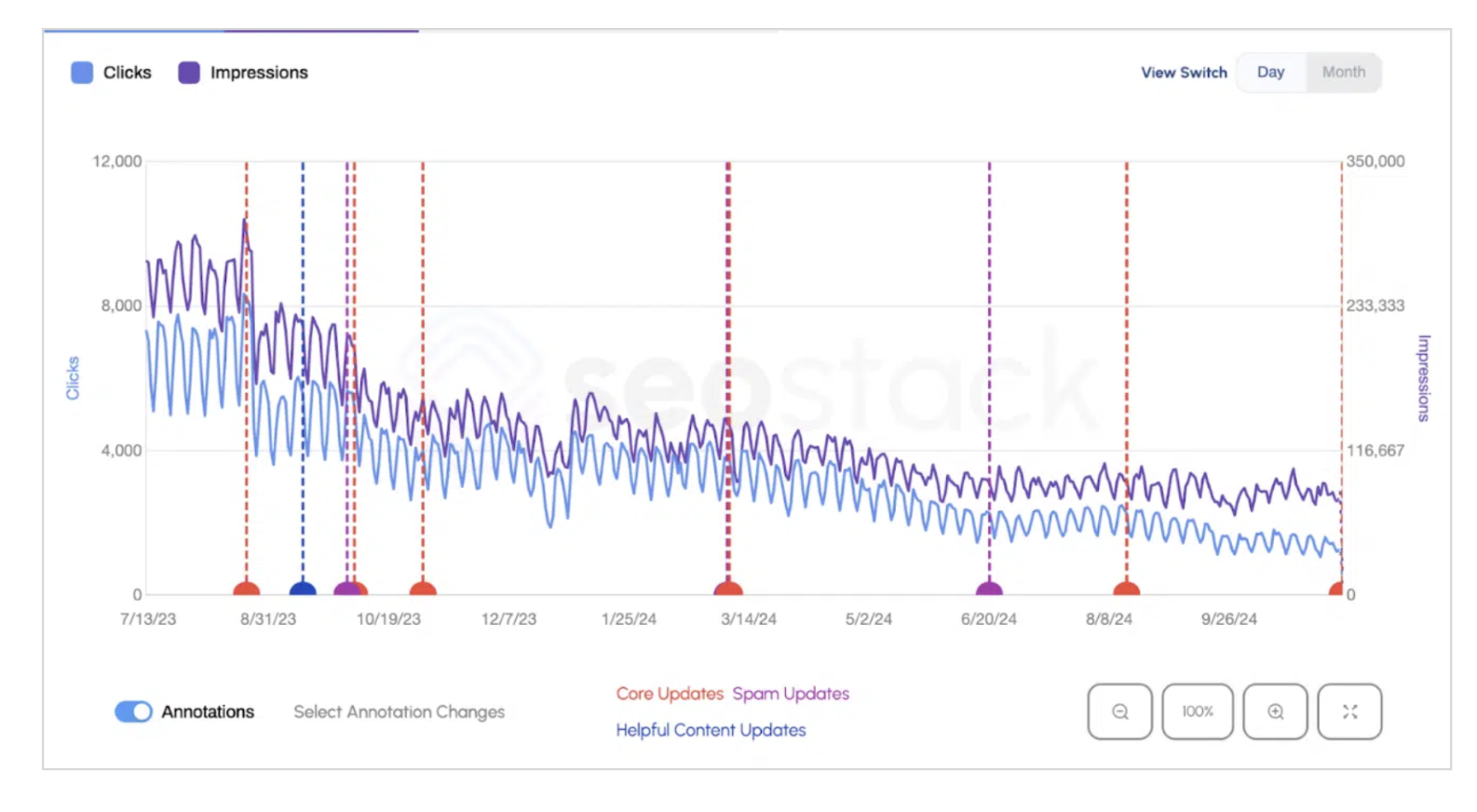

Recently, I worked on a project where the company produced thousands of pages using an SEO shortcut – AI-powered programmatic. It worked, until it didn’t. Google rolled out their Helpful Content Update, and the site tanked.

The business lost most of its revenue as a result. But it’s not just about the business impact – far more worrying numbers come into play when you look at the impact LLMs have on the world we live in…

Energy consumption: More than you think

LLMs are hungry for energy and water, and they leave a huge carbon footprint!

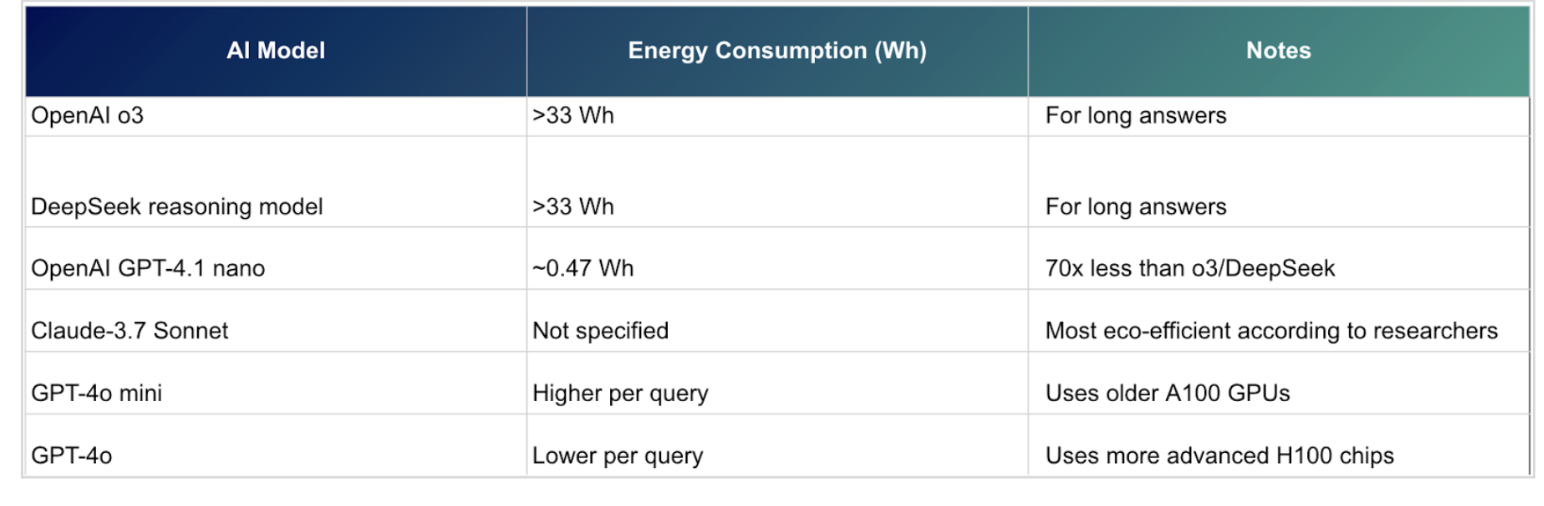

A single long response from OpenAI’s o3 or DeepSeek’s reasoning model consumes over 33 Wh of energy. That’s like running your laptop for half an hour for one AI response. Even the more efficient models like GPT-4.1 nano still use around 0.47 Wh per query.

Obviously, one could just keep queries short and conserve energy that way. The issue is that, in our experience, people don’t tend to keep queries short. In fact, we tend to treat chatbots like humans. We forget that every “please” and “thank you” said to ChatGPT costs millions of dollars.

Even when we do keep it ‘brief’, the issue is scale of adoption. A single brief GPT-4o prompt is about 0.43 Wh, which sounds reasonable. Here’s where it gets really wild: scale GPT-4o usage up to the estimated 700 million daily calls and the annual estimate comes to 392-463 gigawatt hours. That’s enough to power 35,000 homes for a year.
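For anyone who wants to sanity-check numbers like these, here is a rough back-of-envelope sketch in Python. The function names are made up for illustration; the inputs are the figures quoted above. One thing it shows: 0.43 Wh times 700 million calls times 365 days gives roughly 110 GWh, so the 392-463 GWh range implies an average nearer 1.5-1.8 Wh per call. A plausible reading (an assumption, not something the figures above state) is that the range accounts for longer prompts as well as brief ones.

```python
# Back-of-envelope check of the GPT-4o energy figures quoted in the text.
WH_PER_BRIEF_QUERY = 0.43        # brief GPT-4o prompt, from the text
QUERIES_PER_DAY = 700_000_000    # estimated daily calls, from the text
DAYS_PER_YEAR = 365


def annual_gwh(wh_per_query: float, queries_per_day: int) -> float:
    """Annual energy in gigawatt hours (1 GWh = 1e9 Wh)."""
    return wh_per_query * queries_per_day * DAYS_PER_YEAR / 1e9


def implied_wh_per_query(gwh_per_year: float, queries_per_day: int) -> float:
    """Average Wh per query that a given annual total would imply."""
    return gwh_per_year * 1e9 / (queries_per_day * DAYS_PER_YEAR)


# Plain multiplication using only brief prompts.
naive_gwh = annual_gwh(WH_PER_BRIEF_QUERY, QUERIES_PER_DAY)

# Average per-query energy implied by each end of the quoted annual range.
implied_low = implied_wh_per_query(392, QUERIES_PER_DAY)
implied_high = implied_wh_per_query(463, QUERIES_PER_DAY)

# Average annual use per home implied by "35,000 homes", in MWh.
mwh_per_home = 392 * 1000 / 35_000

print(f"Brief prompts only: {naive_gwh:.0f} GWh per year")
print(f"Implied average: {implied_low:.2f} to {implied_high:.2f} Wh per query")
print(f"Implied household use: {mwh_per_home:.1f} MWh per year")
```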

Carbon emissions: The hidden cost

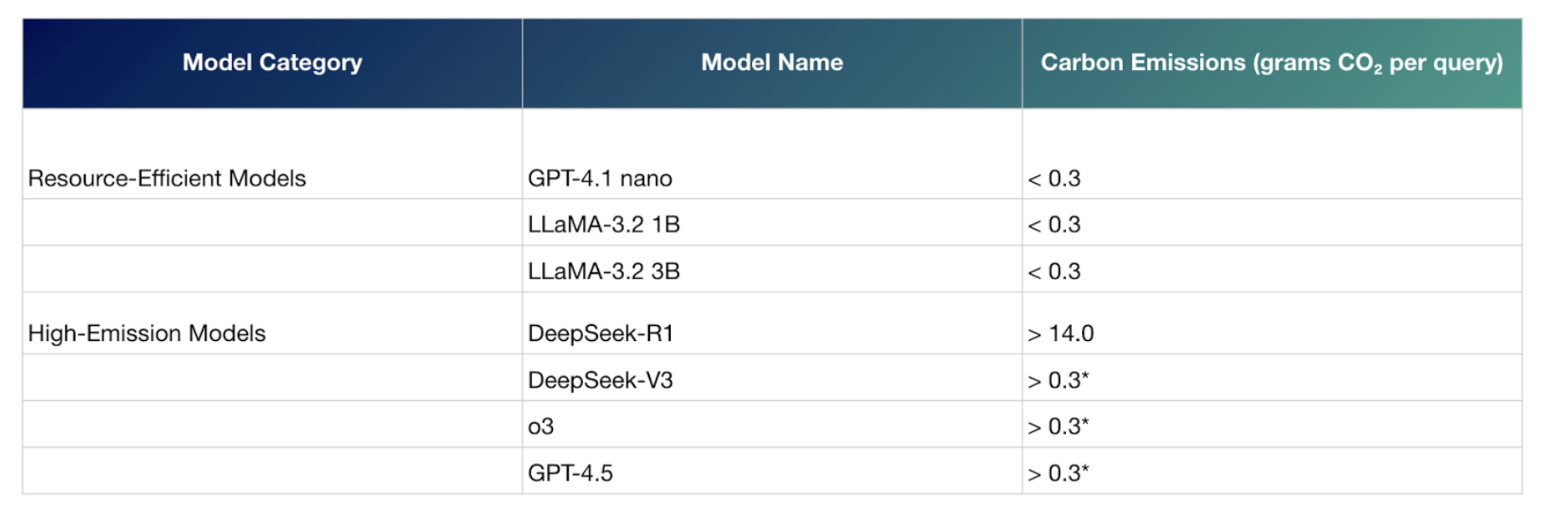

Unsurprisingly, the carbon footprint also varies dramatically between models.

Resource-efficient options like GPT-4.1 nano produce less than 0.3 grams of CO₂ per query, while DeepSeek-R1 pumps out over 14 grams per query.

GPT-4o’s annual emissions equal 30,000 gasoline-powered cars or 2,300 Boston to London flights.

I couldn’t find data on grams per query for Gemini, but the usage has grown from 9.7 trillion to 480 trillion tokens per month (that’s 50x growth in 12 months!). At the same time, Google’s emissions climbed nearly 50% in five years due to AI energy demand.

Water consumption: The forgotten resource

GPT-4o’s annual usage requires the same amount of water as 1.2 million people need for drinking purposes. Let that sink in for a moment…

The good news is that many of these companies are working on developing more efficient models. The challenge is that any efficiency gains are, at the moment, offset by rapid expansion in use.

And 90% of a model’s total lifecycle energy use is in inference, not training!

Beyond the environmental impact

There’s also the elephant in the room: data privacy and transparency. With ongoing cases like the NYT vs OpenAI litigation highlighting data preservation concerns, we need to be extra careful about what client data we’re feeding into these models.

Trust is eroded on several other levels, such as bias and the tendency to hallucinate. Back in 2022, when ChatGPT came out, I went head-to-head with the model to write a piece on how AI is changing SEO. What was telling wasn’t the write-up so much as the image DALL-E gave me to accompany the piece.

If I were a man, I would not be standing in this ring like I’m selling cars in the 70s! There is a danger that these tools are learning from a place of misogyny and other biases that lurk online.

Far more devastating impacts can be found further afield. A couple of recent examples include the Microsoft-powered bot that advised bosses to take workers’ tips and landlords to discriminate based on source of income, and the iTutorGroup recruiting software that turned out to be ageist.

The newest DeepSeek V3 model and the recently launched Alibaba Qwen 2.5 present a small opportunity to deal with at least some of the environmental and sustainability issues. Ben Thomson has written a great article explaining the ins and outs of the V3 model.

The challenge here is the privacy side of things. DeepSeek’s newest model has already been hacked. Only days after release, there are security questions around Qwen as well. Not that I’m saying the US models are bulletproof, either!

I could go on further… For anyone interested in digging into this area, check out the European Union AI Act.

Getting practical: How I’ve been experimenting

Despite these concerns, I’m not suggesting we abandon AI entirely. Instead, I’ve been testing ways to use it more responsibly. Here are three examples we are running at our digital marketing agency, Vixen Digital, to inspire you:

1. Reddit mining for audience research

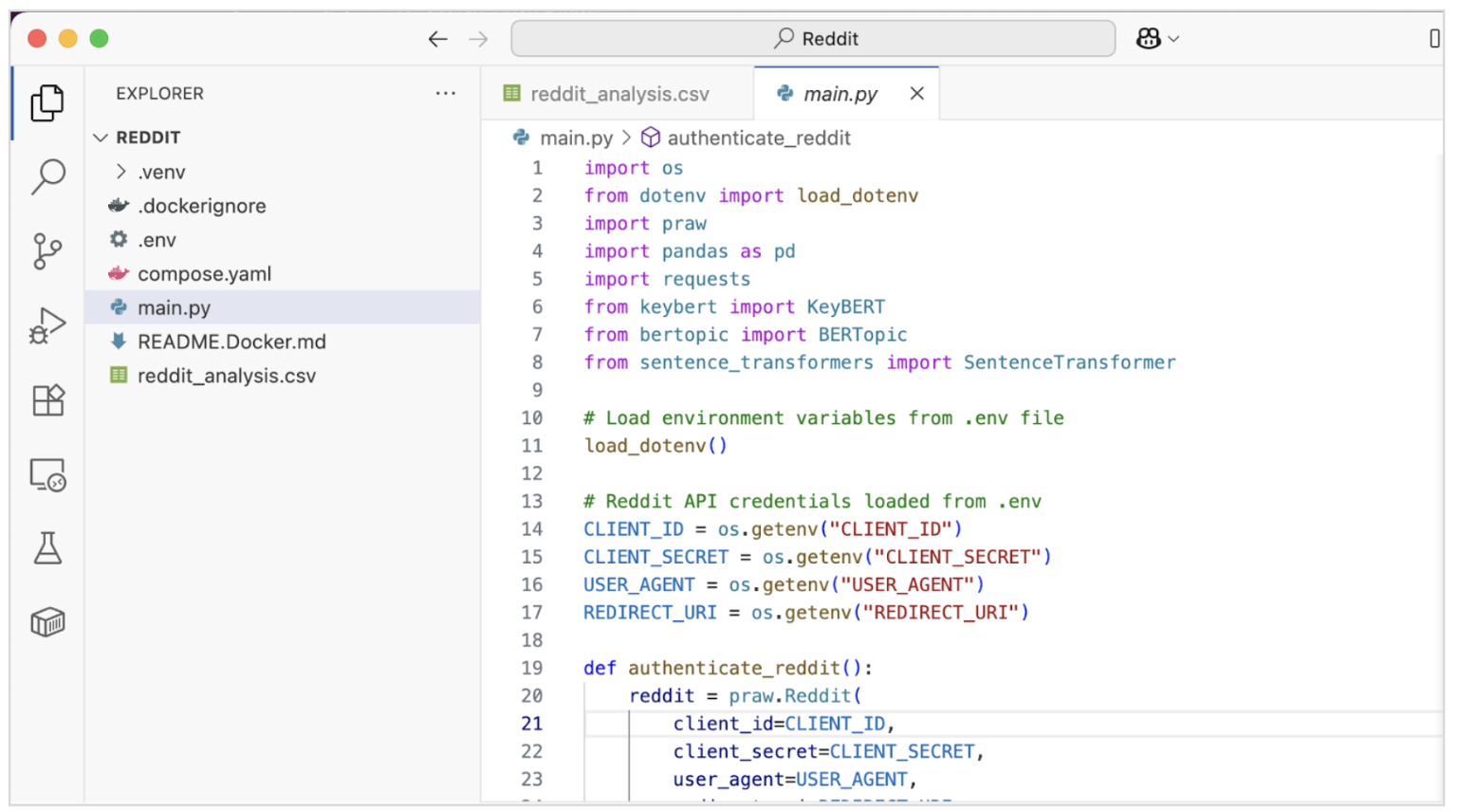

One of my favorite experiments combined Python with Reddit’s API – using KeyBERT and BERTopic for topic mining. I took client topics, tokenised metadata, and used BERTopic with cosine similarity to map existing content gaps.

I’ve seen this done using ChatGPT. After all, it’s far easier to just prompt ChatGPT to do this than to have to set it up using BERT models. The problem is that ChatGPT is not the best for classification tasks, and it wastes far more energy than the models used here.

For bulk keywording/topic‑modelling on hundreds or thousands of docs, KeyBERT + BERTopic (with a small embedding model) will typically use less compute and energy per result than running ChatGPT on every item.

To mitigate some of the other risks, I also hosted this locally on my machine, tested in batches and cleaned up the data before use.

Manual checks were also essential, especially for low-scoring results. It definitely didn’t take just 5 minutes like I initially hoped! But the insights were valuable for understanding audience needs without relying on traditional (and expensive) research methods.
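To make the gap-mapping idea concrete, here is a minimal sketch of that one step, not the actual pipeline described above. So that it runs without any installs, it swaps in plain word-count vectors for the KeyBERT/BERTopic embeddings, which is a much cruder technique. `find_gaps`, the 0.3 threshold, and the sample topics and page titles are all invented for illustration.

```python
import math
import re
from collections import Counter


def tokenise(text: str) -> Counter:
    """Lowercase word counts: a crude stand-in for real embeddings."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[term] * b[term] for term in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def find_gaps(topics, pages, threshold=0.3):
    """Return (topic, nearest_page, score) for topics no page covers.

    A topic counts as a gap when its best-matching page scores below
    the threshold. Assumes `pages` is not empty.
    """
    page_vectors = {title: tokenise(title) for title in pages}
    gaps = []
    for topic in topics:
        topic_vector = tokenise(topic)
        nearest_page, best_score = max(
            ((title, cosine(topic_vector, vec)) for title, vec in page_vectors.items()),
            key=lambda pair: pair[1],
        )
        if best_score < threshold:
            gaps.append((topic, nearest_page, round(best_score, 2)))
    return gaps


# Invented sample data standing in for mined Reddit topics and page metadata.
reddit_topics = [
    "best running shoes for flat feet",
    "how to clean white trainers",
]
site_pages = [
    "Running shoes for flat feet: a buying guide",
    "Our returns policy",
]

gaps = find_gaps(reddit_topics, site_pages)
for topic, nearest_page, score in gaps:
    print(f"Gap: {topic!r} (nearest page: {nearest_page!r}, score {score})")
```

Low scores are exactly the results worth checking by hand, since a low score can mean a real gap or just different wording for a topic the site already covers.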

2. Content cannibalization fixes

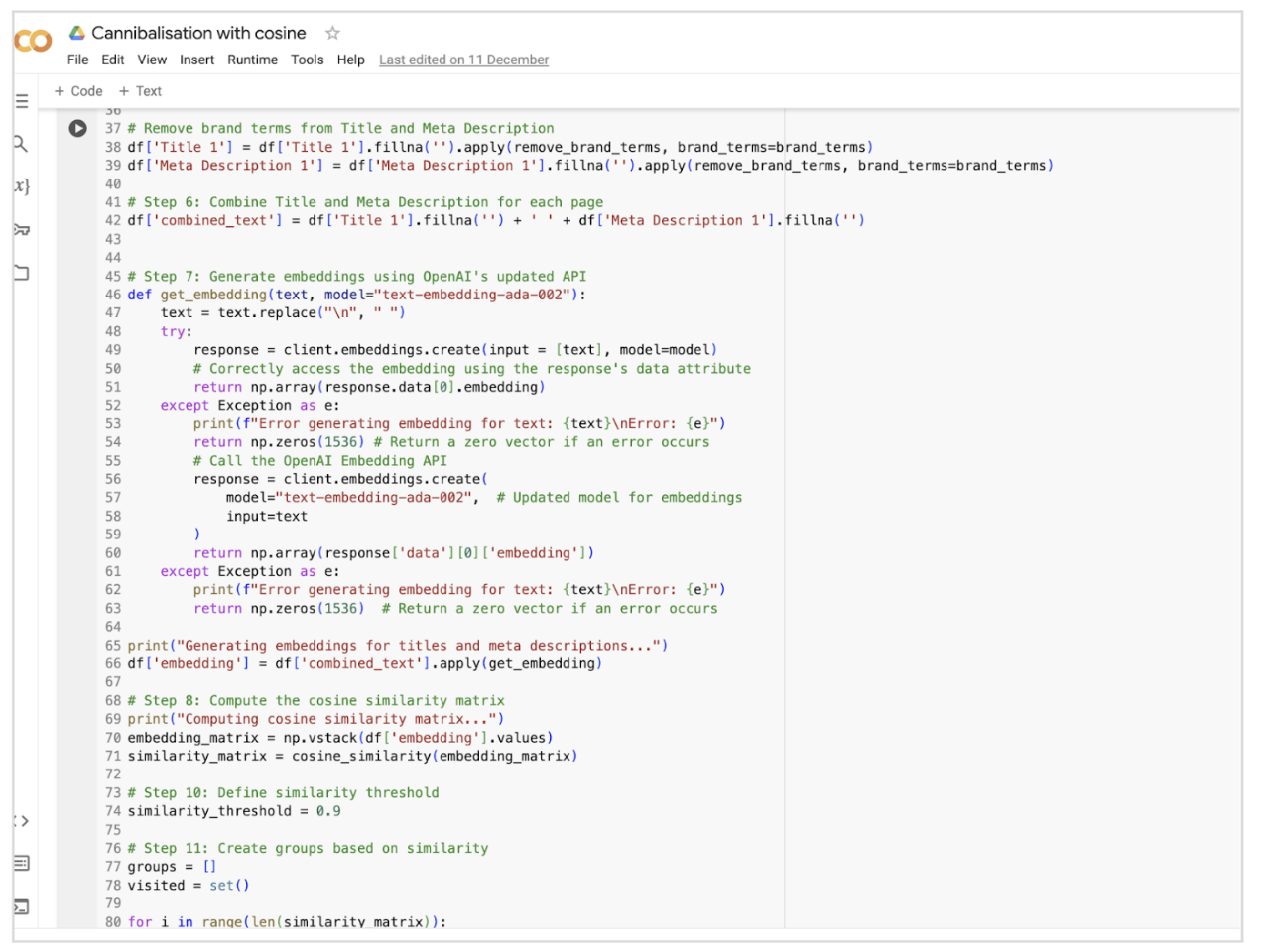

We built a lightweight cannibalisation detection process using Google Colab (Python), the OpenAI mini embedding model, and cosine similarity. This approach allowed us to quickly identify when multiple pages were competing for the same keywords or intent.

For bulk analysis across hundreds of pages, the OpenAI GPT‑3 Ada mini embedding model delivers faster inference times and lower energy consumption than running ChatGPT on every item.

To improve accuracy and cut unnecessary computation, I also stripped out irrelevant noise from the dataset, like the brand name, which was skewing results and wasting resources.

Again, I tested the process in batches, experimenting with different cosine similarity thresholds until I found the sweet spot. For this dataset, 0.9 worked best. Try not to run the whole dataset each time: it’s wasteful and makes QA-ing more difficult.
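The detection step can be sketched roughly like this. It is an illustration under assumptions, not the Colab notebook itself: the embedding vectors are assumed to have been computed already (no embedding API call is shown), the three-dimensional toy vectors and URLs are invented, and `strip_brand` and `flag_cannibalisation` are hypothetical helper names. The 0.9 default is the threshold that worked for the dataset above; other datasets will need their own.

```python
import math
import re
from itertools import combinations


def strip_brand(text: str, brand: str) -> str:
    """Remove the brand name so it does not inflate similarity between pages."""
    cleaned = re.sub(re.escape(brand), " ", text, flags=re.IGNORECASE)
    return " ".join(cleaned.split())


def cosine(u, v) -> float:
    """Cosine similarity between two equal-length dense vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def flag_cannibalisation(embeddings: dict, threshold: float = 0.9):
    """Return (url_a, url_b, score) for pairs at or above the threshold.

    Results are sorted with the most similar pair first.
    """
    flagged = []
    for (url_a, vec_a), (url_b, vec_b) in combinations(embeddings.items(), 2):
        score = cosine(vec_a, vec_b)
        if score >= threshold:
            flagged.append((url_a, url_b, round(score, 3)))
    return sorted(flagged, key=lambda row: row[2], reverse=True)


# Clean the text before it is embedded ("Acme" is a placeholder brand).
title = strip_brand("Acme SEO Audit Guide", "acme")

# One small batch of toy vectors standing in for real page embeddings.
batch = {
    "/blog/seo-audit-guide": [0.9, 0.1, 0.2],
    "/services/seo-audit": [0.85, 0.15, 0.25],
    "/blog/link-building": [0.1, 0.9, 0.3],
}

pairs = flag_cannibalisation(batch)
for url_a, url_b, score in pairs:
    print(f"{url_a} vs {url_b}: {score}")
```

Comparing every pair grows quadratically with the number of pages, which is one more reason to work in small batches while tuning the threshold.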

3. Google Trends automation

With no official Google Trends API and the unofficial one no longer working, this was a perfect use case for us to dive into creating agents.

The agent was built using the Browser Use GitHub Library and GPT-4o (a more environmentally friendly model). It runs locally for one minute each day, mines Google Trends’ first page and gives us the relevant trending topics for our client.

It costs under $1 to run while saving at least 30 minutes of daily work.

Sometimes the simplest solutions are the most effective.

Recently, Google announced the long-awaited Google Trends API! It might well be time to retire our hard-working Trends agent. It has served us well to date.

A framework for responsible AI use

As an agency, we are trying to take responsible AI pretty seriously. Seriously enough to be one of the only digital marketing agencies with an ISO/IEC 42001:2023 certification. Investing in ISO certification is definitely worth doing, but only if you are not going to treat it as a checklist exercise. But even if your budget is tight, there are things you can do to become more responsible.

On a company level, here are a few simple things to do to use AI more responsibly:

- Start by being clear that you use AI to support your work (i.e. add it to contracts)

- Create an AI policy

- Set your chats to “Do not train”

On a project level, start thinking about scaling with AI responsibly by breaking it down into 3 phases:

Phase 1: Before you start

Before jumping in, take a step back and get clear on why you’re using AI in the first place. Start with the problem you’re trying to solve, then think through data privacy and permissions before you even touch a model. This upfront work helps you avoid wasted effort and sets you up to make smarter, more responsible choices later on.

Problem check

- Write down exactly what problem you’re trying to solve – a couple of great resources to help here: Lazarina Stoy’s excellent problem formulation framework or Elias Dabbas’ LLM app framework

- Ask around – has someone already solved this? There might be a simpler non-AI solution you can use instead.

Permission check

- Do I have permission to use this data/content?

- Is this allowed by company policy?

- Would I be comfortable if this was made public?

- Am I putting sensitive info into the AI?

Choose your model

- Pick responsibly – research energy consumption and carbon emissions

- Consider efficiency over flashy features

- Think about the cumulative impact of your choices
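A quick way to think about cumulative impact is to multiply a per-query figure by the number of calls a project will make. The sketch below does that with the two CO₂ figures quoted earlier in this piece. Both are bounds (“less than 0.3 grams” and “over 14 grams”), so the outputs are bounds too. The 5,000-query workload and the function name are hypothetical.

```python
# Per-query CO2 figures quoted earlier in this piece, in grams.
CO2_GRAMS_PER_QUERY = {
    "GPT-4.1 nano": 0.3,   # quoted as "less than", so an upper bound
    "DeepSeek-R1": 14.0,   # quoted as "over", so a lower bound
}


def project_co2_kg(model: str, queries: int) -> float:
    """Rough project-level CO2 in kilograms for a given number of queries."""
    return CO2_GRAMS_PER_QUERY[model] * queries / 1000


planned_queries = 5_000  # hypothetical workload for one project

estimates = {
    model: project_co2_kg(model, planned_queries) for model in CO2_GRAMS_PER_QUERY
}
for model, kg in estimates.items():
    print(f"{model}: about {kg:.1f} kg CO2 for {planned_queries:,} queries")
```

With these inputs the gap between the two models is more than 45x, which is why the model choice matters more than trimming the odd prompt.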

Phase 2: While you work

Once you’re in the thick of it, it’s about staying intentional and in control. Testing in small steps, checking the quality of your outputs, and keeping humans firmly in the loop will help you avoid mistakes and bias creeping in. This phase is about slowing down just enough to ensure your work is both accurate and responsible.

Quality control

- Test with small batches first

- Check AI outputs for hallucinations

- Don’t trust everything – verify always!

Stay in control

- Keep human judgment in the loop always

- Don’t automate important decisions completely

- Understand what the AI is doing

- Have a backup plan ready

Avoid bias

- Check if results seem unfair to any group

- Ask others to review your work

Phase 3: After you’re “done”

Finishing doesn’t mean walking away. This phase is about closing the loop: being transparent about how AI was used, documenting your process so others can learn from it, and monitoring results over time. AI outputs can drift or degrade, so ongoing review is key to keeping things relevant and responsible.

Communication

- Tell people you used AI on this project (don’t hide it)

- Explain which parts were AI-generated

- Share how others can use your approach

- Document what worked and what didn’t

Monitoring and improvement

- Check regularly that outputs still make sense

- Gather feedback continuously

- Regularly review and update your approach

- Be ready to switch tools if needed

The bottom line

Yes, you can scale SEO with LLMs responsibly, even on a tight budget. It’s about making informed choices, understanding the trade-offs, and always keeping the human element in the loop.

The key isn’t avoiding AI entirely; it’s using it thoughtfully. Choose efficient models, batch your requests, verify outputs, and always consider the bigger-picture impact of your decisions.

Scale should be about sustainable growth, plus responsibility to the world we all live in.