In early August 2024, many SEOs noticed that an unusual parameter was increasingly being attached to their organic search listings: the srsltid parameter.

If you work in SEO or with Google Merchant Center (GMC), you might have seen it more of late, but the parameter was actually introduced to the SEO world back in February 2022.

What is srsltid?

The srsltid is a parameter designed to track organic traffic connected to products managed by feeds in GMC. URLs with srsltid appended initially appeared in Google Shopping, but in August 2024, a subtle shift occurred.

Instead of being added solely to product URLs, the srsltid was appended to every URL in the search engine results page (SERP) if an organic feed was present and auto-tagging was enabled.

We can’t be sure why; it may have had to do with the August 2024 Core Update. Regardless of the cause, many thought it was a mistake that would soon be fixed, yet here we are, still talking about it a few months later.

Google Merchant Center – Auto-Tagging set

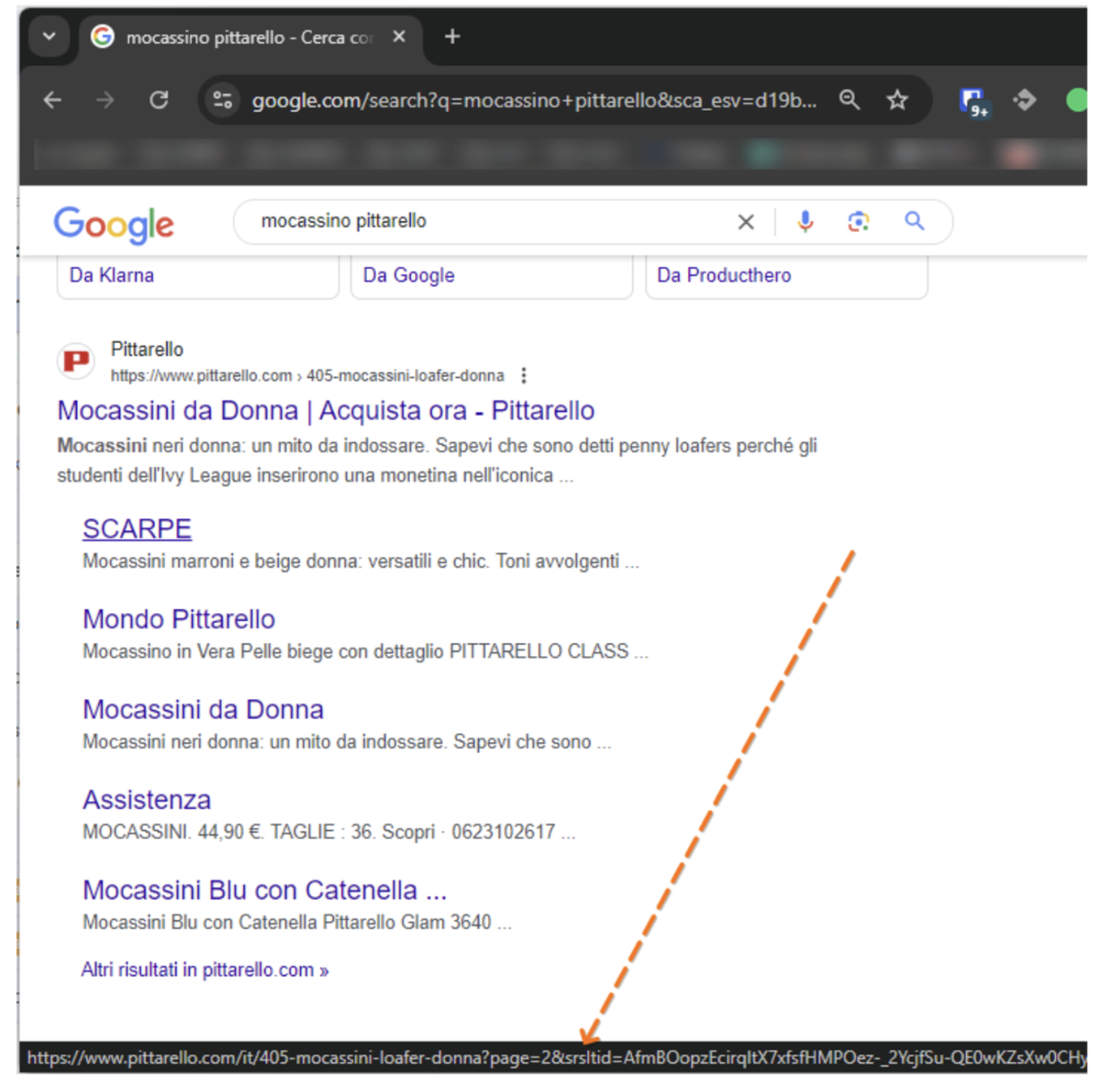

Before we start asking questions about how srsltid impacts us directly, let’s take a look at how it appears.

The srsltid is a query string parameter added to the end of product URLs.

“These URL parameters are added for Merchant Center “auto-tagging”. They’re used for conversion tracking for merchants.”

As mentioned above, they are more prevalent in the SERPs nowadays and relatively easy to spot.
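To make that concrete, here is a minimal sketch of how you could spot the parameter programmatically. The URLs and the srsltid value are made up for illustration, and `get_srsltid` is a hypothetical helper name, not something provided by Google or GMC.

```python
from typing import Optional
from urllib.parse import parse_qs, urlsplit


def get_srsltid(url: str) -> Optional[str]:
    """Return the srsltid value if the URL carries one, otherwise None."""
    query = parse_qs(urlsplit(url).query)
    values = query.get("srsltid")
    return values[0] if values else None


# Hypothetical URLs, for illustration only.
tagged = "https://www.example.com/product/blue-shoes?srsltid=AfmBOoEXAMPLE"
plain = "https://www.example.com/product/blue-shoes"

print(get_srsltid(tagged))
print(get_srsltid(plain))
```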

What impact does srsltid have on SEO?

So, why have we decided to analyze the srsltid now? Well, it doesn’t seem to be going away and with this new parameter, there are important questions about how Google handles it during the crawling and indexing processes.

For SEO professionals, understanding whether Google treats srsltid-tagged URLs as unique or if they’re filtered to avoid duplication is key. These insights can help us determine if this parameter affects crawl budget, indexing patterns, or even page rankings.

In this analysis, we’ll use tools like Oncrawl to dig into the data and see how often srsltid-tagged URLs appear, whether they’re treated as distinct pages, and what kind of impact, if any, they might have on overall SEO performance.

By breaking down the srsltid parameter, we’ll gain a better understanding of how it influences organic traffic reports and affects the visibility of product feeds in GMC, empowering you to make data-informed decisions on how best to manage these parameters in your own strategy.

The impact of this parameter can be varied and I’ll try to analyze the different scenarios.

Crawling consequences

The first question I want to address: Does Google crawl those URLs or does it use srsltid only as a tracking parameter in the SERPs?

Although Mueller explained that, “[It] doesn’t affect crawling, indexing, or ranking…”, just to be sure, we analyzed log files of a large e-commerce website (just before it autonomously decided to remove auto-tagging).

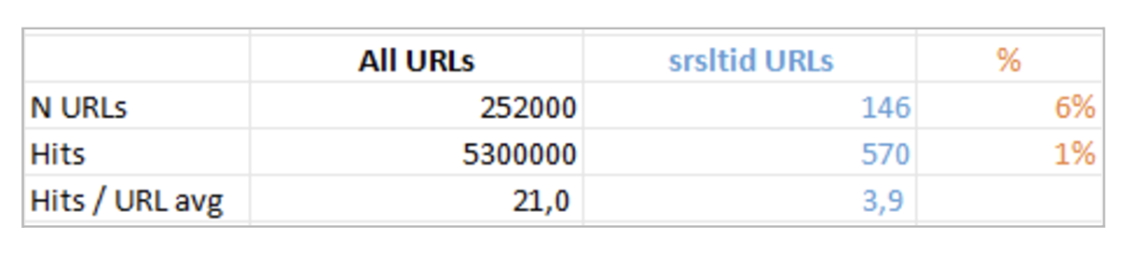

We analyzed three months of logs and saw the following results:

As we can see, the impact on crawling is minimal. It appears as though Google crawls them only if it finds them (e.g. backlinks, sitemaps, …).

In that case, Google adds them to the crawl queue for later processing, just as it would for any URL with a standard query string parameter.
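If you want to run a similar check on your own raw logs, the sketch below shows the idea. It assumes access logs in the common "combined" format and identifies Googlebot by user-agent string only, which is a simplification (a rigorous check would also verify the requesting IP). The sample lines and the `count_googlebot_hits` helper are hypothetical and are not the data from our analysis.

```python
import re
from urllib.parse import parse_qs, urlsplit

# Combined log format: ... "METHOD path HTTP/x" status size "referer" "user-agent"
LOG_LINE = re.compile(
    r'"(?:GET|HEAD|POST) (?P<path>\S+) [^"]*" \d{3} \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def count_googlebot_hits(lines):
    """Return (all Googlebot hits, Googlebot hits on srsltid URLs)."""
    total = 0
    tagged = 0
    for line in lines:
        match = LOG_LINE.search(line)
        # Naive bot detection: user-agent substring only (assumption).
        if not match or "Googlebot" not in match.group("agent"):
            continue
        total += 1
        if "srsltid" in parse_qs(urlsplit(match.group("path")).query):
            tagged += 1
    return total, tagged


# Made-up log lines, for illustration only.
sample = [
    '66.249.66.1 - - [01/Sep/2024:10:00:00 +0000] "GET /product/1?srsltid=AfmEXAMPLE HTTP/1.1" 200 512 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '66.249.66.1 - - [01/Sep/2024:10:00:05 +0000] "GET /product/2 HTTP/1.1" 200 734 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.7 - - [01/Sep/2024:10:00:09 +0000] "GET /product/1?srsltid=AfmEXAMPLE HTTP/1.1" 200 512 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]

total_hits, srsltid_hits = count_googlebot_hits(sample)
print(total_hits, srsltid_hits)
```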

SERP consequences

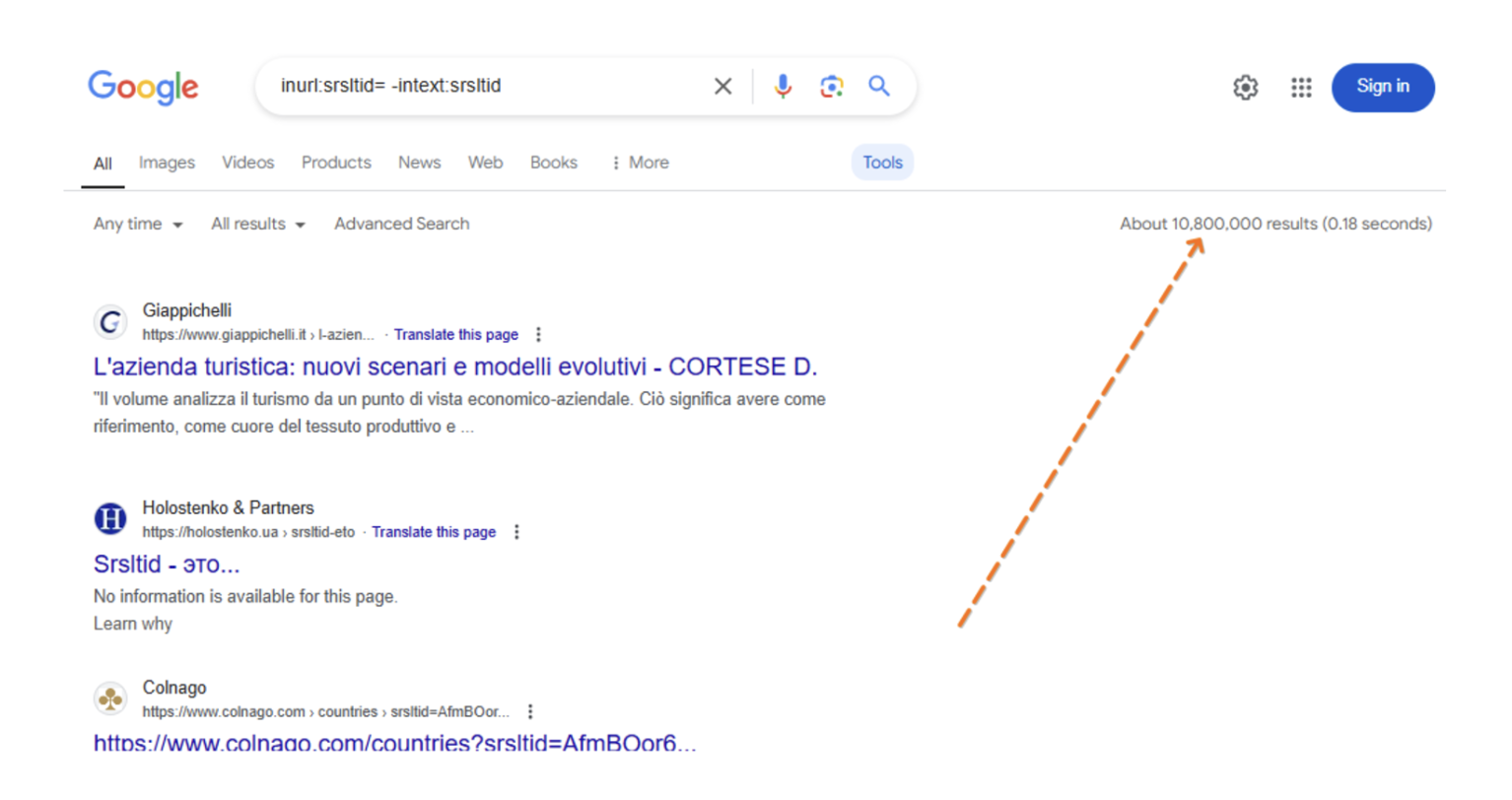

Crawling is only one part of the equation. The next question is whether or not the srsltid URLs are indexed. The short answer: yes!

When you conduct a Google search for something similar to:

inurl:srsltid= -intext:srsltid

the SERPs return more than 10 million results. So yes, there are a lot of these URLs in Google’s index.

With so many URLs ultimately pointing to the same page, we are bound to run into problems with duplicates. That makes setting canonical links correctly mandatory!

Analytics consequences

Not only do we need to look at the SERPs, but it’s also important to understand where srsltid impacts our analytics.

Google Search Console

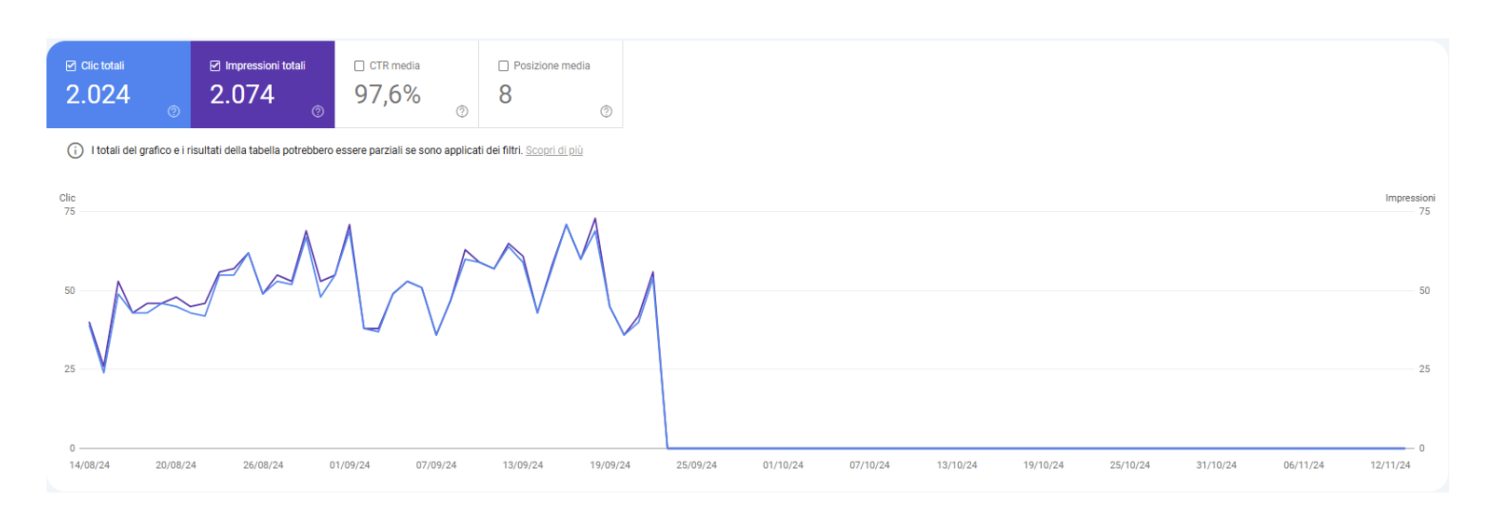

Something very strange is happening in Google Search Console. Take a look at the diagrams below. They come from two different customers’ GSC accounts and show URLs with the srsltid parameter.

In the first diagram, we see that the number of clicks and impressions were practically identical. However, after September 22, both metrics dropped sharply to zero.

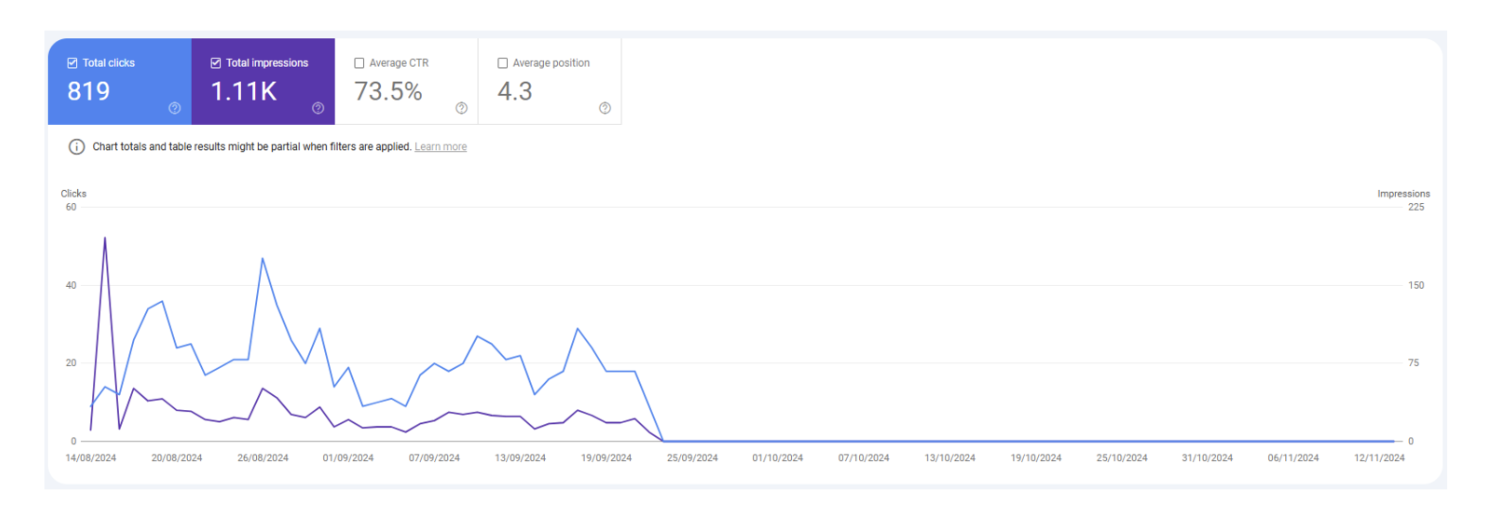

Below, the diagram from another e-commerce website shows very similar behavior. In this case, while clicks and impressions have differing values, both metrics dropped to zero after September 21, 2024!

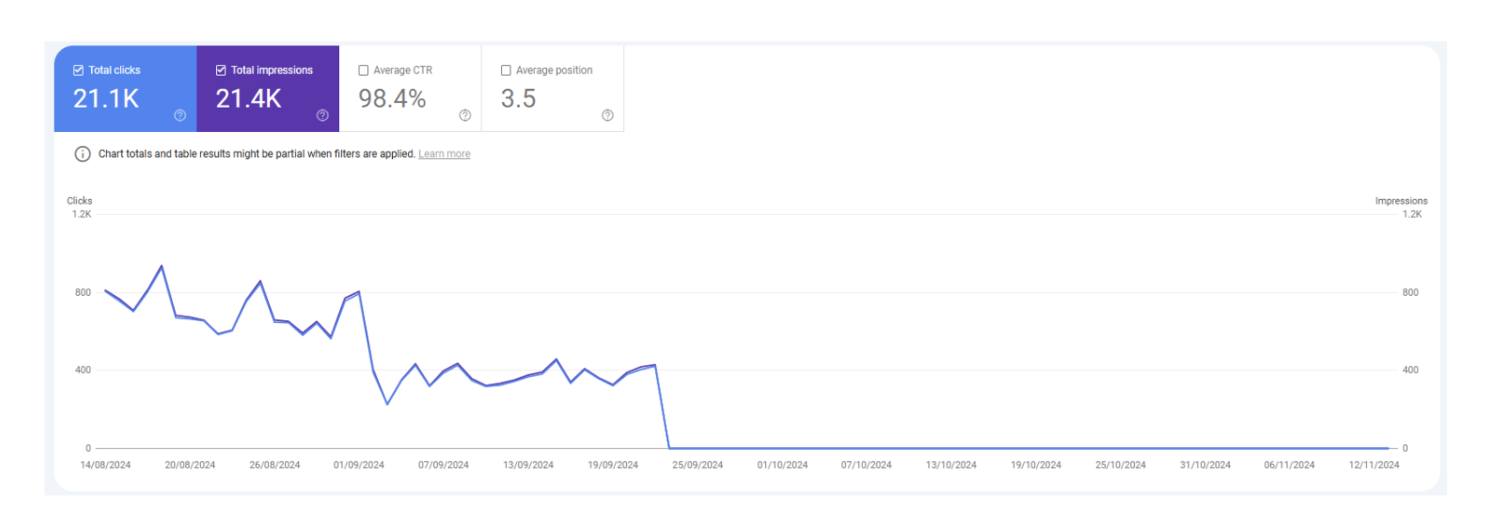

This appears to be very strange behavior, but they aren’t isolated instances. Let’s take a look at two other unrelated sites we were able to analyze in GSC.

In both examples, we again see the familiar pattern of similar clicks and impressions before a sudden drop. The one major difference we noticed is that, in the example below, the drop occurs several days earlier, near the beginning of September rather than around the 21–22.

What’s causing this? I honestly couldn’t say with any certainty because Google hasn’t provided any information.

However, if I were to hazard a guess, it looks as though sites no longer have access to performance indicators for URLs with the srsltid parameter even though these pages continue to be indexed.

Google may have simply added a filter to the report that excludes URLs with the srsltid parameter.

In other words, when Google appends this parameter and indexes the tagged URL along with or in place of your canonical URL, you risk losing all visibility into your page’s performance in Google SERPs, and you risk additional traffic loss due to potential cannibalization.

In summary, Google appears to ignore the parameter when evaluating URL performance, and as of September 22, you can no longer verify or mitigate this because the data appears to be no longer accessible.

GA4

In Google Analytics 4, we couldn’t find any data for landing pages with the query string parameter srsltid. But, if we set the export of GA4 to BigQuery, we found surprising results.

We ran this analysis using data from another one of our clients, for whom we manage Google Analytics and BigQuery.

When we set up the export to BigQuery, lo and behold, srsltid appears! Below, you can see the result after filtering out every source/medium other than null and google/organic:

OK, so we found some interesting information, but what does this mean for SEO and how can we use it?

This is a difficult question to answer and the main reason is that we think what is currently happening is a bug on Google’s part.

All of the organic results have that parameter appended, yet in BigQuery we can only see a small share of them (< 15%). And if every result carries the parameter, filtering traffic by it becomes useless.

Where we used Oncrawl in our analysis

Tracking how often Googlebot reads srsltid pages is pretty straightforward. You just need to pay attention to one key detail: where the hits are coming from.

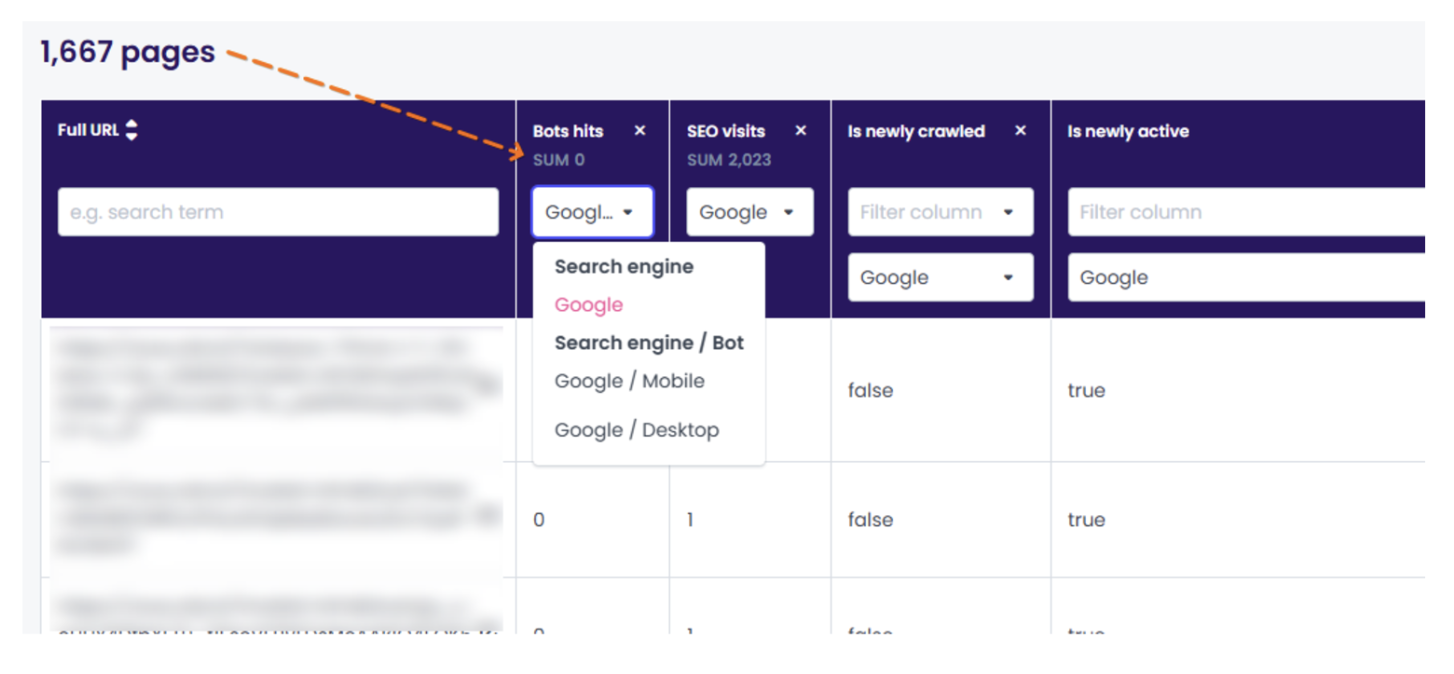

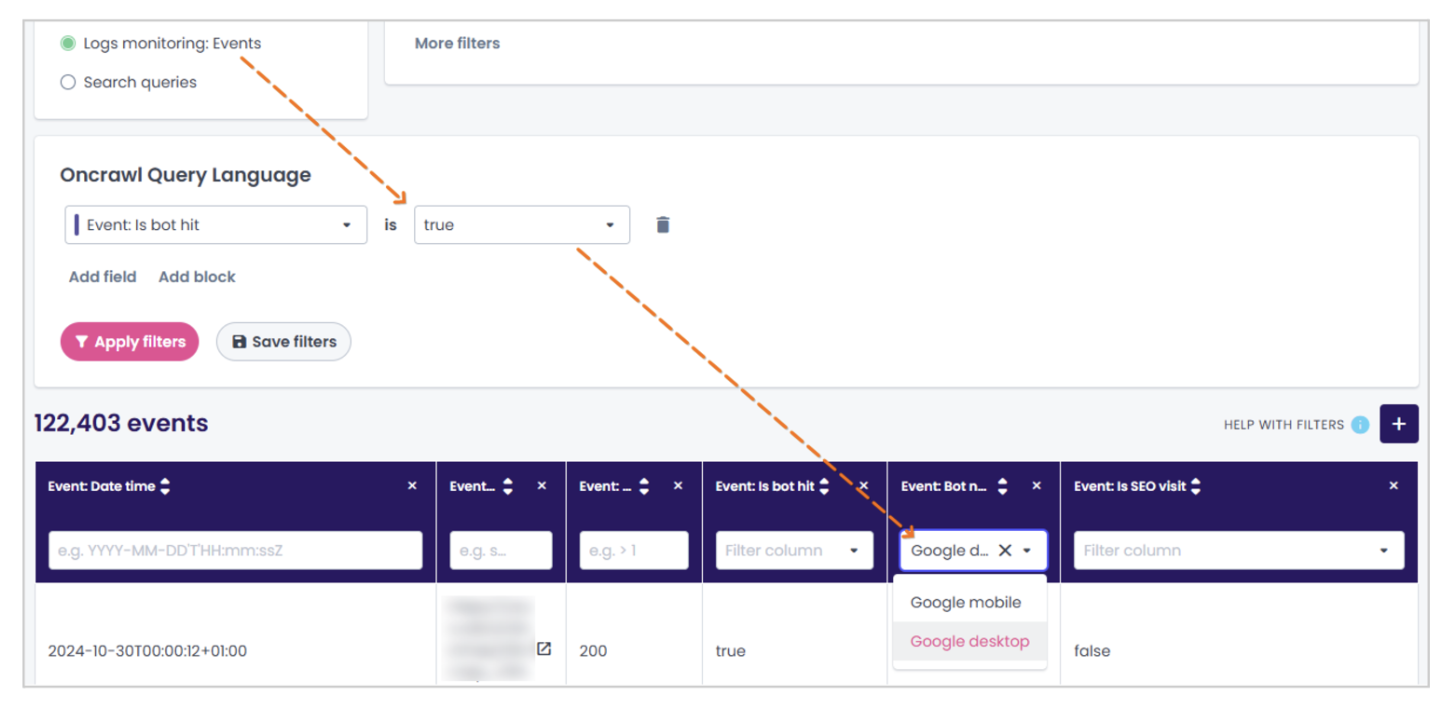

By default, Oncrawl shows all the pages it discovers in its reports, but in order to identify only what Googlebot sees, you need to filter the report for that kind of bot hit. We will show you how to do that below.

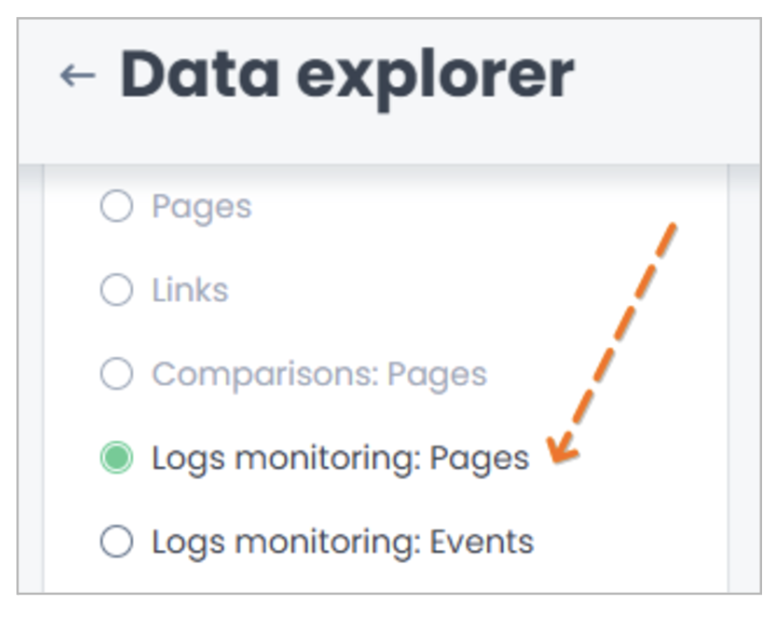

The best tool for this task is the Data explorer. You can access it in the analysis dashboard of any crawl report.

In the Data explorer, you can launch a custom search by pages or by events. Here’s an example:

In the Pages mode, you can analyze data grouped by pages. In the Events mode, you can view data in a row format by events, with details for each individual hit.

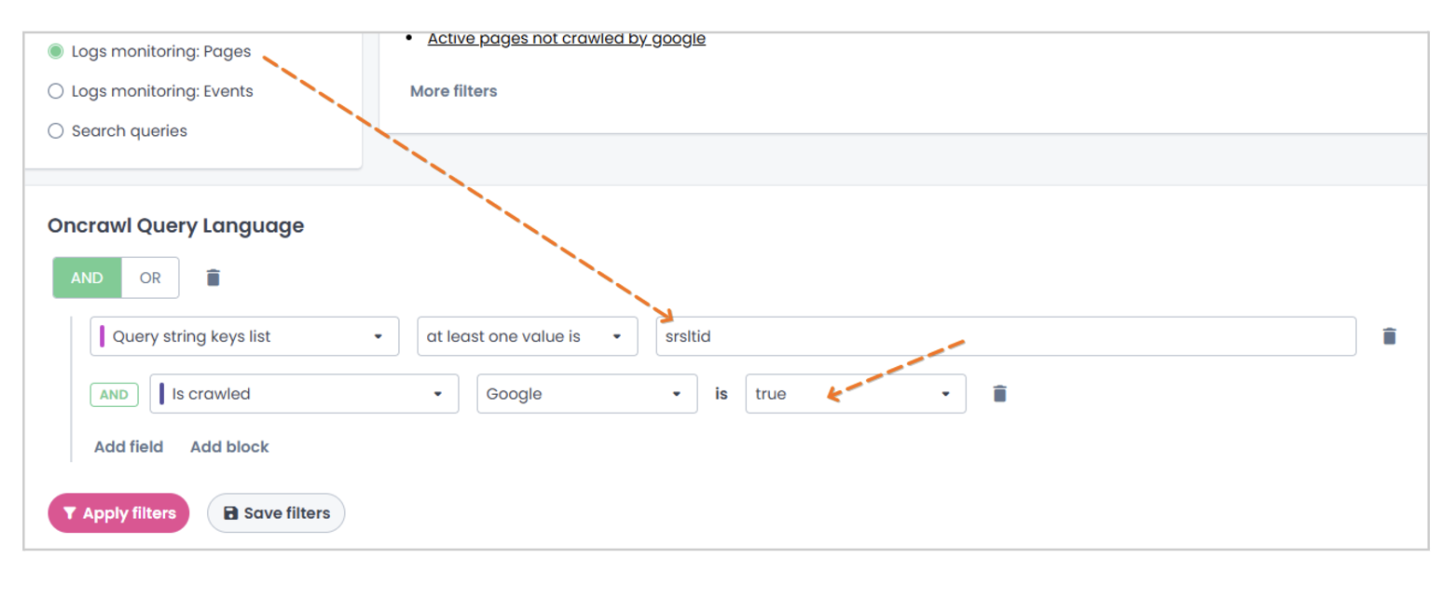

For an initial, streamlined approach, I recommend starting with the Pages view and applying a filter using the powerful Oncrawl Query Language feature. Here’s an example:

Going back to the key detail I mentioned earlier: you need to pay attention to the SUM of “bot hits” or specifically “Googlebot hits.”

For instance, in the screenshot below, there are over 1,600 pages in the report with the query string parameter srsltid, but none have been read by Googlebot or any other search engine bot.

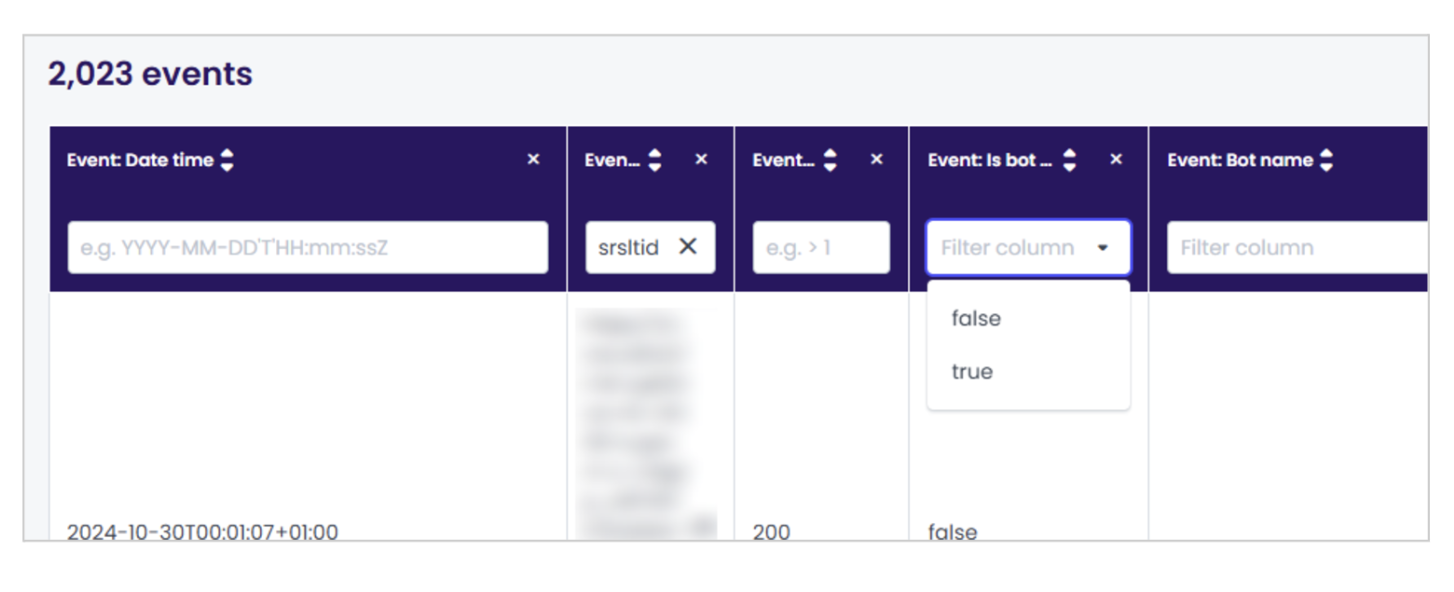

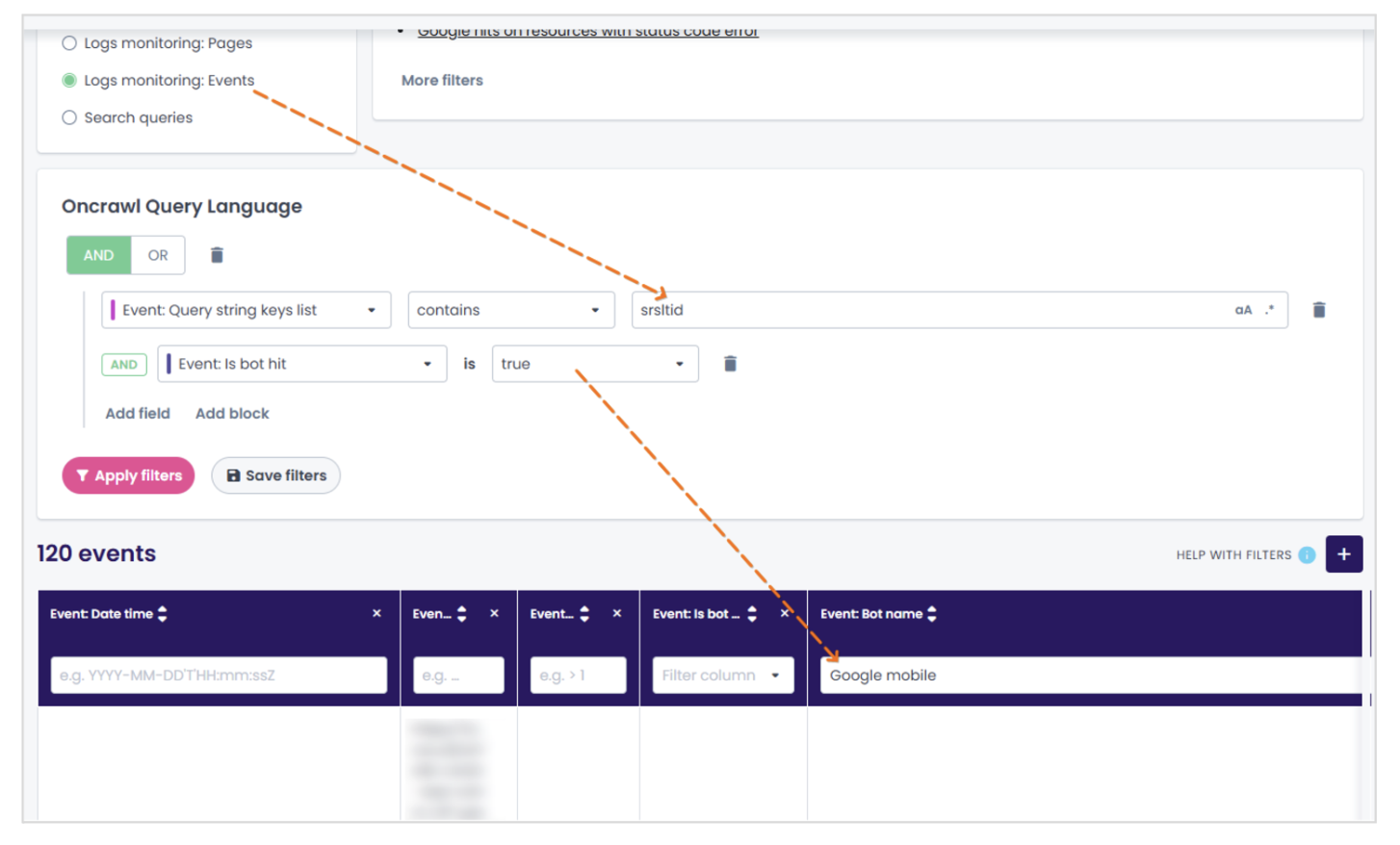

The same thing may happen in the Events view. In this case, the column to focus on is Event: Is bot.

In the screenshot below, you can see that I’ve also filtered the report by srsltid directly in the Event: Full URL column.

By using the Pages or Events views for our analysis, we were able to quickly manipulate and see the data that helped us determine if and where srsltid was impacting our client sites.

You can do a lot with log analysis. If you’d like to deepen your understanding of it, I recommend you take a look at my blog post, which provides a great overview of the subject.

An example of our srsltid analysis

Once we had the right data to analyze, we organized it in a table. The table below summarizes the impact of srsltid pages on the total number of pages. We used the results from the “Data Explorer” tool, leveraging both the report by pages and the report by events.

| | All pages | srsltid pages | Percentage |
|---|---|---|---|
| Pages crawled | 81,236 | 120 | 0.14% |
| Googlebot Hits | 304,873 | 120 | 0.04% |
| Googlebot Hits (mobile) | 182,470 | 120 | 0.06% |
| Googlebot Hits (desktop) | 122,403 | 0 | 0% |

As you can see, the impact of srsltid pages on crawling is very low, both in terms of distinct pages and total hits. Interestingly—though it would be worth investigating on other websites or over a longer period—all the srsltid pages crawled were accessed by Googlebot mobile, not desktop.
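For anyone who wants to reproduce the percentages, the arithmetic is simply the srsltid count divided by the overall count. The short sketch below uses the figures from the table and assumes the total Googlebot hits are 304,873, which is the sum of the mobile and desktop rows. The figures in the table appear to be truncated to two decimals, so rounded values may differ in the last digit.

```python
def share(part: int, whole: int) -> float:
    """Percentage of `whole` represented by `part`."""
    return part / whole * 100


# (all pages or hits, srsltid pages or hits), taken from the table above.
rows = {
    "Pages crawled": (81_236, 120),
    "Googlebot hits": (182_470 + 122_403, 120),  # mobile + desktop
    "Googlebot hits (mobile)": (182_470, 120),
    "Googlebot hits (desktop)": (122_403, 0),
}

for label, (all_count, srsltid_count) in rows.items():
    print(f"{label}: {share(srsltid_count, all_count):.4f}%")
```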

Here are some screenshots of the filters we used:

Recommendations on how to deal with srsltid

The widespread use of the srsltid parameter raises several critical issues for SEO professionals including concerns over duplicate content, the potential mismanagement of crawl budgets, and inconsistent analytics reporting.

Furthermore, Google’s lack of clarity about how these parameters are processed exacerbates the difficulty of effectively addressing their impact.

I tried asking Google directly how they are managing this parameter and how it impacts the canonicalization process, but unfortunately, no one has yet replied to me.

Without clear guidance or a fix from Google, we, as SEOs, are left to navigate these challenges independently. The way I see it (while we wait for an official response from Google or for them to fix the problem) is that we have two solutions:

- Removing the auto-tagging (suggested)

- Maintaining the auto-tagging

Removing auto-tagging

When we remove auto-tagging, the srsltid parameter disappears completely. Because it was being appended to every URL in the organic SERPs, it was useless from our point of view. Our suggestion is to add utm_source and utm_campaign to the feed URLs instead. This way you can reliably track the organic traffic from Google Merchant Center.
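If your feed is generated by a script, tagging the URLs can be as simple as the sketch below. The `add_utm` helper and the values `google_merchant` and `organic_feed` are hypothetical; pick whatever naming fits your own reporting conventions.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def add_utm(url: str, source: str, campaign: str) -> str:
    """Append utm_source and utm_campaign, replacing any existing values."""
    parts = urlsplit(url)
    params = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in ("utm_source", "utm_campaign")
    ]
    params += [("utm_source", source), ("utm_campaign", campaign)]
    return urlunsplit(parts._replace(query=urlencode(params)))


# Hypothetical feed URL and UTM values, for illustration only.
feed_url = "https://www.example.com/product/1?color=blue"
print(add_utm(feed_url, "google_merchant", "organic_feed"))
```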

Maintaining the auto-tagging (if you can’t remove it!)

As noted above, we suggest adding the utm_* query string parameters to the feed URLs even if you keep auto-tagging enabled! This should help Google recognize the URL with parameters as a non-canonical URL.

The other important thing is to add the <link rel="canonical"> for every page with the right value, based on your SEO strategy.
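A quick way to audit this is to extract the canonical from a page’s HTML and compare it with the URL you expect. The sketch below uses only the Python standard library; the `find_canonical` helper and the sample markup are hypothetical, and in practice you would feed it the HTML returned for an srsltid-tagged URL.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Collect the href of the first <link rel="canonical"> in a document."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        rel = (attributes.get("rel") or "").lower()
        if tag == "link" and rel == "canonical" and self.canonical is None:
            self.canonical = attributes.get("href")


def find_canonical(html: str):
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonical


# Made-up markup, as it might be served for /product/1?srsltid=...
page = """
<html><head>
<title>Product 1</title>
<link rel="canonical" href="https://www.example.com/product/1">
</head><body></body></html>
"""

print(find_canonical(page))
```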

Another important thing to do, if you have a caching system, is to set it so it ignores the srsltid parameters (the same thing you should do for fbclid, gclid, …).

Why do we suggest this? By default, caching systems treat pages as different if their URLs are different. With that “ignore” setting in place, the right page is served from the cache even when the value of srsltid (or of any other tracking parameter) changes.
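How you configure this depends entirely on your caching layer, so the sketch below only illustrates the principle: build the cache key from the URL with the tracking parameters stripped. The `cache_key` function and the parameter list are assumptions for the example, not the configuration of any specific product.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Tracking parameters that should not create separate cache entries.
TRACKING_PARAMS = {"srsltid", "fbclid", "gclid"}


def cache_key(url: str) -> str:
    """Return the URL without tracking parameters or fragment."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in TRACKING_PARAMS
    ]
    return urlunsplit(parts._replace(query=urlencode(kept), fragment=""))


# Two hypothetical requests that differ only in the srsltid value.
first = "https://www.example.com/p/1?srsltid=AAA&size=42"
second = "https://www.example.com/p/1?size=42&srsltid=BBB"

print(cache_key(first) == cache_key(second))
```

Parameters that really change the page content, such as `size` in this example, stay in the key, so only the tracking noise is collapsed.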

Conclusion

Honestly, I’m not sure how Google plans to manage this situation or if it even has a clear strategy in place. Communication on the matter has been nearly nonexistent in recent weeks.

However, during the drafting of this blog post, something significant changed, and I’m pleased it happened before publication: the impressions and clicks in GSC were set to zero as we saw above.

We’re unsure if this is merely a visual issue at the reporting level or if it reflects a real-world impact. To confirm, we’ll need to analyze log files and monitor real-user hits.

My suggestion for the time being is to ensure every canonical setting on your websites is correctly implemented and to stay curious about what’s happening to your site, particularly about how Googlebot behaves. And yes, if you can, remove auto-tagging from your Google Merchant Center settings and return to the good old “highlander” UTM parameters ;)