Are you a seasoned Oncrawler who thinks you know the platform like the back of your hand? Or have you recently partnered with us and are looking for insider tips to get you started?

As part of the CSM team at Oncrawl, I have a particular view of the platform and have noticed which of its tools are visited less frequently. So, whatever your situation, here is a round-up of my top 5 underrated Oncrawl features that you may have overlooked or just don’t know about yet!

Custom fields (data scraping)

A quick recap on custom fields at Oncrawl: we offer two methods for scraping, XPath and Regex. Before going further, if you need a deeper look at web scraping (extracting data from websites), check out this article that goes over its useful functions.

Now, the custom field feature may not be groundbreaking, but what is incredibly convenient is the ability to mix Regex and XPath rather than being limited to one method.

To put it simply, Regex and XPath are ways to find things. Rather than searching for individual pieces of text, you search based on a pattern.

Let’s say you want to identify the month in the text “September, 21”. Rather than searching for “September” itself, with Regex you can use \w+, which matches a run of word characters and picks up the month. XPath, on the other hand, lets you select and display text elements from an XML or HTML document.

For instance, if you want to display the content within a div element, you’ll start off with //div[@class and so on. Sometimes you just want to display the content, other times you want to identify a specific pattern, and sometimes you need a mix of both.
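To make the Regex half concrete, here is the “September, 21” example as a short Python sketch using the standard re module. Python is only our choice for illustration; in Oncrawl you enter the pattern itself in the custom field, not code.

```python
import re

text = "September, 21"

# \w+ matches one or more "word" characters (letters, digits, underscore).
# re.search returns only the first match, which here is the month.
month = re.search(r"\w+", text).group()
print(month)  # September

# \w also matches digits, so findall picks up the day as well.
words = re.findall(r"\w+", text)
print(words)  # ['September', '21']
```

If you only ever want letters, a tighter pattern such as [A-Za-z]+ avoids picking up the day.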

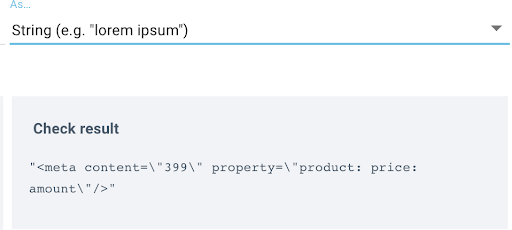

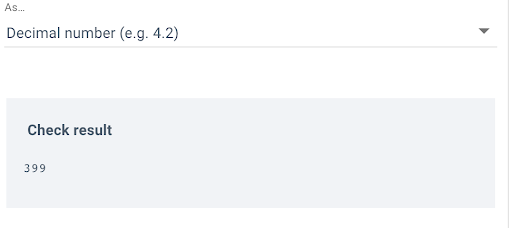

For example, let’s say I want to scrape the price of every product on a site. In my first rule, I use XPath to pull the raw data so I can verify that I’m picking up the pricing element:

Then, for my second rule, I use Regex to capture only the text between content=" and the next quotation mark, in this case the price, with this pattern: content="([^\"]+)

Combining the two makes creating scraping rules a little quicker and easier. And if you’re not comfortable with either method yet, we have a nice help article with some tips.
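Here’s a rough Python sketch of the same two-rule idea, run locally on some hypothetical product markup. The page snippet, the itemprop="price" attribute and the variable names are made up for illustration. Python’s standard-library ElementTree also supports only a subset of XPath and needs well-formed markup, so treat this as a way to test the logic, not as how Oncrawl applies your rules.

```python
import re
import xml.etree.ElementTree as ET

# Hypothetical, well-formed product markup (ElementTree cannot parse messy HTML).
page = """<html><body>
<div class="product"><span>Blue mug</span><meta itemprop="price" content="12.50"/></div>
<div class="product"><span>Red mug</span><meta itemprop="price" content="14.00"/></div>
</body></html>"""

root = ET.fromstring(page)

# Rule 1 (XPath subset): pull the raw pricing elements to check we hit the right tag.
raw = [
    ET.tostring(el, encoding="unicode")
    for el in root.findall(".//div[@class='product']/meta[@itemprop='price']")
]
print(raw)

# Rule 2 (Regex): keep only the text between content=" and the next quotation mark.
price_pattern = re.compile(r'content="([^"]+)')
prices = [price_pattern.search(r).group(1) for r in raw]
print(prices)  # ['12.50', '14.00']
```

Checking the raw output of the first rule before adding the second is what makes mistakes easy to spot: if the XPath grabs the wrong element, the Regex step will quietly return the wrong value.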

Custom filters in the Data Explorer

Do you ever find yourself in the Data Explorer trying to remember the filters you used with the Oncrawl Query Language? Or maybe every time you’re there, you manually use the OQL to filter through crawled pages and add each relevant column one by one.

Well, you could save yourself some precious time with the custom filters feature, conveniently located to the right of the Dataset options:

There’s the Quickfilters option, made up of common queries, and every custom filter you save is added under the “Own filters” section, where you can easily retrieve the pages you’re looking for.

This works for every Dataset available to you. By default you’ll have Pages and Links, but if Log Monitoring is enabled in your subscription you’ll also see Logs monitoring: Pages and Logs monitoring: Events.

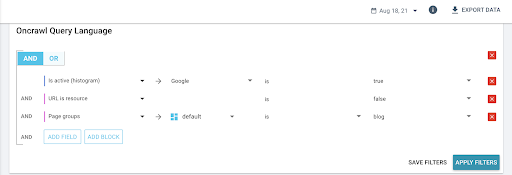

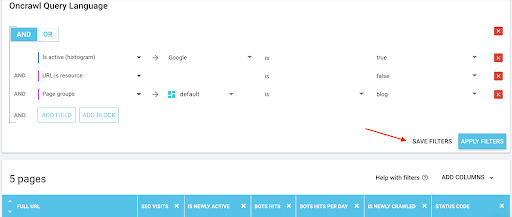

All you have to do is use the OQL to filter the pages. Here we’re looking at SEO Active pages for August 18th, specifically in the Blog page group:

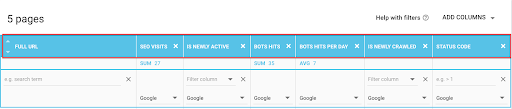

Then use the drop-down menu (“ADD COLUMNS”) to add the relevant metrics to your search:

Finally, click on “SAVE FILTERS” to save your custom filter, and it’ll be available under “Own filters”.

Log Alert/Notifications

One of our 2021 feature upgrades that’s easy to overlook is the option to enable log alerting. It’s a great way to stay aware of any processing issues with your logs.

Rather than discovering an issue with your deposits only when you log into the platform, you can enable log alerting to let you know when no logs have been deposited recently, or when what has been deposited isn’t considered useful.

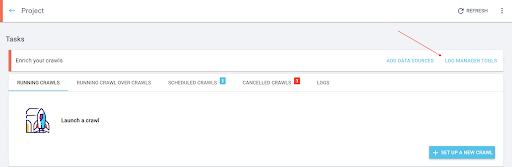

It’s pretty straightforward to set up. Head into a project that has Log Monitoring enabled and click on the “Log Manager Tools” tab:

Once you’re in the Log Manager Tools report, click on “Configure Notifications”:

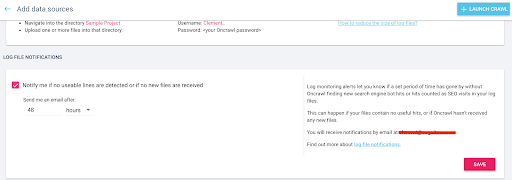

From here you’ll be able to enable the notifications and define a threshold for when you should be contacted if either no useful lines or no new files have been deposited.

These notifications can save you time in pinpointing exactly what has gone wrong with a deposit, which gets you that much closer to having up-to-date reports sooner.
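If it helps to picture what the two thresholds check, here’s a minimal Python sketch of the logic under our own assumptions: each deposit is reduced to a timestamp and a count of useful lines, and a single time window stands in for the threshold you configure. The Deposit class and check_alerts function are hypothetical, not part of Oncrawl; the platform runs these checks for you once notifications are enabled.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Deposit:
    """One log file deposit (hypothetical model)."""
    received_at: datetime
    useful_lines: int


def check_alerts(deposits, now, window):
    """Return the alerts that would fire for the given time window."""
    recent = [d for d in deposits if d.received_at >= now - window]
    if not recent:
        return ["no new files"]
    if sum(d.useful_lines for d in recent) == 0:
        return ["no useful lines"]
    return []


now = datetime(2021, 9, 1, 12, 0)
deposits = [
    Deposit(datetime(2021, 8, 29, 6, 0), useful_lines=5000),
    Deposit(datetime(2021, 8, 31, 18, 0), useful_lines=0),
]
print(check_alerts(deposits, now, timedelta(hours=12)))  # ['no new files']
print(check_alerts(deposits, now, timedelta(hours=24)))  # ['no useful lines']
print(check_alerts(deposits, now, timedelta(days=4)))    # []
```

The same deposits trigger different alerts depending on the window, which is why it’s worth choosing a threshold that matches how often your logs are normally delivered.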


Log Manager Tools

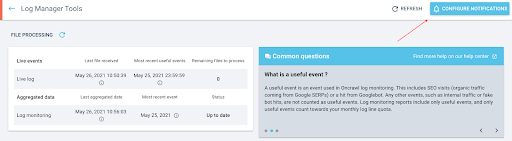

Since we’re on the topic of logs, it seems fitting to mention the Log Manager Tools report, another great area of the platform that isn’t used as often as it could be. It’s a handy hub tucked away at the project level that details exactly what is being deposited in terms of log files.

When you jump in, you’ll see not only the exact files that have been deposited but also the date and time of each deposit, the breakdown of each type of log line (OK, filtered, erroneous), a graph tracking the number of fake bot hits detected per deposit date, and a breakdown of the quality of the deposited logs and the distribution of useful lines.

It’s a great place to check the quality of your file deposits: for example, whether the files are compressed, whether what you’re depositing is actually SEO-related lines (organic visits and bot hits), and how frequently deposits are being made.

If you start to notice anything odd in your log reports, a great place to start your investigation is the Log Manager Tools. You might discover that the log line format has changed and our Customer Success team needs to update the parser, or that the bucket name has changed and you need to send us new credentials. Either way, you can always take a look and reach out to us if you need help digging deeper.
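To illustrate the kind of sorting behind the OK, filtered and erroneous breakdown, here’s a simplified Python sketch. It assumes your servers write the common Apache/Nginx “combined” log format, treats a line as useful if it’s a Googlebot hit or a visit referred by Google, and checks compression via the gzip magic bytes. Oncrawl’s actual parser and rules are more thorough (a real fake-bot check, for instance, would verify the crawler’s IP with a reverse DNS lookup, which this sketch skips), so the classify and is_gzip helpers are illustrative only.

```python
import re
from collections import Counter

# Apache/Nginx "combined" format (assumption: adjust to your own log format).
COMBINED = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] "(?P<request>[^"]*)" '
    r'(?P<status>\d{3}) \S+ "(?P<referer>[^"]*)" "(?P<agent>[^"]*)"'
)


def classify(line):
    """Simplified rules: unparsable -> erroneous, SEO-related -> ok, else filtered."""
    match = COMBINED.match(line)
    if match is None:
        return "erroneous"
    if "Googlebot" in match["agent"]:
        return "ok"  # bot hit (not verified against fake bots here)
    if "google." in match["referer"]:
        return "ok"  # organic visit from a Google results page
    return "filtered"


def is_gzip(data):
    """gzip files start with the two magic bytes 0x1f 0x8b."""
    return data[:2] == b"\x1f\x8b"


sample = [
    '66.249.66.1 - - [18/Aug/2021:10:00:00 +0000] "GET /blog/ HTTP/1.1" 200 512 '
    '"-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.5 - - [18/Aug/2021:10:01:00 +0000] "GET /blog/seo-tips HTTP/1.1" 200 2048 '
    '"https://www.google.com/" "Mozilla/5.0"',
    '203.0.113.6 - - [18/Aug/2021:10:02:00 +0000] "GET /cart HTTP/1.1" 200 128 '
    '"https://example.com/" "Mozilla/5.0"',
    "this line was truncated",
]
counts = Counter(classify(line) for line in sample)
print(counts)
```

A sudden jump in “erroneous” lines in a sketch like this is the same symptom you’d look for in the report when a log format changes and the parser needs updating.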

Personalized segmentations

Last but not least, the most recent addition to our arsenal: Personalized segmentations! We now have a collection of segmentation templates to inspire those new to Oncrawl who’ve never built a segmentation, or to make developing one a little smoother.

A quick run-through on segmentations: they’re essential because you want to know which part of the site you’re looking at when inspecting crawl results. They can be as simple as grouping pages by the first path segment in the URL, or as specific as grouping pages by a range of GA sessions.
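The two examples of segmentation rules mentioned here, grouping by first path segment and grouping by GA sessions, can be sketched in Python as follows. The function names and the session bucket bounds are hypothetical choices for illustration; in Oncrawl you define these groups in the segmentation editor rather than in code.

```python
from urllib.parse import urlparse


def first_path_group(url):
    """Group a page by the first segment of its URL path ('home' for the root)."""
    segments = [s for s in urlparse(url).path.split("/") if s]
    return segments[0] if segments else "home"


def sessions_group(sessions, bounds=(0, 10, 100, 1000)):
    """Bucket a page by its GA sessions; the bounds are arbitrary examples."""
    if sessions < bounds[0]:
        raise ValueError("sessions is below the lowest bound")
    for low, high in zip(bounds, bounds[1:]):
        if low <= sessions < high:
            return f"{low}-{high - 1} sessions"
    return f"{bounds[-1]}+ sessions"


print(first_path_group("https://www.example.com/blog/seo-tips"))  # blog
print(first_path_group("https://www.example.com/"))               # home
print(sessions_group(42))                                          # 10-99 sessions
print(sessions_group(2500))                                        # 1000+ sessions
```

Path-based groups need nothing but the crawl, while session ranges depend on a connected analytics source, which is why the templates you’re offered vary with the connectors set up for the project.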

Beyond giving you a little guidance on why you might use each one, the templates also vary: you’ll have multiple templates available depending on the external data connectors you’ve set up for the project.

If you have log data, you’ll have a template suggestion for SEO Visits or bot hits, and if you have Google Search Console connected you’ll see the option to create a segmentation based on positions or ranking pages.

It’s up to you how you want to view the site; we’re here to make it a little easier. So go ahead, give it a shot and tell us what you think.