JavaScript frameworks make it possible to build modern, interactive and smoothly loading apps. In many situations, this is a huge advantage to users. From the SEO point of view, however, JS is often seen as a nightmare because of the many horror stories that follow its implementation. JavaScript is our new reality. We shouldn’t treat it as a monster but rather learn how to coexist with it.

JS adds complexity and we need to accept it

JS adds additional complexity to a standard website. In many cases, it’s more difficult to audit and debug JS-powered websites. Even if armed with crawlers that can execute JS and reliable data, we need to be prepared to solve unique, never-before-seen issues. As a result, SEO has gained a new branch: JS SEO. In fact, JS SEO is standard technical SEO (every website should always follow good practices of on-page optimization) enhanced by checking how Google interacts with JS-powered websites.

Yes, JavaScript makes our life harder. And since SEOs won’t stop the skyrocketing trend in JS frameworks development, we had best be prepared.

Moving to a JavaScript framework

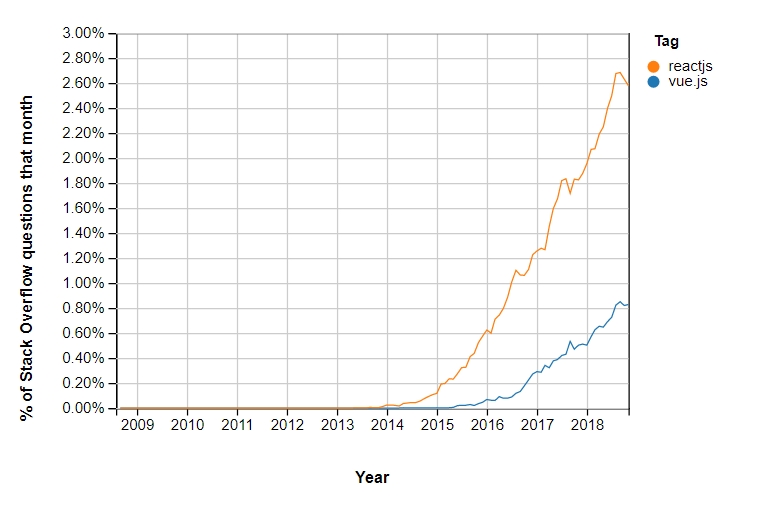

I can imagine a situation where a CTO and developers are planning to implement major changes to the platform you are working on. Most likely they will go with one of the JS frameworks, as we can see in the data from Stack Overflow Trends, interest in the biggest frameworks is continually growing:

If you are faced with such a scenario, do not quit the job. Plan the whole process of implementing and maintaining the JS framework. My vision of the future holds that JS SEO will become a natural element of technical SEO, because more and more websites will have JS dependencies.

In this article, I’ll provide some key elements that you need to watch out for to make sure that the shift to a new framework won’t ruin the whole business.

To pre-render or not to pre-render?

For the sake of clarity, in this article, I use the term “pre-render” to describe the concept of rendering dynamic pages with a freely chosen infrastructure and sending the rendered version when a given User-Agent makes the request.

Before a migration, you will need to decide if you should pre-render (or Server-Side Render) the pages. The answer is, it depends ( :) ) but in most cases, yes, you should.

Below you will find the paths you can choose if you have (or you will have) a JS-heavy website:

- Leave it as it is and rely on client-side rendering (CSR)

Sidenote: Google can deal with processing JS (keep in mind that it is still far from ideal). Other search engines, however, do not have this ability. So if you are focused on growing in Bing or Yahoo, never rely on CSR.

- Implement Server-Side Rendering (SSR) – you have many options to do so. They depend on your framework, website size, and the type of content.

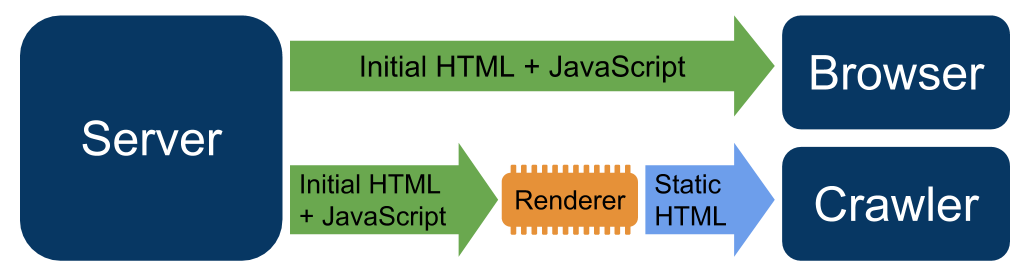

The difference is represented by this diagram:

If you pick SSR, you have two approaches:

- Dynamic Rendering – in this case, you need to build an additional infrastructure that will detect a User-Agent that makes the request to your website, and if it is a search engine crawler, it serves the pre-rendered version of the page. Dynamic rendering is a concept that was introduced during Google I/O 2018 and is well-described in Google’s documentation. Basically, it means that you can detect the User-Agent and send a Client-Side Rendered (CSR) version to normal users and a Server-Side Rendered (SSR) version to crawlers.

- Hybrid Rendering – in this case, both the users and crawlers receive the SSR version of the page on initial request. All subsequent user interactions are served with JS. Keep in mind that while it crawls, Googlebot doesn’t interact with the website. It extracts the links from the source code and sends the requests to the server, so it always receives the SSR version.
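The User-Agent check behind Dynamic Rendering can be sketched in a few lines. This is only a minimal illustration, not a production setup: the token list and the names `isSearchCrawler` and `chooseVariant` are assumptions made for this example, and a real implementation would sit in your server or proxy layer.

```typescript
// Hypothetical list of crawler tokens; extend it to match the bots you care about.
const CRAWLER_TOKENS: string[] = ["googlebot", "bingbot", "yandex", "baiduspider", "duckduckbot"];

type RenderVariant = "prerendered" | "client-side";

// Returns true when the User-Agent header contains one of the known crawler tokens.
function isSearchCrawler(userAgent: string | undefined): boolean {
  if (!userAgent) return false;
  const ua = userAgent.toLowerCase();
  return CRAWLER_TOKENS.some((token) => ua.indexOf(token) !== -1);
}

// Dynamic Rendering: crawlers get the pre-rendered snapshot, everyone else gets the CSR app.
function chooseVariant(userAgent: string | undefined): RenderVariant {
  return isSearchCrawler(userAgent) ? "prerendered" : "client-side";
}
```

With Hybrid Rendering this branch disappears, because every initial request receives the SSR version regardless of the User-Agent.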

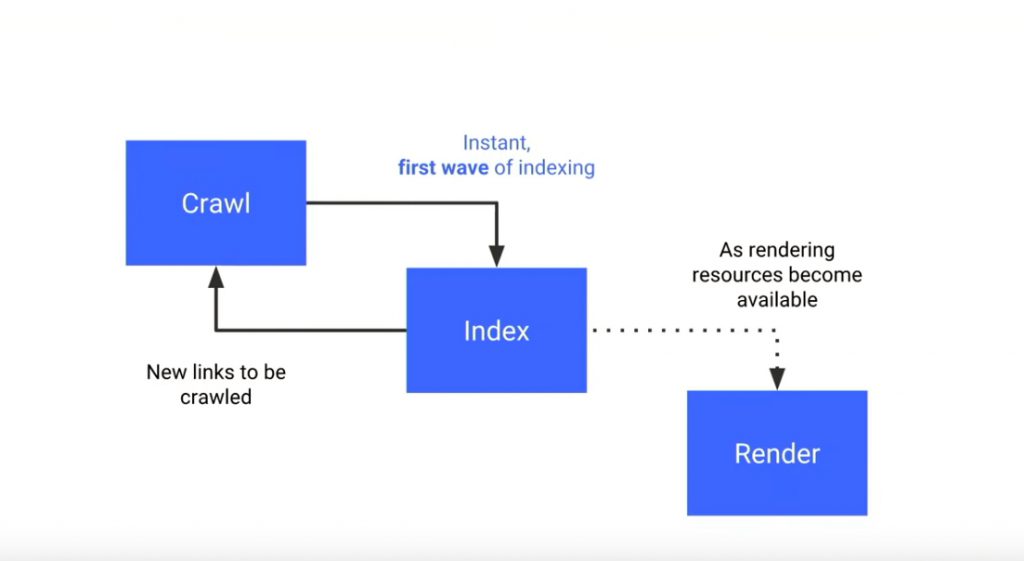

So how do you pick the best option? Actually, it depends on the characteristics of the website. If you have a big structure with dynamic content that is refreshed very often, you should probably implement the Dynamic Rendering or Hybrid approach. The reason for that is the rule of the two waves of indexing that Google applies to JS-heavy websites.

In the first wave, Google indexes the page without executing JavaScript, so the content and navigation that depend on JS won’t be discovered. In the second wave, once resources are available, Google executes the JavaScript on your website and can finally see the content and index it properly. The whole process may take a while longer.

If you have a news portal or you publish time-limited offers, it may happen that Google won’t discover the content on time and the competitors will have the advantage over you. To avoid this issue you will need to render the pages for Google and send them directly to the crawler. But if you have a small website and you don’t change the content very often, you can give CSR a try.

Hi @JohnMu, what are your current thoughts on GB rendering of SPA’s? … I’m not sure if JS rendering is working “well” for me yet.

— Kim Dewe (@kimdewe) July 16, 2018

Google can execute and render JS-powered websites but you need to check if they do it correctly and, as importantly, efficiently.

Validated version served to Googlebot

Whatever your choice was in the case of SSR or CSR, you need to check what you serve to Googlebot. I gathered some critical elements that you should include in your debugging process to make sure that Google sees the content correctly and can efficiently index the website.

How does Google render my page?

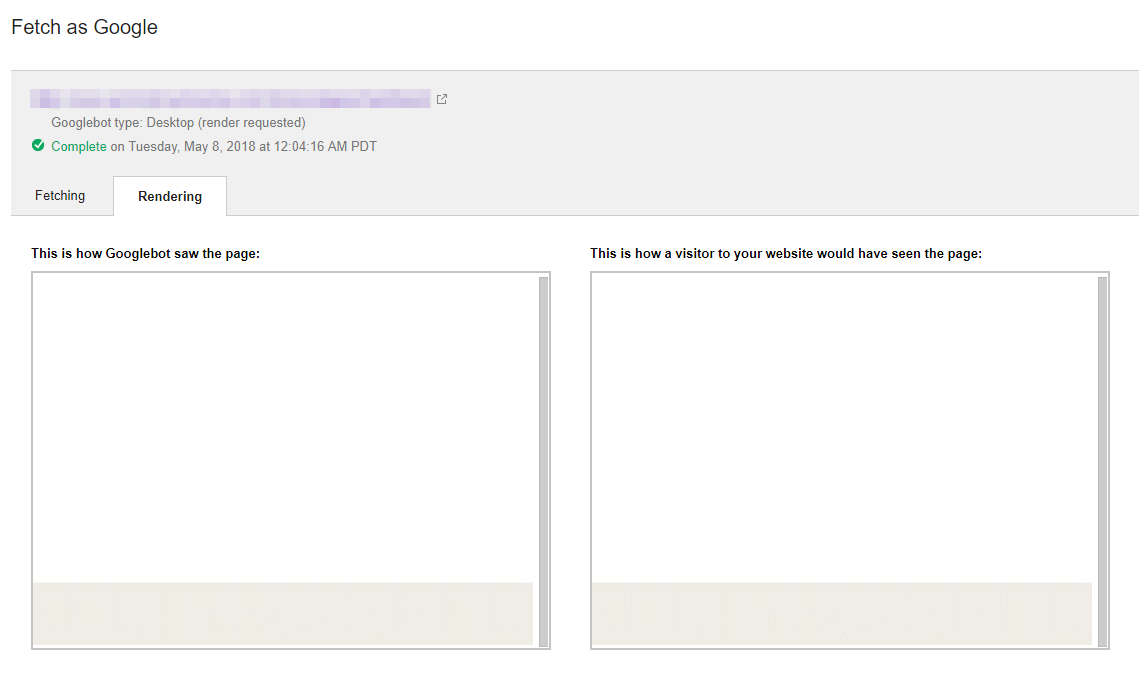

Google Search Console can check whether Google is able to render the page correctly. All you need to do is use the Fetch and Render tool as both a Desktop and a Smartphone User-Agent. If you see that the page is rendered correctly, that’s great. However, I would recommend tempering your expectations about what you see there: when it comes to waiting for resources to download, the Fetch and Render tool is more patient than the Indexer.

Google may fail to index the content even if it can technically render the page, because resources such as JS, CSS or image files time out. This problem may appear when the server infrastructure is not efficient enough to serve the resources. If they aren’t delivered on time, the rendering process will fail. As a result, the page may be rendered only partially, or Google may receive a blank page.

In such a case, Google may not index your page at all or won’t see the content you want to get indexed. If you send blank pages at a massive scale, Google may mark them as duplicates.

There are different methods of identifying this issue:

- A manual check if the content is indexed (site: command)

- In the crawlers’ data, look for the pages with a low number of words. This method is applicable if you know that these pages should have better metrics.
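The second method can be automated against a crawl export. Below is a rough sketch that assumes each row carries a URL and a word count taken from the rendered page. The `CrawledPage` shape, the sample URLs and the threshold of 100 words are all hypothetical, so pick a threshold that reflects what your templates should normally contain.

```typescript
// Shape of one row in a crawl export; the field names are hypothetical.
interface CrawledPage {
  url: string;
  wordCount: number; // words found on the page as seen by the crawler
}

// Returns URLs whose word count is below the threshold, lowest first.
function findThinPages(pages: CrawledPage[], minWords: number): string[] {
  return pages
    .filter((page) => page.wordCount < minWords)
    .sort((a, b) => a.wordCount - b.wordCount)
    .map((page) => page.url);
}

// Example data: one healthy page, one blank render, one partial render.
const crawl: CrawledPage[] = [
  { url: "/offers/1", wordCount: 640 },
  { url: "/offers/2", wordCount: 0 },
  { url: "/offers/3", wordCount: 35 },
];

const suspicious = findThinPages(crawl, 100);
```

The flagged URLs are then good candidates for the manual site: check.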

Can Google execute JS on my website?

This should be checked if you are not using SSR.

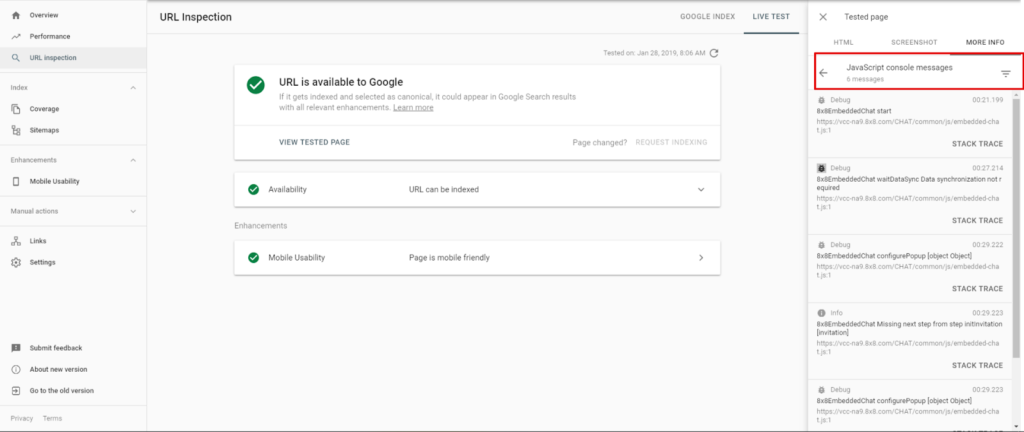

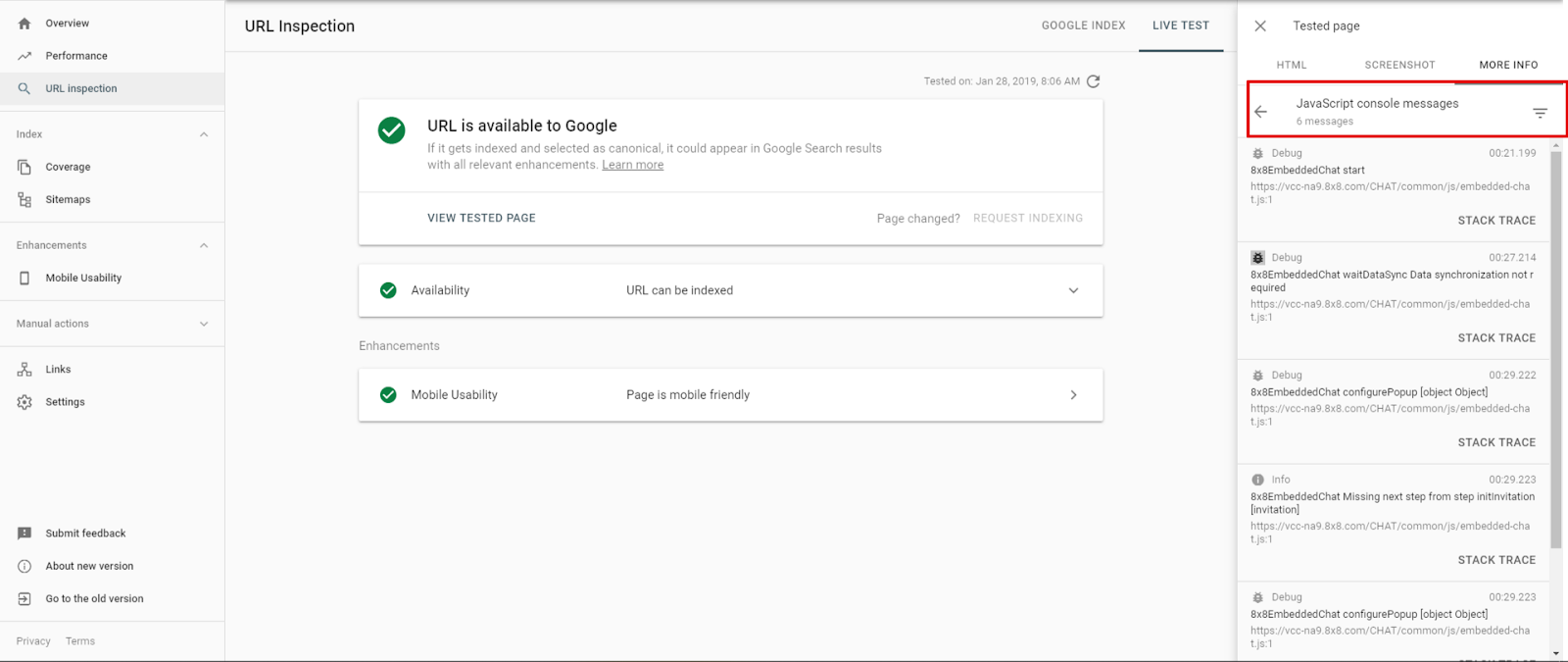

Google may find problems with JS on your website that will prevent proper rendering. In the past, there were 2 options to check:

- Downloading Chrome 41 (3-year-old browser, used by Google for rendering the pages) and checking the console.

- Checking for errors in Mobile Friendly Tester

Now, Google provides a new feature in GSC that allows you to check JS errors on the desktop version of your website. You need to use the URL Inspection tool and see what types of problems Google encounters.

For now, the JS errors we can see in the URL Inspection tool show only the data for the desktop version. If you want to check errors for both the mobile and desktop versions of the website, you need to use both the Mobile-Friendly Test and the URL Inspection tool in Google Search Console.

Cache settings

If you create pre-rendered snapshots you need to watch out for the cache setting for these files. This is quite a common issue where rendering is not synchronized with the moment of uploading new offers or changing the content. As a result, the versions served to users and to the crawlers are slightly different. In some niches like real estate, even small delays in refreshing the offers may have dramatic results in organic search. Many times, the same offer is published on different portals. Whoever indexes it first gains the privilege of being the source.
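One way to keep snapshots in sync is to compare the time a snapshot was rendered with the time the underlying content last changed. The sketch below assumes you can obtain both timestamps; the `Snapshot` fields and function names are invented for illustration.

```typescript
// Hypothetical record describing one cached, pre-rendered page.
interface Snapshot {
  url: string;
  renderedAt: number;       // Unix time in ms when the snapshot was generated
  contentUpdatedAt: number; // Unix time in ms when the source content last changed
}

// A snapshot is stale if the content changed after rendering,
// or if the snapshot is older than the allowed maximum age.
function isStale(snapshot: Snapshot, now: number, maxAgeMs: number): boolean {
  const changedSinceRender = snapshot.contentUpdatedAt > snapshot.renderedAt;
  const tooOld = now - snapshot.renderedAt > maxAgeMs;
  return changedSinceRender || tooOld;
}

// Returns the URLs that should be re-rendered before crawlers request them again.
function snapshotsToRefresh(snapshots: Snapshot[], now: number, maxAgeMs: number): string[] {
  return snapshots.filter((s) => isStale(s, now, maxAgeMs)).map((s) => s.url);
}
```

Triggering a re-render whenever an offer is published or edited, rather than waiting for the cache to expire, keeps the delay between users and crawlers as small as possible.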

Remove JS from pre-rendered version

JavaScript can manipulate the elements on the website. It may lead to dramatic results if it interferes with metadata or crucial content on your website. Google executes JS, so it will notice these changes too. If you decided to serve a Client-Side Rendered version to Google, make sure that JS doesn’t make changes in:

- Meta titles and descriptions

- Meta robots instructions

- Hreflangs

- Content

@JohnMu does Googlebot fully understand Angular.js ng-if robots meta tags? e.g. <meta ng-if="pageHasNoResult" name="robots" content="noindex,follow"/> <meta ng-if="!pageHasNoResult" name="robots" content="noarchive,index,follow"/>

— Lloyd Cooke (@lloydcooke) February 23, 2018

Even if you decided to serve pre-rendered pages to Google, it doesn’t mean that you are free from this issue. It may happen that Googlebot will receive a static, pre-rendered version that still has some JS that changes the elements on the page. The worst scenario: it changes the meta robots directives, Google executes it and overwrites the initial setup.

Unfortunately, you can’t rely on the data in the Fetch and Render tool, because it shows the raw HTML. To diagnose this issue you need to check the DOM (code after executing all JS) in Mobile-Friendly Test and see if all the important elements (meta data, content) are unchanged.
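If you save the raw HTML and the rendered DOM as text, the comparison can be scripted. The following is a simplified sketch: it uses regular expressions that assume double-quoted attributes with `name` appearing before `content`, and all function names are made up for this example. A real audit should use a proper HTML parser.

```typescript
interface CriticalTags {
  title: string | null;
  robots: string | null;
  description: string | null;
}

// Reads the content attribute of <meta name="..."> with a simple regex.
// Assumption: double-quoted attributes, with name placed before content.
function metaContent(html: string, name: string): string | null {
  const pattern = new RegExp('<meta[^>]*name="' + name + '"[^>]*content="([^"]*)"', "i");
  const match = pattern.exec(html);
  return match ? match[1] : null;
}

// Pulls out the tags that matter most for indexing.
function extractCriticalTags(html: string): CriticalTags {
  const title = /<title[^>]*>([^<]*)<\/title>/i.exec(html);
  return {
    title: title ? title[1].trim() : null,
    robots: metaContent(html, "robots"),
    description: metaContent(html, "description"),
  };
}

// Lists the tags whose value differs between the raw HTML and the rendered DOM.
function changedByJs(rawHtml: string, renderedDom: string): string[] {
  const before = extractCriticalTags(rawHtml);
  const after = extractCriticalTags(renderedDom);
  const keys: (keyof CriticalTags)[] = ["title", "robots", "description"];
  return keys.filter((key) => before[key] !== after[key]);
}
```

An empty result means JS left the title, meta robots and meta description untouched. Any entry in the list deserves a closer look, especially `robots`.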

Clear cookies while rendering the content

This problem may affect websites that use a headless browser to render the pages for search engine crawlers. Cookies are tiny but extremely powerful bits of information stored by the browser. Some websites use them to store user preferences like language settings, currency or device. They are powerful because they can trigger redirection or update the content on the page. Google doesn’t store cookies while crawling and rendering, so it won’t notice anything you change on the basis of the information stored in cookies.

If you decide to use Dynamic Rendering to render the pages for crawlers, you need to check whether cookies are stored in the rendering tool. If they are, you need to clear them after each page is rendered. Keep in mind that behind Puppeteer or Rendertron stands a headless browser that runs the scripts, renders the page and creates a static version of it. So it behaves like a standard browser.

A headless browser also has the ability to store cookies and it may happen that the snapshots will contain changes triggered by them.

We were involved in consulting on a Dynamic Rendering implementation. If a cookie stored in the browser didn’t match the language of a given page, it triggered a redirection to the homepage. Of course, this was buggy from an international SEO point of view, but it also had extremely negative consequences for indexing. Unfortunately, Puppeteer, installed on the server to render the pages, stored the cookies and was redirected just as normal users were. As a result, the snapshots for a large number of URLs contained the homepage view rather than the content of the given page.
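A problem like this can be caught by comparing the URL the renderer was asked to snapshot with the URL the headless browser actually ended up on (in Puppeteer, `page.url()` reports the latter after navigation). The sketch below only shows that comparison; the `RenderResult` shape and the example URLs are hypothetical.

```typescript
// Hypothetical log entry produced by the rendering service for each snapshot.
interface RenderResult {
  requestedUrl: string; // URL the renderer was asked to snapshot
  finalUrl: string;     // URL the headless browser ended up on after any redirects
}

// Strips trailing slashes so that trivial differences are not reported.
function normalize(url: string): string {
  return url.replace(/\/+$/, "");
}

// Returns the snapshots that were taken on a different URL than the one requested.
function redirectedSnapshots(results: RenderResult[]): RenderResult[] {
  return results.filter((r) => normalize(r.requestedUrl) !== normalize(r.finalUrl));
}

// Example: the second snapshot was redirected to the homepage.
const renderLog: RenderResult[] = [
  { requestedUrl: "https://example.com/de/angebot-1", finalUrl: "https://example.com/de/angebot-1/" },
  { requestedUrl: "https://example.com/de/angebot-2", finalUrl: "https://example.com/" },
];

const redirected = redirectedSnapshots(renderLog);
```

Any snapshot flagged here should be discarded and re-rendered with a clean cookie store.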

Monitor performance

Site speed is something that will not only impact crawling speed but also affect user experience. JS-powered websites might be extremely heavy and if you do not follow the best practices of shipping the resources with performance in mind, your users will suffer from a laggy application.

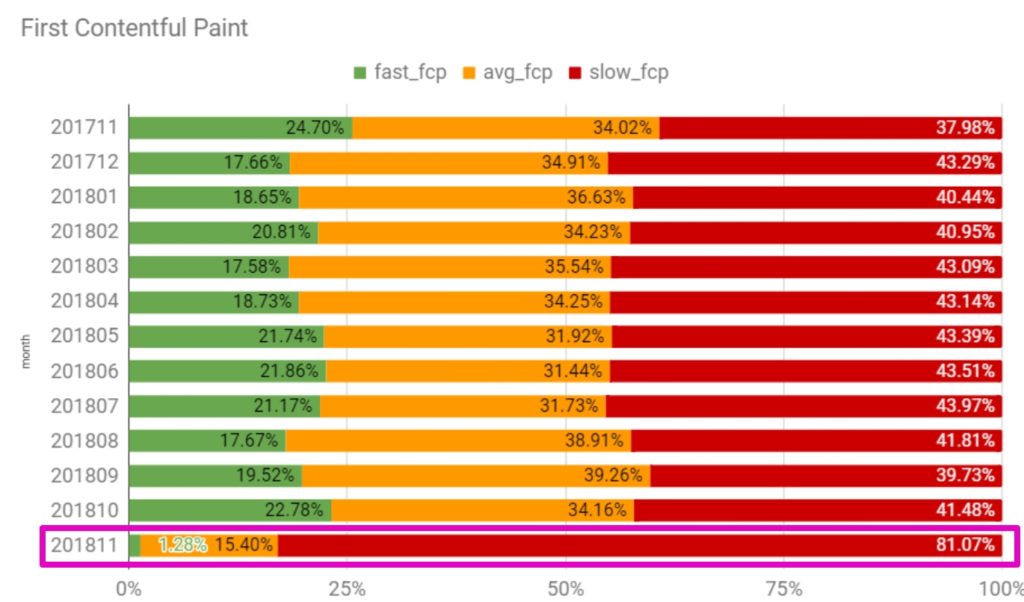

Lighthouse and Webpagetest are some of the best free tools for testing performance in a lab environment. They can reveal the bottlenecks and give hints on what to improve. These tools help you to capture the metrics in given conditions, however, they do not provide information on how real users perceive the performance of your website. Invaluable insights are given by the Chrome User Experience Report where you can see how real users perceive performance. These metrics are captured for users using the Chrome browser. Below you will see what may happen after the change to the JS framework. Until November, it was a standard, static website. Then they switched to a JS framework and the data from the CRUX report showed the following impact on users.

81% of users perceived First Contentful Paint (FCP) as slow. This number doubled in comparison to the previous months! The users did not appreciate the change and the website owners are faced with a long road to performance improvement.

Practice explaining very complex issues in simple words

This is not strictly SEO-related advice. Analyzing and debugging JS-powered websites moves us to a different level of technical analysis. SEOs need to understand what happens on the server and how exactly the page is rendered.

The web has moved from plain HTML – as an SEO you can embrace that. Learn from JS devs & share SEO knowledge with them. JS’s not going away.

— John (@JohnMu) August 8, 2017

JS websites all have one thing in common: their issues are often unique, and many times they are not easy to capture and explain. SEOs need to learn how to communicate with developers and cooperate with them. Explaining complex and tricky issues in very precise words is now even more important than it was before.

I feel that the biggest issue with implementing SEO is the miscommunication between SEOs and dev teams. In the new world, there is little space for misunderstanding.

Final thoughts

JavaScript is not a nightmare (but it can be). There are plenty of JS-powered websites that grow in organic search. In this article, I presented some key elements that need to be verified in the case of JS-powered websites (it is not an exhaustive list, though), extended by the recent findings of Elephate’s team. Happy analyzing!