Data is becoming more and more important thanks to the evolution of data collection methodologies and computational power. Digital marketing and SEO have picked up these new technologies thanks to the efforts of the SEOs who first employed them.

Natural Language Processing (NLP) tools are one such example: they tackle problems such as clustering keywords and recognizing entities in documents. Text generation is another hot topic right now, and it’s thanks to this field of machine learning that we can test and use such new tools.

In this article, I would like to address why integrating these new skills can be extremely handy, especially if you are a novice SEO and you are tired of reading the usual advice.

Below, we’ll walk through a list of tasks and examples which explain how data can enhance your SEO strategy and save you a lot of time.

Let’s say, for example, that you just made a script that automates a task that takes you one hour on average. If it takes one minute to run the script and five hours to write it, you only need to use it six times to reap the value of your efforts.
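The break-even point in the example above can be checked with a few lines of Python. This is only a sketch of the arithmetic; the function name `break_even_runs` and its parameters are illustrative and not part of any library.

```python
import math


def break_even_runs(manual_minutes: float, script_minutes: float, dev_minutes: float) -> int:
    """Return how many runs it takes for the script to pay back its development time."""
    saved_per_run = manual_minutes - script_minutes
    if saved_per_run <= 0:
        raise ValueError("The script must be faster than the manual task.")
    # Round up: a partial run does not count.
    return math.ceil(dev_minutes / saved_per_run)


# One hour by hand, one minute with the script, five hours to write it.
runs = break_even_runs(manual_minutes=60, script_minutes=1, dev_minutes=5 * 60)
print(runs)
```

Each run saves 59 minutes, so 300 minutes of development are repaid on the sixth run.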

Data analysis is no different. Some insights are not visible to the naked eye, and Excel is neither powerful nor versatile enough to tackle some problems.

Clustering

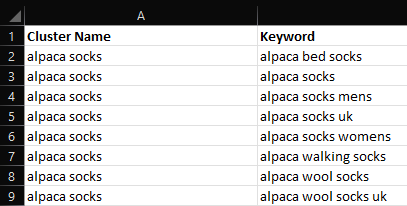

Clustering is a set of techniques aimed at grouping objects together. The techniques are powerful for keyword research and detecting issues at scale as manually grouping keywords can be a big waste of your time, especially if you are dealing with 10K or more of them.

Imagine you have to understand how many articles you should write given a set of keywords. You would have to manually go through the file and create some groups, hoping that you got it right. That represents an immense amount of work and there is no reason to do that considering clustering can solve this issue and provide you with a solid basis to begin with. Undeniably, there is a percentage of manual work involved in most cases, but it’s by no means comparable.

There are many types of clustering techniques, some of which I mention below. In my experience, the best results come from clustering the embeddings produced by sentence-transformers, a library built on transformer models. It’s my top choice because these embeddings capture the semantics behind sentences and words.

By the term transformer, we refer to a specific type of neural network architecture based on attention. Attention is a mechanism that allows these models to connect any part of the sequence of text they are analyzing with any other part. This is a very convenient feature for natural language because the words we use assume a different meaning based on the context.

Other common techniques include K-means and DBSCAN, classic clustering algorithms that are not based on neural networks. When they work on simple word-count features, I’ve found they don’t perform as well as approaches built on transformer models.

Making the right choice depends on the speed you want and on the degree of accuracy you need. In cases where speed is important, you can stick to K-means and use TF-IDF to turn your keywords into numeric features. Don’t worry too much about knowing all the possible types. You only need to focus on what works for you and what can increase the efficiency of your projects.
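To make the idea of grouping keywords by TF-IDF similarity concrete, here is a minimal standard-library sketch. It is deliberately not K-means or sentence-transformers: it uses a simpler greedy grouping by cosine similarity as a stand-in. The keyword list, the `threshold` value, and all function names are assumptions made for illustration.

```python
import math
from collections import Counter


def tfidf_vectors(keywords):
    """Turn each keyword into a {token: TF-IDF weight} dictionary."""
    tokenized = [kw.lower().split() for kw in keywords]
    doc_freq = Counter(tok for tokens in tokenized for tok in set(tokens))
    n_docs = len(keywords)
    vectors = []
    for tokens in tokenized:
        counts = Counter(tokens)
        vectors.append({
            # Smoothed IDF, so that frequent tokens still carry some weight.
            tok: (count / len(tokens)) * (math.log((1 + n_docs) / (1 + doc_freq[tok])) + 1)
            for tok, count in counts.items()
        })
    return vectors


def cosine(a, b):
    """Cosine similarity between two sparse vectors stored as dictionaries."""
    dot = sum(weight * b.get(tok, 0.0) for tok, weight in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def group_keywords(keywords, threshold=0.2):
    """Greedy grouping: attach each keyword to the first group whose seed is similar enough."""
    groups = []  # list of (seed_vector, [keywords])
    for kw, vec in zip(keywords, tfidf_vectors(keywords)):
        for seed, members in groups:
            if cosine(seed, vec) >= threshold:
                members.append(kw)
                break
        else:
            # No existing group is close enough, so this keyword starts a new one.
            groups.append((vec, [kw]))
    return [members for _, members in groups]


keywords = [
    "running shoes for women",
    "best running shoes",
    "cheap running shoes",
    "espresso coffee maker",
    "coffee maker reviews",
]
clusters = group_keywords(keywords)
for cluster in clusters:
    print(cluster)
```

Because this approach only looks at shared words, it would not group "sneakers" with "running shoes". That gap is what embedding-based methods are meant to close.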

Pros:

- Top solution for automation

- High ROI potential

Cons:

- It’s easy to get it wrong and get bad results

- Paid tools are expensive

Data visualization (DataViz)

Showing is almost always better than telling and data is no exception. There are times when you want to make your point clear and leave no room for misunderstanding. For instance, you may want to show how many queries you are no longer ranking for. Or, if you want to show how much revenue you are losing over time, data visualization would be an impactful way to do so.

DataViz is more of an art than a science and it takes a lot of practice and domain knowledge to create powerful visualizations. As an SEO, you don’t need to be a master, but you do need to understand how to plot data and what to present to potential stakeholders, which is no easy feat.

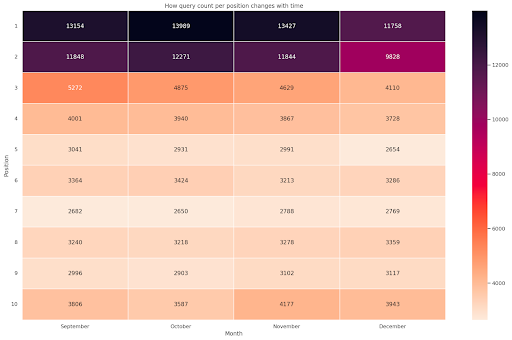

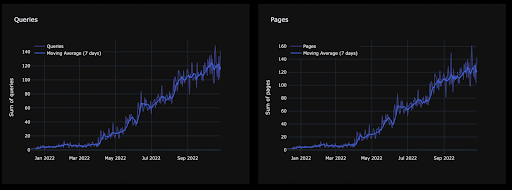

Let’s look at a practical example. The Google Search Console interface is quite limited and doesn’t show you much information, and you shouldn’t report basic metrics such as clicks and impressions without context.

After some pivoting, you can easily plot a heatmap to show progress over time; it’s not that hard with basic Excel knowledge. I previously addressed the subject in my article about extracting data from GSC.

What’s challenging is not the coding per se; it’s the story you want to tell and how to fit what you produce into a wider context.

There are some types of visualizations that are definitely better for some scenarios. The aforementioned heatmaps, for instance, are great if you are dealing with dates and categorical variables, such as position brackets for your queries.
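The table behind such a heatmap is just a pivot of dates against position brackets. The sketch below builds it with the standard library only. The rows, the bracket boundaries, and the function names are hypothetical and only illustrate the shape of the data, not an actual Search Console export format.

```python
from collections import defaultdict

# Hypothetical rows: (month, query, average position).
rows = [
    ("2023-01", "seo audit", 2.4),
    ("2023-01", "log file analysis", 8.1),
    ("2023-01", "crawl budget", 14.7),
    ("2023-02", "seo audit", 1.9),
    ("2023-02", "log file analysis", 3.0),
    ("2023-02", "crawl budget", 9.5),
]

# Assumed brackets; pick the ones that make sense for your reporting.
BRACKETS = ["1-3", "4-10", "11+"]


def position_bracket(position: float) -> str:
    """Map an average position to a categorical bracket."""
    if position <= 3:
        return "1-3"
    if position <= 10:
        return "4-10"
    return "11+"


def pivot_by_bracket(rows):
    """Count queries per month and position bracket (one heatmap cell each)."""
    table = defaultdict(lambda: dict.fromkeys(BRACKETS, 0))
    for month, _query, position in rows:
        table[month][position_bracket(position)] += 1
    return dict(table)


pivot = pivot_by_bracket(rows)
for month in sorted(pivot):
    print(month, [pivot[month][bracket] for bracket in BRACKETS])
```

Each printed row is one line of the heatmap. A plotting tool or a spreadsheet with conditional formatting can then color the cells.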

The main issue with DataViz is that inexperienced users may misinterpret the information. Visualization isn’t just about coding; you need a bit of color theory and common sense. Plots are powerful weapons if you know how to use them; otherwise, they can be dangerous.

Many people miss the real point of data, which is storytelling and effective communication. DataViz is the ideal form of training to hone your skills and say more with less. This is what makes the difference between a great SEO analyst and the average technical person with some coding knowledge.

Pros:

- Makes it easier to visualize patterns

- Powerful weapon to convince management or skeptical C-level executives

Cons:

- Hard to master

- It’s easy to get it wrong and draw misleading conclusions

Scraping

Our job often involves scraping websites to check if there are errors or to look for technical fixes. Scraping can be used to gather data too and some of the most value-adding activities involve this process.

The possibilities are numerous. You can:

- Scrape your competitors’ sitemap and get their publishing rate

- Understand what the most common words or topics are

- Analyze their text to look for entities
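As a rough illustration of the first idea, the sketch below counts sitemap entries per month using the standard library. The XML is a hypothetical, already-downloaded sitemap. It also assumes that `lastmod` is close to the publication date, which is not guaranteed because the field records the last modification.

```python
import xml.etree.ElementTree as ET
from collections import Counter

# Hypothetical sitemap content; in practice this would be the downloaded file.
SITEMAP_XML = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/post-a</loc><lastmod>2023-01-05</lastmod></url>
  <url><loc>https://example.com/post-b</loc><lastmod>2023-01-20</lastmod></url>
  <url><loc>https://example.com/post-c</loc><lastmod>2023-02-11</lastmod></url>
  <url><loc>https://example.com/about</loc></url>
</urlset>"""

# Sitemap elements live in this XML namespace.
NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def entries_per_month(sitemap_xml: str) -> Counter:
    """Count URLs per YYYY-MM based on their lastmod value."""
    root = ET.fromstring(sitemap_xml)
    months = Counter()
    for url in root.findall("sm:url", NS):
        lastmod = url.findtext("sm:lastmod", namespaces=NS)
        if lastmod:  # URLs without a lastmod value are skipped
            months[lastmod[:7]] += 1
    return months


rate = entries_per_month(SITEMAP_XML)
for month, count in sorted(rate.items()):
    print(month, count)
```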

You don’t need to learn to code to execute this task as there are many tools readily available. The main uses involve monitoring prices, getting new articles or products daily from a list of websites, and analyzing your competition. In general, scraping can give you access to the best dataset out there. This is one of the activities I recommend you learn because the potential is extremely high.

E-commerce SEOs in particular have a lot to gain from this: scraping prices in competitive industries is a big challenge, but it allows you to quickly detect anomalies in pricing policies.

If you are more into content, this is still key because you can scrape RSS feeds in search of the latest posts. Alternatively, you can just get a long list of articles from other websites and then crawl those URLs to analyze their text.

Scraping is the foundation to start gathering your data and leveling up your SEO game. I highly recommend you start here.

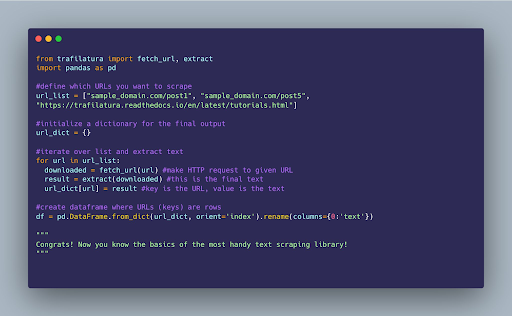

My favorite library for this task is trafilatura if I have to scrape the text of blog posts, otherwise it’s Scrapy without a doubt.

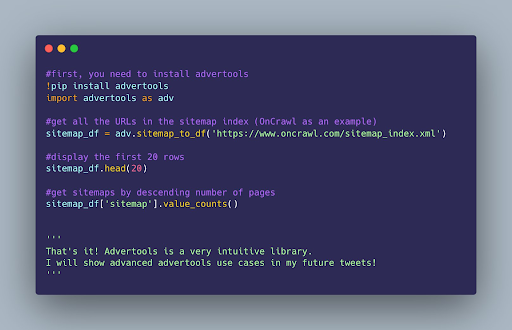

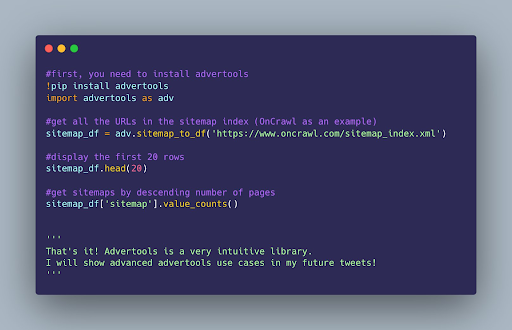

Since Scrapy is quite hard to use the first time, you can just use advertools, whose crawler is built on top of it. One line of code can perform something that would otherwise take much more effort.

Pros:

- The only way to get some data that is not obtainable via tools

- Maximum flexibility

- Key for programmatic SEO

Cons:

- Resource-intensive

- It’s not as easy as it seems

Text analysis

My favorite choice and one of the most underrated data techniques in the SEO community is text analysis. There is a lot of focus on automation but way less on analysis and insights. Text analysis, a form of intelligent automation, allows you to examine a large amount of text and find interesting opportunities for optimization. The process, which often relies on software that leverages machine learning and natural language processing algorithms, makes examining a lot of text-based data scalable and consistent.

The tools you may have heard about include Google’s AutoML and Natural Language API, and Amazon Comprehend. These tools employ techniques such as checking n-grams and entities to find relevant, potential improvements for your articles. Although they may not always extract much useful information, you may hit the jackpot with the right mindset.
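Checking n-grams does not require a paid service. The following sketch counts the most frequent bigrams in a text with the standard library; the sample text and the `ngrams` helper are made up for illustration.

```python
import re
from collections import Counter


def ngrams(text: str, n: int = 2) -> Counter:
    """Count word n-grams in a text (lowercased, punctuation ignored)."""
    tokens = re.findall(r"[a-z0-9']+", text.lower())
    # Note: this simple version lets n-grams span sentence boundaries.
    return Counter(" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


text = (
    "Technical SEO audits start with crawl data. "
    "Crawl data shows how bots reach your pages, "
    "and crawl data reveals orphan pages."
)
bigrams = ngrams(text, 2)
print(bigrams.most_common(3))
```

On real articles you would usually filter out stop words first, otherwise pairs like "of the" dominate the counts.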

Pros:

- Not many people use it for SEO, so it’s easy to get original insights

- Next-level optimization

- Can be used to find customer complaints or analyze scraped data

Cons:

- Computationally expensive

Topic modeling

Sometimes it’s hard to understand subtopics on a website. If you are working on a content website, chances are that you can’t compete with goliaths, so you need to target less popular and more specific topics. Finding them can be hard if you don’t know the industry and have to go through them manually.

Another perspective on the subject involves thinking about topics as potential elements on which to judge a website. You can obtain text data from your competitors’ websites and then model the topics they have to understand how they could be perceived by a search engine.

My favorite library for that is BERTopic, which I consider the best Python library for this task. The most common use case is understanding your competitors’ websites at scale and checking where you should invest. This method is excellent for preliminary analysis and for niche selection.

Pros:

- Mimic how a machine could interpret your website

- Find latent topics

Cons:

- Computationally expensive

- Not always accurate for some topics

- Easy to get it wrong

Entity extraction

Scouting for entities is quite an underrated task as many SEOs still ignore them. We know for sure that Google and modern search engines rely on semantics and different criteria to index and rank documents. Knowing what entities are popular in a certain niche is key if you want to scale your content quality or just find new topics that you didn’t cover.

The most popular choices involve either scraping SERPs with a list of keywords to spot interesting opportunities or analyzing a competitor’s website. You can also check your website and perform a gap analysis to see where you are lacking.

In other words, you can just check which entities appear on another website but not on yours. Those that are missing are of most interest and can be integrated into your content.
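Once the entities are extracted, the gap analysis itself is a simple comparison. The sketch below assumes you already have entity counts for both websites. The names and numbers are invented, and the extraction step is left out on purpose.

```python
from collections import Counter

# Hypothetical entity mention counts, e.g. produced by an entity extractor.
my_mentions = Counter({"Google Search Console": 8, "Python": 3, "XML sitemap": 4})
competitor_mentions = Counter({
    "Google Search Console": 12,
    "Knowledge Graph": 9,
    "Python": 7,
    "BERT": 5,
})

# Entities the competitor covers and you do not, most mentioned first.
gap = [
    (entity, count)
    for entity, count in competitor_mentions.most_common()
    if entity not in my_mentions
]
print(gap)
```

Sorting by the competitor's mention count is one possible way to prioritize. A salience score could be used instead where an API provides one.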

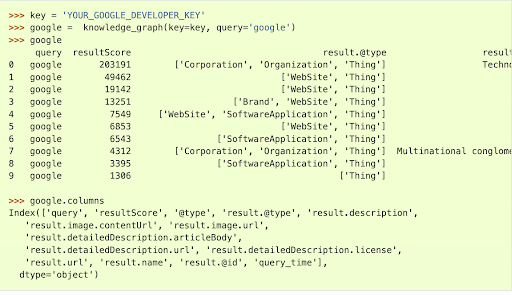

One of the most famous APIs to perform this task is Google’s Natural Language API. I don’t use it anymore because, in my experience, it behaves as if it was trained on a very “small” and old dataset, so it fails in niches like fiction or e-commerce.

This API has one good metric though, called salience. It measures the importance of entities in your text, meaning that a high value signals more relevance. Nonetheless, salience isn’t necessarily the most important metric, as you may prefer different interpretations. As an alternative, the spaCy library is quite advanced and can be trained with new texts to perform even better.

The example above shows you why it’s necessary to train models correctly. Some fictional names are recognized with the wrong type and the only way to adjust this is to train a custom model.

SEOs don’t need to be scientists or engineers, but if you are going to take this path, you need basic proficiency in handling these problems. Think in terms of domains and industries: you will likely need to recognize entities for specific topics.

The only issue with training a custom model is that it takes a lot of time and resources. For this reason, I prefer using other services like TextRazor for entity extraction. This task is just one of the many NLP tasks you can do today, and one key example of how machine learning affects your digital marketing campaigns.

Pros:

- Useful to uncover topic gaps

- Find entities and build a content network around them

- Essential to evaluate your entire website

Cons:

- You need a good entity extractor

- It can easily cost a lot of money

Automation

Automation is one of the tasks that adds the most value and also the one that can save you the most time. Automating boring tasks is a good way to free up time for the strategic part of SEO and avoid wasting it on operations of lesser importance. You can automate pretty much every SEO task; common examples include creating title tags and descriptions, and even improving image resolution.

Coding languages such as Python and R are the best choices, even though the former has the edge because it’s a general-purpose programming language and has better libraries for this task.
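As a small example of this kind of automation, the sketch below generates title tags from a fixed template. The template, the 60-character limit, and the `build_title` function are assumptions chosen for illustration, not a rule from any search engine.

```python
def build_title(product: str, category: str, brand: str, max_length: int = 60) -> str:
    """Fill a fixed template and shorten it if it exceeds max_length."""
    title = f"{product} | {category} | {brand}"
    if len(title) <= max_length:
        return title
    # Drop the category first: it is the least specific part of this template.
    title = f"{product} | {brand}"
    if len(title) <= max_length:
        return title
    # Last resort: cut at the last space before the limit.
    return title[:max_length].rsplit(" ", 1)[0].rstrip(" |")


short_title = build_title("Trail Running Shoes", "Footwear", "Acme")
long_title = build_title(
    "Waterproof Lightweight Trail Running Shoes for Winter Marathons",
    "Footwear",
    "Acme",
)
print(short_title)
print(long_title)
```

Generated titles still deserve a manual review, since a template cannot judge whether a truncated title reads well.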

The main issue with automation is that you risk losing control over some tasks where you should be vigilant and give more than a simple input.

Keyword research, for example, can be easily automated but my recommendation for this specific case is to use scripts to aid you in your work, and not replace it completely.

For instance, it’s quite hard and unwise to use volume as a metric in some industries because it doesn’t tell you anything about the intent behind a query. It’s possible to automate even non-SEO tasks, as long as you find it’s worth your time.

Pros:

- Save time and resources for tedious tasks

- It increases your productivity since you work less on some tasks

- Not so hard to pull off

Cons:

- Some automation solutions can take entire weeks

- Automating some processes can cause more harm than good

General data analysis

It’s hard to define what data analysis really is, but essentially, the word “analysis” refers to breaking something down into its basic components. Now, let’s see how we can apply this knowledge to SEO.

The most common data sources are Google Search Console, Google Analytics, scraping data, and third-party metrics. A good data analysis workflow is able to give you enough insight on what to do next or to investigate what’s happened or what’s going on right now.

The importance of data analysis stems from the need to understand how to work in complex situations. Should I optimize a certain topic? Is this product bringing me any good leads? You can only know this with analysis.

In fact, analysis involves a certain degree of abstraction and domain knowledge.

The most important takeaway is that analytics is not meant to answer questions. If you analyze something, you want to improve the quality of your questions. That is, unless you already have the answer or the scenario you’re dealing with is quite transparent.

For example, you can’t apply B2B criteria to a B2C industry, because you need different metrics and you have other goals as well. Measuring traffic or impressions for a B2B brand is useful for content, but your interest is in getting leads and increasing your profits.

A publisher is generally very interested in how much organic traffic it gets to sell more subscriptions. Using mere traffic increase for a B2B SaaS is misleading because it is a vanity metric. These values hold no real significance for a business and shouldn’t be what you present.

Nonetheless, data analysis is one of the most underrated practices in marketing, as it’s often misunderstood and mistaken for producing data dumps or using simple Excel sorting options.

Pros:

- It’s still niche in the SEO community, so you can use it to uncover a lot of opportunities

- You can get unique insights that the majority of SEO tools fail to provide

Cons:

- You need to know your industry; coding isn’t enough

- Bad data quality can harm your results

Graph theory

Graphs offer a level of flexibility that other data structures can’t offer for some use cases. For instance, they can represent the internal linking structure of a website and how URLs interact with each other.

Graph theory is extensively used by modern search engines to evaluate backlink networks and for their (in)famous knowledge graph. And, did you know that your sentences are converted into a graph for evaluation? Google really is a smart search engine, even though updates and some of its behaviors are often “quirky”.

A graph is made up of nodes, edges, and labels/attributes. Let’s apply this concept to SEO:

- Nodes are the URLs.

- Edges act as connections between nodes, so they are links.

- A label can be the actual URL string or any other information you want to append to a node.

SEOs can use some basic knowledge to audit websites and spot abnormal scenarios.
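The node and edge model above maps directly onto plain Python data structures. In this sketch, the URLs and links are hypothetical crawl results, and pages with no inlinks are flagged as one example of an abnormal scenario.

```python
from collections import defaultdict

# Hypothetical internal links as (source URL, target URL) pairs.
links = [
    ("/", "/blog/"),
    ("/", "/products/"),
    ("/blog/", "/blog/seo-tips/"),
    ("/blog/seo-tips/", "/products/"),
    ("/products/", "/"),
]
# All known pages (nodes), e.g. taken from the sitemap.
pages = {"/", "/blog/", "/products/", "/blog/seo-tips/", "/old-landing-page/"}

outlinks = defaultdict(set)  # node -> nodes it links to
inlinks = defaultdict(set)   # node -> nodes linking to it
for source, target in links:
    outlinks[source].add(target)
    inlinks[target].add(source)

# Pages that no other page links to.
orphans = sorted(page for page in pages if not inlinks.get(page))
print(orphans)
```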

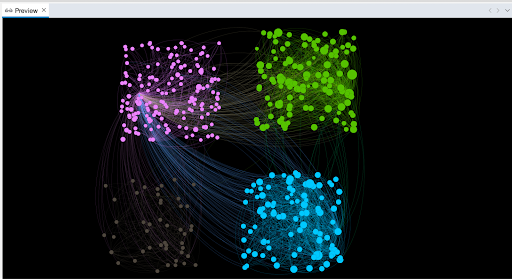

My favorite tool for visualizing graphs is Gephi, open-source software that can handle pretty much everything you need. In my experience, Python is not well suited to visualizing this type of data, and my usual workflow takes that into account.

First, I scrape and collect the data with Python, then I save the generated graph in a format that Gephi can read. From this point, I move to Gephi and start working on my graph. You can run several statistics that you will later use to filter or highlight your data. Based on that, you can visualize interesting patterns or see whether some URLs are as important as you expect within the structure of your website.
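One simple way to hand a graph over to Gephi is an edge-list CSV with `Source` and `Target` columns, which Gephi can import as a spreadsheet. This is a stand-in for whichever graph format you prefer. The links are hypothetical and the CSV is written to an in-memory buffer to keep the sketch self-contained.

```python
import csv
import io


def write_edge_list(links, handle):
    """Write (source, target) pairs as a CSV edge list with a header row."""
    writer = csv.writer(handle)
    writer.writerow(["Source", "Target"])
    writer.writerows(links)


# Hypothetical internal links collected by a crawler.
links = [("/", "/blog/"), ("/blog/", "/blog/seo-tips/")]

buffer = io.StringIO()
write_edge_list(links, buffer)
csv_text = buffer.getvalue()
print(csv_text)
```

To write an actual file, open it with `open(path, "w", newline="")` and pass the handle to the same function.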

The example above shows you four clusters within a website. The size of each node (URL) is determined by the hub metric, meaning that pages with high values can be referred to as “hubs” in SEO jargon.

What’s strange here is that the green group is showing many circles with similar sizes, meaning that linking is equidistributed. And this is not the best outcome for what I’ve planned because there should be two visible hubs in that cluster.

The gray group is about a secondary topic so it’s fine to leave it be.
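If the hub metric above is the hub score of the HITS algorithm (an assumption on my part, since Gephi offers HITS among its statistics), its logic can be sketched in a few lines. The graph is hypothetical and the implementation is a bare-bones power iteration, not Gephi's own code.

```python
import math


def hits(links, iterations=50):
    """Compute HITS hub and authority scores for a list of (source, target) links."""
    nodes = {node for edge in links for node in edge}
    hubs = dict.fromkeys(nodes, 1.0)
    auths = dict.fromkeys(nodes, 1.0)
    for _ in range(iterations):
        # Authority: sum of the hub scores of the pages linking in.
        auths = dict.fromkeys(nodes, 0.0)
        for source, target in links:
            auths[target] += hubs[source]
        norm = math.sqrt(sum(v * v for v in auths.values())) or 1.0
        auths = {n: v / norm for n, v in auths.items()}
        # Hub: sum of the authority scores of the pages linked to.
        hubs = dict.fromkeys(nodes, 0.0)
        for source, target in links:
            hubs[source] += auths[target]
        norm = math.sqrt(sum(v * v for v in hubs.values())) or 1.0
        hubs = {n: v / norm for n, v in hubs.items()}
    return hubs, auths


# Hypothetical cluster: one guide page linking out to three articles.
links = [
    ("/guide/", "/a/"),
    ("/guide/", "/b/"),
    ("/guide/", "/c/"),
    ("/a/", "/guide/"),
]
hubs, auths = hits(links)
top_hub = max(hubs, key=hubs.get)
print(top_hub)
```

A page scores high as a hub when it links to pages that are themselves well linked, which is why evenly distributed linking produces nodes of similar size.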

If you know how to tell a story with this data and how to present it, no SEO will stand in your way. Effective data communication is the best skill you can wish for.

Pros:

- The best for presenting data and audits

- I don’t know a better alternative for checking links

Cons:

- Doing it wrong is easier than you think

- You need to be decently proficient with graphs

Knowledge graphs and SPARQL

As already stated when talking about semantic SEO, knowledge graphs play a key role in modern search engines. Basically, a knowledge graph is a network that connects entities (nodes), and the connections themselves are called edges. Every page on your website can be considered a node, connected to other pages via links, your edges.

But this also applies to the words in your text, as syntax is a form of order by definition. This means that how you write your sentences has a certain effect on search engines. Knowledge graphs allow you to understand and visualize your “latent” structure. It’s quite easy to understand the internal linking of a subset of pages if you can see it.

Normally, this data structure is represented in the form of RDF, a standard format based on triples. Simply put, the structure follows the model subject-predicate-object.

Paul (SBJ) eats (PRD) an apple (OBJ).

In the sentence above, you have two entities connected by the relationship specified by the verb. Knowledge graphs combined with syntax tags allow you to identify these patterns.
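The subject-predicate-object model can be mimicked with plain tuples. The sketch below is not an RDF library: the triples and the `match` helper are invented to show how pattern matching over triples works.

```python
# Hypothetical triples in (subject, predicate, object) form.
triples = [
    ("Paul", "eats", "apple"),
    ("Paul", "lives_in", "Rome"),
    ("Rome", "capital_of", "Italy"),
]


def match(triples, subject=None, predicate=None, obj=None):
    """Return the triples matching a pattern; None acts as a wildcard."""
    return [
        (s, p, o)
        for s, p, o in triples
        if subject in (None, s) and predicate in (None, p) and obj in (None, o)
    ]


about_paul = match(triples, subject="Paul")
who_eats = match(triples, predicate="eats")
print(about_paul)
print(who_eats)
```

Query languages for RDF work on the same principle, with variables in place of the `None` wildcards.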

The practical use of this is quite limited, and I prefer more hands-on tasks like assessing internal linking validity or visualization.

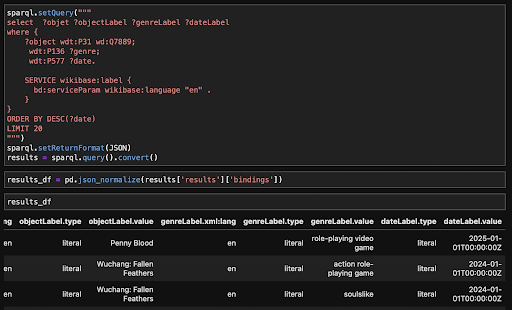

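Speaking of which, many data sources used by Google to feed its knowledge graph use RDF data. The query language to retrieve this data is called SPARQL. Its syntax resembles SQL, but it is a separate W3C standard designed for querying triples rather than tables. It’s possible to get data from Wikidata, including links to external databases such as IMDb, along with their semantic relationships.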
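To give an idea of what a SPARQL query looks like, the sketch below builds a request URL for the public Wikidata endpoint without sending it. The query asks for ten items that are an instance of (`P31`) house cat (`Q146`), a common introductory example. The helper name `build_request_url` is an assumption for illustration.

```python
from urllib.parse import urlencode

WIKIDATA_ENDPOINT = "https://query.wikidata.org/sparql"

# Triple pattern: ?item  instance-of (P31)  house cat (Q146).
QUERY = """
SELECT ?item ?itemLabel WHERE {
  ?item wdt:P31 wd:Q146 .
  SERVICE wikibase:label { bd:serviceParam wikibase:language "en" . }
}
LIMIT 10
"""


def build_request_url(query: str) -> str:
    """Build a GET URL for the endpoint, asking for JSON results."""
    return WIKIDATA_ENDPOINT + "?" + urlencode({"query": query.strip(), "format": "json"})


url = build_request_url(QUERY)
print(url)
```

The URL can then be fetched with any HTTP client. Wikidata asks clients to send a descriptive User-Agent header, so a real script should set one.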

Do you see the difference with scraping? RDF data have this semantic information that can be used to connect concepts.

This is extremely beneficial for programmatic SEO or even online databases. Traditional scraping lacks the ability to give sense to your text and this additional semantic information can change the way you build your pages.

I am currently studying it so I don’t have a lot of practical experience in writing complex queries yet. Nonetheless, the potential is huge and I can see that this is a must for anyone working with a large bulk of data.

Pros:

- This is where the industry is headed

- Scalable structures for large websites

Cons:

- This is not always what you want; keep it simple

- There are very few use cases