Oncrawl recently released a Splunk integration to facilitate log monitoring for Splunk users. We’ve found that companies use our Splunk integration for two main purposes: process automation and tighter security control. But the advantages of the tool don’t stop there. Here are five ways you can use the Oncrawl Splunk integration to improve your technical SEO.

SEO log analysis: the basics

What is SEO log analysis?

Your log files contain a record of every request made to your website, as written by the website’s server itself. They are the most complete and most reliable source of information about what happens on your website. This includes the number and frequency of bot hits, the number and frequency of organic visits coming from SERPs, a breakdown by device type (desktop vs. mobile) or by URL type (page vs. resource), precise page sizes, and actual HTTP status codes.
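To make the kinds of information listed above more concrete, here is a minimal Python sketch that classifies a single log line. It assumes the server writes the Apache/Nginx "combined" log format, and it relies on simple heuristics chosen for illustration: a user agent containing "Googlebot" counts as a bot hit, a Google referer counts as an organic visit, a "Mobile" token means a mobile device, and a short list of file extensions marks resources. The names `classify` and `Hit` are hypothetical. A real pipeline would also verify bot IP addresses, because a user agent can be spoofed.

```python
import re
from typing import NamedTuple, Optional

# Assumption: logs use the Apache/Nginx "combined" format.
COMBINED = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) (?P<size>\d+|-) '
    r'"(?P<referer>[^"]*)" "(?P<agent>[^"]*)"'
)

# Illustrative, non-exhaustive list of resource file extensions.
RESOURCE_EXTENSIONS = (".css", ".js", ".png", ".jpg", ".jpeg", ".gif", ".svg", ".woff2")


class Hit(NamedTuple):
    path: str
    status: int
    size: int          # response size in bytes ("-" is stored as 0)
    kind: str          # "bot", "organic" or "other"
    device: str        # "mobile" or "desktop"
    is_resource: bool  # True for CSS, JS, images, fonts...


def classify(line: str) -> Optional[Hit]:
    """Parse one combined-format log line; return None if it does not match."""
    m = COMBINED.match(line)
    if m is None:
        return None
    agent, referer, path = m["agent"], m["referer"], m["path"]
    # Heuristic: self-declared Googlebot user agent = bot hit (unverified).
    if "Googlebot" in agent:
        kind = "bot"
    # Heuristic: a Google referer = organic visit from a SERP.
    elif "google." in referer:
        kind = "organic"
    else:
        kind = "other"
    device = "mobile" if "Mobile" in agent else "desktop"
    size = 0 if m["size"] == "-" else int(m["size"])
    # Ignore the query string when checking the file extension.
    is_resource = path.lower().split("?")[0].endswith(RESOURCE_EXTENSIONS)
    return Hit(path, int(m["status"]), size, kind, device, is_resource)
```

Counting the returned `Hit` objects by `kind`, `device`, `is_resource` or `status` gives the breakdowns described above.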

Some of the many advantages provided by SEO log analysis:

- Spotting peaks or changes in crawl behavior that indicate modifications in how your site is handled by Google

- Knowing how long, on average, it takes for new pages to get indexed and to receive the first organic visitors

- Monitoring how bot and user activity affect a page’s rankings

- Understanding how bot and user behavior correlate with other SEO factors
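Spotting a peak or a drop in crawl behavior comes down to comparing each day's bot activity with recent history. The following Python sketch flags days whose Googlebot hit count departs sharply from the trailing mean. The 7-day window and the 3-standard-deviation threshold are arbitrary assumptions for illustration, and `crawl_spikes` is a hypothetical helper, not how any particular tool detects anomalies.

```python
from statistics import mean, pstdev


def crawl_spikes(daily_hits: list[int], window: int = 7, threshold: float = 3.0) -> list[int]:
    """Return the indices of days whose bot-hit count lies more than
    `threshold` standard deviations from the mean of the preceding
    `window` days."""
    flagged = []
    for i in range(window, len(daily_hits)):
        history = daily_hits[i - window:i]
        mu = mean(history)
        # Floor the deviation at 1 hit so a perfectly flat history
        # does not turn every tiny fluctuation into an alert.
        sigma = max(pstdev(history), 1.0)
        if abs(daily_hits[i] - mu) > threshold * sigma:
            flagged.append(i)
    return flagged
```

Because the check uses an absolute difference, it flags sudden drops in crawling as well as spikes; both can signal a change in how Google handles the site.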

What is Splunk?

Splunk is an enterprise solution for machine data aggregation. Capable of indexing and managing data from multiple sources at scale, it includes features for processing server logs for security and reporting purposes.

Some of the advantages of Splunk:

- Indexing and searching for improved data correlation

- Drill-down and pivot capabilities for better reporting

- Real-time alerts

- Data dashboards

- Highly scalable

- Flexible deployment options

Log monitoring in Splunk

Splunk users can take advantage of the Oncrawl integration to connect server log data managed in Splunk with SEO data in the Oncrawl platform.

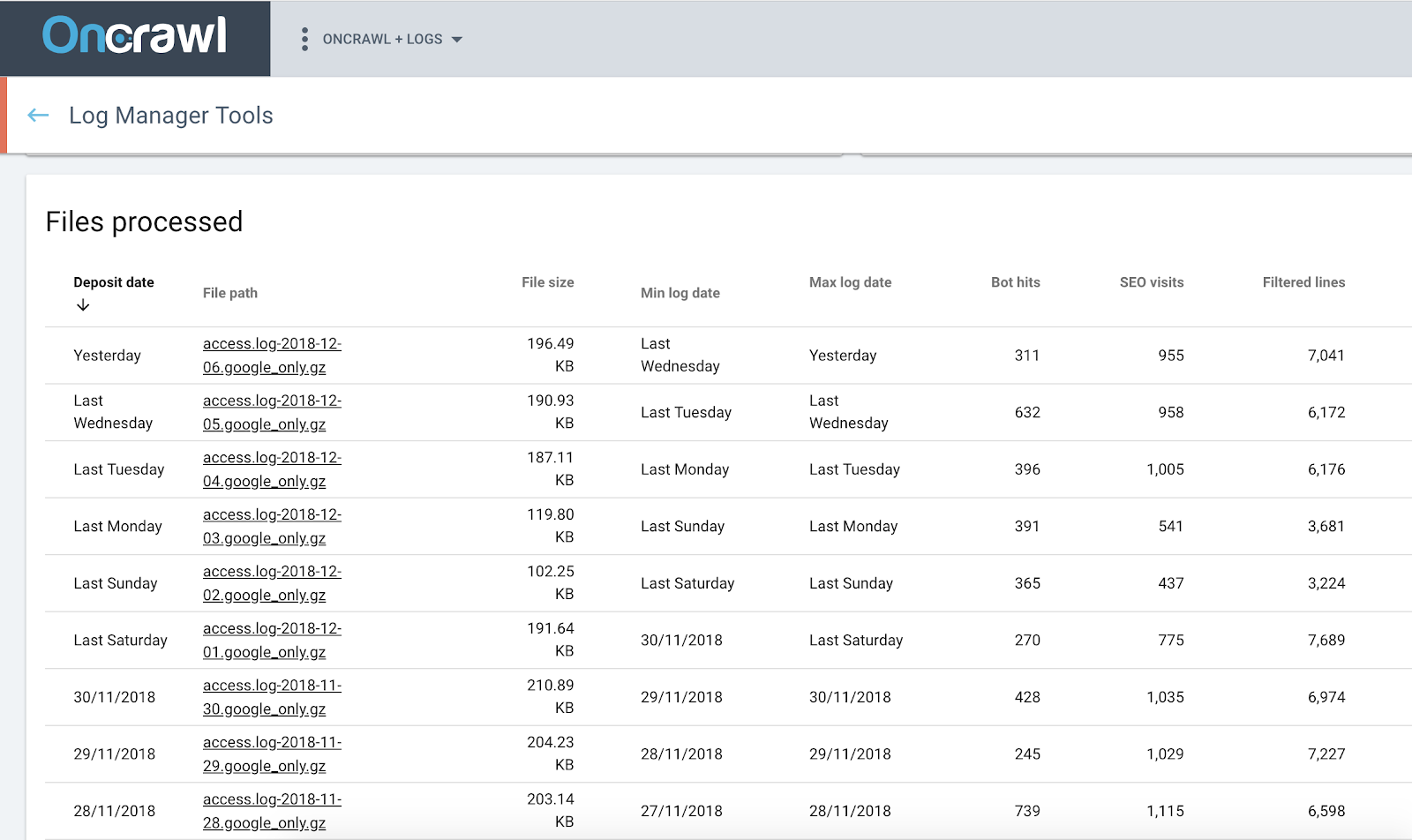

Oncrawl Log Analyzer

Improving your technical SEO with Oncrawl + Splunk

1. Use logs for in-depth SEO analysis

Splunk provides the ability to aggregate, search, monitor, and set up alerts for log data. It parses and reindexes the content of server logs. Using powerful search and filters, it answers questions about the data in your log files, and this processing step produces statistics on the trends revealed in your log data.

When you want to apply this to SEO data, however, it’s best to start with the raw data. And that’s exactly what the Oncrawl Splunk integration does.

Instead of just showing standalone statistics for log data, the integration lets you combine information from your logs with all of the other data sources in the Oncrawl platform. In turn, you can examine relationships between SEO metrics and the user and bot behavior recorded in your log files.

Number of organic visits by page click depth.
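A breakdown of organic visits by page click depth is one example of this kind of cross-data analysis: it joins per-URL visit counts from the logs with the click depth measured by a site crawl. Here is a minimal Python sketch of that join. It assumes both sources already use identically normalized URLs, and `organic_visits_by_depth` is a hypothetical name used only for illustration.

```python
from collections import Counter, defaultdict
from typing import Optional


def organic_visits_by_depth(organic_hits: list[str],
                            depth_by_url: dict[str, int]) -> dict[Optional[int], int]:
    """Sum organic visits per click depth.

    organic_hits: one URL per organic visit found in the logs.
    depth_by_url: click depth of each URL found by a site crawl.
    URLs that the crawl never reached are grouped under the key None.
    """
    totals: dict[Optional[int], int] = defaultdict(int)
    for url, visits in Counter(organic_hits).items():
        totals[depth_by_url.get(url)] += visits
    return dict(totals)
```

A large `None` bucket is worth a closer look: those are pages receiving organic traffic that the crawl could not reach through internal links.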

This cross-data analysis can cover several dimensions useful for SEO:

- Crawl behavior breakdown by individual bots

- Time between the first crawl and the first organic visit

- Comparison between pages served to users and bots, and pages served during an audit crawl

- Discovery of orphan pages

- Correlations between crawl frequency and rankings, impressions, and CTR

- Influence of the internal linking strategy on user/bot activity

- Relationship between page click depth and user/bot activity

- Relationship between internal page popularity and user/bot activity

- Breakdown of user and bot activity across pages grouped by SEO performance
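Two of the dimensions listed above are straightforward to sketch once bot hits and organic visits have been extracted from the logs as (URL, timestamp) pairs: orphan pages (URLs seen in the logs but not found by following internal links during a crawl) and the delay between a page's first crawl and its first organic visit. The Python below is an illustrative sketch, not Oncrawl's implementation; the input shapes and function names are assumptions.

```python
from datetime import datetime, timedelta
from typing import Iterable, Tuple


def orphan_pages(urls_in_logs: Iterable[str], urls_in_crawl: Iterable[str]) -> set[str]:
    """URLs requested by bots or users that the crawl never reached."""
    return set(urls_in_logs) - set(urls_in_crawl)


def _earliest(hits: Iterable[Tuple[str, datetime]]) -> dict[str, datetime]:
    """Map each URL to the earliest timestamp at which it appears."""
    first: dict[str, datetime] = {}
    for url, ts in hits:
        if url not in first or ts < first[url]:
            first[url] = ts
    return first


def time_to_first_visit(bot_hits: Iterable[Tuple[str, datetime]],
                        organic_hits: Iterable[Tuple[str, datetime]]) -> dict[str, timedelta]:
    """For each URL with at least one bot hit and one organic visit,
    return the delay between the first bot hit and the first organic visit."""
    first_crawl = _earliest(bot_hits)
    first_visit = _earliest(organic_hits)
    return {url: first_visit[url] - first_crawl[url]
            for url in first_crawl.keys() & first_visit.keys()}
```

A negative delay usually means the log history does not go back far enough to include the page's first crawl.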

2. Make setup easier

Whether or not you need automation or finer control over data security, if you’re a Splunk user, you’ll like how simple the integration is to set up.

If you’re not a system administrator, setting up log monitoring for SEO can seem like a complicated task.

Our suggestion is to simply skip the hard parts. You can now set everything up directly in Splunk and use the key you generate to create the connection with Oncrawl.

That’s it. You’re ready to go. It couldn’t be easier.

3. Take advantage of process automation with Splunk

Manually transferring log data collected in Splunk to Oncrawl requires multiple steps:

- Create filters to search for the correct selection of log data

- Create a saved search

- Set up automation for running the search

- Output to CSV

- Connect to your Splunk instance via SSH

- Navigate to the CSV output folder

- Transfer the file to your computer

- Connect to the Oncrawl FTP space

- Transfer the file to Oncrawl…

This process must be repeated regularly to avoid gaps in your log data. Often this becomes a daily task.

If you choose to use the Splunk integration for Oncrawl, you no longer need to launch the task regularly. You only need to set up the process once (and, as mentioned earlier, it couldn’t be easier). You no longer need to worry about launching a script (or, worse, a series of manual actions) every day; the integration handles that for you.

4. Protect your process

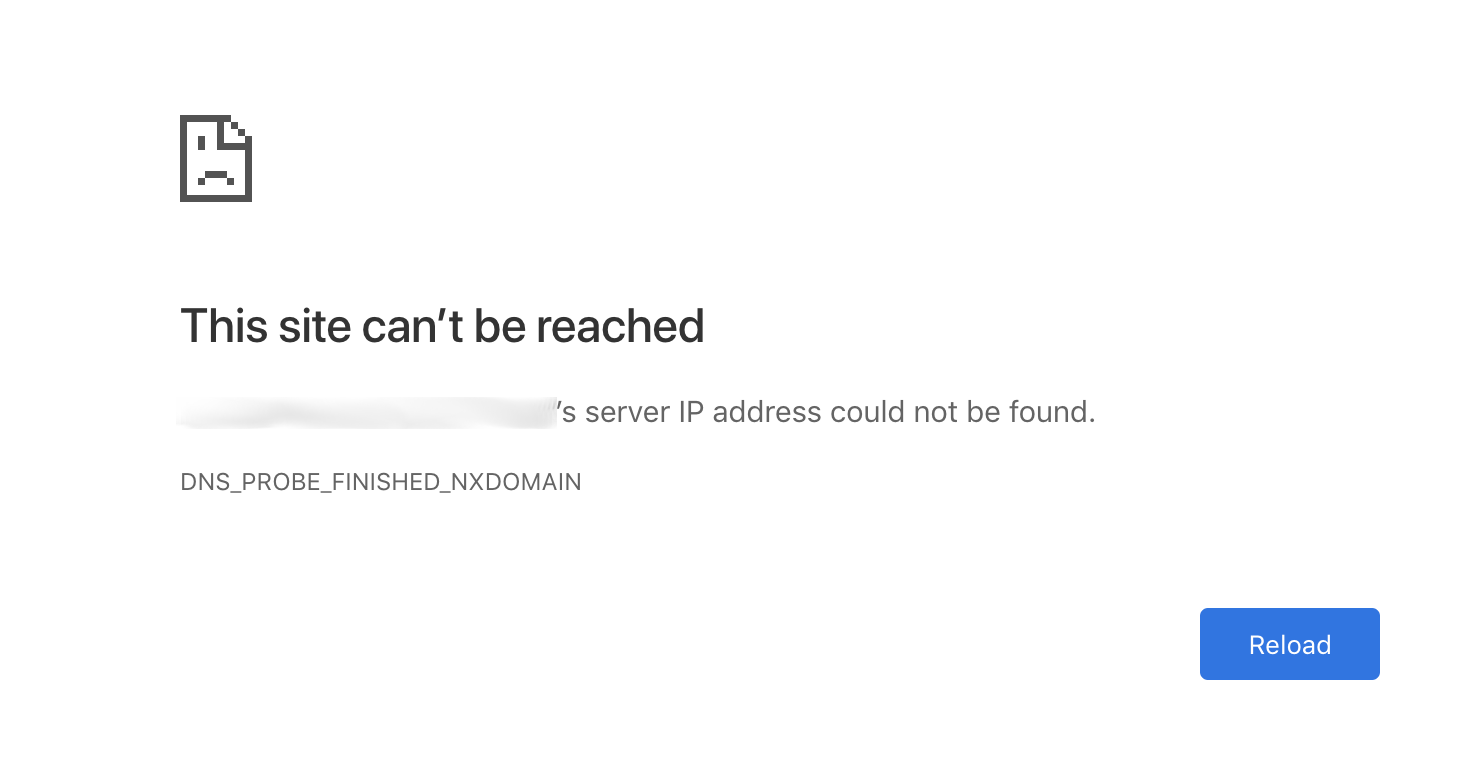

Protect yourself from losing data when there’s a problem. Because log monitoring relies on a continuous stream of data, gaps can lead to incorrect conclusions. You should never have to ask questions like: is the apparent absence of organic visits this morning due to something that happened on Google, or am I just missing the data?

The Oncrawl Splunk integration protects you in case your server is down or the connection is lost, and prevents human errors when you don’t have time or simply forget to upload data. If we can’t connect to the server, it won’t cause gaps in your data; we’ll just collect it a little later. If you find a set of data from an earlier date that you forgot to add to Splunk, the Oncrawl integration will also automatically pick it up.
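Avoiding gaps comes down to knowing which days are still missing and retrying them on the next run instead of skipping them. The Python sketch below illustrates that catch-up idea. It is a hypothetical example, not a description of how the Oncrawl integration works internally, and `fetch_day` stands in for whatever actually retrieves one day of logs and reports success.

```python
from datetime import date, timedelta
from typing import Callable, Iterable


def missing_days(collected: Iterable[date], start: date, end: date) -> list[date]:
    """Days between start and end (inclusive) with no collected log data."""
    have = set(collected)
    days = (start + timedelta(days=i) for i in range((end - start).days + 1))
    return [d for d in days if d not in have]


def catch_up(collected: set[date], start: date, end: date,
             fetch_day: Callable[[date], bool]) -> set[date]:
    """Try to fetch every missing day. A failed fetch (server down,
    connection lost) leaves the day missing, so the next scheduled
    run simply retries it instead of leaving a permanent gap."""
    for day in missing_days(collected, start, end):
        if fetch_day(day):  # hypothetical fetcher; True means success
            collected.add(day)
    return collected
```

This sketch tracks whole days only; picking up late additions to a day that was already collected would need extra bookkeeping, such as comparing record counts per day.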

5. Take control of data security

At Oncrawl, we take the security of your data extremely seriously.

As always, sensitive data in your logs is kept where you put it, in your private, secure FTP space, and is never made available elsewhere. For example, the only personal data we process are IP addresses, which are used to validate the authenticity of Googlebot visits. We keep no record of the IP addresses themselves, only the result of the validation. If necessary, you can remove sensitive information made available for analysis at any time by deleting files from your FTP space.
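Validating a Googlebot visit by IP address is commonly done with the reverse-then-forward DNS check that Google documents for its crawlers: look up the hostname for the IP, check that it belongs to googlebot.com or google.com, then resolve that hostname and confirm it maps back to the same IP. Here is a minimal Python sketch of that check; it is not necessarily Oncrawl's exact method. The resolvers are parameters so they can be replaced in tests, the default forward lookup handles IPv4 only, and the function returns nothing but a boolean, so a caller can keep the validation result without storing the IP.

```python
import socket
from typing import Callable

# Hostname suffixes accepted for Googlebot in this sketch.
GOOGLE_SUFFIXES = (".googlebot.com", ".google.com")


def is_verified_googlebot(
    ip: str,
    reverse: Callable[[str], str] = lambda addr: socket.gethostbyaddr(addr)[0],
    forward: Callable[[str], list[str]] = lambda host: socket.gethostbyname_ex(host)[2],
) -> bool:
    """Reverse-resolve the IP, check the domain, then forward-resolve the
    hostname and confirm it points back to the same IP (IPv4 by default)."""
    try:
        host = reverse(ip).rstrip(".").lower()
        if not host.endswith(GOOGLE_SUFFIXES):
            return False  # e.g. a spoofed "Googlebot" user agent
        return ip in forward(host)
    except OSError:  # covers socket.herror, socket.gaierror and timeouts
        return False
```

The forward lookup matters: anyone controlling a reverse DNS zone can make an IP claim to be `crawl.googlebot.com`, but only Google controls what that hostname resolves to.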

The integration for Splunk goes even further. We make sure you maintain control of your data throughout the process. You define the access rights, the data to be shared, and the frequency of the updates in Oncrawl. When you share data with Oncrawl through the Splunk integration, we use standard, secure protocols to communicate with Splunk, protected by a password and a key that you set up.

Because the setup is done in Splunk, Oncrawl never sees anything you don’t allow us to see. You choose what information you share with Oncrawl. Not only that, but since you manage the setup, if there are changes in your logging process or in your company’s standards, you can make changes at any time.