Log files are a precise reflection of the life of your website. Whether by users or by bots, on pages or on resources, all of the activity on your website is stored in your logs.

Containing information such as IP addresses, status codes, user-agents, referers and other technical data, every line in logs (server-based data) can help you to supplement your website analysis, which is usually mostly based on analytics data (user-oriented data).

The data that you will find in your logs can, on their own, help you focus your SEO strategy.

1. Health status of your website

Among the information that you can get through your logs, the status code, response size and response time are excellent indicators of your website’s health.

In fact it is common to lose traffic or conversions without really understanding why. That is because the explanation can sometimes be technical.

Several of the leads worth considering relate directly to the three fields we just mentioned.

Example 1: an increase in the number of server errors (“5xx”) could indicate technical issues which can go undetected if you simply navigate from page to page.
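As a rough illustration of Example 1, the sketch below computes the daily share of hits answered with a 5xx status. It assumes your server writes the Apache/Nginx "combined" log format; the pattern, the function name `server_error_rate_by_day` and the sample lines are illustrative, so adapt them to your own log layout.

```python
import re
from collections import Counter

# Assumption: Apache/Nginx "combined" log format. Adjust the pattern otherwise.
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<url>\S+) [^"]*" '
    r'(?P<status>\d{3}) (?P<size>\d+|-)'
)

def server_error_rate_by_day(lines):
    """Return {day: share of hits answered with a 5xx status}."""
    totals = Counter()
    errors = Counter()
    for line in lines:
        match = LOG_PATTERN.match(line)
        if match is None:
            continue  # skip lines that do not fit the assumed format
        day = match["time"].split(":", 1)[0]  # keep only the date part
        totals[day] += 1
        if match["status"].startswith("5"):
            errors[day] += 1
    return {day: errors[day] / totals[day] for day in totals}

# Made-up sample lines, for illustration only.
sample = [
    '203.0.113.7 - - [10/Oct/2023:13:55:36 +0000] "GET / HTTP/1.1" 200 5120 "-" "Mozilla/5.0"',
    '203.0.113.7 - - [10/Oct/2023:13:56:01 +0000] "GET /cart HTTP/1.1" 503 312 "-" "Mozilla/5.0"',
    '203.0.113.8 - - [11/Oct/2023:09:12:44 +0000] "GET /blog/ HTTP/1.1" 200 20480 "-" "Mozilla/5.0"',
]
print(server_error_rate_by_day(sample))
```

A sudden jump in this ratio from one day to the next is the kind of signal that page-by-page browsing tends to miss.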

Example 2: several studies have shown the impact of load time on the conversion rate for e-commerce websites. A drop in your sales revenue could correlate with an increase in the load time of your pages.

Additionally, Google offers a calculator that allows you to simulate the relationship between load time and revenues (to be taken with a grain of salt).

Example 3: it can sometimes happen that your server, following various technical issues, returns empty pages. In this case, simple monitoring of your status codes will not be enough to alert you. That’s why it may be helpful to add response size to the data you monitor: these empty (or blank) pages are typically lighter than usual.
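One possible way to spot such blank pages is to compare each successful response with the median size usually served for the same URL. The sketch below assumes you have already extracted `(url, status, size)` tuples from your logs; the function name and the 10% threshold are arbitrary choices for illustration, not a recommended value.

```python
from collections import defaultdict
from statistics import median

def suspiciously_small(hits, ratio=0.1):
    """Flag 200 responses far lighter than the median size for their URL.

    hits: list of (url, status, size_in_bytes) tuples.
    ratio: hypothetical threshold, as a fraction of the median size.
    """
    sizes = defaultdict(list)
    for url, status, size in hits:
        if status == 200:
            sizes[url].append(size)

    flagged = []
    for url, status, size in hits:
        if status == 200 and size < ratio * median(sizes[url]):
            flagged.append((url, size))
    return flagged

# Made-up data: the third hit returns a nearly empty page with a 200 status.
hits = [
    ("/product/1", 200, 48000),
    ("/product/1", 200, 47500),
    ("/product/1", 200, 512),
    ("/about", 200, 9000),
]
print(suspiciously_small(hits))
```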

By segmenting your website based on the various types of URLs/pages, you will be able to isolate the sources of the technical issues more easily, which will make solving the problem simpler.

2. Bot hit frequency

For members of the SEO community, logs represent a wealth of useful information on how bots from search engines “consume” their websites.

For example, they let us know when bots visited a page for the first or last time.

Let’s take the example of a news website, which logically needs search engines to quickly find and index its fresh content. Analyzing the log fields that indicate the date and time makes it possible to measure the average time between the publication of an article and its discovery by search engines.
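The measurement described above can be sketched as follows. This assumes you can export publication times from your CMS (the `published` dictionary is hypothetical) and that bot hits carry timestamps in the usual access-log form, such as `10/Oct/2023:08:30:00 +0000`. Note that `%b` parsing depends on an English locale.

```python
from datetime import datetime, timedelta, timezone

# Assumption: timestamps look like the ones in Apache/Nginx access logs.
LOG_TIME_FORMAT = "%d/%b/%Y:%H:%M:%S %z"

def average_discovery_delay(published, bot_hits):
    """Average time between publication and the first bot hit on each URL.

    published: {url: timezone-aware publication datetime}
    bot_hits: iterable of (url, raw_log_timestamp) for search engine bots
    """
    first_seen = {}
    for url, raw_time in bot_hits:
        seen = datetime.strptime(raw_time, LOG_TIME_FORMAT)
        if url in published and seen >= published[url]:
            if url not in first_seen or seen < first_seen[url]:
                first_seen[url] = seen
    delays = [first_seen[url] - published[url] for url in first_seen]
    if not delays:
        return None  # no published article has been crawled yet
    return sum(delays, timedelta()) / len(delays)

# Made-up publication times and bot hits.
published = {
    "/news/a": datetime(2023, 10, 10, 8, 0, tzinfo=timezone.utc),
    "/news/b": datetime(2023, 10, 10, 9, 0, tzinfo=timezone.utc),
}
bot_hits = [
    ("/news/a", "10/Oct/2023:08:30:00 +0000"),
    ("/news/a", "10/Oct/2023:10:00:00 +0000"),
    ("/news/b", "10/Oct/2023:10:30:00 +0000"),
]
print(average_discovery_delay(published, bot_hits))
```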

From there, it would be interesting to analyze the number of daily bot hits (or crawl frequency) on the website’s home page, the category pages, and so on. This will make it easy to determine where to place links to fresh articles that need to be discovered.
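A minimal sketch of this crawl-frequency count is shown below. It assumes the "combined" log format and identifies bots by a simple substring match on the user-agent (`bot_token`), which is a simplification since user-agents can be spoofed.

```python
import re
from collections import Counter

# Assumption: "combined" log format, with referer and user-agent at the end.
LOG_PATTERN = re.compile(
    r'\S+ \S+ \S+ \[(?P<day>[^:]+):[^\]]+\] '
    r'"\S+ (?P<url>\S+) [^"]*" \d{3} \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)

def bot_hits_per_day(lines, bot_token="Googlebot"):
    """Count hits per (day, url) for user-agents containing bot_token."""
    hits = Counter()
    for line in lines:
        match = LOG_PATTERN.match(line)
        if match and bot_token in match["agent"]:
            hits[(match["day"], match["url"])] += 1
    return hits

GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
# Made-up sample lines, for illustration only.
sample = [
    f'66.249.66.1 - - [10/Oct/2023:06:01:12 +0000] "GET / HTTP/1.1" 200 5120 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [10/Oct/2023:18:40:03 +0000] "GET / HTTP/1.1" 200 5120 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [10/Oct/2023:07:15:44 +0000] "GET /category/shoes HTTP/1.1" 200 8192 "-" "{GOOGLEBOT_UA}"',
    '203.0.113.7 - - [10/Oct/2023:09:00:00 +0000] "GET / HTTP/1.1" 200 5120 "-" "Mozilla/5.0"',
]
for (day, url), count in sorted(bot_hits_per_day(sample).items()):
    print(day, url, count)
```

Pages with the highest daily counts are natural candidates for hosting links to fresh content.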

The same theory can apply to an e-commerce website in order to get new products in the catalogue discovered, such as those you want to spotlight to keep on top of emerging trends.

3. Crawl budget

The crawl budget (a sort of credit of crawl bandwidth that Google and its peers dedicate to a site) is a favorite subject of SEO experts, and its optimization has become a mandatory task.

Outside of logs, only Google Search Console (the old version for the moment) will give you a basic idea of the budget that search engines grant to your website. But the level of detail in Search Console won’t really help you know where to concentrate your efforts, especially since the reported data are aggregated across all the Googlebots.

Logs, however, thanks to an analysis of the user-agent and URL fields, can identify which pages (or resources) bots are visiting and at what rate.

This information will let you know if the Googlebots excessively browse parts of your website which are not important for SEO, wasting budget which could be useful for other pages.
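To make this concrete, here is a small sketch that measures how bot hits are distributed across the top-level sections of a site. Treating the first path segment as the "section" is an assumption made for illustration; your own segmentation may rely on other URL patterns.

```python
from collections import Counter
from urllib.parse import urlsplit

def crawl_share_by_section(hits, bot_token="Googlebot"):
    """Return {section: share of bot hits}, using the first path segment.

    hits: iterable of (user_agent, url) pairs extracted from the logs.
    """
    counts = Counter()
    for agent, url in hits:
        if bot_token not in agent:
            continue  # ignore users and other bots
        path = urlsplit(url).path
        first_segment = path.strip("/").split("/", 1)[0]
        counts["/" + first_segment] += 1
    total = sum(counts.values())
    return {section: n / total for section, n in counts.items()} if total else {}

# Made-up hits: the bot spends most of its visits on internal search pages.
hits = [
    ("Googlebot/2.1", "/search?q=red"),
    ("Googlebot/2.1", "/search?q=blue"),
    ("Googlebot/2.1", "/search?q=green"),
    ("Googlebot/2.1", "/products/42"),
    ("Mozilla/5.0", "/products/42"),
]
print(crawl_share_by_section(hits))
```

In this made-up sample, three quarters of the bot's hits go to internal search results, which is the sort of imbalance worth investigating.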

This type of analysis can be used to structure your internal linking strategy, your robots.txt file management, your use of meta tags targeting bots…

4. Mobile-First & migration

Some can’t wait for it, others are terrified of it, but the day will certainly come when you will receive an email from Google indicating that your website has been switched to the famous mobile-first index.

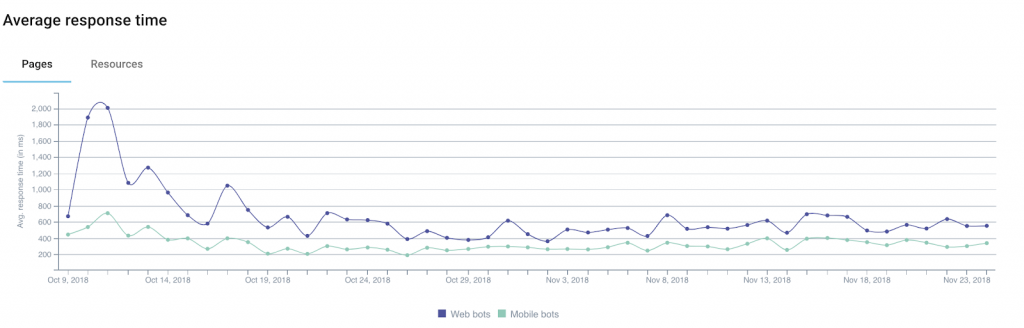

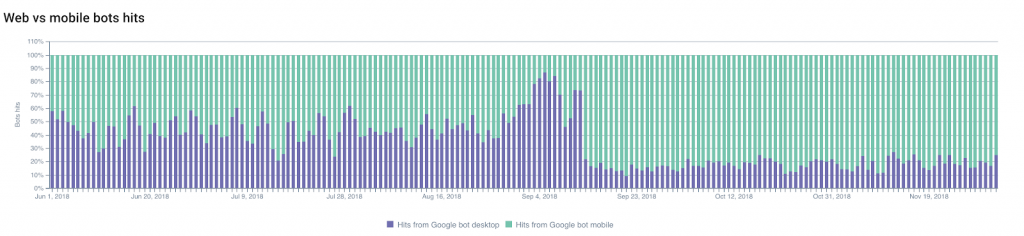

You can predict when the switch will happen by following changes in the ratio between Googlebot-desktop hits and Googlebot-mobile hits.

The portion of crawling by mobile Googlebots will generally increase, allowing you to predict and plan for the switch.
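The ratio can be tracked with a few lines of code. The sketch below relies on a heuristic that you should check against your own logs: a hit is counted as Googlebot smartphone when its user-agent contains both `Googlebot` and `Mobile`, and as desktop when it contains `Googlebot` only. Other Google crawlers whose user-agent includes `Googlebot` would be counted too.

```python
from collections import Counter

def mobile_share_by_day(hits):
    """Return {day: share of Googlebot hits made with a mobile user-agent}.

    hits: iterable of (day, user_agent) pairs extracted from the logs.
    Heuristic (assumption): mobile Googlebot user-agents contain "Mobile".
    """
    total = Counter()
    mobile = Counter()
    for day, agent in hits:
        if "Googlebot" not in agent:
            continue
        total[day] += 1
        if "Mobile" in agent:
            mobile[day] += 1
    return {day: mobile[day] / total[day] for day in total}

DESKTOP = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
MOBILE = ("Mozilla/5.0 (Linux; Android 6.0.1; Nexus 5X Build/MMB29P) "
          "AppleWebKit/537.36 (KHTML, like Gecko) Chrome/99.0.0.0 Mobile "
          "Safari/537.36 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)")

# Made-up hits on two days, one week apart.
hits = (
    [("2023-10-10", DESKTOP)] * 3 + [("2023-10-10", MOBILE)]
    + [("2023-10-17", DESKTOP)] + [("2023-10-17", MOBILE)] * 3
)
print(mobile_share_by_day(hits))
```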

On the other hand, not seeing changes in this ratio can also be a significant indication of your website’s conformity with Google’s criteria for switching indexes.

You can also monitor other changes: migrations (from HTTP to HTTPS, for example), or modifications to your website’s structure.

If we concentrate on the first example — on a modification of the protocol used — indexation of the secure URLs and redirects, as well as the progressive “decline” of the old URLs, can easily be monitored thanks to logs.

Monitoring changes in status codes will be your best ally!

5. Nosy neighbors

You are an excellent SEO and your efforts have been paying off!

This has aroused the curiosity of your competitors (and other nosy folk) who want to understand how you pulled it off and who have decided to crawl your entire website.

This is bad. But not unusual (quite the contrary).

Your job now is to spot the snoopers.

The most subtle of them will try to pass their bots off as Googlebots by using Google user-agents. And this is where the IP address stored in the logs can be very useful.

In fact, official Googlebots only make use of well-documented ranges of IP addresses. Google advises webmasters to carry out a reverse DNS lookup in order to verify a bot’s origin.

If this test fails, the lookup results (or the results of IP geolocation) can help you decide what to do.
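The reverse DNS check can be sketched with Python's standard `socket` module. The version below also performs a forward lookup to confirm that the hostname resolves back to the same IP. The accepted domain suffixes are an assumption to verify against Google's current documentation, and `gethostbyname_ex` only handles IPv4. The lookups are passed in as parameters so the example can run offline with stand-in resolvers.

```python
import socket

# Assumption: hostnames of genuine Googlebots end with one of these suffixes.
GOOGLE_SUFFIXES = (".googlebot.com", ".google.com")

def is_verified_googlebot(ip,
                          reverse_lookup=socket.gethostbyaddr,
                          forward_lookup=socket.gethostbyname_ex):
    """Reverse DNS lookup on the IP, then forward lookup to confirm it."""
    try:
        hostname = reverse_lookup(ip)[0]
    except OSError:
        return False  # no reverse DNS record for this IP
    if not hostname.endswith(GOOGLE_SUFFIXES):
        return False
    try:
        addresses = forward_lookup(hostname)[2]
    except OSError:
        return False
    return ip in addresses

# Stand-in resolvers with made-up answers, so the demo needs no network.
def fake_reverse(ip):
    return ("crawl-66-249-66-1.googlebot.com", [], [ip])

def fake_forward(hostname):
    return (hostname, [], ["66.249.66.1"])

print(is_verified_googlebot("66.249.66.1", fake_reverse, fake_forward))
print(is_verified_googlebot("198.51.100.9", fake_reverse, fake_forward))
```

In real use, call `is_verified_googlebot(ip)` with the default resolvers and cache the result per IP, since DNS lookups on every log line would be slow.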

Just for the record, the digital security experts at Imperva Incapsula led a study published in 2016 which shows that 28.9% of the analyzed bandwidth was consumed by “bad bots” (compared to 22.9% by “good bots” and 48.2% by users). Taking a look at your logs can help you avoid an excessive drain on your resources by detecting unwanted bots.