Log analysis is the only way to get 100% accurate data when analyzing your SEO performance. Logs help you dig into Google data more efficiently and show exactly what search engines are doing on your website: which pages are crawled, which ones are active and whether there are any errors.

François Goube recently gave a webinar with SEMRush on how to unlock the Google black box with log analysis. The presentation is in French, but here are the key points to remember.

No matter how large your site is, log file analysis can help you unlock SEO optimisation opportunities. It helps you audit your website and identify useful and useless pages, as well as the pages that Google sees and those it does not. Log file analysis also lets you monitor your pages, so you can create alerts or check whether you are receiving spam or attacks.

Why use log analysis?

Log file analysis highlights what Google is doing on your website and what it has done in the past. You can find out which pages are your most active and whether Google has encountered errors. The search engine is always trying to optimize its crawl resources, as it has a crawl budget to spend and respect. If it has decided to crawl 1000 pages per day, you need to get your most important pages crawled and indexed within this quota.

10 use cases to understand what Google is doing on your website.

1# Know what Google crawls

Log file analysis tells you how many unique pages you have and how frequently Google crawls them. It is worth checking whether the unique pages that Google crawls cover all of your current pages, rather than old URLs that no longer exist.

If you run an e-commerce website, it can help you check whether all your product pages are crawled by Google, whether new content has been discovered, etc.
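As a rough illustration, the sketch below counts Googlebot hits per URL from raw access log lines. It assumes the common "combined" log format and identifies Googlebot by user agent only, which can be spoofed; the sample lines, the `LOG_PATTERN` regex and the `googlebot_hits` function are hypothetical and would need adapting to your own server.

```python
import re
from collections import Counter

# Assumption: logs use the "combined" format. Adapt the pattern to your server.
LOG_PATTERN = re.compile(
    r'\S+ \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<url>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)

def googlebot_hits(lines):
    """Count hits per URL for requests whose user agent mentions Googlebot.

    Matching on the user agent alone is a simplification: it does not
    verify that the request really came from Google.
    """
    hits = Counter()
    for line in lines:
        match = LOG_PATTERN.match(line)
        if match and "Googlebot" in match.group("agent"):
            hits[match.group("url")] += 1
    return hits

# Made-up sample data for illustration only.
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
sample = [
    f'66.249.66.1 - - [10/Oct/2023:13:55:36 +0000] "GET /product/1 HTTP/1.1" 200 512 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [11/Oct/2023:08:12:01 +0000] "GET /product/1 HTTP/1.1" 200 512 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [11/Oct/2023:08:12:05 +0000] "GET /old-page HTTP/1.1" 404 0 "-" "{GOOGLEBOT_UA}"',
    '203.0.113.7 - - [11/Oct/2023:09:00:00 +0000] "GET /product/2 HTTP/1.1" 200 640 "-" "Mozilla/5.0 Firefox/118.0"',
]

hits = googlebot_hits(sample)
print("Unique URLs crawled by Googlebot:", len(hits))
for url, count in hits.most_common():
    print(url, count)
```

Comparing the keys of `hits` with your list of product URLs would show which product pages Googlebot has not requested during the period covered by the logs.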

2# Understand if Google is favoring specific zones on your website

As we said, Google allocates a crawl budget to your site, so you need to optimize it. With log analysis, you can identify where Google is spending its crawl budget: on your “money pages” or on pages that have no value and don’t generate any SEO visits (visits from the organic results).
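One simple way to picture this is to compute the share of Googlebot hits that each section of the site receives. The sketch below assumes you already have a hit count per URL and groups pages by their first path segment; this grouping rule and the function names are hypothetical, and real segmentations are usually more refined.

```python
from collections import Counter

def group_of(url):
    """Hypothetical grouping rule: use the first path segment as the group."""
    segment = url.split("?")[0].strip("/").split("/")[0]
    return segment or "home"

def crawl_share_by_group(hits_per_url):
    """Return the fraction of all bot hits received by each group of pages."""
    totals = Counter()
    for url, hits in hits_per_url.items():
        totals[group_of(url)] += hits
    total = sum(totals.values())
    if total == 0:
        return {}
    return {group: hits / total for group, hits in totals.items()}

# Made-up hit counts for illustration only.
shares = crawl_share_by_group({
    "/product/1": 3,
    "/product/2": 1,
    "/search?q=shoes": 4,
})
for group, share in sorted(shares.items()):
    print(f"{group}: {share:.0%}")
```

In this made-up example, internal search pages absorb as many hits as product pages, which is the kind of imbalance worth investigating.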

3# Know what Google loves

You can detect your active pages, that is, the pages that receive SEO traffic. Taking into account all the pages that Google knows, this lets you determine the active ratio. In other words, it is the percentage of pages, among all pages known by Google, that generate traffic.
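The active ratio described above boils down to a simple division. Here is a minimal sketch, assuming you have one set of URLs known by Google (seen in bot hits) and one set of URLs that received at least one SEO visit; the function name and sample URLs are illustrative.

```python
def active_ratio(known_by_google, active_pages):
    """Share of pages known by Google that received at least one SEO visit."""
    known = set(known_by_google)
    if not known:
        return 0.0
    return len(known & set(active_pages)) / len(known)

# Made-up URL sets for illustration only.
known = {"/a", "/b", "/c", "/d"}
active = {"/a", "/c"}
ratio = active_ratio(known, active)
print(f"Active ratio: {ratio:.0%}")
```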

The Fresh Rank also helps determine whether Google loves your website. It is a metric that measures the time between the moment Google discovers a page and the moment Google sends its first visit. It is helpful to know how long you will have to wait to rank a product, for example.
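The exact way the Fresh Rank is computed is not detailed here, so the following is only a sketch of the idea: for each URL, measure the delay in days between the first Googlebot hit and the first visit coming from organic results. The function name and input dictionaries are assumptions made for illustration.

```python
from datetime import datetime

def discovery_to_first_visit_days(first_crawl, first_seo_visit):
    """Delay in days between the first bot hit and the first SEO visit, per URL.

    URLs without any SEO visit yet are left out of the result.
    """
    delays = {}
    for url, crawled_at in first_crawl.items():
        visited_at = first_seo_visit.get(url)
        if visited_at is not None and visited_at >= crawled_at:
            delays[url] = (visited_at - crawled_at).total_seconds() / 86400
    return delays

# Made-up timestamps for illustration only.
first_crawl = {
    "/product/1": datetime(2023, 1, 1, 12, 0),
    "/product/2": datetime(2023, 1, 2, 12, 0),
}
first_seo_visit = {"/product/1": datetime(2023, 1, 4, 12, 0)}

delays = discovery_to_first_visit_days(first_crawl, first_seo_visit)
print(delays)
```

Averaging these delays per group of pages gives a rough idea of how long a new product page waits before it starts receiving organic traffic.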

4# Identify obstacles

Log analysis is also useful to identify obstacles to the indexation of your pages. You can, for instance, monitor your status codes. Let’s say you see a rise in 302 redirects over a given period: you can deduce that a release did not go right. This has an impact on your crawl frequency and tends to lower Google’s crawl budget. That’s why it is important to correct those errors quickly, and this is where real-time alerts can help.

Sometimes status codes also change over time, especially with CMSs that can behave in weird ways. Pages whose status codes change too often will slow down Google’s crawl frequency. Again, seeing those changes in real time can help you take action quickly.
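Both checks can be sketched in a few lines. The example below assumes bot hits have already been parsed into `(day, url, status)` tuples; the function names and the 30% alert threshold are arbitrary choices made for illustration, not recommended values.

```python
from collections import Counter, defaultdict

def status_share_by_day(records, status):
    """Fraction of bot hits per day that returned the given status code."""
    totals = Counter()
    matching = Counter()
    for day, _url, code in records:
        totals[day] += 1
        if code == status:
            matching[day] += 1
    return {day: matching[day] / totals[day] for day in totals}

def urls_with_changing_status(records):
    """URLs that returned more than one distinct status code over the period."""
    seen = defaultdict(set)
    for _day, url, code in records:
        seen[url].add(code)
    return {url for url, codes in seen.items() if len(codes) > 1}

# Made-up parsed bot hits for illustration only.
records = [
    ("2023-10-10", "/a", 200),
    ("2023-10-10", "/b", 200),
    ("2023-10-11", "/a", 302),
    ("2023-10-11", "/b", 200),
]

THRESHOLD = 0.3  # arbitrary alert threshold, chosen for this example
shares_302 = status_share_by_day(records, 302)
alert_days = [day for day, share in shares_302.items() if share > THRESHOLD]
unstable = urls_with_changing_status(records)
print("Days with a 302 spike:", alert_days)
print("URLs with changing status codes:", unstable)
```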

5# Check the SEO impact by groups of pages

It is useful to categorize your pages into groups so that you can identify your traffic by group, see the performance of your product pages and the most active ones, and check which groups perform worse. It is also useful for validating your optimisations by group of pages.

6# Cross logs and crawl data

When doing log analysis, it is interesting to compare what Google actually does with the theoretical structure of your website.

To do so, you can compare data from your crawls with what Google actually knows. If Google knows more pages than the ones in the structure, it can reveal issues like orphan pages; conversely, the crawl can reveal pages that Google does not know. Orphan pages are pages that Google knows but that are not linked in the structure and thus no longer receive any popularity, as there are no links pointing to them.
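In practice, this comparison is a set operation between the URLs seen in the logs and the URLs found by your own crawl. A minimal sketch, assuming both lists are already normalized to the same URL form (the function name and sample URLs are hypothetical):

```python
def compare_logs_and_crawl(urls_in_logs, urls_in_crawl):
    """Split URLs into orphan pages, pages unknown to Google and pages in both."""
    logs, crawl = set(urls_in_logs), set(urls_in_crawl)
    return {
        # Hit by Googlebot but not reachable through the site structure.
        "orphan_pages": logs - crawl,
        # Present in the structure but never requested by Googlebot.
        "not_crawled_by_google": crawl - logs,
        "in_both": logs & crawl,
    }

# Made-up URL lists for illustration only.
result = compare_logs_and_crawl(
    urls_in_logs=["/", "/product/1", "/old-promo"],
    urls_in_crawl=["/", "/product/1", "/product/2"],
)
print(result["orphan_pages"])
print(result["not_crawled_by_google"])
```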

7# Find the factor to optimize

Comparing crawl and log data also helps determine which factor you should optimize. For instance, what is the impact of word count? We know that we need original content, long content… ok, but how many words? Depending on your topic, that number can vary, but in most cases the fewer words you have on your pages, the less Google wants to visit them.

By the same logic, are internal links a factor to work on?

The more links you have pointing to a page, the more often Google comes to crawl it. You can also measure how page speed impacts Google’s crawl. It can be helpful to prove it to your IT team ;)

8# Measure the impact of duplicate content on bots

You can also measure the impact of duplicate content on Googlebot. Canonical URLs can be a problem. If all the pages within a cluster of duplicate pages share a single canonical URL, Google will stop crawling those pages, and this is what you are looking for. But if the canonical URLs differ within the cluster of duplicate pages, Google will still crawl them as often as if there were no duplicate content. In this case, you are not saving any crawl budget.

9# Evaluate your architecture

With log and crawl analysis, you can check your groups of pages by depth. Are you sure you have placed the right pages at the right depth? Crawl depth is a parameter that can impact your crawl ratio. Indeed, pages that are far from the home page get crawled less often. Obviously, you can improve that with transversal, denser internal linking, but beyond level 7 or 8, Google has trouble finding your pages.
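To make the idea of a crawl ratio by depth concrete, here is a small sketch. It assumes your crawler gives you the depth of each URL (number of clicks from the home page) and that you have the set of URLs requested by Googlebot in the logs; the function name and sample data are hypothetical.

```python
from collections import defaultdict

def crawl_ratio_by_depth(depth_by_url, crawled_by_google):
    """For each depth, share of pages in the structure that Googlebot requested."""
    in_structure = defaultdict(int)
    crawled = defaultdict(int)
    for url, depth in depth_by_url.items():
        in_structure[depth] += 1
        if url in crawled_by_google:
            crawled[depth] += 1
    return {depth: crawled[depth] / in_structure[depth]
            for depth in sorted(in_structure)}

# Made-up structure and log data for illustration only.
depth_by_url = {"/": 0, "/cat": 1, "/cat/p1": 2, "/cat/p2": 2}
crawled_by_google = {"/", "/cat", "/cat/p1"}

ratios = crawl_ratio_by_depth(depth_by_url, crawled_by_google)
for depth, ratio in ratios.items():
    print(f"depth {depth}: {ratio:.0%} crawled")
```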

10# Prioritize your actions

Combined analysis helps you detect the factors that have an impact on bots’ behavior on your website, but also determine which pages you should focus on. Is it your product pages? You can find out how many of them generate traffic and SEO visits, so you know which pages to work on first.

With combined analysis, you can thus know which factors impact your pages’ performance.

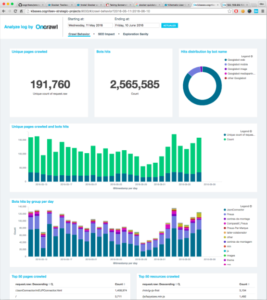

Moreover, any hypothesis needs to be validated with data. With SaaS tools like Oncrawl, you can find more than 300 metrics to use to build queries, validate your hypotheses and export the results to work on them. This makes it easier to prioritize your actions and justify their effectiveness.

To sum up, the key to log analysis is to cross logs and crawl data to open the Google black box. It will let you discover orphan pages, know where your active pages are and which ones you should optimize, etc.