Crawl frequency is how often Google and other search engines visit your website to index its content. It’s an important technical SEO signal that reflects how Google perceives your site’s health and performance. While it doesn’t directly boost domain authority or your search rankings, crawl frequency is key to ensuring your pages are discoverable, up to date, and eligible to rank well.

By analyzing your server log files, you can see your website’s crawl stats and evaluate whether high-value pages receive enough crawl requests or whether crawl budget is being wasted on low-priority URLs.

Thankfully, conducting a crawl frequency analysis is pretty straightforward. Read on to explore what factors influence crawl frequency, healthy and problematic patterns to watch out for, and quick fixes to optimize your overall site performance.

What is crawl frequency and what affects it?

Crawl frequency refers to the rate at which Googlebot and other search engine spiders request pages from your site. Also known as crawl rate, it’s often confused with crawl budget: the number of URLs a search engine can and wants to crawl on your site within a given time span.

Crawl budget is mostly a concern for large sites. For smaller sites, it’s generally less of an issue.

Several key factors influence crawl frequency, including:

- Page popularity: if a page has lots of high-quality backlinks or sits on a site with strong domain authority, there’s a better chance Google will allocate more crawl resources to it.

- Freshness signals: Googlebot prioritizes fresh content, including existing pages you update regularly. When your XML sitemap lists new or updated pages, crawl frequency often increases.

- Internal linking: well-structured sites with a clean site architecture and robust internal links help web crawlers discover new and updated pages.

- Page performance: slow responses and server errors (5xx status codes) can lead Googlebot to crawl less, and URLs that return errors like 404s tend to be recrawled less often over time. Fast, reliable pages with high-quality content see more frequent crawling.

Ultimately, crawl frequency is a proxy for how important Googlebot considers your content. Search engines like Google tend to crawl fast, popular, and authoritative pages more frequently.

Measuring crawl frequency using log files

Log file analysis is a quick and effective way to measure crawl frequency. Server logs record every request, containing data points like:

- Request URL: the exact path being crawled (like a page ending in /blog/blog-post-title);

- Time stamp: the date and time of each crawl request;

- User agent: a string that identifies Googlebot, Bingbot, and other web crawlers.

This information is crucial for tracking how often search engines visit specific pages or directories, giving you insights into your website’s overall crawl stats. Regularly monitoring this data can help you identify any crawl issues and optimize for better crawl rates.
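As a rough illustration, the Python sketch below pulls those three fields out of each log line and counts Googlebot requests per URL. It assumes your server writes the common "combined" log format used by many Apache and Nginx setups; the sample lines and the function names `parse_line` and `crawl_counts` are made up for this example. Matching on the user-agent string is only a first pass, since any client can claim to be Googlebot.

```python
import re
from collections import Counter
from datetime import datetime

# Assumes the "combined" log format: IP, identity, user, [time], "request", status, bytes, "referrer", "user agent".
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<url>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)

def parse_line(line):
    """Return (request URL, timestamp, user agent) for one log line, or None if it doesn't match."""
    match = LOG_PATTERN.match(line)
    if match is None:
        return None
    timestamp = datetime.strptime(match["time"], "%d/%b/%Y:%H:%M:%S %z")
    return match["url"], timestamp, match["agent"]

def crawl_counts(lines, bot="Googlebot"):
    """Count requests per URL whose user-agent string mentions `bot` (spoofable, so a first pass only)."""
    counts = Counter()
    for line in lines:
        parsed = parse_line(line)
        if parsed is not None and bot in parsed[2]:
            counts[parsed[0]] += 1
    return counts

GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
sample = [
    f'66.249.66.1 - - [10/Mar/2025:06:25:24 +0000] "GET /blog/blog-post-title HTTP/1.1" 200 5120 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [11/Mar/2025:07:02:10 +0000] "GET /blog/blog-post-title HTTP/1.1" 200 5120 "-" "{GOOGLEBOT_UA}"',
    '203.0.113.5 - - [11/Mar/2025:07:05:00 +0000] "GET /pricing HTTP/1.1" 200 2048 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]
print(crawl_counts(sample))  # prints Counter({'/blog/blog-post-title': 2})
```

Because `parse_line` also returns the timestamp, the same records can be grouped by day or month to track how crawl frequency changes over time.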

What tools are available to simplify your crawl frequency analysis?

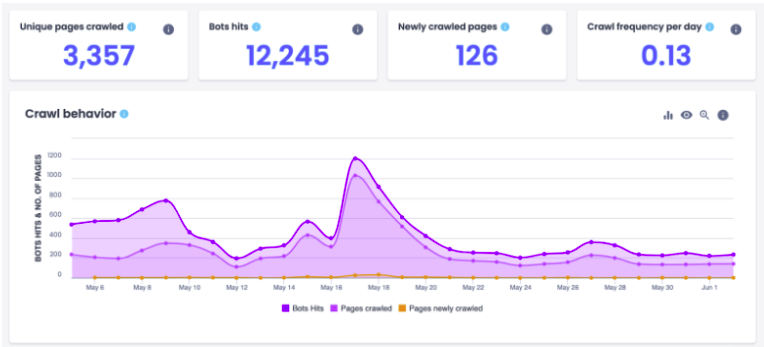

One of the most effective platforms for crawl frequency analysis is Oncrawl. It processes log files to detect crawl frequency trends, identify high- and low-priority pages, and correlate crawl behavior with page value. Oncrawl also helps you visualize crawl distribution across site segments, which is essential for spotting crawl budget waste and optimizing crawler access.

Source: Oncrawl

Other options include open-source Python scripts and command-line utilities.
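If you write your own script, it’s worth confirming that requests claiming to be Googlebot really come from Google. Google’s documented method is a reverse DNS lookup on the requesting IP, a check that the hostname ends in googlebot.com or google.com, and a forward DNS lookup confirming that the hostname resolves back to the same IP. Here’s a minimal standard-library sketch of that check; the function names are hypothetical, and the DNS lookups need network access.

```python
import socket

# Reverse-DNS domains Google documents for Googlebot (other Google fetchers may use different domains).
GOOGLE_CRAWLER_SUFFIXES = (".googlebot.com", ".google.com")

def has_google_hostname(hostname):
    """True if a reverse-DNS hostname ends in one of Google's crawler domains."""
    return hostname.rstrip(".").lower().endswith(GOOGLE_CRAWLER_SUFFIXES)

def is_verified_googlebot(ip):
    """Reverse-resolve the IP, check the domain, then confirm the hostname resolves back to the same IP."""
    try:
        hostname, _, _ = socket.gethostbyaddr(ip)
        if not has_google_hostname(hostname):
            return False
        forward_ips = {info[4][0] for info in socket.getaddrinfo(hostname, None)}
    except OSError:  # covers lookup failures such as socket.herror and socket.gaierror
        return False
    return ip in forward_ips

# Example (needs network access): is_verified_googlebot("66.249.66.1")
```

Since DNS lookups are slow, you would typically run this once per unique IP in your logs and cache the results.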

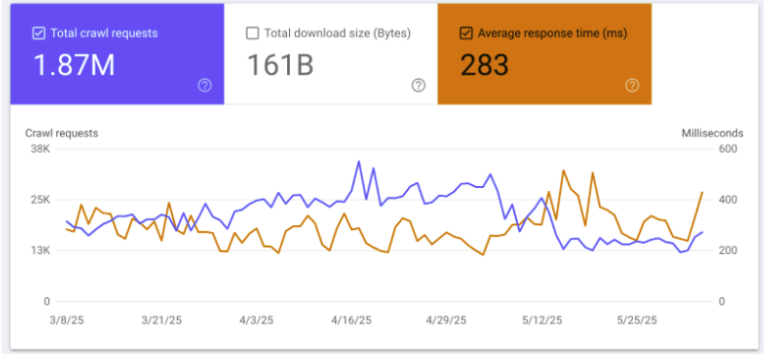

You can also compare this data with Google Search Console’s Crawl Stats report, which provides aggregate insights into Google’s crawl behavior, including total requests per day and average response time.

The above figure highlights the kind of crawl data available in Google Search Console. Search Console also provides crawl request breakdowns based on host, response, file type, and more.

What crawl frequency tells you about site health

Interpreting crawl frequency patterns can reveal a lot about how Googlebot gauges your site’s health. Below, you’ll find both positive signs and red flags to watch for when analyzing crawl frequency.

Positive signs

Here are the top indicators that your site is healthy:

- High crawl frequency on valuable pages: if money pages or routinely updated blog posts receive more crawl requests, it indicates search engine bots prioritize your high-value content.

- Stable crawling patterns on top-level pages: homepages, category pages, and key directory indexes should show consistent crawl rates over time.

- Balanced crawl distribution across site sections: each section of your site should receive a reasonable crawl frequency relative to its importance.

- New pages indexing quickly: newly published content appearing in Google search results soon after publication suggests a healthy crawl rate.
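To check whether crawl activity is balanced across sections, a simple starting point is to group Googlebot’s requests by top-level directory and compare each section’s share. The sketch below assumes the URLs have already been filtered to genuine Googlebot requests; the sample list is hypothetical, and treating the first path segment as a "section" is a simplification that won’t fit every site architecture.

```python
from collections import Counter
from urllib.parse import urlsplit

def section_of(url):
    """Map a URL such as /blog/post-a?page=2 to its top-level section, here /blog/."""
    first_segment = urlsplit(url).path.strip("/").split("/")[0]
    return f"/{first_segment}/" if first_segment else "/"

def crawl_share_by_section(crawled_urls):
    """Return each section's share of crawl requests as a percentage, largest first."""
    counts = Counter(section_of(url) for url in crawled_urls)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {section: round(100 * n / total, 1) for section, n in counts.most_common()}

# Hypothetical Googlebot request paths, e.g. taken from parsed log lines.
googlebot_urls = ["/", "/blog/post-a", "/blog/post-b", "/blog/post-a",
                  "/tags/seo", "/tags/crawl?page=2", "/products/widget", "/products/gadget"]
print(crawl_share_by_section(googlebot_urls))
```

In this sample, /blog/ receives 37.5% of requests while /tags/ gets as much as /products/ (25% each); whether that split is healthy depends on how much each section matters to your business.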

“In the context of freshness signals, it’s especially important to understand Google’s Query Deserves Freshness (QDF) algorithm. The search engine actively prioritizes the latest information for queries requiring current data, such as news, trending topics, or recurring events. Frequent crawling on pages with this type of content can signal that a given site is a valuable source of information.”

– Jakub Adamiec, Senior Technical SEO Specialist at Surfshark

Red flags

The following issues could signal problems on your web pages:

- Crawls concentrated on irrelevant URLs: spending crawl budget on low-priority or duplicate URLs — like those created by junk parameters and dynamic filters — can starve relevant content of crawl activity.

- Low crawl activity on important content: weak internal linking or an incomplete XML sitemap can leave new pages with high traffic potential receiving few crawl requests.

- Crawl dips or spikes after site changes: sudden drops in crawl rate after a major update can signal technical issues like broken links or status errors. Conversely, abrupt spikes that occur without any updates could hint at problems, too.
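One way to catch sudden dips or spikes is to compare each day’s Googlebot request count with a baseline. This sketch uses the median day as that baseline, because the median isn’t pulled around much by the outlier days themselves, and flags days that move 50% or more away from it. The 50% threshold, the `flag_crawl_anomalies` name, and the sample counts are assumptions to tune for your own site.

```python
from statistics import median

def flag_crawl_anomalies(daily_counts, threshold=0.5):
    """Label days whose request count is at least `threshold` (a fraction) below or above the median day."""
    if not daily_counts:
        return {}
    baseline = median(daily_counts.values())
    if baseline == 0:
        return {}
    flags = {}
    for day, count in sorted(daily_counts.items()):
        change = (count - baseline) / baseline
        if change <= -threshold:
            flags[day] = "dip"
        elif change >= threshold:
            flags[day] = "spike"
    return flags

# Hypothetical Googlebot requests per day, e.g. counted from parsed log lines.
daily = {"2025-03-01": 410, "2025-03-02": 395, "2025-03-03": 402,
         "2025-03-04": 120, "2025-03-05": 980, "2025-03-06": 405}
print(flag_crawl_anomalies(daily))  # prints {'2025-03-04': 'dip', '2025-03-05': 'spike'}
```

A flagged day is a prompt to check what changed around that date (deployments, robots.txt edits, server incidents), not proof of a problem on its own.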

Pro tip: Using a crawl distribution chart, like the one found in Oncrawl, is a great way to visualize crawl patterns and make informed decisions on optimizing your site’s content and structure. With Oncrawl, you can filter by URL patterns, templates, or page types to see exactly where crawl resources are being spent — and whether they align with your content priorities.
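If you don’t have a segmentation tool on hand, a rough do-it-yourself check for the junk-parameter problem is to measure how much of Googlebot’s activity lands on URLs with query strings and which parameter names appear most often. This is a hypothetical Python sketch, not how Oncrawl works internally; the sample URLs and the `parameter_crawl_report` name are illustrative.

```python
from collections import Counter
from urllib.parse import urlsplit, parse_qsl

def parameter_crawl_report(crawled_urls):
    """Return (% of requests hitting parameterized URLs, parameter names ranked by how many requests use them)."""
    param_counts = Counter()
    parameterized = 0
    for url in crawled_urls:
        query = urlsplit(url).query
        if query:
            parameterized += 1
            # Count each parameter name once per URL, even if it repeats (e.g. ?sort=a&sort=b).
            names = {name for name, _ in parse_qsl(query, keep_blank_values=True)}
            param_counts.update(names)
    share = 100 * parameterized / len(crawled_urls) if crawled_urls else 0.0
    return round(share, 1), param_counts.most_common()

# Hypothetical Googlebot request paths.
urls = ["/products/widget", "/products/widget?color=red&sort=price",
        "/products/widget?sort=name", "/products/gadget?sessionid=abc123", "/blog/post-a"]
share, top_params = parameter_crawl_report(urls)
print(share, top_params)
```

Here 60% of requests hit parameterized URLs, with `sort` leading; parameters like session IDs are usually the first candidates for canonical tags or robots.txt rules.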

Crawl frequency: quick fixes and best practices

Take these steps to optimize crawl frequency whenever issues are spotted:

Addressing over-crawled pages

If crawlers are spending too many requests on low-value pages, consider these measures:

- Use robots.txt: disallow unnecessary directories, like /tags/ or a /scripts/ folder that holds nothing Googlebot needs to render your pages.

- Add canonical tags: point parameterized or printer-friendly URLs to the canonical version. This helps Google consolidate duplicates and can reduce how often it crawls the variants.

- Include noindex tags: use a noindex robots meta tag to keep pages that crawlers can reach, like login or admin pages, out of search results. Googlebot has to crawl a page to see this tag, so don’t also block that URL in robots.txt.
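Before relying on canonical and noindex tags, it helps to confirm what a page actually declares. This sketch uses Python’s built-in `html.parser` to collect the `rel="canonical"` link and any `robots` or `googlebot` meta directives from a page’s HTML. The class name and sample markup are hypothetical, and the check doesn’t cover the `X-Robots-Tag` HTTP header, which can also carry noindex.

```python
from html.parser import HTMLParser

class IndexingTagParser(HTMLParser):
    """Collect the canonical URL and robots meta directives from a page's HTML."""

    def __init__(self):
        super().__init__()
        self.canonical = None
        self.robots = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)  # attribute values can be None, hence the `or ""` fallbacks
        rel_values = (attrs.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel_values:
            self.canonical = attrs.get("href")
        elif tag == "meta" and (attrs.get("name") or "").lower() in ("robots", "googlebot"):
            content = attrs.get("content") or ""
            self.robots += [d.strip().lower() for d in content.split(",") if d.strip()]

# Hypothetical page markup.
html = """<html><head>
<link rel="canonical" href="https://www.example.com/products/widget">
<meta name="robots" content="noindex, follow">
</head><body></body></html>"""

parser = IndexingTagParser()
parser.feed(html)
print(parser.canonical, "noindex" in parser.robots)  # prints https://www.example.com/products/widget True
```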

“Be careful while editing the robots.txt file. Make sure you don’t block access to critical resources, such as CSS or JavaScript files that Googlebot needs to render page content properly. If Googlebot can’t do this correctly, it may not understand a page’s content in full, which could lead to indexing problems or misinterpretation of the page’s meaning.”

– Jakub Adamiec, Senior Technical SEO Specialist at Surfshark
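One way to put that advice into practice is to test a draft robots.txt against URLs you know should or shouldn’t be crawlable before deploying it. This sketch uses Python’s standard `urllib.robotparser`; the rules, URLs, and the `audit_robots` helper are hypothetical. It flags `/scripts/app.js`, showing how a directory-level disallow can quietly block JavaScript that Googlebot needs for rendering. Note that `urllib.robotparser` applies rules in file order and doesn’t implement Google’s wildcard or longest-match handling, so verify complex files with Search Console’s URL Inspection tool.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for www.example.com.
ROBOTS_TXT = """\
User-agent: *
Disallow: /scripts/
Disallow: /tags/
"""

# Whether each URL should be crawlable (True) or blocked (False); all paths are illustrative.
EXPECTED = {
    "https://www.example.com/blog/blog-post-title": True,
    "https://www.example.com/assets/css/main.css": True,  # CSS needed for rendering
    "https://www.example.com/scripts/app.js": True,       # JavaScript needed for rendering
    "https://www.example.com/tags/seo": False,            # low-value tag page
}

def audit_robots(robots_txt, expected, agent="Googlebot"):
    """Return URLs whose crawlability under robots_txt differs from what we expect."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url, should_allow in expected.items()
            if parser.can_fetch(agent, url) != should_allow]

print(audit_robots(ROBOTS_TXT, EXPECTED))  # prints ['https://www.example.com/scripts/app.js']
```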

Addressing under-crawled pages

Websites looking to increase crawl frequency should try the following:

- Strengthen internal links: ensure that high-priority content has clear internal links from the homepage and other authority pages;

- Check status codes: audit for 4xx/5xx errors in your logs or in Search Console’s Crawl Stats report, fix broken links, and work on lowering average response time;

- Include new pages in an updated sitemap: keep your XML sitemap current so search engine bots can find new and modified pages.
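If your CMS doesn’t generate a sitemap for you, building one takes only a few lines. This sketch uses Python’s `xml.etree.ElementTree` to write a sitemap in the sitemaps.org format with a `lastmod` date for each URL; the page list and the `build_sitemap` name are hypothetical. Google has said it uses `lastmod` when the value is consistently accurate, so only change the date when the page content actually changes.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(pages):
    """Return sitemap XML for (URL, last-modified date) pairs in the sitemaps.org format."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # ElementTree escapes characters such as & automatically
        # W3C date format (YYYY-MM-DD); change it only when the page content changes.
        ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body

# Hypothetical pages and dates.
pages = [
    ("https://www.example.com/blog/blog-post-title", "2025-03-10"),
    ("https://www.example.com/products/widget", "2025-02-21"),
]
print(build_sitemap(pages))
```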

Ongoing crawl monitoring

Even if you aren’t seeing any crawl issues, it’s important to analyze your log files regularly. With Oncrawl, you can schedule monthly or quarterly reviews of your web pages, site links, and crawl stats reports to catch unexpected behavior or server errors.
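For a do-it-yourself version of these recurring reviews, a monthly roll-up of Googlebot requests and error rates is a reasonable place to start. The sketch below assumes you’ve already parsed your logs into (date, status code) pairs for verified Googlebot requests; the sample records and the `monthly_crawl_summary` name are hypothetical.

```python
from collections import defaultdict
from datetime import date

def monthly_crawl_summary(records):
    """Group (date, status) Googlebot records by month: total requests and % that returned 4xx/5xx."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for day, status in records:
        month = day.strftime("%Y-%m")
        totals[month] += 1
        if 400 <= status < 600:
            errors[month] += 1
    return {m: (totals[m], round(100 * errors[m] / totals[m], 1)) for m in sorted(totals)}

# Hypothetical parsed records.
records = [(date(2025, 2, 27), 200), (date(2025, 2, 28), 404),
           (date(2025, 3, 3), 200), (date(2025, 3, 4), 503), (date(2025, 3, 5), 200)]
print(monthly_crawl_summary(records))  # prints {'2025-02': (2, 50.0), '2025-03': (3, 33.3)}
```

Comparing these figures month over month makes it easier to spot a rising error rate before it starts to affect how often Googlebot crawls your site.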

Googlebot crawls mostly from US-based IP addresses, so if your site serves different content by location, it’s important to have a clear international SEO strategy that keeps every localized version reachable. Tools like a secure VPN service can help you check how your localized content appears to users in different countries.

Key takeaway

Popular websites — the ones with fresh content, high domain authority, and impressive performance in Google search results — know how to leverage crawl frequency to their advantage. But crawl frequency is a vital metric for any website.

Measuring your crawl rate with log file analysis shows which parts of your site Google prioritizes, and proactive monitoring helps you detect crawler-related issues early.

For effective SEO, optimizing crawl frequency is essential. Regular updates, robust internal linking, and a sound site structure can significantly improve your site’s health and give your pages a better chance to rank.