If you work in SEO, you’ve likely heard it (and repeated it to your stakeholders) a thousand times: You can write the best content in the world, but if Google can’t find it, it’s not worth much. The same goes for products and services—no matter what your website offers, search engines have to be able to discover and recognize those offerings in order to rank them in search results.

This principle is why technical SEO is the foundation of a healthy website. And for many sites, getting important pages crawled and indexed isn’t a terribly difficult goal to achieve. You add a new URL, it appears in your sitemap, it isn’t blocked in robots.txt, perhaps you throw a few internal links its way. Et voilà! It’s eligible for the SERPs. But for larger and more complex sites, it’s not always that easy.

Search engines’ crawling capabilities are vast, but they do have a limit. Google prioritizes crawling of pages it sees as valuable, and if you’re not sending the right signals about pages you consider important for crawling, they might be overlooked in favor of others.

While this isn’t usually an issue for smaller and less complex sites, there are a few industries in which crawl budget management should factor into a technical SEO strategy.

Large e-commerce sites with huge numbers of products, news publishers that add new pages every day, and sites with at least a million URLs are all likely to have legitimate crawl concerns. Let’s look at how to manage those concerns by auditing the crawl efficiency of your most valuable pages.

Identifying valuable URLs

You might think of every single URL within your website as necessary, but that doesn’t mean that every page is valuable for organic search purposes.

For example, the terms of service page on your e-commerce site might exist due to legal necessity, but it doesn’t provide any direct or indirect monetary value for the business (and it probably doesn’t need to be crawled).

Because search engines spend their crawl budget on pages they perceive as valuable, you’ll have to define value within the context of your site before you can align Google’s perceptions with your own.

In some cases, valuable pages will be immediately obvious. An e-commerce site’s product pages usually drive the most direct monetary value, with category pages close behind.

A large news publisher’s most recent articles are its most valuable because they’re what keep the site consistently relevant. But in other cases, you’ll have to dive deeper into conversion and engagement metrics to find indirect links between organic search and the bottom line.

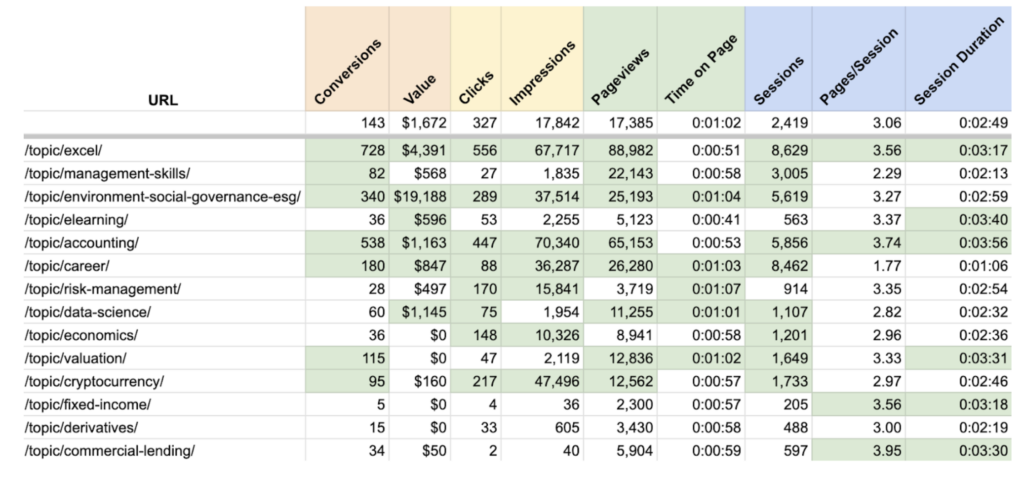

Conversion pages (which feature products directly) are obviously of high value, but some resource pages, like those shown above, can also be tied to actual dollars.

By pulling data from Google Analytics, we’re able to see which of these resource pages were visited the most frequently on a customer’s way to completing a conversion and what the monetary value of those conversions was.
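As a rough sketch of that analysis, assume your analytics export gives you, for each converting session, the ordered list of pages viewed plus the conversion’s value. A few lines of Python can then rank resource pages by how often they appear on a converting path. The `ConversionPath` shape and the `/resources/` prefix are hypothetical (this is not a Google Analytics API), and every page on a path gets full credit rather than a share from an attribution model.

```python
from collections import defaultdict

# Hypothetical export shape: (ordered page paths in a converting session, conversion value).
# This is NOT a Google Analytics API type; map your own export onto it.
ConversionPath = tuple[list[str], float]


def resource_page_value(
    paths: list[ConversionPath], prefix: str = "/resources/"
) -> dict[str, dict[str, float]]:
    """For each resource page, count the converting paths that include it and sum their value."""
    stats: dict[str, dict[str, float]] = defaultdict(lambda: {"conversions": 0, "value": 0.0})
    for pages, value in paths:
        # A page visited twice on the same path is still credited only once.
        for page in set(pages):
            if page.startswith(prefix):
                stats[page]["conversions"] += 1
                stats[page]["value"] += value
    return dict(stats)


paths = [
    (["/resources/sizing-guide", "/products/boots", "/checkout"], 120.0),
    (["/resources/sizing-guide", "/resources/care-tips", "/checkout"], 80.0),
    (["/products/boots", "/checkout"], 95.0),
]
ranked = sorted(resource_page_value(paths).items(), key=lambda kv: kv[1]["value"], reverse=True)
for page, page_stats in ranked:
    print(page, page_stats)
```

Because each path credits every resource page it touched, the `value` totals overlap across pages; treat them as a ranking signal, not as revenue to be summed.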

We can also use GA and Search Console data to judge how valuable each page is in terms of engagement—organic clicks, time on page, etc.

Keep in mind that sometimes, pages that don’t make money should still be prioritized for crawling.

In the case of an enterprise SaaS site, solution and pricing pages may sit at the bottom of the sales funnel, but whitepapers, webinars, and other resources serve a vital top-of-funnel function in growing brand awareness and bringing informational searchers to the site.

If these pages aren’t crawled efficiently, the site’s traffic will be entirely reliant on users who are ready to purchase, with no opportunity to guide early-stage users down the funnel over time.

The simplest way to segment your site and monitor crawling of different page types is to organize your sitemaps accordingly. On an e-commerce site, if you place all product pages into one set of sitemaps, all category pages into another, and so on, you can easily identify indexation patterns and keep track of issues affecting each page type.

Some CMSs will organize your sitemaps this way automatically, as long as your page templates and URL structures are properly applied.
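If your CMS doesn’t do this for you, the grouping is simple to script. This minimal sketch assumes a hypothetical `PAGE_TYPES` mapping from URL path prefixes to page types. It writes one sitemap per type plus a sitemap index that points to each file; the file names and `example.com` URLs are placeholders.

```python
import xml.etree.ElementTree as ET
from collections import defaultdict
from urllib.parse import urlsplit

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

# Hypothetical prefix-to-page-type mapping; adjust to your own URL structure.
PAGE_TYPES = {"/products/": "products", "/category/": "categories", "/blog/": "blog"}


def page_type(url: str) -> str:
    path = urlsplit(url).path
    for prefix, name in PAGE_TYPES.items():
        if path.startswith(prefix):
            return name
    return "other"


def build_sitemaps(urls: list[str], base_url: str) -> dict[str, str]:
    """Return {filename: XML string}: one sitemap per page type plus a sitemap index."""
    groups: dict[str, list[str]] = defaultdict(list)
    for url in urls:
        groups[page_type(url)].append(url)

    files: dict[str, str] = {}
    index = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for name, members in sorted(groups.items()):
        urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
        for url in members:
            ET.SubElement(ET.SubElement(urlset, "url"), "loc").text = url
        filename = f"sitemap-{name}.xml"
        files[filename] = ET.tostring(urlset, encoding="unicode")
        # The index lists each per-type sitemap so Search Console can report on them separately.
        ET.SubElement(ET.SubElement(index, "sitemap"), "loc").text = f"{base_url}/{filename}"
    files["sitemap-index.xml"] = ET.tostring(index, encoding="unicode")
    return files
```

The sitemaps protocol caps each file at 50,000 URLs (and 50MB uncompressed), so a version for a large site would also split each page type into numbered chunks.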

Pinpointing crawl inefficiencies

You’ve identified which pages should be monitored most closely and organized those pages in a way that makes monitoring easy. Now you’ll want to find out how thoroughly and efficiently your valuable pages are crawled and indexed, investigate the potential causes of inefficiency, and do what you can to encourage search engines to crawl your site according to your priorities.

Let’s say you’ve identified your e-commerce products folder as your highest crawling priority. You can start by running a site: search for that directory (for example, site:example.com/products/), which will give you a rough sense of whether the number of product URLs in existence is similar to the number that Google has indexed. Keep in mind that site: result counts are estimates, not exact index totals.

If this number is significantly lower than the number of products living on your site, you’ll know there’s a crawling and/or indexation problem to be addressed.

You can also use a tool like Oncrawl to crawl your entire site or a specific subfolder and return the status codes and indexability of each URL in question. Any valuable page that doesn’t return a 200 status code and show as “indexable” is one you’ll want to investigate further.

Source: Oncrawl

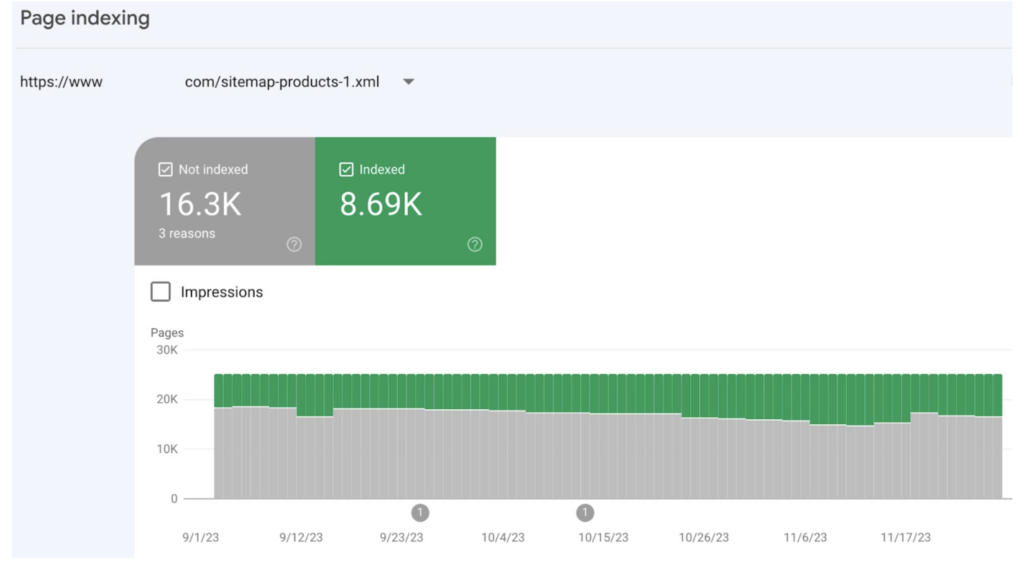
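Conceptually, the status-and-indexability check a crawler runs on each URL boils down to a few tests. This Python sketch covers only the most common ones: a 200 status and no `noindex` in either the `X-Robots-Tag` header or a robots meta tag. It assumes you have already fetched the status, headers, and HTML; it ignores canonicals and robots.txt, and its regex expects the `name` attribute to come before `content`, so treat it as an illustration rather than a replacement for a crawler.

```python
import re

# Matches <meta name="robots" content="..."> (or name="googlebot").
# Simplification: assumes the name attribute appears before content.
META_ROBOTS = re.compile(
    r"""<meta[^>]+name=["'](?:robots|googlebot)["'][^>]*content=["']([^"']*)["']""",
    re.IGNORECASE,
)


def is_indexable(status: int, headers: dict[str, str], html: str) -> tuple[bool, str]:
    """Return (indexable?, reason) for an already-fetched response."""
    if status != 200:
        return False, f"status {status}"
    # HTTP header names are case-insensitive.
    lowered = {name.lower(): value for name, value in headers.items()}
    if "noindex" in lowered.get("x-robots-tag", "").lower():
        return False, "X-Robots-Tag noindex"
    for content in META_ROBOTS.findall(html):
        if "noindex" in content.lower():
            return False, "meta robots noindex"
    return True, "indexable"
```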

If you’ve organized your sitemaps by page type and submitted them to Search Console, you can also investigate issues affecting each page type by looking at each sitemap’s page indexing report.

Source: Search Console

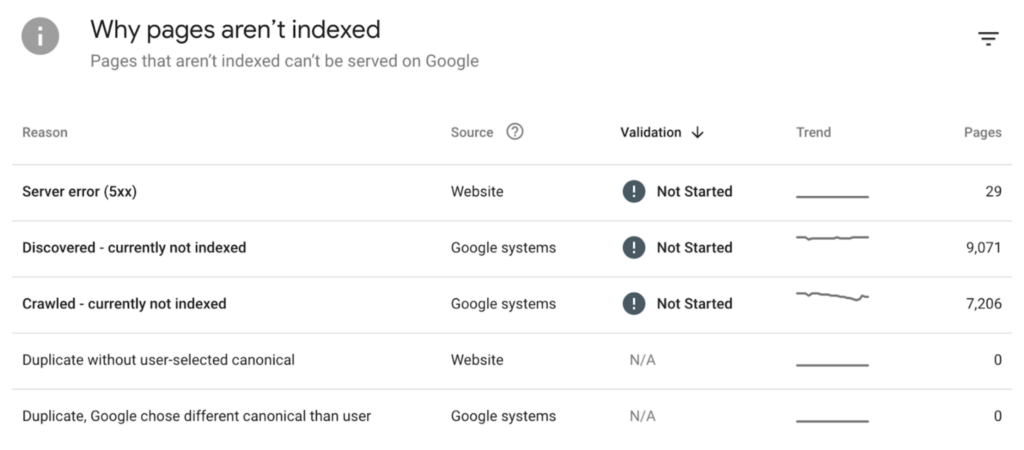

In this case, the indexation of product pages has fluctuated over time, but it’s clear that there are issues affecting the majority of these pages. We can see the reasons listed below as well as a sampling of specific pages being affected by each issue.

Source: Search Console

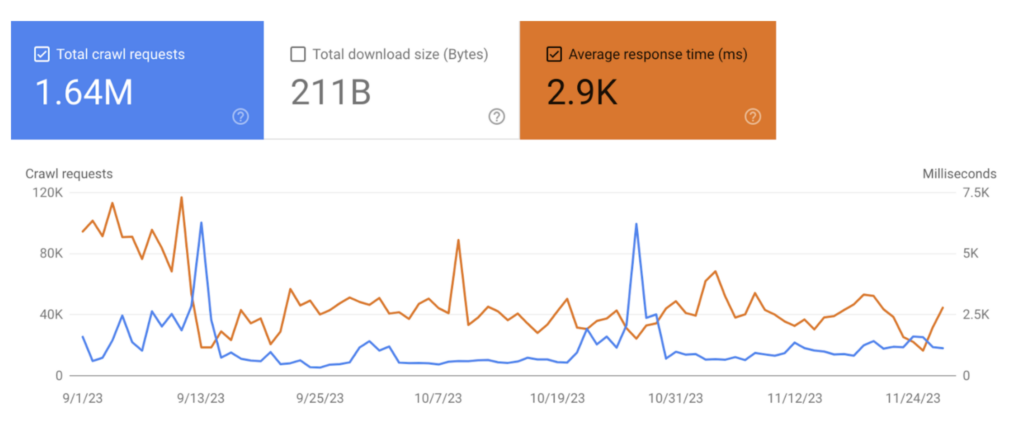

Search Console also offers full-site crawling statistics from within the Settings menu, under Crawling > Crawl stats.

Here you’ll find the total number of crawl requests your site has recently experienced (whether those requests were successful or not), as well as the average time it took for your site to respond to a crawl request.

While you can’t segment this data by subfolder, you can use it to gauge Google’s crawl request frequency relative to the number of valuable URLs on your site and determine whether page quality and use of crawling directives are affecting how thoroughly your site can be crawled.

Source: Search Console

For the example above, Google crawlers have visited an average of 18,500 pages per day. On a site with more than 600,000 URLs, this is an obvious crawling deficit. And we can identify some potential culprits.

First, the site’s average crawl response time is quite high—nearly 3,000ms, when anything over 300ms should be considered worrisome. This could be because Google is expected to crawl too much low-value content, either because too much of it exists or because the site has insufficient crawling controls implemented.

Second, we can scroll down a bit and see that only 7% of this site’s crawl requests were made for the purpose of discovering new pages.

Source: Search Console

This suggests that the vast majority of the site’s 600,000 URLs either aren’t being discovered and crawled at all or are being crawled very, very slowly.
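The arithmetic behind these two observations is worth making explicit. This short sketch plugs in the example’s figures (about 18,500 crawl requests per day, roughly 600,000 URLs, and a 7% discovery share); the function name is just illustrative.

```python
def crawl_coverage(total_urls: int, requests_per_day: float, discovery_share: float) -> dict[str, float]:
    """Back-of-the-envelope crawl math from Search Console crawl stats figures."""
    return {
        # Best case: assumes Googlebot never requests the same URL twice, which it does.
        "days_for_one_full_pass": total_urls / requests_per_day,
        # Requests spent on URLs Google has never crawled before.
        "discovery_requests_per_day": requests_per_day * discovery_share,
    }


coverage = crawl_coverage(total_urls=600_000, requests_per_day=18_500, discovery_share=0.07)
print(round(coverage["days_for_one_full_pass"], 1))   # 32.4
print(round(coverage["discovery_requests_per_day"]))  # 1295
```

Even in that best case, a single pass over every URL would take more than a month, and only about 1,300 requests a day go toward finding new URLs.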

The ultimate source of truth for how Google crawls your site lies in your log files. Using a log file analyzer with filters set to show only hits from Google spiders will let you know exactly which URLs the search engine hits, how frequently it crawls those URLs, and how successful and efficient those crawls are.

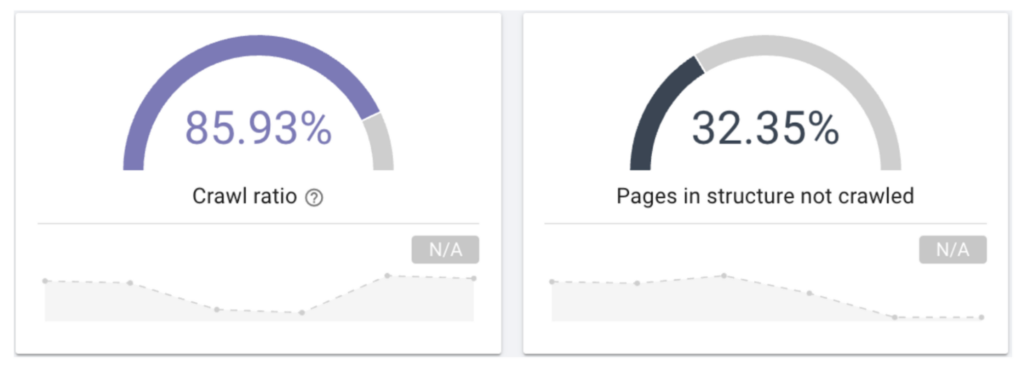

Source: Oncrawl
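To make the filtering step concrete, here is a minimal Python sketch of what a log analyzer does when you restrict it to Google’s crawler. It assumes your servers write the common Apache/Nginx “combined” log format and simply counts hits per (path, status) pair for requests whose user agent contains `Googlebot`.

```python
import re
from collections import Counter
from typing import Iterable

# Apache/Nginx "combined" log format; adjust the pattern if your servers log differently.
LOG_LINE = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[[^\]]+\] '
    r'"\S+ (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_hits(lines: Iterable[str]) -> Counter:
    """Count (path, status) pairs for requests whose user agent claims to be Googlebot."""
    hits: Counter = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if match and "Googlebot" in match["agent"]:
            hits[(match["path"], int(match["status"]))] += 1
    return hits
```

User-agent strings are easy to spoof, so before drawing conclusions, confirm that the requesting IPs really belong to Google (Google documents a reverse DNS lookup procedure for this); a dedicated log analyzer may handle that verification for you.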

Sampling this data over a long period of time and segmenting it by page type can help you identify problematic patterns to address with site improvements or better crawling and indexation controls.
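Once hits are parsed, segmenting them by page type over time is a grouping exercise. This sketch assumes you already have `(date, path)` pairs for verified Googlebot requests and uses hypothetical prefix rules in `SEGMENTS`. It buckets hits by ISO week so that shifts between page types stand out week over week.

```python
from collections import Counter
from datetime import date

# Hypothetical prefix rules; the first matching prefix wins.
SEGMENTS = [("/products/", "product"), ("/category/", "category"), ("/blog/", "article")]


def segment(path: str) -> str:
    for prefix, name in SEGMENTS:
        if path.startswith(prefix):
            return name
    return "other"


def weekly_hits_by_segment(hits: list[tuple[date, str]]) -> dict[tuple[int, int], Counter]:
    """Group Googlebot hits into {(ISO year, ISO week): Counter({segment: hit count})}."""
    weekly: dict[tuple[int, int], Counter] = {}
    for day, path in hits:
        iso_year, iso_week, _ = day.isocalendar()
        weekly.setdefault((iso_year, iso_week), Counter())[segment(path)] += 1
    return weekly
```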

Auditing inefficiently crawled URLs

Once you know which pages are suffering from inefficient crawling, the next step is to find out why. Conducting a site audit is an efficient way to find your answers. Launching a crawl in Oncrawl helps you collect various types of data to analyze and cross-analyze. The available reports include:

- Logs monitoring

- Crawl report

- SEO impact report

- Ranking report

- Social media report

Generally, there are two primary reasons a page might not be crawled effectively: poor use of technical controls and poor page quality.

Technical SEO considerations

In order for a page to be discovered and crawled, it needs to be linked to from somewhere. The more prominently a page is placed in the site architecture, the stronger the suggestion to search engines that this page is important for crawling and indexation.

Placing a link to a category page in your top navigation or in a persistent footer, for example, creates the impression that the page is of high value and ought to be treated as such. It’s a good idea to create a visual map of all valuable pages on your site and ensure that important pages link to each other effectively, with no high-value URL living any more than three clicks away from the home page.
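Click depth is the length of the shortest link path from the home page, which a breadth-first search computes directly. This sketch assumes you have an internal link graph as a dictionary mapping each URL to the URLs it links to (for example, built from a crawler export), and it flags valuable pages that sit deeper than three clicks or can’t be reached at all.

```python
from collections import deque
from typing import Iterable


def click_depths(links: dict[str, list[str]], home: str = "/") -> dict[str, int]:
    """Breadth-first search from the home page: depth = fewest clicks needed to reach a URL."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # the first visit in BFS order is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def too_deep(depths: dict[str, int], valuable: Iterable[str], max_depth: int = 3) -> list[str]:
    """Valuable URLs deeper than max_depth, or unreachable from the home page entirely."""
    return sorted(url for url in valuable if depths.get(url, max_depth + 1) > max_depth)


links = {
    "/": ["/c/shoes", "/about"],
    "/c/shoes": ["/c/shoes?page=2"],
    "/c/shoes?page=2": ["/c/shoes?page=3"],
    "/c/shoes?page=3": ["/p/boot-123"],
}
depths = click_depths(links)
print(too_deep(depths, ["/c/shoes", "/p/boot-123", "/p/orphan"]))  # ['/p/boot-123', '/p/orphan']
```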

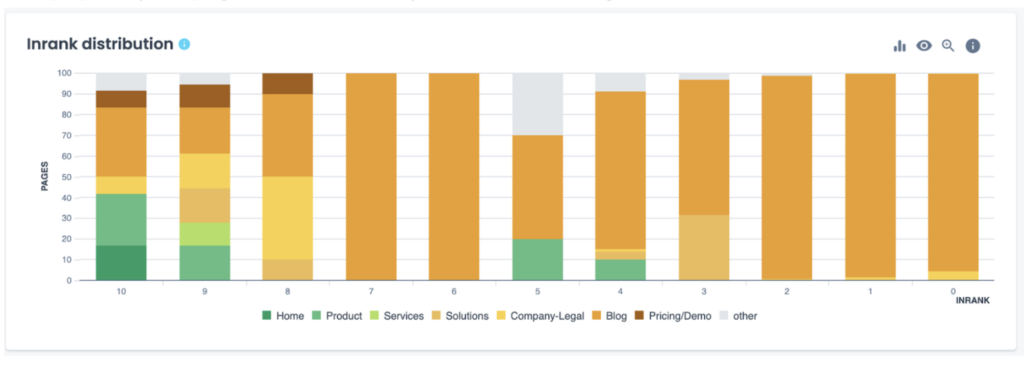

Looking at your Inrank distribution (Oncrawl’s measure of internal PageRank) helps you understand how popular your pages are based on your internal linking architecture.

Source: Oncrawl
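Oncrawl’s Inrank formula is its own, so the following is not that calculation. It is the textbook PageRank power iteration run over an internal link graph, which gives a rough sense of how link equity flows between your pages. The damping factor of 0.85 and the 50 iterations are conventional defaults rather than tuned values, and pages without outlinks have their score spread evenly across the site.

```python
def internal_pagerank(
    links: dict[str, list[str]], damping: float = 0.85, iterations: int = 50
) -> dict[str, float]:
    """Textbook PageRank by power iteration over an internal link graph (scores sum to 1)."""
    pages = set(links) | {target for targets in links.values() for target in targets}
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}
    for _ in range(iterations):
        # Pages with no outlinks ("dangling" pages) spread their score evenly over all pages.
        dangling = sum(rank[page] for page in pages if not links.get(page))
        new_rank = {page: (1 - damping) / n + damping * dangling / n for page in pages}
        for page, targets in links.items():
            unique_targets = set(targets)  # repeated links to the same URL count once
            for target in unique_targets:
                new_rank[target] += damping * rank[page] / len(unique_targets)
        rank = new_rank
    return rank


links = {"/": ["/a", "/b"], "/a": ["/"], "/b": ["/", "/a"], "/c": ["/"]}
for page, score in sorted(internal_pagerank(links).items(), key=lambda kv: -kv[1]):
    print(f"{page}: {score:.3f}")
```

In this toy graph, `/c` links out but receives no internal links, so it ends up with only the baseline score.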

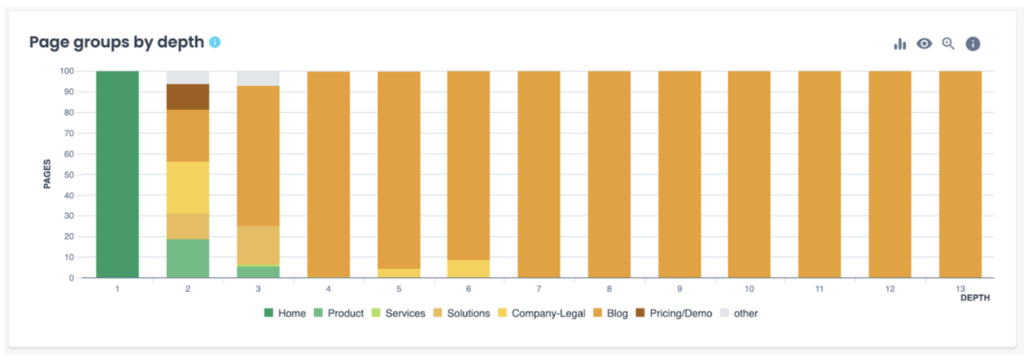

Evaluating your page groups by depth can also help you to understand if there are specific sections – or pages inside each section – that are too far from the home page and that should consequently be better positioned within your site’s architecture.

Source: Oncrawl

Poor page quality

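Canonicalization, pagination, and parameterization can all be hints that a page is less important for crawling. And if your robots.txt file blocks crawling of certain parameterized URLs, Google won’t crawl them, so their content won’t be indexed (although a blocked URL can still appear in the index without its content if other pages link to it).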

For sites with complex parameterization directives, use a robots.txt testing tool to check on the crawlability of specific pages and either adjust your listed directives or move important pages outside of your filtration structure if they’re blocked unintentionally.
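Google’s robots.txt matching supports `*` wildcards and a trailing `$` anchor. When several rules match, the most specific (longest) one wins, and on a tie the less restrictive `Allow` is used. This Python sketch implements just those rules for a single user-agent group so you can spot-check parameterized URLs in bulk. It skips other parsing details, so confirm anything important with Google’s own tooling.

```python
import re


def rule_matches(pattern: str, path: str) -> bool:
    """Google-style matching: '*' matches any characters, a trailing '$' anchors the end."""
    anchored = pattern.endswith("$")
    core = pattern[:-1] if anchored else pattern
    regex = re.escape(core).replace(r"\*", ".*") + ("$" if anchored else "")
    return re.match(regex, path) is not None


def is_allowed(path: str, rules: list[tuple[str, str]]) -> bool:
    """rules: (directive, pattern) pairs from ONE user-agent group, e.g. ("disallow", "/checkout/")."""
    best_length, allowed = -1, True  # with no matching rule, crawling is allowed
    for directive, pattern in rules:
        if not pattern or not rule_matches(pattern, path):
            continue  # an empty pattern (e.g. "Disallow:") matches nothing
        is_allow = directive.lower() == "allow"
        # The longest matching pattern wins; on a tie, the less restrictive Allow wins.
        if len(pattern) > best_length or (len(pattern) == best_length and is_allow):
            best_length, allowed = len(pattern), is_allow
    return allowed


rules = [("disallow", "/*?*&"), ("disallow", "/checkout/"), ("allow", "/checkout/help$")]
for path in ["/dresses?color=red", "/dresses?color=red&price=under-50", "/checkout/help"]:
    print(path, is_allowed(path, rules))
```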

Regardless of what suggestions you offer up to search engines, they may still decline to frequently crawl and index pages that they deem to be of low quality. Asking them to pay attention to content that’s duplicated elsewhere on the site, that’s too thin to be of any value to a user, or that’s outdated means taking attention away from better content.

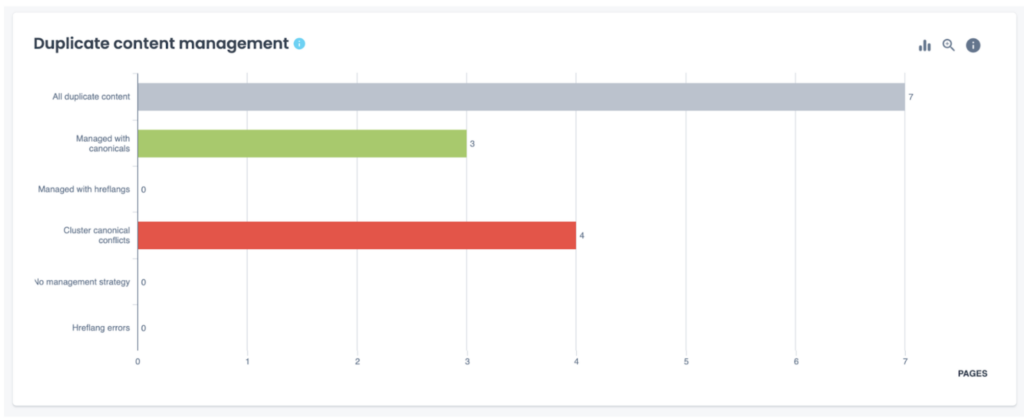

Determine how you want to manage your duplicate or thin content and closely monitor the strategies you implement.

Source: Oncrawl

What’s more, if Google discovers that a particular sitemap contains too many pages that don’t meet quality standards, it may treat that entire sitemap as low-priority.

Make sure that any unnecessary and low-value pages are consolidated or removed from your site, and that only 200-level, indexable, high-quality URLs are present in your sitemaps.
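The mechanical part of that rule is easy to enforce when generating sitemaps. This sketch assumes a hypothetical `CrawlRecord` built from your crawler’s export and keeps only URLs that return 200, are indexable, and either declare no canonical or canonicalize to themselves. Judging content quality is still up to you.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CrawlRecord:
    """Hypothetical per-URL row from a site crawl (field names are assumptions)."""
    url: str
    status: int
    indexable: bool
    canonical: Optional[str] = None  # None when the page declares no canonical


def sitemap_urls(records: list[CrawlRecord]) -> list[str]:
    """Keep only 200-status, indexable URLs that are their own canonical (or declare none)."""
    return [
        r.url
        for r in records
        if r.status == 200 and r.indexable and r.canonical in (None, r.url)
    ]
```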

Encouraging crawling and indexation of valuable pages

One of the most effective ways to ensure search engines crawl and index the pages you care about most is to avoid distracting them with pages you don’t care about as much.

You can manage this by disallowing unimportant pages, like those involved in your checkout process, using robots.txt directives.

Many e-commerce sites also opt to disallow filter pages beyond a certain level of parameterization.

For example, they may allow crawling of single-parameter URLs, like a “red dresses” page with the URL /dresses?color=red or a “dresses under $50” page at /dresses?price=under-50, but block any page with two or more filters applied, like /dresses?color=red&price=under-50. This can help cut down on near-duplicate indexed pages.
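A policy like this depends on counting filters, not just spotting a `?`. This sketch assumes a hypothetical `FILTER_PARAMS` set naming your facet parameters, counts the distinct filters in a URL, and reports whether the URL stays within the allowed depth; you could use it to audit crawled or logged URLs for how much attention multi-filter pages receive. In robots.txt itself, a blunt approximation is `Disallow: /*?*&`, which blocks any URL with two or more query parameters, including URLs whose second parameter is only for tracking.

```python
from urllib.parse import parse_qsl, urlsplit

# Hypothetical: the query parameters your faceted navigation uses as filters.
FILTER_PARAMS = {"color", "price", "size", "brand"}


def filter_count(url: str) -> int:
    """Number of distinct filter parameters applied (non-filter parameters are ignored)."""
    params = parse_qsl(urlsplit(url).query, keep_blank_values=True)
    return len({name for name, _ in params if name in FILTER_PARAMS})


def within_crawl_policy(url: str, max_filters: int = 1) -> bool:
    return filter_count(url) <= max_filters


for url in ["/dresses?color=red", "/dresses?price=under-50", "/dresses?color=red&price=under-50"]:
    print(url, within_crawl_policy(url))
```

Counting distinct names means `color=red&color=blue` counts as one filter here; that is a design choice you may want to change.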

Appropriate canonicalization

Canonicalization is often used as a way to show search engines the “best” version of a page.

Therefore, a page that is a duplicate, or nearly so, should ideally point to the original content’s page as the canonical authority.

For key pages, self-canonicalization isn’t strictly necessary, but it’s recommended, particularly when dealing with pages that have different parameter variations.
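Self-canonicals on parameterized pages are easiest to keep consistent when every template derives them from one normalization function. This is one way to do that under stated assumptions: `IGNORED_PARAMS` is a hypothetical list of parameters that never change page content, the remaining parameters are sorted so equivalent URLs produce the same canonical, the host is lowercased, and any fragment is dropped.

```python
from html import escape
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical: parameters that never change what the page shows.
IGNORED_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "sessionid"}


def canonical_url(url: str) -> str:
    """Drop ignored parameters, sort the rest, lowercase the host, and strip any fragment."""
    parts = urlsplit(url)
    kept = sorted(
        (name, value)
        for name, value in parse_qsl(parts.query, keep_blank_values=True)
        if name not in IGNORED_PARAMS
    )
    return urlunsplit((parts.scheme, parts.netloc.lower(), parts.path, urlencode(kept), ""))


def canonical_tag(url: str) -> str:
    # escape() turns "&" into "&amp;" so the URL is valid inside an HTML attribute.
    return f'<link rel="canonical" href="{escape(canonical_url(url))}">'
```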

It’s also crucial to understand that pages marked with a canonical tag are still subject to crawling and indexing.

This tag serves more as a suggestion for how Google and other search engines should process the page, rather than an absolute command. Consequently, the final decision on whether to index canonicalized pages lies with the search engine.

In most scenarios, employing a canonical tag transfers the SEO value of a page to the one indicated in the tag (be it the same page or a different one), enhancing its potential for higher performance in search results.

That said, when possible, consider consolidating content and implementing a 301 redirect instead of a canonical (for example, when letting search engines know about the https vs. the http version of a site).
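In practice, the HTTP-to-HTTPS redirect usually lives in the web server, load balancer, or CDN configuration. If it has to live in a Python application, a minimal WSGI middleware sketch looks like this. It assumes `wsgi.url_scheme` reflects the scheme the visitor actually used (behind a TLS-terminating proxy it may not) and that HTTPS is served on the default port.

```python
from typing import Callable, Iterable
from urllib.parse import quote

# Simplified WSGI types for readability.
StartResponse = Callable[[str, list], object]
WSGIApp = Callable[[dict, StartResponse], Iterable[bytes]]


def https_redirect(app: WSGIApp) -> WSGIApp:
    """WSGI middleware: answer plain-HTTP requests with a 301 to the same URL on HTTPS."""

    def wrapped(environ: dict, start_response: StartResponse) -> Iterable[bytes]:
        if environ.get("wsgi.url_scheme") == "https":
            return app(environ, start_response)
        # Assumes HTTPS uses the default port, so an explicit ":80" is dropped.
        host = (environ.get("HTTP_HOST") or environ["SERVER_NAME"]).removesuffix(":80")
        # PATH_INFO arrives decoded (as latin-1 per PEP 3333), so re-quote it for the Location header.
        raw_path = environ.get("SCRIPT_NAME", "") + environ.get("PATH_INFO", "")
        path = quote(raw_path, safe="/;=,", encoding="latin1") or "/"
        location = f"https://{host}{path}"
        if environ.get("QUERY_STRING"):
            location += "?" + environ["QUERY_STRING"]
        start_response("301 Moved Permanently", [("Location", location), ("Content-Length", "0")])
        return [b""]

    return wrapped
```

Wrapping the application once (for example, `application = https_redirect(application)`) means every HTTP URL answers with a single 301 hop to its HTTPS equivalent instead of serving a duplicate that needs a canonical.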

Effective pagination

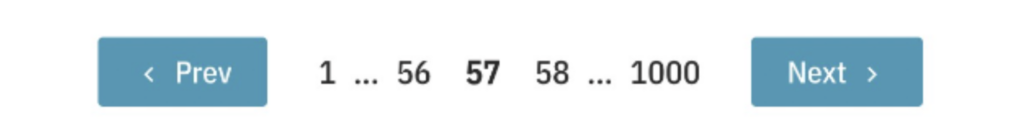

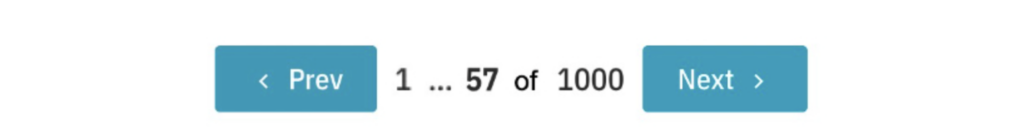

Ineffective pagination can also eat up crawling resources. For sites with huge numbers of constantly changing paginated pages, be sure to implement self-referencing canonicals on each paginated page (don’t canonicalize them to the first page of results). Also consider limiting the number of links to subsequent results pages—instead of this option:

Consider this one, which better demonstrates the diminishing importance of each paginated page in the list but still gives the user context about the size of the list:
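One common way to build that kind of shortened pagination is to link the first page, the last page (which tells users how long the list is), and a small window around the current page, with gaps in between. This Python sketch illustrates that pattern and is not necessarily the exact scheme pictured; `window` is an assumed parameter, and `None` marks where a template would render an ellipsis.

```python
from typing import List, Optional


def pagination_links(current: int, total: int, window: int = 2) -> List[Optional[int]]:
    """Page numbers to link from `current`; None marks a gap to render as an ellipsis."""
    nearby = range(max(1, current - window), min(total, current + window) + 1)
    pages = sorted({1, total, *nearby})
    links: List[Optional[int]] = []
    previous = 0
    for page in pages:
        if page - previous > 1:
            links.append(None)  # one or more pages skipped here
        links.append(page)
        previous = page
    return links


print(pagination_links(1, 50))   # [1, 2, 3, None, 50]
print(pagination_links(10, 50))  # [1, None, 8, 9, 10, 11, 12, None, 50]
```

Each results page reached this way should still carry its own self-referencing canonical.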

Maximizing sitemap entries

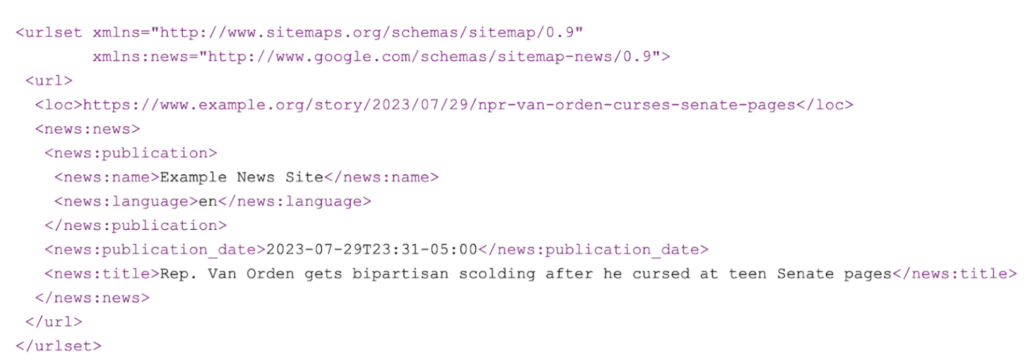

News publishers and other sites that frequently add new URLs with a need for speedy indexing can benefit from submitting RSS feeds to Google Publisher Center and maximizing their sitemap entries to offer as much information as possible about each story:

Maximized alternative:
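As one illustration of a fuller entry, this Python sketch builds a news sitemap entry using Google’s news and image sitemap extensions: publication name and language, publication date, title, `lastmod`, and a lead image. The `Article` fields, publication name, and URLs are hypothetical. Google’s news sitemap guidelines also restrict these entries to recently published articles, so check its current documentation before relying on the details.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import List, Optional

# Namespace URIs from the sitemaps.org protocol and Google's news/image sitemap extensions.
NAMESPACES = {
    "xmlns": "http://www.sitemaps.org/schemas/sitemap/0.9",
    "xmlns:news": "http://www.google.com/schemas/sitemap-news/0.9",
    "xmlns:image": "http://www.google.com/schemas/sitemap-image/1.1",
}


@dataclass
class Article:
    """Hypothetical CMS fields for one story."""
    loc: str
    title: str
    published: datetime
    updated: datetime
    image: Optional[str] = None


def news_sitemap(articles: List[Article], publication: str, language: str) -> str:
    urlset = ET.Element("urlset", NAMESPACES)
    for article in articles:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = article.loc
        ET.SubElement(url, "lastmod").text = article.updated.isoformat()
        news = ET.SubElement(url, "news:news")
        pub = ET.SubElement(news, "news:publication")
        ET.SubElement(pub, "news:name").text = publication
        ET.SubElement(pub, "news:language").text = language
        ET.SubElement(news, "news:publication_date").text = article.published.isoformat()
        ET.SubElement(news, "news:title").text = article.title
        if article.image:
            image = ET.SubElement(url, "image:image")
            ET.SubElement(image, "image:loc").text = article.image
    # Prefixed tags are written literally; the xmlns attributes on <urlset> declare them.
    return ET.tostring(urlset, encoding="unicode")


story = Article(
    loc="https://example.com/news/city-council-vote",
    title="City council approves new budget",
    published=datetime(2024, 3, 4, 8, 0, tzinfo=timezone.utc),
    updated=datetime(2024, 3, 4, 9, 30, tzinfo=timezone.utc),
    image="https://example.com/images/council.jpg",
)
print(news_sitemap([story], publication="Example Times", language="en"))
```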

Closing thoughts

At the end of the day, search engines will always manage their own crawl budgets in the ways that serve their own interests. Remembering that Google is a business like any other, with resources to conserve, should drive you to provide clear, efficient crawl paths throughout your website without any distracting fluff along the way.

Look at your site the way a search engine would, be as ruthless as they’ll be in identifying valuable content, and give them the strongest indicators and opportunity you can to place that content front and center in their indexes.