For every website on the internet, Google has a limited budget for how many pages its bots can and are willing to crawl. The internet is a big place, so Googlebot can only spend so much time crawling and indexing our websites. Crawl budget optimization is the process of ensuring that the right pages of our websites end up in Google’s index and are ultimately shown to searchers.

Google’s recommendations for optimizing crawl budget are rather limited, because Googlebot crawls through most websites without reaching its limit. But enterprise-level and ecommerce sites with thousands of landing pages are at risk of maxing out their budget. A 2018 study even found that Google’s crawlers failed to crawl over half of the webpages of larger sites in the experiment.

Influencing how crawl budget is spent can be a more difficult technical optimization for strategists to implement. But for enterprise-level and ecommerce sites, it’s worth the effort to maximize crawl budget where you can. With a few tweaks, site owners and SEO strategists can guide Googlebot to regularly crawl and index their best performing pages.

How does Google determine crawl budget?

Crawl budget is essentially the time and resources Google is willing to spend crawling your website. It comes down to two factors, which can be summarized as follows:

Crawl Budget = Crawl Rate + Crawl Demand

Domain Authority, backlinks, site speed, crawl errors, and number of landing pages all impact a website’s crawl rate. Larger sites usually have a higher crawl rate, while smaller sites, slower sites, or those with excessive redirects and server errors, usually get crawled less frequently.

Google also determines crawl budget by “crawl demand.” Popular URLs have a higher crawl demand because Google wants to provide the freshest content to users. Google doesn’t like stale content in its index, so pages that haven’t been crawled in some time will also have a higher demand. If your website goes through a site migration, Google will increase crawl demand to more quickly update its index with your new URLs.

Your website’s crawl budget can fluctuate and is certainly not fixed. If you improve your server hosting or site speed, Googlebot may start crawling your site more often, knowing it is not slowing down the web experience for users. To get a better idea of your site’s current average crawl rate, look to the Crawl Stats report in Google Search Console.

Does every website need to worry about their crawl budget?

Smaller websites that are only focused on getting a few landing pages ranking don’t need to worry about crawl budget. But larger websites — especially unhealthy sites with excessive broken pages and redirects — can easily reach their crawl limit.

The types of large websites that are most at risk of maxing out their crawl budget usually have tens of thousands of landing pages. Major ecommerce websites in particular are often negatively impacted by crawl budgets. I’ve come across multiple enterprise websites with a significant number of their landing pages unindexed, meaning zero chance of ranking in Google.

There are a few reasons why ecommerce sites in particular need to pay more attention to where their crawl budget goes.

- Many ecommerce sites programmatically build out thousands of landing pages for their SKUs or for every city or state where they sell their products.

- These types of sites regularly update their landing pages when items go out-of-stock, new products are added, or other inventory changes occur.

- Ecommerce sites tend toward duplicate pages (e.g. product pages) and session identifiers (e.g. cookies). Both are perceived as “low-value-add” URLs by Googlebot, which negatively impacts crawl rate.
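To make the duplicate-URL problem concrete, here is a minimal Python sketch that groups URLs which differ only by a session identifier or by parameter order. The parameter names in `SESSION_PARAMS` and the example URLs are assumptions for illustration; your platform may use different ones.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical session parameter names; adjust to whatever your platform uses.
SESSION_PARAMS = {"sessionid", "sid", "phpsessid"}


def normalize(url):
    """Drop session parameters and sort the rest so equivalent URLs compare equal."""
    parts = urlsplit(url)
    query = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in SESSION_PARAMS
    ]
    query.sort()
    return urlunsplit(
        (parts.scheme, parts.netloc.lower(), parts.path, urlencode(query), "")
    )


def group_duplicates(urls):
    """Return {normalized_url: [original urls]} for groups with more than one member."""
    groups = defaultdict(list)
    for url in urls:
        groups[normalize(url)].append(url)
    return {key: members for key, members in groups.items() if len(members) > 1}


SAMPLE_URLS = [
    "https://example.com/shoes?color=red&sid=abc",
    "https://example.com/shoes?sid=xyz&color=red",
    "https://example.com/hats",
]
duplicates = group_duplicates(SAMPLE_URLS)
```

Each group in `duplicates` is a set of URLs that Googlebot could crawl separately even though they serve the same page.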

Another challenge in influencing crawl budget is that Google may increase or decrease it at any time. Although a sitemap is an important step for large websites to improve the crawling and indexing of their most important pages, it is not sufficient in ensuring Google doesn’t max out your crawl budget on lower-value or under-performing pages.

So how can webmasters perform crawl budget optimization?

Although site owners can set higher crawl limits in their Google Search Console accounts, the setting does not guarantee increased crawl requests or influence which pages Google ends up crawling. It may feel like the most natural solution is to get Google to crawl your website more frequently, but there are very limited optimizations that have a direct correlation with increased crawl rate.

We all know that good budgeting is not about increasing your spending limits; it’s about being more selective with what you spend your money on. When you apply this same concept to crawl budget, it can yield huge results. Here are a few strategic steps for helping Google spend your budget to your advantage.

Step 1: Identify which pages Google is actually crawling on your site

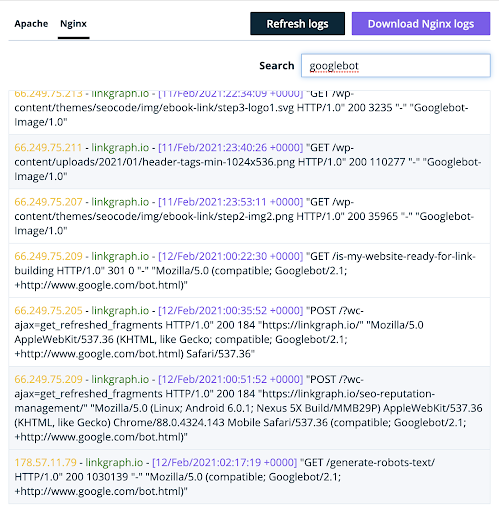

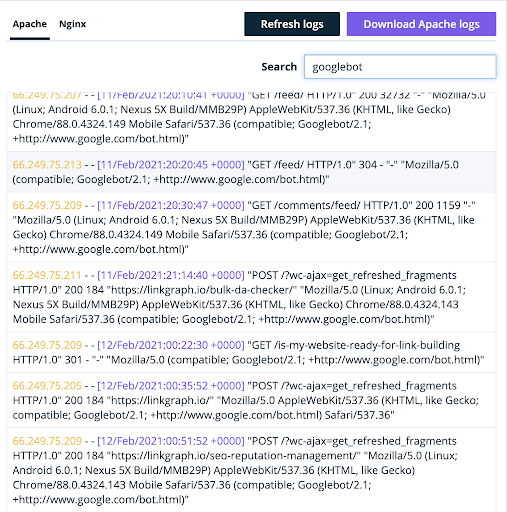

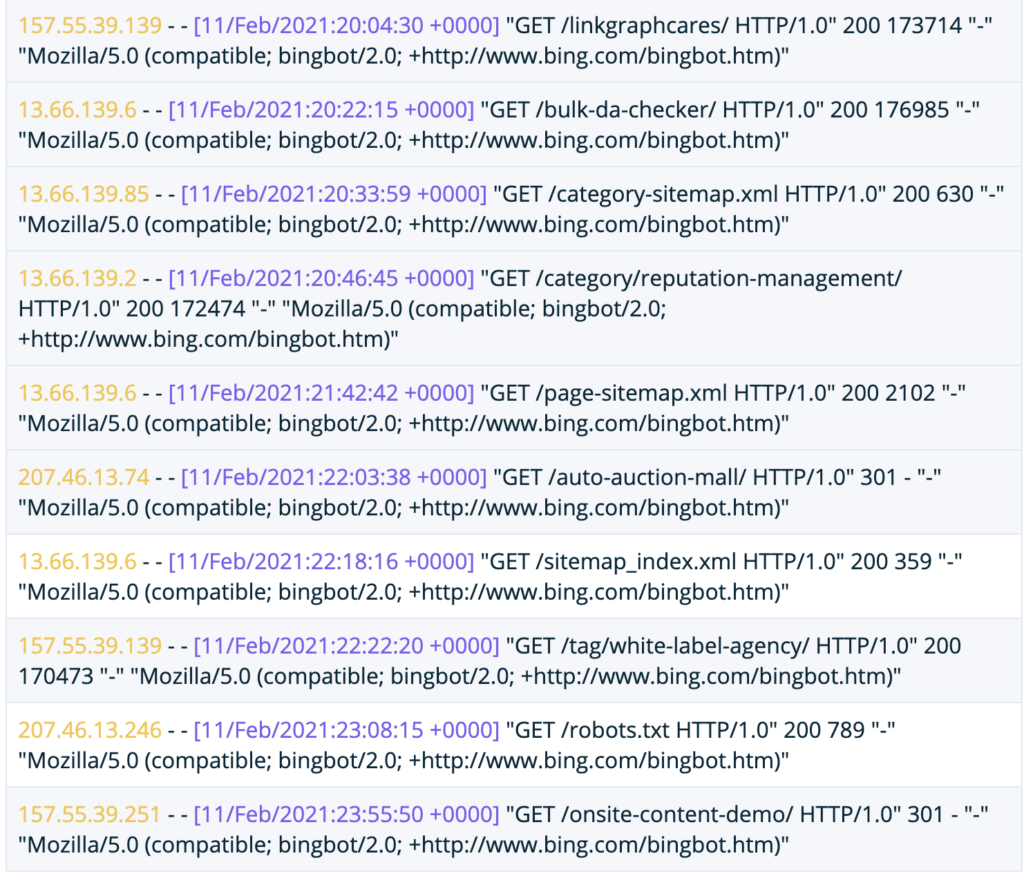

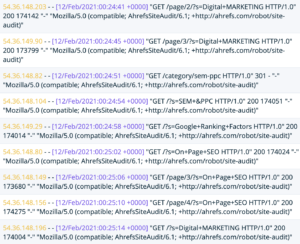

Until recently, Google Search Console’s crawl report only told site owners how many crawl requests their site received on given days. Although Google’s new Crawl Stats Report provides far more detailed information about crawling, the best place to understand how Google crawls your site is still in your server log files.

When Google visits your website, they use a particular user agent. This lets your server know that the traffic is actually Googlebot and not a real person.

(You’ll find Bingbot and Ahrefs’ bot there too.)

Oncrawl Log Analyzer

Site owners who analyze the contents of these log files will get loads of information about Google’s crawl budget for their site. The files will reveal a few things:

- Which pages the user agent visits

- How many pages that agent crawls per day

- Whether or not any of the crawled pages are 404ing or broken

Ideally, you want Google to crawl the landing pages on your website that are optimized for the highest-value keywords. Also, site owners should never waste crawl budget on 404s. Google Search Console will only show you some of your soft 404 errors, but you can identify all of them in your server logs.
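As a rough illustration of this kind of log analysis, the Python sketch below counts Googlebot requests per page, per day, and per 404. It assumes your server writes the common "combined" access log format; the sample lines, paths, and IP addresses are made up. Keep in mind that a user agent string can be spoofed, so a production analysis would also want to verify the requesting IPs.

```python
import re
from collections import Counter

# Assumed layout (combined log format):
# ip - - [date:time zone] "METHOD path PROTOCOL" status size "referer" "user-agent"
LOG_PATTERN = re.compile(
    r'\S+ \S+ \S+ \[(?P<day>[^:]+):[^\]]+\] '
    r'"\S+ (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)


def summarize_googlebot(lines):
    """Count Googlebot hits per path, per day, and per path that returned 404."""
    hits_per_path, hits_per_day, not_found = Counter(), Counter(), Counter()
    for line in lines:
        match = LOG_PATTERN.match(line)
        if not match or "Googlebot" not in match.group("agent"):
            continue  # skip malformed lines, other bots, and human visitors
        hits_per_path[match.group("path")] += 1
        hits_per_day[match.group("day")] += 1
        if match.group("status") == "404":
            not_found[match.group("path")] += 1
    return hits_per_path, hits_per_day, not_found


GOOGLEBOT = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
BINGBOT = "Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)"

# Made-up sample lines for illustration only.
SAMPLE_LOG = [
    f'66.249.66.1 - - [10/Mar/2021:06:25:14 +0000] "GET /products/blue-widget HTTP/1.1" 200 5123 "-" "{GOOGLEBOT}"',
    f'66.249.66.1 - - [10/Mar/2021:06:25:20 +0000] "GET /old-page HTTP/1.1" 404 312 "-" "{GOOGLEBOT}"',
    f'157.55.39.1 - - [10/Mar/2021:07:01:02 +0000] "GET /products/blue-widget HTTP/1.1" 200 5123 "-" "{BINGBOT}"',
    '203.0.113.7 - - [10/Mar/2021:08:11:45 +0000] "GET / HTTP/1.1" 200 9000 "https://example.com/" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
    f'66.249.66.1 - - [11/Mar/2021:06:30:00 +0000] "GET /products/blue-widget HTTP/1.1" 200 5123 "-" "{GOOGLEBOT}"',
]

paths, days, not_found = summarize_googlebot(SAMPLE_LOG)
```

Here `paths` answers which pages the user agent visits, `days` gives the crawl volume per day, and `not_found` lists the 404s that are eating requests.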

Once you have more detailed information about which pages of your website are being crawled, complete the following action items:

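- Add robots tags: If Googlebot is spending requests on low-value pages that still return content, add robots tags [noindex, nofollow] to keep those pages out of the index. Note that a robots tag does not stop crawling on its own, because Googlebot has to fetch a page to read the tag; to block crawling outright, disallow the path in robots.txt. For true 404s and broken pages, priority number one is fixing or removing the internal links and sitemap entries that send Googlebot to them.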

- Adjust your sitemap: If your server logs reveal that Google isn’t crawling your potentially high-performing pages, put them higher up in your sitemap to make sure they get crawled.
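One way to spot the gap between what you want crawled and what actually gets crawled is to compare your sitemap against the paths Googlebot requested. The Python sketch below assumes a standard XML sitemap and a set of crawled paths that you have already extracted from your server logs; the sample sitemap and paths are invented for illustration.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlparse

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_paths(sitemap_xml):
    """Return the URL paths listed in a sitemap, in document order."""
    root = ET.fromstring(sitemap_xml)
    return [
        urlparse(loc.text.strip()).path
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
    ]


def uncrawled_pages(sitemap_xml, crawled_paths):
    """Return sitemap paths that never show up among the crawled paths."""
    crawled = set(crawled_paths)
    return [path for path in sitemap_paths(sitemap_xml) if path not in crawled]


# Made-up sitemap and crawl data for illustration only.
SAMPLE_SITEMAP = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/products/blue-widget</loc></url>
  <url><loc>https://example.com/products/red-widget</loc></url>
  <url><loc>https://example.com/category/widgets</loc></url>
</urlset>"""

crawled_by_googlebot = {"/products/blue-widget", "/old-page"}
missed = uncrawled_pages(SAMPLE_SITEMAP, crawled_by_googlebot)
```

Any high-value page that lands in `missed` is a candidate for sitemap and internal linking attention.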

Step 2: Accept that not all of your landing pages need to rank in Google

The primary reason so many enterprise-level websites waste their crawl budget is that they allow Google to crawl every landing page on their site. Many websites even like to put all their pages into their sitemap so Google can find and crawl all of them. This is a mistake, because in reality, not all of our landing pages are going to rank.

What is the value of having a landing page in Google’s index? Ranking and converting. If your website has landing pages that aren’t pulling their weight by ranking for multiple keywords or converting site visitors into leads and revenue, why even take the risk of letting Google crawl them?

Enterprise-level and ecommerce site owners should know which pages of their websites are conversion-optimized and have the strongest chance of ranking and converting. Then, they should leverage every advantage they can to make sure Google spends crawl budget on those high-performing pages.

The landing pages of your website that have high ranking and conversion potential are worth spending crawl budget on. Here are a few tips to ensure that Googlebot includes those pages in your budget.

- Reduce the number of pages in your sitemap. Focus only on the pages that actually have a good chance of ranking and getting organic traffic.

- Delete underperforming or unnecessary pages. Remove those pages that bring no value because they have no ranking, conversion, or functional purpose.

- Content prune. Prune the pages that don’t actually get any organic traffic and redirect them to other landing pages on your site that are relevant and do get traffic. Take note that redirects do eat up a bit of your crawl budget, so try to use them sparingly and never chain one redirect into another.
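If you keep your redirects in a simple source-to-target mapping, a short script can flag chained redirects before they ship. This is a minimal Python sketch under that assumption; the paths in `SAMPLE_REDIRECTS` are hypothetical.

```python
def resolve_redirects(redirects):
    """Map every source to (final_target, hops), following chains to their end."""
    resolved = {}
    for source in redirects:
        seen = {source}
        target = redirects[source]
        hops = 1
        while target in redirects:
            if target in seen:
                raise ValueError(f"redirect loop involving {target}")
            seen.add(target)
            target = redirects[target]
            hops += 1
        resolved[source] = (target, hops)
    return resolved


# Hypothetical redirect map: /old-a reaches /new only after two hops.
SAMPLE_REDIRECTS = {
    "/old-a": "/old-b",
    "/old-b": "/new",
    "/legacy": "/new",
}

resolved = resolve_redirects(SAMPLE_REDIRECTS)

# Sources that take more than one hop are the chains worth fixing.
chains = [source for source, (_, hops) in resolved.items() if hops > 1]

# Flattened map in which every source points straight at its final target.
flattened = {source: target for source, (target, _) in resolved.items()}
```

Deploying `flattened` instead of the original map means Googlebot never spends more than one request getting to the final page.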

It’s difficult for any site owner to let go of content, but it’s much easier to prevent Google from crawling certain pages than it is to get Google to increase your overall crawl budget. Cleaning up your site so Google’s crawlers are more likely to find and index the best stuff is top priority if you want to spend your crawl budget wisely.

Step 3: Use internal links to elevate high-performing pages for Google’s crawlers

Once you’ve identified which pages Google is crawling, added necessary robots tags, deleted or pruned underperforming pages, and made adjustments to your sitemap, Google’s crawlers will be more prone to spend their budget on the right pages of your website.

But in order to truly maximize that budget, your pages need to have what it takes to rank. On-page SEO best practices are key, but a more advanced technical strategy is to use your internal linking structure to elevate those potentially high-performing pages.

Just like Googlebot only has a limited crawl budget, your website only has a certain amount of site equity based on its Internet footprint. It is your responsibility to concentrate your equity in a smart way. That means directing site equity to those pages that target keywords you stand a good chance of ranking for, and to those that bring you traffic from the right kinds of customers: those who are likely to convert and actually have economic value.

This SEO strategy is known as PageRank sculpting. If you have a large website with thousands of landing pages, an advanced strategist can run SEO experiments to optimize the internal linking profile of your website for better PageRank distribution. If you’re a new website, you can get ahead of the curve by incorporating PageRank sculpting into your site architecture and thinking about site equity with every new landing page you create.

Here are two of my favorite strategies for analyzing my pages to determine which would most benefit from PageRank sculpting.

- Find the pages of your website that have good traffic but don’t have enough PageRank. Find ways to get those pages more internal links and send more PageRank there. Adding them to the header or footer of your website is a great way to do this quickly, but don’t overdo links in your navigation menu.

- Focus on the pages that have a lot of internal links but don’t get much traffic or many search impressions, and rank for very few keywords. Pages receiving a lot of internal links typically contain a lot of PageRank. If they’re not using that PageRank to bring organic traffic to your site, they’re wasting it. It’s better to move that PageRank to pages that can actually move the needle.
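Both strategies can be approximated with a small script. The Python sketch below runs a simplified, textbook-style PageRank over an internal link graph and compares each page’s share of PageRank with its share of organic traffic. It is not how Google computes PageRank today, and the link graph, traffic numbers, and the share-versus-share comparison are all assumptions for illustration.

```python
def internal_pagerank(links, damping=0.85, iterations=50):
    """Simplified PageRank by power iteration over {page: [linked pages]}."""
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}
    for _ in range(iterations):
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A page with no outgoing links spreads its rank evenly.
                share = damping * rank[page] / n
                for other in pages:
                    new_rank[other] += share
        rank = new_rank
    return rank


def sculpting_candidates(rank, traffic):
    """Split pages by whether their traffic share exceeds their PageRank share."""
    total = sum(traffic.values()) or 1
    under_linked, over_linked = [], []
    for page, score in rank.items():
        traffic_share = traffic.get(page, 0) / total
        if traffic_share > score:
            under_linked.append(page)  # good traffic, relatively little PageRank
        elif traffic_share < score:
            over_linked.append(page)  # lots of PageRank, relatively little traffic
    return sorted(under_linked), sorted(over_linked)


# Hypothetical internal link graph and monthly organic visits.
SAMPLE_LINKS = {
    "/": ["/widgets", "/blog", "/about"],
    "/widgets": ["/", "/about"],
    "/blog": ["/about"],
    "/about": ["/"],
}
SAMPLE_TRAFFIC = {"/": 100, "/widgets": 500, "/blog": 50, "/about": 10}

rank = internal_pagerank(SAMPLE_LINKS)
under_linked, over_linked = sculpting_candidates(rank, SAMPLE_TRAFFIC)
```

In this toy graph, `/widgets` earns most of the traffic with a small share of PageRank, while `/about` collects links from every page and returns almost nothing. The home page will nearly always show up as over-linked, so you would normally exclude it before acting on the list.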

Understanding the role that every link on your website plays in not only sending Googlebot around your website, but in distributing your link equity, is the final step in crawl budget optimization. Getting your internal linking structure right can lead to dramatic rankings improvements for your money pages. In the end, the best way to spend your crawl budget is on landing pages that are most likely to put revenue in your pocket.

After you implement your changes, keep an eye on the keyword rankings for those improved pages in Google Search Console. If the rankings improve for those pages, it shows that your crawl budget optimization is working. Then, as you add new pages to your website, be more selective in whether or not they deserve to eat up your crawl budget. If not, keep directing crawlers only to the pages that work hardest for your brand.