Given how important an online presence is for most businesses, you’re likely aware of the impact technical SEO can have on your site. It matters not only for how search engines understand your site, but also for user experience.

Your site may have well-written, high-quality articles that target the relevant keywords, but that’s not enough if search engines can’t access those pages. Without SEO best practices and a defined SEO strategy, those pages may never appear in search results.

That is where a regular check of your site’s health comes into play. Ensuring that your site is optimized, secure, and easily navigable will impact its performance in the SERPs and will ultimately create a better experience for your site visitors.

Key indicators of a healthy website and common technical SEO issues

We won’t cover an in-depth audit in this article. Instead, we’ll look at the key indicators you should monitor regularly, since they can quickly alert you to bigger issues.

A lot of SEO revolves around crawlability and indexing, so let’s dive deeper into those, along with the most common issues and the best practices you can use to resolve them.

Crawlability and indexation

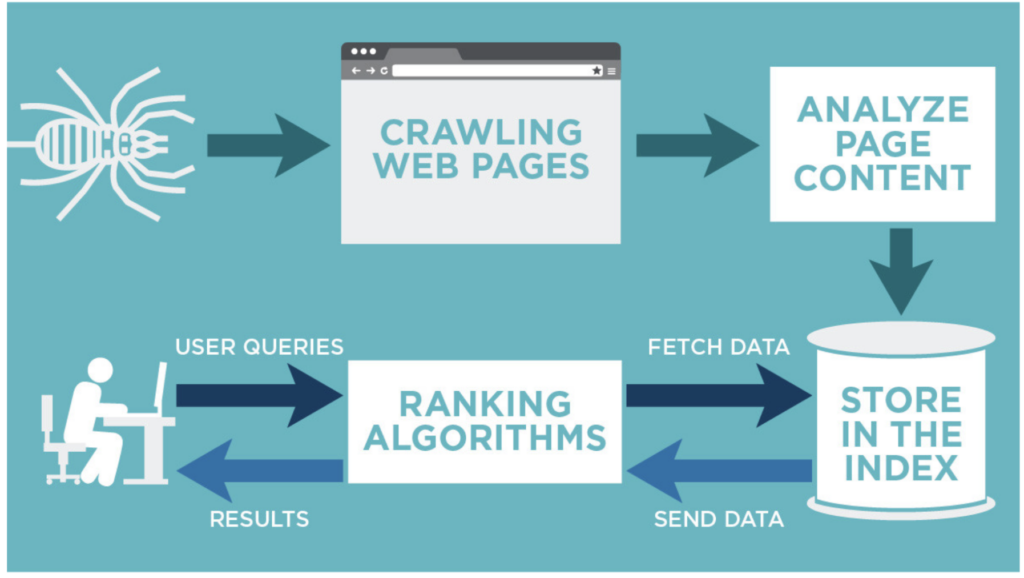

Getting straight to the point, crawlability and indexing are crucial because they determine whether your content appears in search results or not.

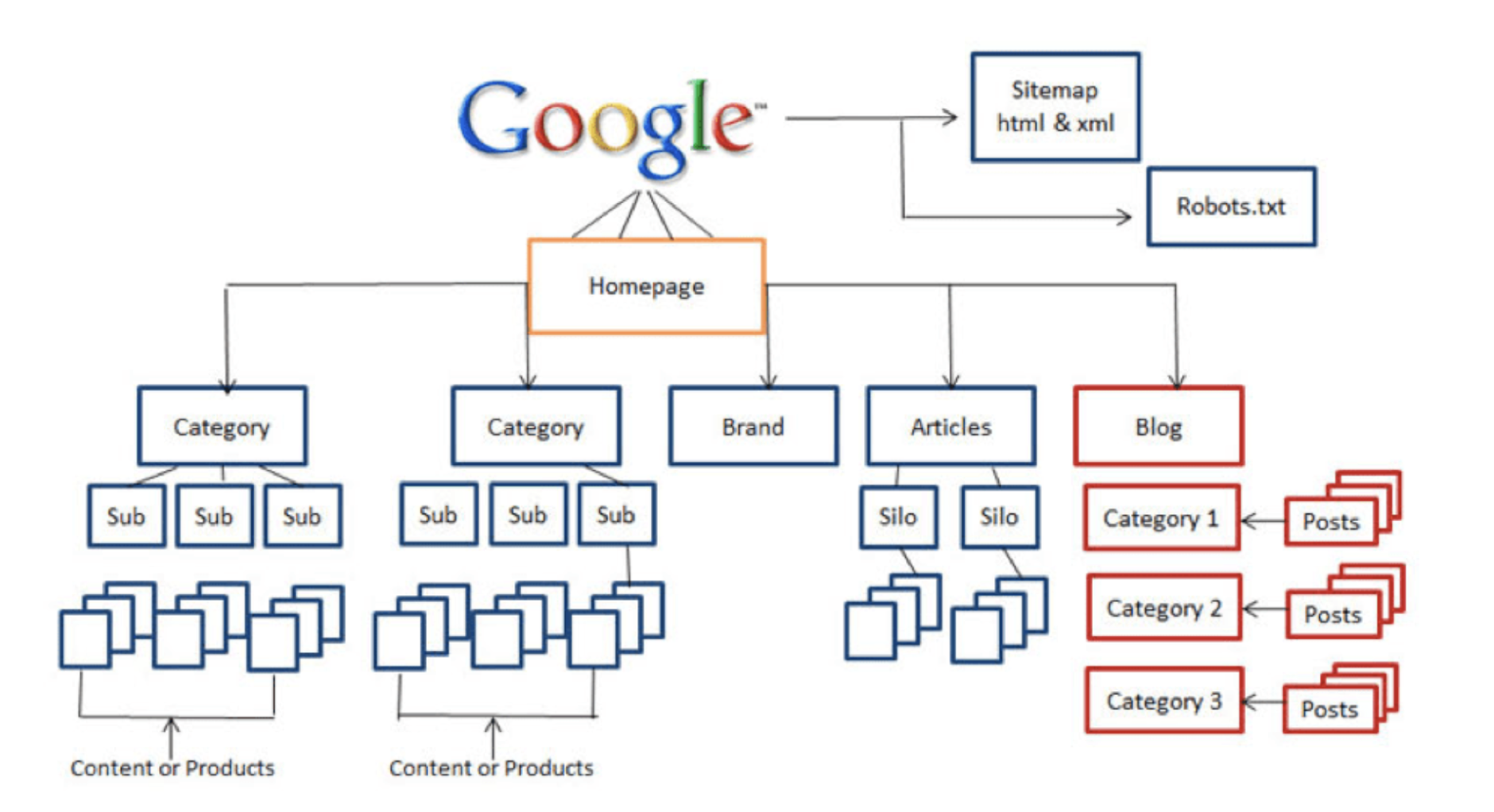

Source: ecreativeworks.com

The best way to spot crawlability and indexing issues is to run a full crawl of your website with a tool like Oncrawl and to check the Coverage report (now called the Page indexing report) in Google Search Console (GSC).

By analyzing your crawl results, you can identify which issues may be affecting how your site is crawled and subsequently indexed. It’s useful to monitor your site frequently to ensure that the indexation process is going smoothly. Below are some of the more common culprits that cause issues.

Robots.txt issues

Robots.txt tells search engine crawlers which parts of your site they may and may not crawl. However, a small mistake, such as unintentionally blocking certain bots or blocking JavaScript or CSS resources, can prevent search engines from crawling and rendering your pages properly, which in turn affects how they are indexed.
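A quick way to catch accidental blocks is to test the URLs you care about against your robots.txt rules. The minimal sketch below uses Python’s standard `urllib.robotparser`; the robots.txt content and URLs are hypothetical. Keep in mind that this parser applies rules in file order (first match) and does not understand Google’s `*` and `$` wildcards, so for such files its answers can differ from Googlebot’s.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: the intent is to keep the admin area out of the crawl,
# but the second rule also blocks the folder that serves CSS and JavaScript.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /static/
"""

# Hypothetical URLs that search engines should be able to fetch.
MUST_BE_CRAWLABLE = [
    "https://www.example.com/",
    "https://www.example.com/blog/dog-training-basics",
    "https://www.example.com/static/css/main.css",
    "https://www.example.com/static/js/app.js",
]


def find_blocked(robots_txt: str, urls: list[str], user_agent: str = "Googlebot") -> list[str]:
    """Return the URLs that `user_agent` is not allowed to fetch under `robots_txt`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


if __name__ == "__main__":
    for url in find_blocked(ROBOTS_TXT, MUST_BE_CRAWLABLE):
        print("Blocked:", url)
```

Here the CSS and JavaScript files come back as blocked, which is exactly the kind of unintended side effect described above.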

Noindex tags

Using noindex tags is one of the methods many SEOs use to keep certain pages out of the index. While there are often good reasons to prevent some pages from being indexed (e.g. content with little or no value), it’s important to apply the tag only to the right pages. Used incorrectly, it can harm your website by reducing visibility and organic traffic.
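A noindex directive can live in a `<meta name="robots">` tag or in an `X-Robots-Tag` HTTP header, so a spot check should look at both. This is a minimal sketch using only the standard library; the `is_noindexed` helper is hypothetical, and its header handling is simplified (for example, a value like `googlebot: noindex` is just split into tokens).

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # html.parser lowercases tag and attribute names; attribute values may be None.
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() in ("robots", "googlebot"):
            content = attributes.get("content", "")
            self.directives.extend(d.strip().lower() for d in content.split(","))


def is_noindexed(html: str, headers: dict[str, str]) -> bool:
    """True if the page carries noindex (or none) in a robots meta tag or X-Robots-Tag header."""
    parser = RobotsMetaParser()
    parser.feed(html)
    directives = set(parser.directives)
    for name, value in headers.items():
        if name.lower() == "x-robots-tag":
            # Simplification: "googlebot: noindex" becomes the tokens "googlebot" and "noindex".
            tokens = value.lower().replace(":", ",").split(",")
            directives.update(token.strip() for token in tokens)
    return bool(directives & {"noindex", "none"})


if __name__ == "__main__":
    page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
    print(is_noindexed(page, {}))
```

Running this across the pages you expect to rank makes it easy to spot a noindex that slipped onto the wrong template.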

XML Sitemaps

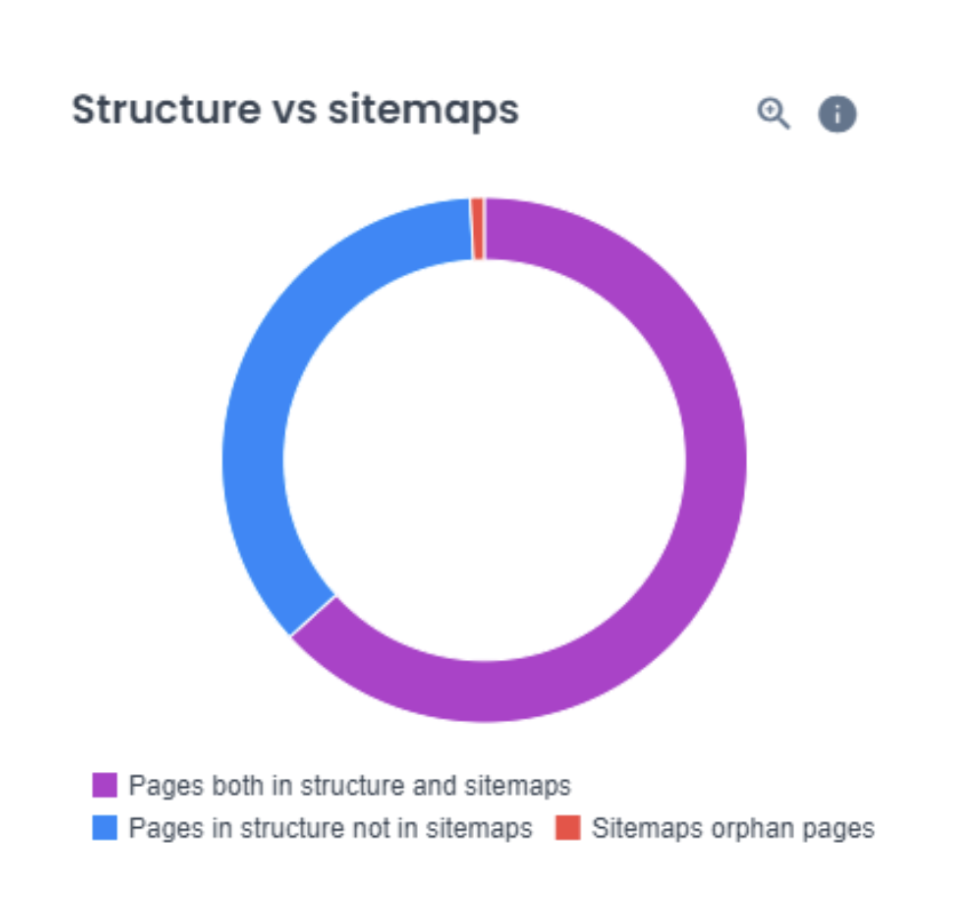

A sitemap is a file that lists all the important pages, videos, and files on your website and shows how they are related. It helps Google understand which parts of your site are important and gives useful details about them. Make sure that your XML sitemap file contains the most relevant pages of your website and that it is consistent with your site structure.
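One simple consistency check is to compare the URLs in your sitemap with the indexable URLs found by a crawl. The sketch below parses a sitemap with Python’s `xml.etree.ElementTree`; the sitemap content and the crawl set are hypothetical, "indexable" is assumed to mean status 200, no noindex and a self-referencing canonical, and sitemap index files are not handled.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# Hypothetical sitemap content.
SITEMAP_XML = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/</loc></url>
  <url><loc>https://www.example.com/breeds/</loc></url>
  <url><loc>https://www.example.com/old-promo/</loc></url>
</urlset>"""

# Hypothetical indexable URLs reported by a crawl.
INDEXABLE_FROM_CRAWL = {
    "https://www.example.com/",
    "https://www.example.com/breeds/",
    "https://www.example.com/training/",
}


def sitemap_urls(xml_text: str) -> set[str]:
    """Return every <loc> value listed under <url> entries in a urlset sitemap."""
    root = ET.fromstring(xml_text)
    return {loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS) if loc.text}


def compare(sitemap: set[str], indexable: set[str]) -> dict[str, set[str]]:
    return {
        # Listed in the sitemap, but the crawl says they should not be indexed.
        "in_sitemap_but_not_indexable": sitemap - indexable,
        # Indexable pages the sitemap forgot to mention.
        "indexable_but_missing_from_sitemap": indexable - sitemap,
    }


if __name__ == "__main__":
    for issue, urls in compare(sitemap_urls(SITEMAP_XML), INDEXABLE_FROM_CRAWL).items():
        print(issue, sorted(urls))
```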

After launching a site crawl, you can find a sitemap report in the Oncrawl app that provides a quick, easy-to-understand overview. I find it very useful whenever I perform a sitemap analysis.

Source: Oncrawl

Proper internal linking and site structure

Another important technical SEO factor to keep in mind is your internal linking strategy. How your site is organized (the site architecture), as well as the interconnection between pages, helps search engines find the pages more effectively. Additionally, with optimized contextual internal links, search engines can understand what the linked pages are about.
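To make "helps search engines find the pages" concrete: crawlers discover pages by following links, so the number of clicks needed to reach a page from the homepage (its click depth) is a useful structural measure. This is a minimal breadth-first search sketch over a hypothetical link graph; in practice the graph would come from a crawl export.

```python
from collections import deque

# Hypothetical internal link graph: each page maps to the pages it links to.
LINKS = {
    "/": ["/breeds/", "/training/"],
    "/breeds/": ["/breeds/labrador/", "/breeds/beagle/"],
    "/training/": ["/training/puppy/"],
    "/training/puppy/": ["/training/puppy/crate/"],
    "/breeds/labrador/": [],
    "/breeds/beagle/": [],
    "/training/puppy/crate/": [],
    "/health/allergies/": ["/"],  # links out, but no page links to it
}


def click_depth(links: dict[str, list[str]], start: str = "/") -> dict[str, int]:
    """Breadth-first search: fewest clicks needed to reach each page from `start`."""
    depth = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depth:  # the first visit in BFS is the shortest path
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth


if __name__ == "__main__":
    depth = click_depth(LINKS)
    for page, clicks in sorted(depth.items(), key=lambda item: item[1]):
        print(f"{clicks} click(s): {page}")
    # Pages known to exist (here: the keys of LINKS) that the homepage cannot reach.
    print("Unreachable from the homepage:", sorted(set(LINKS) - set(depth)))
```

Pages with a high depth, or missing from the result entirely, are the ones search engines will have the hardest time finding through your links.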

The importance of internal linking for the website’s performance

To understand internal linking a bit better, let’s look at an example. Imagine you’re a person that is passionate about dogs and you’re visiting a website hoping to find information about dog care, breeds, training, and health.

As you start navigating the site, you notice that finding the information you were looking for is not easy. There’s no proper navigation menu, no footer, and no contextual links; in short, nothing guides you through the site. In most cases, you would leave such a site frustrated and dissatisfied.

Instead of continuing to search, you’ll find another site that understands your search intent and offers more information and a better user experience. The same goes for search engines: a site without a clear structure is harder for them to crawl and understand. That’s why it’s so important to give your site a structure that makes navigation easy.

Common internal linking mistakes

We often hear that practice makes perfect and when it comes to internal linking, it’s no different. Learning from mistakes is part of the process, so I’ve compiled a list of the most common internal linking errors I’ve come across in my work.

- Excessive use of internal links: Stuffing a page with an excessive number of internal links can lead to user confusion and might be flagged as spam-like behavior by search engines.

- Broken internal links: Links leading to pages that don’t exist or show error messages, like 404 errors, can result in poor user experience and obstruct the crawling process.

- Over-optimized anchor texts: Anchor texts give context to both users and search engines. However, they are sometimes over-optimized, meaning the same exact-match keyword anchor is repeated over and over again. To avoid that, use keyword variations related to the linked page instead.

- Non-descriptive anchor texts: I often come across anchor texts such as “click here” or “read more”. These are non-descriptive: they give no context about the linked page, so neither users nor search engines know what its content is about.

- Identical anchor texts for different pages: Using the same anchor text to link to different pages sends mixed signals about which page is relevant for that term and can lead to keyword cannibalization, which is harmful to any SEO strategy.

- Neglecting link relevance: Internal links sometimes point to pages that are not topically related. This can confuse users and search engines about the site’s structure and the relevance of its content.

- Buried and orphan pages: Some pages are buried too deep within the site structure, while others (orphan pages) have no internal links pointing to them at all. Either way, it’s bad for your SEO because users and crawlers will have difficulty discovering these pages.
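Several of the anchor-text mistakes in this list can be flagged automatically from a crawl export of internal links. The sketch below is one possible approach, not Oncrawl’s logic: the link list, the set of generic anchors, and the `MAX_LINKS_PER_PAGE` threshold are all hypothetical and should be tuned to your site.

```python
from collections import defaultdict

# Hypothetical internal links from a crawl: (source page, anchor text, target page).
INTERNAL_LINKS = [
    ("/", "Dog breeds", "/breeds/"),
    ("/", "click here", "/training/"),
    ("/blog/leash-tips/", "dog training", "/training/"),
    ("/blog/crate-tips/", "dog training", "/training/puppy/"),
    ("/breeds/", "read more", "/breeds/beagle/"),
]

# Hypothetical list of generic anchors; extend it for your own site.
NON_DESCRIPTIVE = {"click here", "read more", "learn more", "here", "more"}
# Hypothetical threshold for "too many links"; not an official search engine limit.
MAX_LINKS_PER_PAGE = 100


def audit_anchors(links: list[tuple[str, str, str]]) -> dict[str, list]:
    targets_by_anchor: dict[str, set[str]] = defaultdict(set)
    links_per_page: dict[str, int] = defaultdict(int)
    non_descriptive = []
    for source, anchor, target in links:
        normalized = anchor.strip().lower()
        links_per_page[source] += 1
        if normalized in NON_DESCRIPTIVE:
            non_descriptive.append((source, anchor, target))
        else:
            targets_by_anchor[normalized].add(target)
    return {
        "non_descriptive": non_descriptive,
        # The same descriptive anchor pointing at several different pages.
        "shared_anchors": sorted(
            (anchor, sorted(targets)) for anchor, targets in targets_by_anchor.items() if len(targets) > 1
        ),
        "overlinked_pages": sorted(p for p, n in links_per_page.items() if n > MAX_LINKS_PER_PAGE),
    }


if __name__ == "__main__":
    for issue, findings in audit_anchors(INTERNAL_LINKS).items():
        print(issue, findings)
```

In this sample, "dog training" points to two different pages, which is the cannibalization pattern described above, and two links use generic anchors.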

Quick check metrics to gauge site health

As an experienced SEO Analyst, I have worked on a number of different SEO projects and had the opportunity to monitor many different sites, so I see a lot of the same overlooked issues. Let’s take a quick look at a few of them and how to find them with Oncrawl. You can also use the list below as a technical SEO checklist for reference.

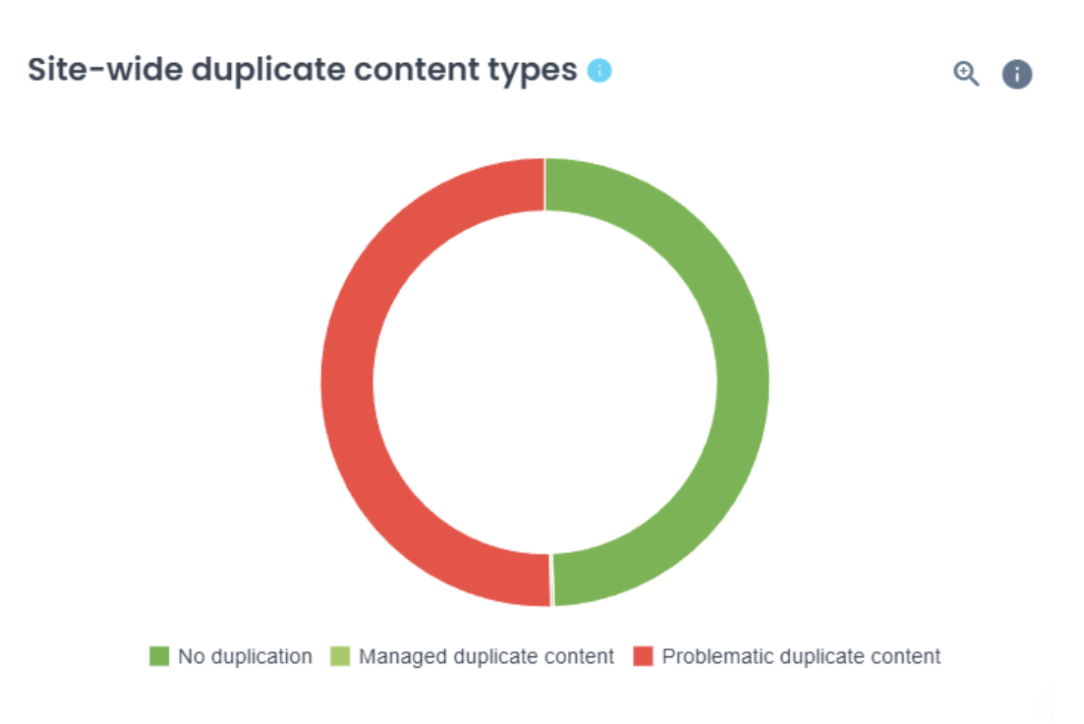

Duplicate content

Duplicate content happens when the same, or almost the same, content is found on more than one page of your site. This issue leads to a situation where search engines may struggle to determine which version is the most relevant.

It’s worth mentioning that duplicate content can confuse search engine crawlers and affect how your site is indexed, which in turn can harm the overall health of your website.
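Near-duplicate detection is often explained in terms of shingles (overlapping word sequences) and Jaccard similarity. The sketch below shows that idea on a few hypothetical pages; it is not how Oncrawl computes its charts, the 0.8 threshold is an assumption, and comparing every pair of pages is only practical for small samples (large sites typically use techniques such as MinHash or SimHash instead).

```python
import re
from itertools import combinations


def shingles(text: str, size: int = 3) -> set[tuple[str, ...]]:
    """Lowercased word n-grams ("shingles") of the page text."""
    words = re.findall(r"\w+", text.lower())
    return {tuple(words[i:i + size]) for i in range(max(len(words) - size + 1, 1))}


def jaccard(a: set, b: set) -> float:
    """Share of shingles the two pages have in common (1.0 = identical)."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0


def near_duplicates(pages: dict[str, str], threshold: float = 0.8) -> list[tuple[str, str, float]]:
    signatures = {url: shingles(text) for url, text in pages.items()}
    pairs = []
    # Compares every pair of pages: fine for a sample, too slow for a large site.
    for first, second in combinations(sorted(signatures), 2):
        score = jaccard(signatures[first], signatures[second])
        if score >= threshold:
            pairs.append((first, second, round(score, 2)))
    return pairs


# Hypothetical extracted main-content text, keyed by URL.
PAGES = {
    "/dog-food/": "Our guide to the best dog food for puppies and adult dogs, with tips on portions.",
    "/dog-food/?sort=price": "Our guide to the best dog food for puppies and adult dogs, with tips on portions.",
    "/dog-toys/": "A roundup of durable dog toys for heavy chewers and playful puppies.",
}

if __name__ == "__main__":
    for first, second, score in near_duplicates(PAGES):
        print(f"{score:.2f}  {first}  <->  {second}")
```

The parameterized URL shows up as a duplicate of the clean one, a typical case where a canonical URL is the fix.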

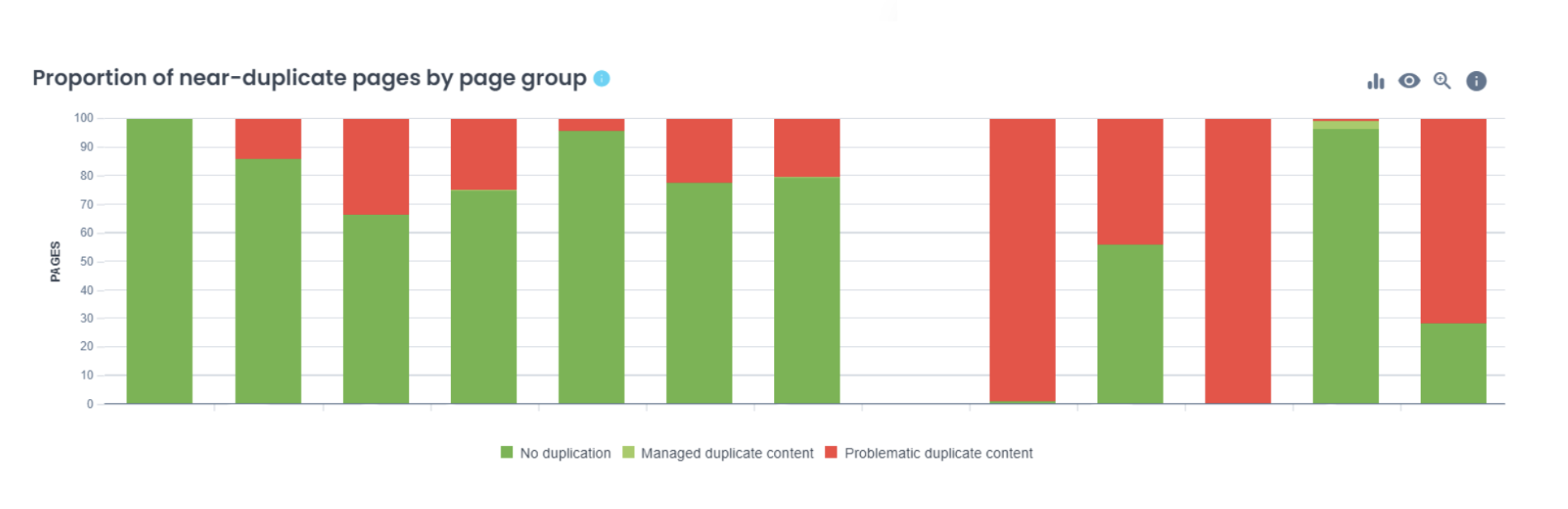

There are two standard charts in Oncrawl that look at duplicate or near-duplicate content which will help you identify if your site has these issues and where they can be found.

Source: Oncrawl

One way to avoid duplicate content issues is by using canonical URLs to indicate your preferred pages.

Canonical issues

Canonical issues are closely related to duplicate content. When Google finds duplicate content on your website and has no clear guidance, it cannot be sure which of the similar pages should be indexed, and it may not index the one you consider your priority page.

To resolve this, add a canonical tag (<link rel="canonical" href="…">) to the <head> of each page. This tag tells search engines which version of the page you consider the most important and would prefer to have indexed. Google treats it as a strong hint rather than a strict directive.

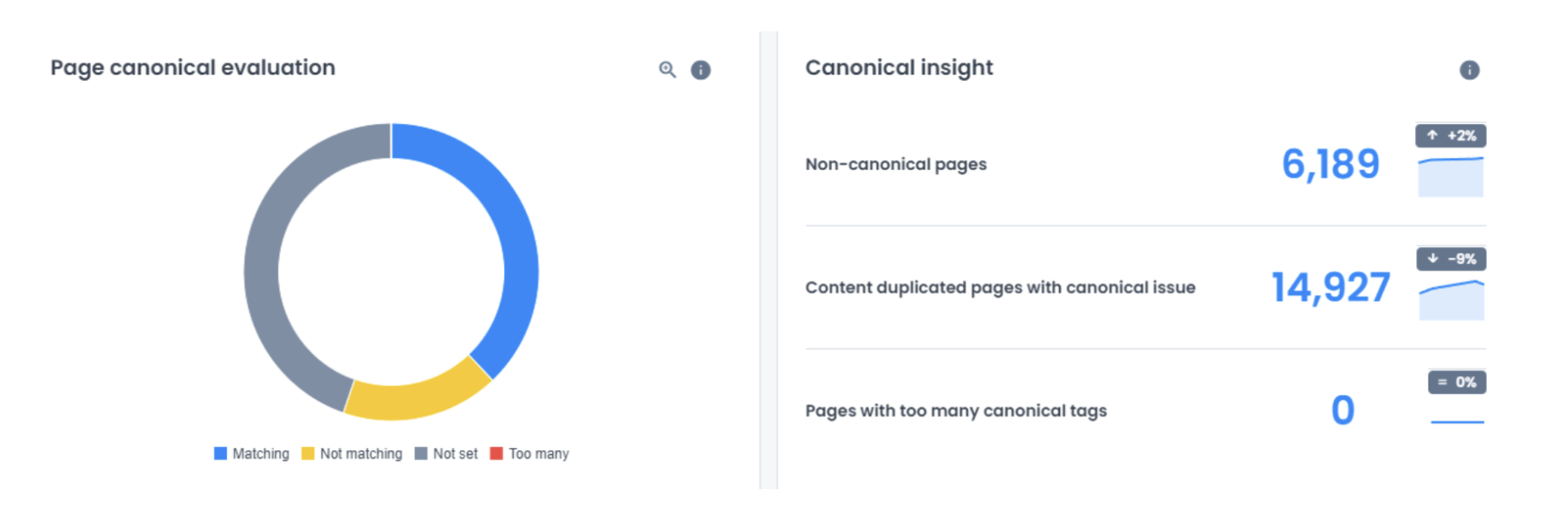

Oncrawl helps you discover canonical issues quickly by looking at the indexability summary located in your crawl report. The available charts give you an overview of canonical errors that exist so you can prioritize which ones to fix and how to fix them.

Source: Oncrawl

- Matching: the page’s canonical points to the page itself (a self-referencing canonical).

- Not matching: the canonical points to a different URL as the canonical version.

- Not set: the page has no canonical tag.

- Too many: the page declares more than one canonical.
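The four categories above can be approximated for a single page with a few lines of code. This is a hedged sketch using Python’s `html.parser` and `urllib.parse.urljoin`; it is our own approximation rather than Oncrawl’s implementation, it counts "too many" as more than one canonical tag, and it does not normalize URLs (trailing slashes, letter case, tracking parameters) before comparing.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class CanonicalParser(HTMLParser):
    """Collects the href of every <link rel="canonical"> tag on the page."""

    def __init__(self) -> None:
        super().__init__()
        self.canonicals: list[str] = []

    def handle_starttag(self, tag, attrs):
        attributes = {name: (value or "") for name, value in attrs}
        rel_values = attributes.get("rel", "").lower().split()
        if tag == "link" and "canonical" in rel_values and attributes.get("href"):
            self.canonicals.append(attributes["href"])


def canonical_status(page_url: str, html: str) -> str:
    parser = CanonicalParser()
    parser.feed(html)
    # Resolve relative hrefs against the page URL before comparing.
    targets = [urljoin(page_url, href) for href in parser.canonicals]
    if not targets:
        return "not set"
    if len(targets) > 1:
        return "too many"
    return "matching" if targets[0] == page_url else "not matching"


if __name__ == "__main__":
    print(canonical_status("https://www.example.com/a/?ref=nav", '<link rel="canonical" href="/a/">'))
```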

Improper redirects

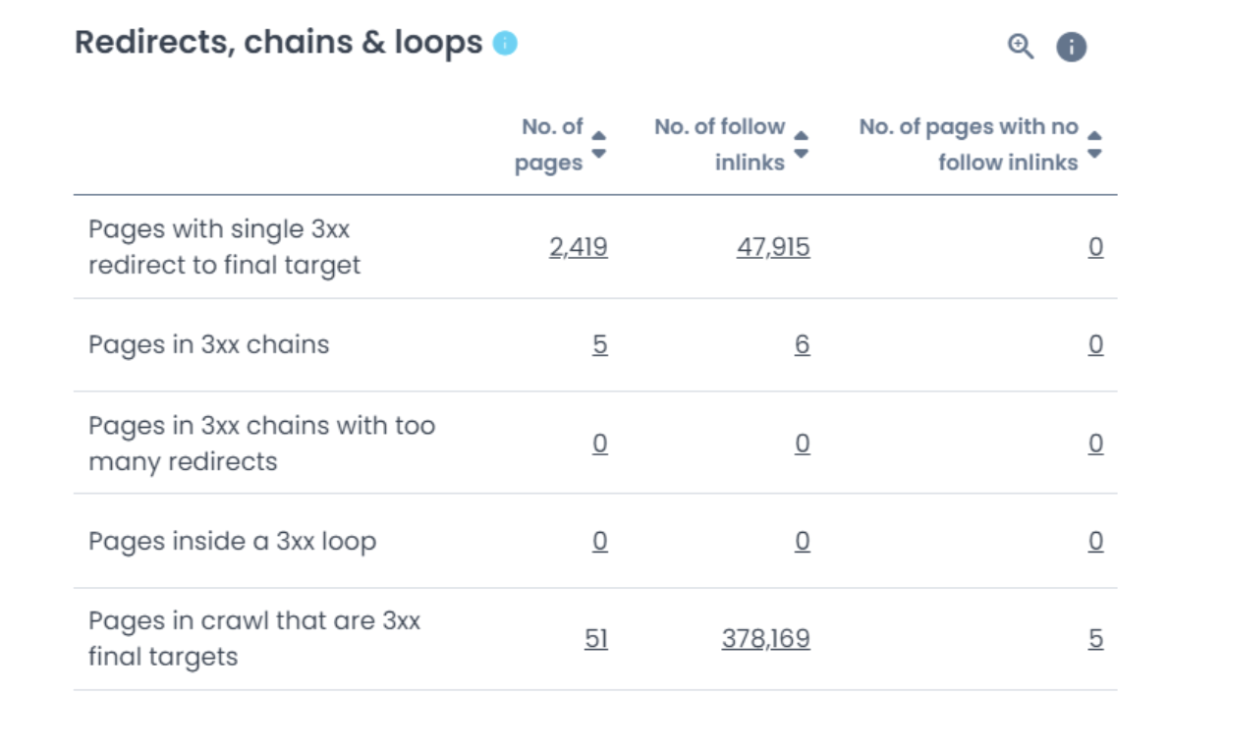

Redirect chains (and loops) are among the main issues caused by incorrect redirects. A redirect chain occurs when a URL redirects to another URL, which in turn redirects to yet another URL. If one of the URLs in the chain redirects back to an earlier URL, it creates an endless loop. Both waste time and crawl budget for search engines, and loops frustrate users who can’t access the content at all.

Oncrawl’s crawl report includes a table of all the pages caught in a redirect chain. Once they have been identified, you’ll need to analyze your site to find out why each chain or loop exists and take the necessary steps to resolve it.

Source: Oncrawl
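The difference between a single redirect, a chain, and a loop is easiest to see by following the redirects hop by hop. This sketch works on a hypothetical redirect map (source URL to redirect target) such as one you might export from a crawl; it does not make any HTTP requests.

```python
# Hypothetical redirect map collected during a crawl: source path -> redirect target.
REDIRECTS = {
    "/old-page": "/newer-page",
    "/newer-page": "/final-page",
    "/a": "/b",
    "/b": "/c",
    "/c": "/a",
    "/moved": "/home",
}


def follow(url: str, redirects: dict[str, str]) -> tuple[list[str], bool]:
    """Follow redirects from `url`. Returns the hops taken and whether they loop."""
    path = [url]
    seen = {url}
    while path[-1] in redirects:
        target = redirects[path[-1]]
        path.append(target)
        if target in seen:  # we came back to a URL already visited: a loop
            return path, True
        seen.add(target)
    return path, False


def classify(url: str, redirects: dict[str, str]) -> str:
    path, is_loop = follow(url, redirects)
    if is_loop:
        return "loop"
    # The path includes the starting URL, so more than 2 entries means 2+ redirects.
    return "chain" if len(path) > 2 else "single redirect"


if __name__ == "__main__":
    for start in sorted(REDIRECTS):
        path, _ = follow(start, REDIRECTS)
        print(f"{classify(start, REDIRECTS):15} {' -> '.join(path)}")
```

The usual fix for a chain is to point the first URL (and any internal links to it) straight at the final destination.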

HTTP errors

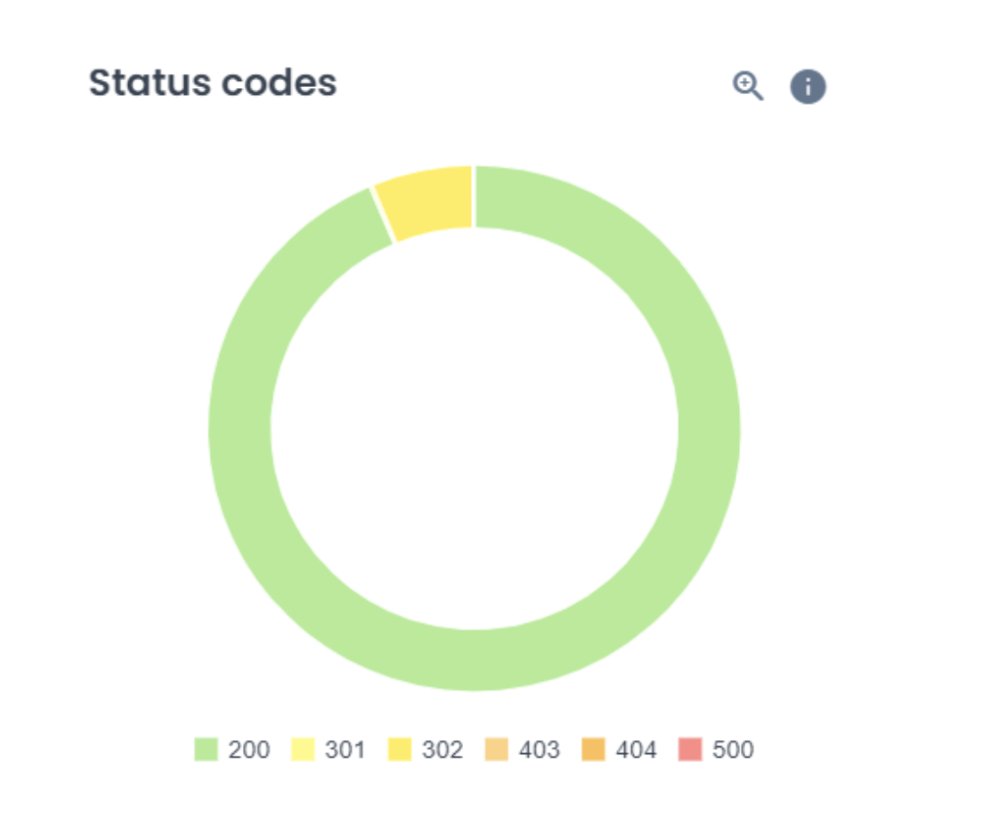

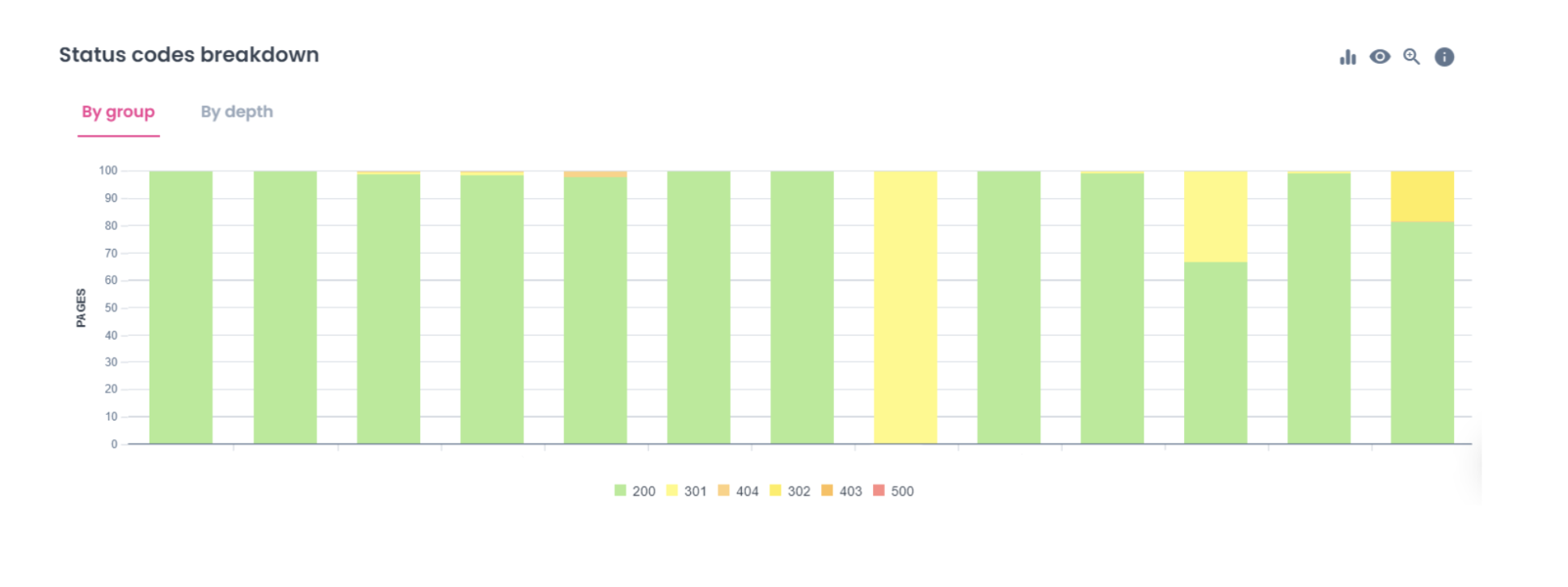

At times, users run into HTTP error status codes (such as 4xx client errors and 5xx server errors) that should be identified and resolved quickly. These errors hurt user experience and can get in the way of crawling. Use the status codes chart to see where you may have issues.

Source: Oncrawl

Segmentation is one of the best ways to draw meaningful conclusions from your data. In your crawl reports, you can find a detailed breakdown of status codes by page group. This allows you to analyze different categories and identify which ones have the most issues with HTTP status codes, so you can take appropriate action as fast as possible.

Source: Oncrawl
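The same segmentation idea can be reproduced on any crawl export. In this minimal sketch, the crawl results are hypothetical and the grouping rule (first path segment of the URL) is an assumption; Oncrawl lets you define your own page groups, which may follow different rules.

```python
from collections import Counter, defaultdict
from urllib.parse import urlparse

# Hypothetical crawl results: (URL, HTTP status code).
CRAWL = [
    ("https://www.example.com/blog/dog-food", 200),
    ("https://www.example.com/blog/old-post", 404),
    ("https://www.example.com/shop/leash", 200),
    ("https://www.example.com/shop/collar", 500),
    ("https://www.example.com/shop/harness", 503),
    ("https://www.example.com/", 200),
]


def page_group(url: str) -> str:
    """Hypothetical segmentation rule: group pages by their first path segment."""
    segments = [segment for segment in urlparse(url).path.split("/") if segment]
    return segments[0] if segments else "home"


def status_by_group(crawl: list[tuple[str, int]]) -> dict[str, Counter]:
    """Count status code classes (2xx, 3xx, 4xx, 5xx) per page group."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for url, status in crawl:
        counts[page_group(url)][f"{status // 100}xx"] += 1
    return counts


if __name__ == "__main__":
    counts = status_by_group(CRAWL)
    # Groups with the most 4xx + 5xx responses first.
    ranked = sorted(counts.items(), key=lambda kv: kv[1]["4xx"] + kv[1]["5xx"], reverse=True)
    for group, counter in ranked:
        print(group, dict(counter))
```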

Structured data errors

Structured data helps search engines understand the content of your pages and can make them eligible for rich results (rich snippets), which may improve click-through rate.

However, code and parsing errors can occur when marking up pages with structured data. A validator such as the Schema Markup Validator or Google’s Rich Results Test can quickly identify these issues so you can resolve them. All you need to do is enter either the URL of the page you want to test or the schema code.
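Before reaching for a validator, a quick local check can catch the most basic parsing errors in JSON-LD markup. This sketch only verifies that each `<script type="application/ld+json">` block is valid JSON and that each item declares an `@type`; it does not check the schema.org vocabulary or Google’s rich result requirements, which is what the validators are for. The sample markup is hypothetical.

```python
import json
from html.parser import HTMLParser


class JsonLdExtractor(HTMLParser):
    """Collects the raw text of <script type="application/ld+json"> blocks."""

    def __init__(self) -> None:
        super().__init__()
        self.blocks: list[str] = []
        self._inside = False

    def handle_starttag(self, tag, attrs):
        if tag == "script" and (dict(attrs).get("type") or "").lower() == "application/ld+json":
            self._inside = True
            self.blocks.append("")

    def handle_endtag(self, tag):
        if tag == "script":
            self._inside = False

    def handle_data(self, data):
        if self._inside:
            self.blocks[-1] += data


def check_json_ld(html: str) -> list[str]:
    extractor = JsonLdExtractor()
    extractor.feed(html)
    problems = []
    for i, raw in enumerate(extractor.blocks, start=1):
        try:
            data = json.loads(raw)
        except json.JSONDecodeError as err:
            problems.append(f"block {i}: invalid JSON ({err.msg})")
            continue
        items = []
        for item in data if isinstance(data, list) else [data]:
            # Expand a top-level "@graph" container into its member items.
            if isinstance(item, dict) and "@graph" in item:
                items.extend(item["@graph"])
            else:
                items.append(item)
        for item in items:
            if not isinstance(item, dict) or "@type" not in item:
                problems.append(f"block {i}: item without @type")
    return problems


if __name__ == "__main__":
    broken = '<script type="application/ld+json">{"@type": "Article",}</script>'
    print(check_json_ld(broken))
```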

It’s also important to have an overview of how structured data is distributed across your pages, so you can keep up with current industry standards, make sure your pages get the visibility they deserve, and help search engines interpret your content correctly.

In Oncrawl, two reports within the structured data report give you an overview of the markup on your pages.

Source: Oncrawl

You’ll be able to see where structured data is used on your site and spot any areas or categories where it may be missing.

Unoptimized images

Image optimization affects your site’s SEO because image file size plays a vital role in page performance. Large images increase page load time, which harms user experience.

Google offers some image optimization tips, and you can also take a look at this detailed ebook; I recommend reading both. Image SEO has evolved significantly in the past few years, and both resources will help you identify the most appropriate image formats to use. They also cover other important elements to consider, such as image alt texts, lazy loading, and other image optimization strategies.
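Some of these elements can be spot-checked in a page’s HTML. This sketch flags `<img>` tags without an `alt` attribute, without explicit `width`/`height`, or without `loading="lazy"`, plus files over a size budget. The 150 KB budget is a hypothetical value, and "not lazy-loaded" is only a prompt to review: images visible on first load (such as a hero image) generally should not be lazy-loaded.

```python
from html.parser import HTMLParser

# Hypothetical per-image size budget in bytes; choose one that suits your pages.
MAX_IMAGE_BYTES = 150_000


class ImageAuditParser(HTMLParser):
    """Records common <img> issues: no alt attribute, no explicit size, no lazy loading."""

    def __init__(self) -> None:
        super().__init__()
        self.findings: list[tuple[str, str]] = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        src = attributes.get("src") or "(no src)"
        # alt="" is valid for decorative images, so only a missing attribute is flagged.
        if "alt" not in attributes:
            self.findings.append((src, "missing alt attribute"))
        if "width" not in attributes or "height" not in attributes:
            self.findings.append((src, "missing width/height"))
        if (attributes.get("loading") or "").lower() != "lazy":
            self.findings.append((src, "not lazy-loaded"))


def audit_images(html: str) -> list[tuple[str, str]]:
    parser = ImageAuditParser()
    parser.feed(html)
    return parser.findings


def oversized(sizes: dict[str, int], limit: int = MAX_IMAGE_BYTES) -> list[str]:
    """Image URLs whose file size (e.g. from a crawl export) exceeds the budget."""
    return sorted(src for src, size in sizes.items() if size > limit)


if __name__ == "__main__":
    html = '<img src="/hero.jpg" alt="Beagle puppy" width="1200" height="800"><img src="/icon.png" loading="lazy">'
    for src, issue in audit_images(html):
        print(src, issue)
    print(oversized({"/hero.jpg": 900_000, "/icon.png": 4_000}))
```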

Other performance measures to consider

We’ve looked at the more common SEO issues to check when you want a quick gauge of your site’s health. In my experience, though, there are some other, less frequently cited measures that also give me a good idea of how a site is performing.

Conversion rate

While conversion rate itself isn’t a direct SEO ranking factor, the strategies used to improve conversion rate overlap with those that are used for effective SEO (enhancing user experience, improving mobile responsiveness, ensuring content relevance, improving page speed). Therefore, I find it important to make sure that our CRO efforts align with our SEO efforts, and that the performance of both is properly tracked.
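Tracking CRO and SEO together can start with something as simple as comparing conversion rates by channel, so organic performance is visible next to the others. The figures below are hypothetical, and the rate is computed per session; some analytics setups compute it per user instead, so check which definition your reports use.

```python
# Hypothetical analytics export: channel -> (sessions, conversions).
CHANNELS = {
    "organic": (12_000, 240),
    "paid": (4_000, 120),
    "direct": (3_000, 45),
}


def conversion_rate(conversions: int, sessions: int) -> float:
    """Conversions as a percentage of sessions (0.0 when there were no sessions)."""
    return 100 * conversions / sessions if sessions else 0.0


if __name__ == "__main__":
    for channel, (sessions, conversions) in CHANNELS.items():
        print(f"{channel:8} {conversion_rate(conversions, sessions):.1f}%")
```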

Site authority

Site authority plays an important role in SEO success because it influences how search engines perceive the credibility and relevance of a website. E-E-A-T (Experience, Expertise, Authoritativeness, and Trustworthiness) has grown in importance over the past couple of years, so neglecting your site’s authority can hurt your placement and visibility in the SERPs.

Organic traffic

Again, strictly speaking, organic traffic isn’t a ranking factor. But it’s a good and quick way to see if your site is successful in bringing in your intended traffic.
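To turn organic traffic into a quick health check, you can compare two periods and flag pages whose organic sessions dropped sharply. In this sketch, the session counts are hypothetical and the -20% threshold is an assumption; pages with no traffic in the previous period are skipped because there is no baseline to compare against.

```python
# Hypothetical organic sessions per page: (previous period, current period).
ORGANIC_SESSIONS = {
    "/breeds/": (1_000, 700),
    "/training/": (800, 820),
    "/health/": (0, 50),  # new page: no baseline to compare against
}


def percent_change(previous: float, current: float) -> float:
    """Relative change from the previous period to the current one, in percent."""
    return 100 * (current - previous) / previous


def flag_drops(sessions: dict[str, tuple[int, int]], threshold: float = -20.0) -> list[str]:
    """Pages whose organic sessions fell by more than |threshold| percent."""
    return sorted(
        page for page, (previous, current) in sessions.items()
        if previous > 0 and percent_change(previous, current) < threshold
    )


if __name__ == "__main__":
    print(flag_drops(ORGANIC_SESSIONS))
```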

Engagement time

Tracking engagement time offers insight into user experience and how effective your content is, and it can help you identify areas for improvement. Longer engagement times may signal to search engines that a site is providing valuable content.

Final thoughts

Everything we addressed above serves one main goal: making sure your site is healthy. A healthy site is not only visible to search engines but also efficient, accessible, and user-friendly.

By monitoring a few key SEO factors, you can quickly gauge your website’s health and make adjustments when necessary. In the ever-evolving landscape that includes algorithm updates, the ebb and flow of “important” ranking factors and the increased presence and use of AI, checking your site’s technical health is not just a one-time task but a continuous commitment.