We all talk about it as SEOs, but how does crawl budget actually work? We know that the number of pages that search engines crawl and index when they visit our client’s websites has correlation with their success in organic search, but is having a bigger crawl budget always better?

Like everything with Google, I don’t think that the relationship between your websites crawl budget and ranking/SERP performance is 100% straightforward, it’s dependant on a number of factors.

Why is crawl budget important? Because of the 2010 Caffeine update. With this update Google rebuilt the way in which it indexed content, with incremental indexing. Introducing the ‘percolator’ system, they removed the ‘bottleneck’ of pages getting indexed.

How does Google determine crawl budget?

It’s all about your PageRank, Citation Flow and Trust Flow.

Why haven’t I mentioned Domain Authority? Honestly, in my opinion it is one of the most misused and misunderstood metrics available to SEOs and content marketers that, has its place, but far too many agencies and SEOs place too much value on it, especially when building links.

PageRank is now, of course, out of date, especially since they’ve dropped the toolbar, so it’s all about the Trust Ratio of a site (Trust Ratio = Trust Flow/Citation Flow). Essentially the more powerful domains have larger crawl budgets, so how do you identify Google bot activity on your website and importantly, identify any bot crawl issues? Server log files.

Now we all know that in order to indicate pages to the Google bot that we what indexed (and ranking) we using internal linking structure and keep them close to the root domain, not 5 sub-folders along the URL. But what about more technical issues? Like crawl budget wastage, bot traps or if Google is trying to fill out forms on the site (it happens).

Identifying crawler activity

To do this, you need to get your hands on some server log files. You may need to request these from your client, or you can download them directly from the hosting company.

The idea behind this is you want to try and find a record of the Google bot hitting your site – but because this isn’t a scheduled event, you may need to get a few days’ worth of data. There are various pieces of software available to analyse these files.

Below is an example hit to an Apache server:

50.56.92.47 – – [31/May/2012:12:21:17 +0100] “GET” – “/wp-content/themes/wp-theme/help.php” – “404” “-” “Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)” – www.hit-example.com

From here you can use tools (such as Oncrawl) to analyse the log files and identify issues such as Google crawling PPC pages or infinite GET requests to JSON scripts – both of which can be fixed within the Robots.txt file.

When is crawl budget an issue?

Crawl budget isn’t always an issue, if your site has a lot of URLs and has a proportionate allocation of ‘crawls’, you’re fine. But what if your website has 200,000 URLs and Google only crawls 2,000 pages on your site each day? It could take up to 100 days for Google to notice new or refreshed URLs – now that’s an issue.

One quick test to see if your crawl budget is an issue is use Google Search Console and the number of URLs on your site to calculate your ‘crawl number’.

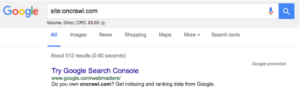

- First you need to determine how many pages there are on your site, you can do this by doing a site: search, for example oncrawl.com has roughly 512 pages in the index:

- Second you need to go to your Google Search Console account and go to Crawl, and then Crawl Stats. If your GSC account hasn’t been configured properly, you may not have this data.

- The third step is to take the “Pages crawled per day” average number (the middle one) and the total number of URLs on your website and divide them:

Total Pages On Site / Average Pages Crawled Per Day = X

If X is greater than 10, you need to look at optimizing your crawl budget. If it’s less than 5, bravo. You need not read on.

Optimizing your ‘crawl budget’ capacity

You can have the biggest crawl budget on the internet, but if you don’t know how to use it, it’s worthless.

Yes, it’s a cliché, but it’s true. If Google crawls all the pages of your site and finds that the greater majority of them are duplicate, blank or loading so slowly they cause timeout errors your budget may as well as be zilch.

To make the most out of your crawl budget (even without access to the server log files) you need to make sure that you do the following:

Remove duplicate pages

Often on ecommerce sites, tools such as OpenCart can create multiple URLs for the same product, I’ve seen instances of the same product on 4 URLs with varying subfolders between the destination and the root.

You don’t want Google indexing more than one version of each page, so make sure you have canonical tags in place pointing Google to the correct version.

Resolve Broken Links

Use Google Search Console, or crawling software, and find all the broken internal and external links on your site and fix them. Using 301s is great, but if they are navigational links or footer links that are broken, just change the URL they are pointing to without relying on a 301.

Don’t Write Thin Pages

Avoid having lots of pages on your site that offer little to no value to users, or search engines. Without context Google finds it hard to classify the pages, meaning they are contributing nothing to the site’s overall relevance and they’re just passengers taking up crawl budget.

Remove 301 Redirect Chains

Chain redirects are unnecessary, messy and misunderstood. Redirect chains can damage your crawl budget in a number of ways. When Google reaches a URL and sees a 301, it doesn’t always follow it immediately, it instead adds the new URL to a list and then follows it.

You also need to ensure your XML sitemap (and HTML sitemap) is accurate, and if you’re website is multilingual, ensure you have sitemaps for each language of the website. You also need to implement smart site architecture, URL architecture and speed up your pages. Putting your site behind a CDN like CloudFlare would also be beneficial.

TL;DR:

Crawl budget like any budget is an opportunity, you are in theory using your budget to buy time that Googlebot, Bingbot and Slurp spends on your site, it’s important that you make the most of this time.

Crawl budget optimization isn’t easy, and it’s certainly not a ‘quick win’. If you have a small site, or a medium sized site that’s well maintained, you’re probably fine. If you have a behemoth of a site with tens-of-thousands of URLs, and server log files go over your head – it may be time to call in the experts.