What is the Helpful Content algorithm update?

Last week, Google announced an algorithm update, currently rolling out, that seeks to improve the quality of search results by promoting Helpful Content. The update initially applies to English-language websites only, and it may devalue the content of an entire site if the site has a significant amount of unhelpful content.

As with E-A-T, the notion of “helpful” cannot be easily quantified by concrete metrics; the algorithm relies on machine learning to identify unhelpful content.

Experienced SEOs specializing in E-A-T and algorithm updates have already analyzed, in detail, what is known about the Helpful Content update and how to react to it. The analyses by Marie Haynes, Glenn Gabe and Lily Ray are worth reading.

To take the discussion further, I spoke with Vincent Terrasi, former Product Director at Oncrawl and an expert in Data SEO with a focus on machine learning and language models like BERT and GPT-3.

The interview with Vincent Terrasi

“We can identify pure AI content as being unnatural, and therefore it will be penalized. But on the other hand, behind this notion of Helpful Content, there is another subject that can negatively affect all the new semantic tools that are based on the SERPs. Google is finally going to be able to detect over-optimization, I mean, someone who would reverse-engineer the perfect footprint to rank in Google.”

Content analysis and over-optimization detection: what the Helpful Content update will really change

Rebecca: I keep thinking of various elements of your work that won second place at Tech SEO Boost 2019 where you talked about text generation for SEO, the impacts and the dangers. We also discussed the topic, especially when Google released BERT, talking about the next steps and how they would be able to generalize text analysis through machine learning. In this case, it’s kind of like classification and then semantic analysis on top of that. Is that pretty much what you’re getting out of it, too? Does this update surprise you?

Vincent: Yes, that’s what I announced at Tech SEO Boost: that they [Google] were going to go after this type of content.

I keep telling clients who are interested in text generation at Oncrawl why they have to be careful with generated content.

You have to be careful when talking about AI (artificial intelligence) generated content. With the Helpful Content update, we are not at all talking about a manual action, even though it might seem like the type of thing that would lead to one. You might have seen some of the recent news about AI-generated sites, and I would definitely classify that as a manual action. This was three months ago: there were strong manual actions against sites that said they were making $100,000 a month. They were all deindexed. Those are manual actions.

Now, there’s this update with a machine learning model that’s able to identify whether a text adds no value. So I’d rather not talk about AI, but about sites with or without content that adds no value.

Rebecca: Yes, there’s a confirmation from Google that it’s not about the manual actions. It’s interesting that in this case, Google is clearly saying it’s machine learning and it’s pretty much running all the time. So in the following months, an impacted site could possibly be reclassified…or not.

Vincent: I’m going to talk about this at my conference in September with Christian Méline at SEO Camp Paris, because it’s something that we identified five months ago. Can you imagine? We had already identified that there were things going on with Google.

Overall, there are two topics:

There’s the topic of AI-generated content. We can say that spammy content can be very easy to identify because AI repeats itself. If you break it down into word groups of three, four, five words, you’ll see it repeats the same phrases. That’s very easy to detect. You don’t need to do machine learning.

And on the other hand, the machine learning part is that there are in fact very stable probabilities of the next word appearing.
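The word-group check Vincent describes can be illustrated with a few lines of Python. This is only a minimal sketch of the general idea, not his tooling or Google's method: the function name `repeated_ngram_ratio`, the simple regex tokenization and the sample text are all assumptions made for the example.

```python
import re
from collections import Counter


def repeated_ngram_ratio(text: str, n: int = 3) -> float:
    """Share of n-word groups in `text` that belong to a group seen more than once."""
    words = re.findall(r"\w+", text.lower())
    # Slide a window of n words over the text to build the word groups.
    ngrams = [tuple(words[i:i + n]) for i in range(len(words) - n + 1)]
    if not ngrams:
        return 0.0
    counts = Counter(ngrams)
    # Count every occurrence of a group that appears at least twice.
    repeated = sum(c for c in counts.values() if c > 1)
    return repeated / len(ngrams)


# Hypothetical sample text with an obviously recycled phrase.
sample = (
    "Our product is the best product on the market. "
    "Our product is the best choice for you. "
    "Our product is the best value today."
)
for size in (3, 4, 5):
    print(size, round(repeated_ngram_ratio(sample, size), 2))
```

A ratio close to zero means almost every word group is unique; what counts as "too high" is not given in the interview and would have to be calibrated on real texts.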

Rebecca: Yes, we also talked about this when we were working on your training courses. Internally at Oncrawl, this has led to the work currently underway to create a scoring system for the quality of generated texts, in order to find content that is too easily identifiable as such.

Vincent: That’s right.

We can identify pure AI content as being unnatural, and therefore it will be penalized. So that’s the first issue.

But on the other hand, behind this notion of Helpful Content, there is another subject that can negatively affect all the new semantic tools that are based on the SERPs.

Google is finally going to be able to detect over-optimization, I mean, someone who would reverse-engineer the perfect footprint to rank in Google. And here we have strong and talented players in France who have not yet reacted much to the news: the Frères Peyronnet, 1.fr, SEO Quantum, etc. They are directly concerned by the problem of over-optimization. They are directly affected by this update.

Rebecca: Let’s take your site transfer-learning.ai which was more of a sandbox to test if we could rank with entirely generated content, while adding something that doesn’t exist today (in this case the link between academic research and training courses on related machine learning topics). In your opinion, is it still possible to do this kind of thing?

Vincent: If it brings originality and is not detected as spammy, yes, it will always be possible to do this sort of thing.

However, if it is not considered helpful, then it will not be possible.

Additionally, I want to give a clear reminder to French creators: we are talking about English. We know that the roll-out in English can last for months, and often a year. When we look back at the old massive Core Updates like Panda or Penguin, they lasted up to several years in some cases. I think that some people will take advantage of this period of time to continue to practice spammy techniques. And then Google will intervene.

What I am going to discuss during my presentation with Christian Méline is that rather than proposing subjects that Google already has and that it is not interested in, we are able to use new technologies that help us to propose new subjects.

I’ll give you an example. If I test all the SEO tools and generate topic ideas with GPT-3, or in a French tool like yourtext.guru for example, I’ll get 40 ideas. If I use Christian Méline’s technique, I’ll get 4,500. And some of them are even topics that have never been used before and are not even in Google.

What do you think Google will prefer? To have content it already knows, or to have very interesting topics that nobody has ever dug into?

I think that’s the future of SEO: being able to detect new things. I know Koray is also going in that semantic direction.

Rebecca: Yes, in the sense of analyzing content gaps or lacunae where you can establish an expertise, because it’s those semantic areas of a topic that are not being addressed at all.

Vincent: Exactly. On the other hand, I think that this update is not going to do that immediately. There will be a version 1, a version 2, and so on. But the ultimate goal of this update is to do that.


Other languages and other media: How will this update be deployed?

Rebecca: You mentioned earlier the difference between English and other languages like French. We’ve made huge strides in translation, in language-agnostic processing, like with MUM. Do you think it’s really going to take this long for this update to move into other languages?

Vincent: Frankly, I’ve done some work of my own. I don’t have Google’s technology, I don’t know Google, but I’ve never seen an algorithm that takes so long to run: for a paragraph of 300 words, it takes about ten seconds. It’s an eternity. Usually we’re talking TF-IDF calculations, word embeddings… and it takes a second. In other words, this type of algorithm is pretty heavy to deploy. Now I know that Google has the technology, they have TPUs, they have super smart engineers, but I think they’re going to have this limit when using a language model: you have to load the language model. And when there are 200 billion parameters, it can hurt.
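For comparison, the kind of lightweight calculation Vincent contrasts with a large language model can be sketched in pure Python. This is a textbook TF-IDF variant (term frequency times log(N / document frequency)), chosen here as an assumption for illustration; the function name `tf_idf` and the sample documents are hypothetical.

```python
import math
from collections import Counter


def tf_idf(documents):
    """Return one {term: weight} dict per document using tf * log(N / df)."""
    tokenized = [doc.lower().split() for doc in documents]
    n_docs = len(tokenized)
    # Document frequency: in how many documents does each term appear?
    doc_freq = Counter(term for tokens in tokenized for term in set(tokens))
    weights = []
    for tokens in tokenized:
        counts = Counter(tokens)
        weights.append({
            term: (count / len(tokens)) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return weights


docs = [
    "helpful content update",
    "helpful content for users",
    "generated content spam",
]
for weights in tf_idf(docs):
    print(weights)
```

Nothing here needs a model to be loaded into memory: the whole computation is counting and one logarithm per term, which is why this family of methods runs so much faster than scoring text with a model of billions of parameters.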

It’s funny, it’s right around the same time as the release of the text generation model on HuggingFace. I think, though no one can say this for sure, that this is what they based their detection on. In fact, they released a text generation model to detect text generation. Google is fighting fire with fire, as they say.

Rebecca: Yeah, that’s kind of how it works, right? It’s always been like that in detecting automated texts. We use what we know about how it’s built to detect it.

Vincent: But what impresses me are the SEO tools that provide a fingerprint of the SERPs. Google is now saying, “We have the footprint and we’re going to be able to tell if you’re too inspired by it.” Nobody knows how they do it. I know how other SEO tools do it, but how do they [Google] do it? No one knows.

Rebecca: Actually, the other thing that stood out to me was that it’s a site-level analysis and then for each site there’s, if we’re really talking about very high-level generalization, a “value” of Helpful or Unhelpful Content assigned to the site that can impact other content on that site. And that’s a lot of individual analysis, and a lot of information storage. So even just to process or re-process that, it takes a lot of time.

Vincent: I think that’s the constraint that they have. They’ve announced that they’re only doing it on Google Search not Google Discover.

It’s a bit of a paradox because on Google Discover, everyone is cheating, everyone is optimizing “SEO” content just to be in Google Discover. I think they have a big problem right now with Google Search and all this auto-generated content. There are some sites that have gone overboard with auto-generated content.

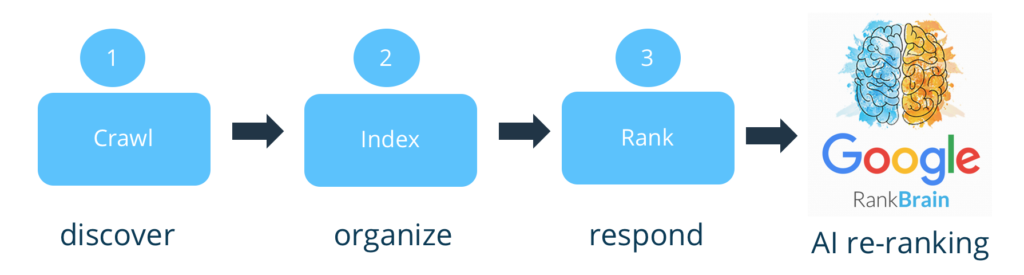

They’re not talking about a penalty, so they’re kind of spinning this like with Google RankBrain, as a new signal.

And not all sites will be affected. I’m thinking in particular of e-commerce sites with all their product descriptions. We know everyone is copying each other.

Rebecca: Yes, creating variants based on the official descriptions of the producers, the brands.

Vincent: Yes.

But some media sites are more at risk. There is a phenomenon that has been known in SEO for a long time. For example, some sites take English content and translate it without adding any value. Nobody has talked about it, but there is a major risk for this type of site because it doesn’t add anything and, on top of that, these sites fail to cite their sources.

Rebecca: And they’ll also have the original content in English to make a comparison to, as well.

Vincent: Yes, the stage where we move from English to French with this update will likely hurt many of the spammy sites.

Out of all of the SEO news for the past several months, this is the most important update. We could say the same of RankBrain, but its effects have been much less obvious to point to: it’s hard to see the actual results of its application.

Rebecca: I think it’s actually close, with the concept of semantic analysis and the parts of the website that are not related to the rest of the website.

Vincent: Exactly.

I know they’ve been working on this for a while. I had a friend who works at Google, who said he’s been working on this since 2009; there were two research teams on this. They’re trying to do it now in real time and they’re going to do a lot of cleanup.

But we still don’t know much about the implementation. How will they do it? With a signal? Will those who cheat be indexed less well? No one can answer that question except John Mueller.

Rebecca: I doubt even he’ll be allowed to. I imagine they’ll keep to their line of: “create useful content for users, not for search engines, and you won’t have a problem.”

Vincent: On Twitter, he is getting bombarded with questions about this topic and his answers have been a bit broad.

The impact on content creation in the future

Rebecca: I’m not surprised by that. I think he probably doesn’t have any more specific information. And even if he does, it must be absolutely forbidden to talk about the algorithm.

Anyway, I’m very anxious to start seeing the patents that are related to this update, to do a reanalysis of the patents in a year, two years, to see what’s out there and if there’s any indication of use a little bit later. But that’s another topic.

Vincent: To prepare for my conference in September, I’ve listed how we recognize quality content, useful content. I based it on the article in the journaldunet (in French) that Christian Méline wrote on the subject [three] years ago. His content is still completely relevant. On the other hand, he does not rely on machine learning. He hates it, so these are basic, useful metrics: is the title well written? Are there any spelling mistakes? Does it provide new knowledge? These are rarely things you need machine learning for.

Rebecca: This type of advice is going to be super important because most SEOs don’t necessarily have the resources, the data, the time or just the skills to implement machine learning, to be able to analyze their sites, to know if there are risks of falling into that or not.

Vincent: Exactly. We have to follow this very, very closely.

In addition, we have to be very careful about what we say. We have to speak in the conditional tense. There is no one who is certain about this subject.

Rebecca: That’s for sure. We only have very high-level information, which means we have no proof and no clues. So clearly, nothing we can say about it is a conclusion; these are theories.

Vincent: Exactly.

Here’s what I set up as a starting point:

- The analysis of tokens to look at repetition: identify when it’s excessive, when it’s just to rank.

- Then, the probabilities between words that I mentioned earlier.

- And finally, word groups.

Just with these three combos, I can detect 90% of AI-generated texts that haven’t been optimized by a human. So imagine what Google can do! It’s mind-blowing.
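To make the three signals more concrete, here is a rough Python sketch that computes one number for each. It is an illustration under stated assumptions, not Vincent's implementation: a toy bigram model with add-one smoothing stands in for a real language model, "stable probabilities" is interpreted as a low spread of next-word surprisal, and all function names and sample texts are hypothetical. No thresholds are proposed, since the interview gives none.

```python
import math
import re
from collections import Counter
from statistics import pstdev


def tokenize(text):
    return re.findall(r"\w+", text.lower())


def token_repetition(tokens):
    """Signal 1: share of tokens that repeat an earlier token."""
    if not tokens:
        return 0.0
    return 1 - len(set(tokens)) / len(tokens)


def surprisal_spread(tokens, reference_tokens):
    """Signal 2: standard deviation of next-word surprisal (in bits).

    A toy bigram model fitted on `reference_tokens` (add-one smoothing)
    replaces the real language model. A low spread means each next word
    is about equally predictable.
    """
    vocab_size = len(set(reference_tokens) | set(tokens))
    contexts = Counter(reference_tokens[:-1])
    bigrams = Counter(zip(reference_tokens, reference_tokens[1:]))
    surprisals = []
    for prev, word in zip(tokens, tokens[1:]):
        p = (bigrams[(prev, word)] + 1) / (contexts[prev] + vocab_size)
        surprisals.append(-math.log2(p))
    return pstdev(surprisals) if surprisals else 0.0


def repeated_word_groups(tokens, n=3):
    """Signal 3: share of n-word groups that occur more than once."""
    groups = [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]
    if not groups:
        return 0.0
    counts = Counter(groups)
    return sum(c for c in counts.values() if c > 1) / len(groups)


def signals(text, reference_text):
    """Compute the three signals for `text` against a reference corpus."""
    tokens = tokenize(text)
    return {
        "token_repetition": token_repetition(tokens),
        "surprisal_spread": surprisal_spread(tokens, tokenize(reference_text)),
        "repeated_word_groups": repeated_word_groups(tokens),
    }


# Hypothetical inputs: a tiny reference corpus and a repetitive text to score.
reference = "google ranks helpful content and users read helpful content"
candidate = "best seo tips best seo tips for the best seo tips today"
print(signals(candidate, reference))
```

In practice the reference corpus would be far larger and the bigram model would be swapped for a neural language model; the point of the sketch is only that each signal reduces to a number that can then be compared across texts.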

Rebecca: So we should definitely attend your SEO Campus conference on September 23 with Christian.

Vincent: Yes, we wanted to take apart the topic of helpful content a bit. It’s funny: even before Google started talking about the update, we had this planned.

I also like this topic because I am very ecologically-minded. It makes me feel better to know that there are controls like that to keep people from spamming. Because it costs us unimaginable resources.

Rebecca: Yes, it does. With this update, everyone is paying close attention. I think the people who think it’s not going to change anything are wrong. We can see that it’s going to change not only how we create content, but also how Google evaluates content. And these are strategies that we haven’t seen before.

Vincent: Exactly. In fact, if you want to take an extreme stance, until now Google did not evaluate content. That was a huge weakness. It used to just index and rank. Now they will filter upstream. And that’s what Bing was criticizing Google for not doing.

Rebecca: Yes, most of the analysis [on content] came at the time of ranking.

Vincent: That’s right. Now it seems to have a little filter. I agree with you: I can’t wait to see the patent that comes out on this. They’ll have to reveal where they put the filter. Where do you bet they put it? Before or after indexing? Before or after ranking? Where would you put it?

Rebecca: Since you have to have most of the site to be able to do that, I would say…

Vincent: Don’t forget that you need the footprint of the SERPs, as we discussed, for indexing. So you have to index them.

Rebecca: Yeah, that’s what I was going to say. I think it should be an additional step. We don’t run the risk of de-indexing, so we’re talking about an impact after indexing, maybe after the [initial] ranking as well.

Vincent: Yes, for me, it’s after the ranking. If I were Google, this is something I would have added to Google RankBrain, because it is able to aggregate signals, etc. Now, the question is how impactful it’s going to be on sites.

Rebecca: With machine learning, it can vary a lot from site to site, because you can have a lot more control over its impact and how much unhelpful content is on each site.

Vincent: The limit with Google is false positives. That would be de-indexing [or penalizing] legitimate pages. So I think the initial impact is going to be very, very low, but they’re really going to go after the cheaters.

I’ve had people contact me, though, who were a little concerned. I told them that at the beginning, it will only detect text without quality. That is, I think that a [generated] text, followed up with human proofreading, can be perfectly useful.

I’m not as strict as others who say “AI = garbage”. I don’t really believe that either.

Rebecca: That doesn’t surprise me, coming from you!

It’s a little frustrating, knowing that it’s going to be slow. As you say, to avoid false positives, that’s another reason for launching in English: they have a better command of English. This makes it possible to put in additional controls that are much more expensive, before generalizing to the whole web and to other languages that are less well mastered, less well automated.

In any case, it was a very rich discussion. Thank you very much for this exchange.

Vincent: We can talk about it again whenever you want.

Rebecca: It’s been a pleasure.