On September 25th, Oncrawl was happy to host Bill Hartzer for a webinar on log file analysis and why it matters for SEO audits. He ran through his own website to show the impact of optimizations on bot activity and crawl frequency.

Introducing Bill Hartzer

Bill Hartzer is an SEO Consultant & Domain Names Expert with over 20 years of experience. Bill is internationally recognized as an expert in his field and was recently interviewed on CBS News as one of the country’s leading search experts.

Over the course of this hour-long webinar, Bill gives us a look at his log files and discusses how he uses them in the context of a site audit. He presents the different tools he uses to verify site performance and bot behavior on his site.

Finally, Bill answers questions concerning how to use Oncrawl to visualize meaningful results, and provides tips to other SEOs.

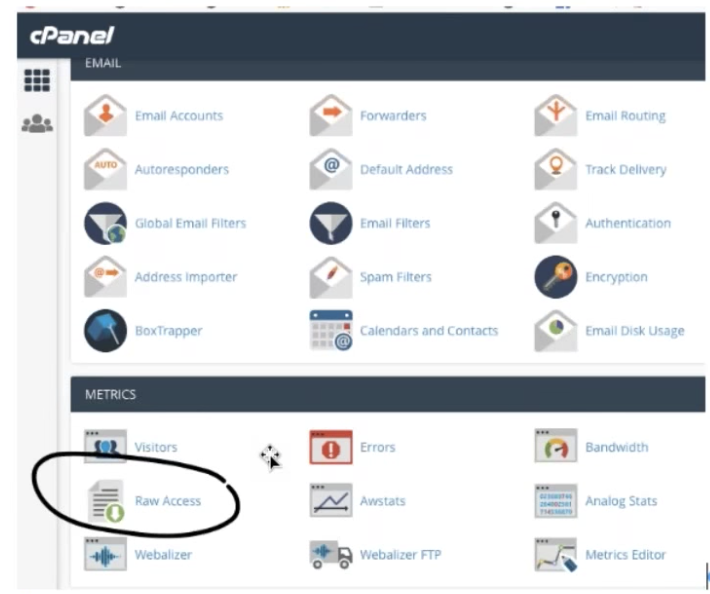

How to access your log files through cPanel

If your website is built with WordPress and your host provides cPanel, you can find your raw server logs directly in the cPanel interface.

Navigate to Metrics, then to Raw Access. There, you can download daily log files from the file manager, as well as zipped archives of older log files.
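Once downloaded, the daily logs are plain text while the older archives are compressed. The sketch below reads both the same way, assuming (as is common for cPanel archives) that compressed files are gzip files ending in `.gz`; the function name `iter_log_lines` is hypothetical.

```python
import gzip
from pathlib import Path
from typing import Iterator


def iter_log_lines(path: str) -> Iterator[str]:
    """Yield lines from a raw access log, whether plain text or gzip-compressed.

    Assumption: archived logs are gzip files with a ".gz" suffix.
    """
    p = Path(path)
    opener = gzip.open if p.suffix == ".gz" else open
    # errors="replace" keeps one badly encoded line from aborting the whole read.
    with opener(p, "rt", encoding="utf-8", errors="replace") as handle:
        for line in handle:
            yield line.rstrip("\n")
```

You can then loop over a whole directory of downloads with the same function, without caring which files were zipped.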

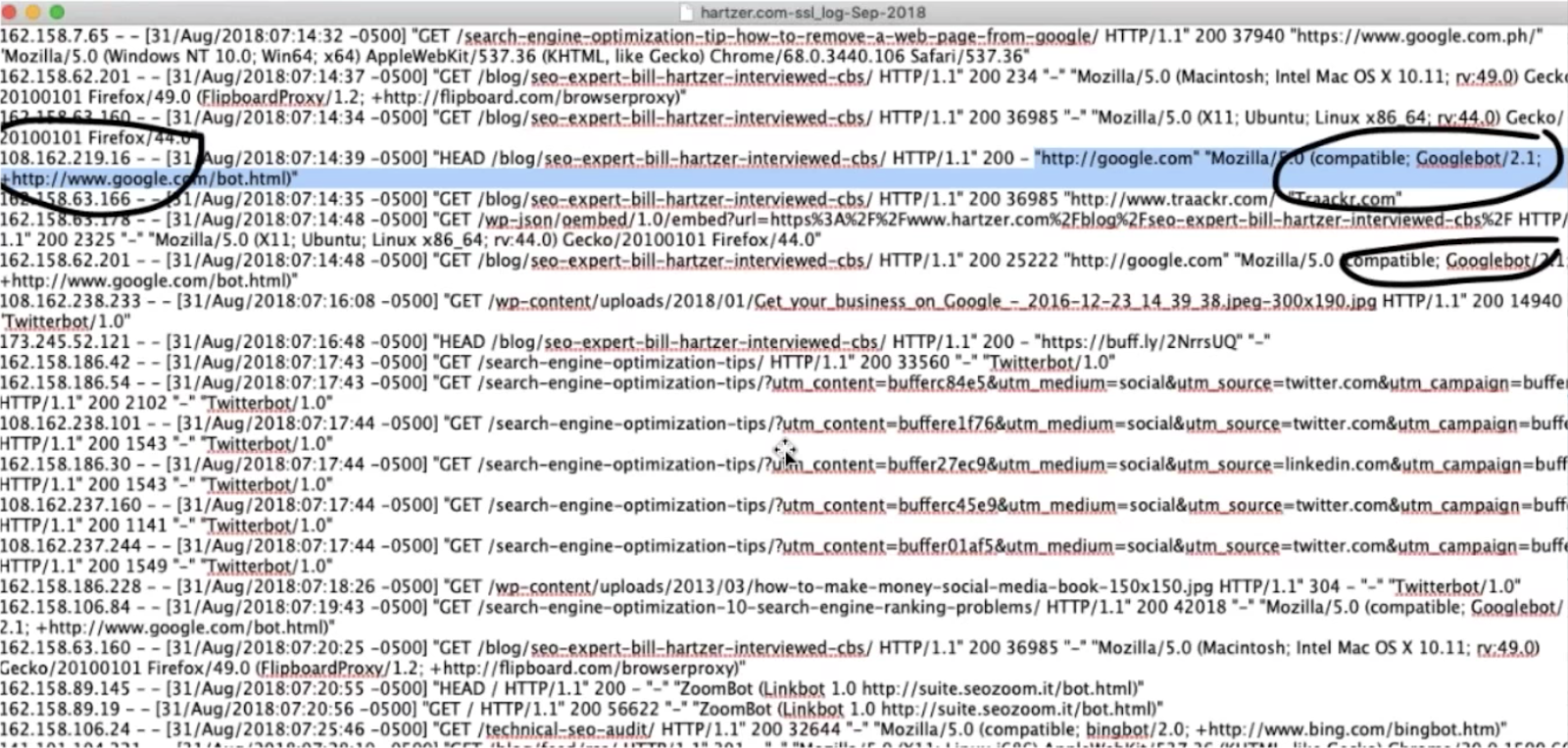

Examine the contents of a log file

A log file is a large text file that records every request made to your website, whether by human visitors or by bots. You can open it using a basic text editor.

It’s not difficult to spot potential hits from Googlebot or Bingbot, which identify themselves in the user-agent string of each log line. Since user agents can be spoofed, though, it’s a good idea to confirm a bot’s identity with a DNS lookup on its IP address.
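As a rough illustration, the sketch below parses one line in the Apache/Nginx "combined" log format (an assumption: check your server's log format), flags a claimed Googlebot or Bingbot from the user agent, and verifies the claim with a reverse DNS lookup followed by a forward lookup that must return the same IP. The host suffixes are the ones Google and Bing document for their crawlers; all function names are hypothetical.

```python
import re
import socket
from typing import Optional

# "combined" log format: ip ident user [time] "request" status size "referrer" "agent"
COMBINED = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+)[^"]*" (?P<status>\d{3}) \S+ '
    r'"(?P<referrer>[^"]*)" "(?P<agent>[^"]*)"'
)

# Host suffixes a genuine crawler's reverse DNS name should end with.
CLAIMED_BOTS = {
    "Googlebot": (".googlebot.com", ".google.com"),
    "bingbot": (".search.msn.com",),
}


def parse_line(line: str) -> Optional[dict]:
    """Return the fields of one log line, or None if it doesn't match."""
    m = COMBINED.match(line)
    return m.groupdict() if m else None


def claimed_bot(agent: str) -> Optional[str]:
    """Name of the bot the user agent claims to be, if any."""
    for name in CLAIMED_BOTS:
        if name.lower() in agent.lower():
            return name
    return None


def hostname_matches(bot: str, hostname: str) -> bool:
    return hostname.rstrip(".").endswith(CLAIMED_BOTS[bot])


def verify_bot_ip(bot: str, ip: str) -> bool:
    """Reverse DNS, check the domain, then forward DNS back to the same IP."""
    try:
        hostname = socket.gethostbyaddr(ip)[0]
        if not hostname_matches(bot, hostname):
            return False
        forward = {info[4][0] for info in socket.getaddrinfo(hostname, None)}
    except OSError:  # covers socket.herror and socket.gaierror
        return False
    return ip in forward
```

Because `verify_bot_ip` makes network calls, it is worth caching its result per IP when processing a large log.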

You may also find other bots crawling your site that are of no use to you. You can block these bots from accessing your site.

Oncrawl processes the raw data in your log files to give you a clear view of the bots that visit your site.
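The core of that view is a count of hits per bot per day. A minimal sketch of the idea (not Oncrawl's implementation), assuming each parsed record is a dict with a combined-log `time` field and a `bot` label that is `None` for non-bot traffic:

```python
from collections import Counter
from datetime import datetime


def hits_per_bot_per_day(records):
    """Count bot hits keyed by (day, bot).

    Assumes each record has a 'time' string such as
    '07/Sep/2018:10:00:00 +0000' and a 'bot' label (None for humans).
    """
    counts = Counter()
    for rec in records:
        if rec["bot"] is None:
            continue  # skip human visitors
        day = datetime.strptime(rec["time"], "%d/%b/%Y:%H:%M:%S %z").date()
        counts[(day, rec["bot"])] += 1
    return counts
```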

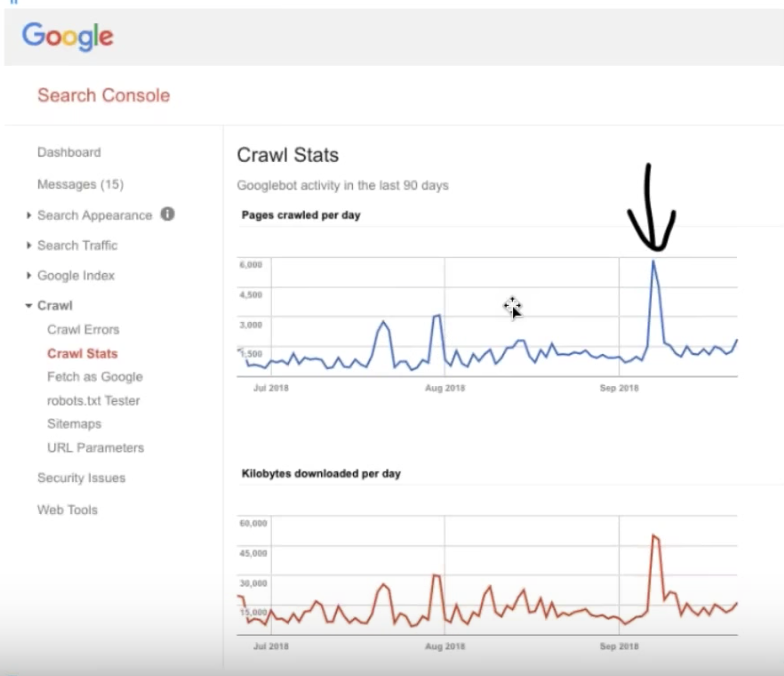

Using your log files for more information on Crawl Stats

The Crawl Stats report, available in the old version of Google Search Console under Crawl > Crawl Stats, takes on new meaning when compared to the information in your log files.

You should be aware that the data shown in Google Search Console is not limited to Google’s SEO bots, and can therefore be less useful than the more precise information you can obtain by analyzing your log files.

Recent instances of unusual crawl activity

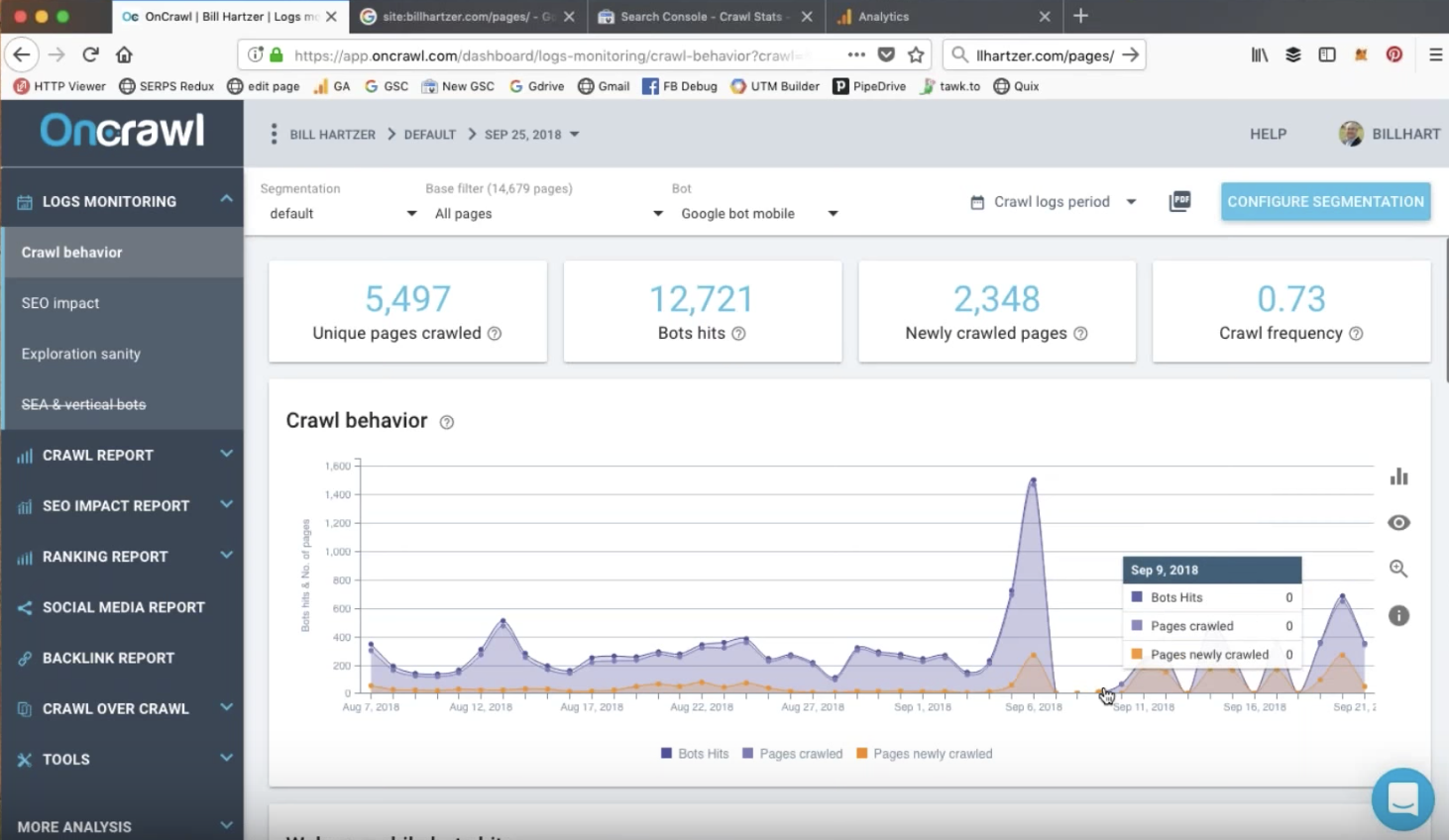

Bill looks at three recent spikes visible in the crawl stats in Google Search Console. These correspond to big events that trigger increased crawl activity.

Mobile First Indexation Spike

The September 7th spike in Google Search Console might seem, at first, unrelated to events on the website. However, a look at the log analysis in Oncrawl provided clues:

Log file analysis shows the breakdown of the different bots Google uses to crawl pages. It becomes clear that the activity of the desktop Googlebot declined sharply before this date, and that this spike, unlike the earlier, smaller spikes, was composed almost entirely of hits by the mobile Googlebot on unique, already indexed pages.

A 50% increase in organic traffic recorded by Google Analytics confirmed that this spike corresponds to the Mobile First Indexation of the site in early September, weeks before Google sent out its alert!
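The desktop/mobile breakdown can be approximated from user agents alone. The sketch below uses a heuristic, not Google's official classification: Googlebot Smartphone's user agent contains an Android device string and the token "Mobile", while the desktop crawler's does not. It counts hits and distinct URLs for each crawler; records are assumed to be dicts with `agent` and `path` fields.

```python
from collections import Counter


def googlebot_type(agent: str):
    """Heuristically label a Googlebot user agent as 'mobile' or 'desktop'."""
    if "Googlebot" not in agent:
        return None
    # Googlebot Smartphone includes "Mobile" in its user agent; desktop doesn't.
    return "mobile" if "Mobile" in agent else "desktop"


def googlebot_breakdown(records):
    """Return (hits per crawler type, distinct URLs per crawler type)."""
    hits = Counter()
    unique_paths = {"mobile": set(), "desktop": set()}
    for rec in records:
        kind = googlebot_type(rec["agent"])
        if kind:
            hits[kind] += 1
            unique_paths[kind].add(rec["path"])
    return hits, {k: len(v) for k, v in unique_paths.items()}
```

Tracking both numbers per day makes a shift like the one above visible: mobile hits rising on many distinct, already known URLs while desktop hits fall.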

Modification to URL structure for the site

In mid-August, Bill changed his URL structure to make it more SEO-friendly.

Google Search Console recorded two large spikes just after this modification, confirming that Google identifies major site events and uses them as signals to recrawl the website’s URLs.

When we look at the breakdown of these hits in Oncrawl, it becomes apparent that the second spike is less a spike than a sustained high crawl rate that continues over several days. Google has clearly picked up the changes, as Bill confirms by comparing crawl activity over the days following his changes.
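Spikes like these can be flagged automatically from daily hit counts. One simple approach, sketched here as an assumption rather than Oncrawl's method, compares each day with the median of the preceding days; the `window` and `factor` defaults are arbitrary starting points.

```python
from statistics import median


def find_spikes(daily_hits, window=7, factor=2.0):
    """Flag days whose hits exceed `factor` times the trailing median.

    daily_hits: list of (day, hits) pairs sorted by day.
    Returns (day, hits, baseline) for each flagged day.
    """
    spikes = []
    for i in range(window, len(daily_hits)):
        baseline = median([h for _, h in daily_hits[i - window:i]])
        day, hits = daily_hits[i]
        if baseline > 0 and hits > factor * baseline:
            spikes.append((day, hits, baseline))
    return spikes
```

A median baseline is used so a single spike doesn't inflate the baseline for the following days. A sustained plateau, like the second event above, shows up as a run of consecutive flagged days until the baseline catches up.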

Useful Oncrawl reports and features for conducting a technical audit

SEO Visits and SEO Active pages

Oncrawl processes your log file data to provide accurate information about SEO Visits, or human visitors arriving from Google SERP listings.

You can track the number of visits, or look at SEO active pages, which are individual pages on the website that receive organic traffic.

One thing you might want to look into as part of an audit is why some ranking pages don’t receive organic traffic (in other words, why they aren’t SEO active pages).
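The sketch below shows one way to derive these sets from log records. It assumes an SEO visit is a non-bot hit whose referrer is a Google search domain (a simplification: Oncrawl's own detection may differ), that records are dicts with `path`, `referrer`, and `bot` fields, and that ranked pages come from a Search Console export.

```python
import re
from urllib.parse import urlparse

# google.com, www.google.fr, www.google.co.uk, ...
GOOGLE_HOST = re.compile(r"^(www\.)?google\.[a-z.]+$")


def is_seo_visit(rec) -> bool:
    """A human (non-bot) hit referred by a Google search domain."""
    if rec["bot"] is not None:
        return False
    host = urlparse(rec["referrer"]).hostname or ""
    return bool(GOOGLE_HOST.match(host))


def seo_active_pages(records) -> set:
    """Pages that received at least one SEO visit."""
    return {rec["path"] for rec in records if is_seo_visit(rec)}


def ranked_but_inactive(ranked_pages, records) -> set:
    """Pages with impressions but no SEO visits: candidates for the audit."""
    return set(ranked_pages) - seo_active_pages(records)
```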

Fresh Rank

Metrics like Oncrawl’s Fresh Rank provide essential information. Fresh Rank measures the average delay, in days, between Google’s first crawl of a page and that page’s first SEO visit.
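Following that definition, a rough version of the calculation could look like this. It is a sketch of the metric's definition, not Oncrawl's code; it assumes you have already extracted, per URL, the date of the first Googlebot hit and the date of the first SEO visit from your logs.

```python
from datetime import date
from statistics import mean


def fresh_rank(first_crawl, first_seo_visit):
    """Average days between first crawl and first SEO visit.

    Both arguments map URL -> datetime.date. Pages without an SEO visit
    yet are ignored. Returns None if no page qualifies.
    """
    delays = [
        (first_seo_visit[url] - first_crawl[url]).days
        for url in first_crawl
        if url in first_seo_visit
    ]
    return mean(delays) if delays else None
```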

The #FreshRank helps you know how many days a page needs to be crawled for the first time and to gets its first #SEO visit #oncrawlwebinar pic.twitter.com/WVojWXKStC

— Oncrawl (@Oncrawl) September 25, 2018

Content promotion and backlink development can help a new page earn traffic faster. Some pages on the site in this audit, such as blog posts that were promoted on social networks, had a much lower Fresh Rank: they received their first SEO visit sooner after being crawled.

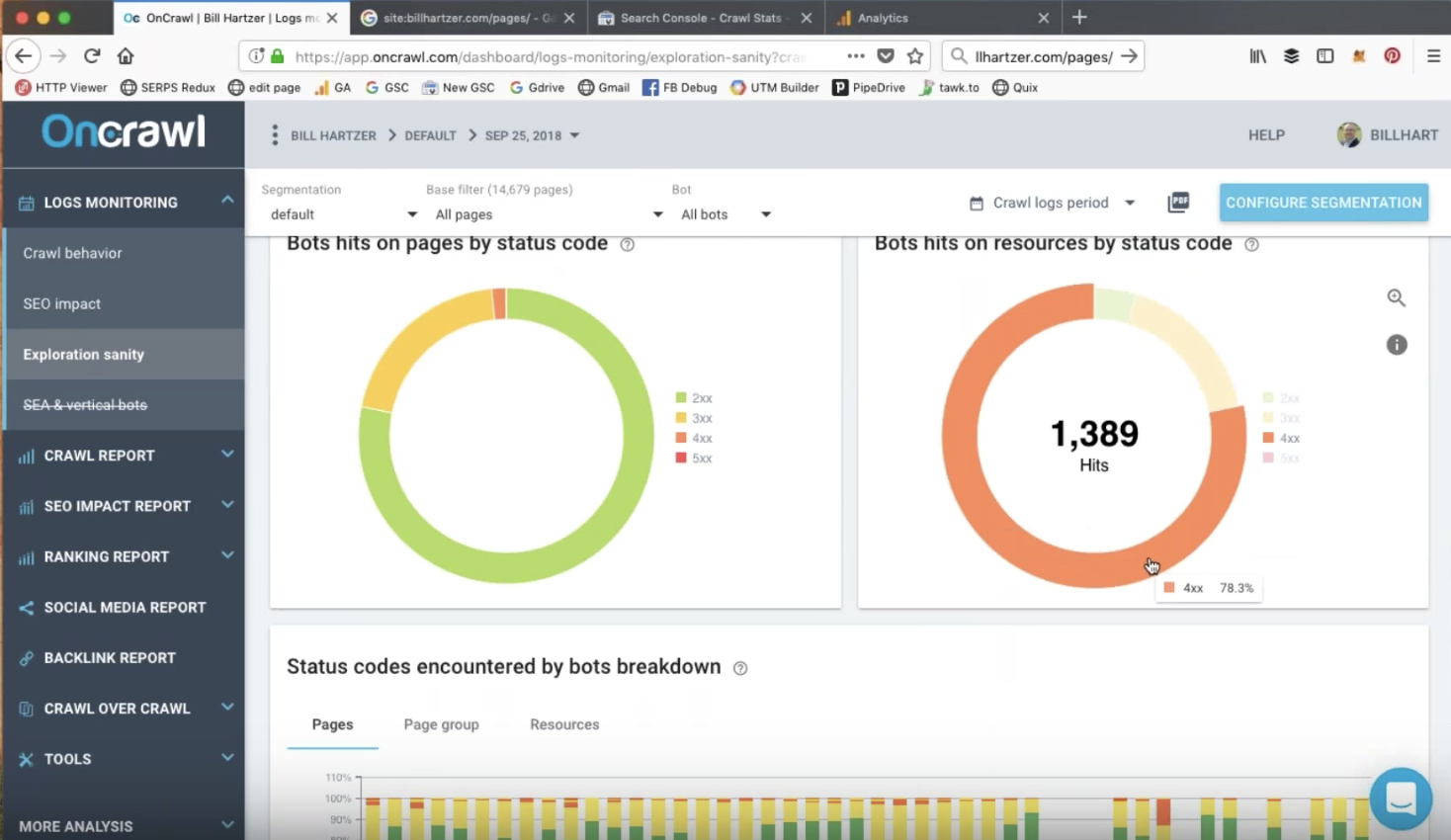

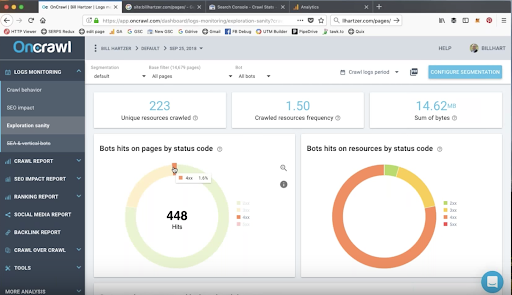

Bot hits on pages and resources by status code

Bots may be visiting URLs that return 404 or 410 errors. These can include resources such as CSS, JavaScript, PDF, or image files.

These are elements you definitely want to investigate during an audit. Redirecting these URLs and removing internal links to them can provide quick wins.

During an audit, it can be helpful to keep notes on elements that should be addressed, such as URLs that return status errors to bots.
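To build that list from raw logs, you can count bot hits returning 404 or 410 and group them by file extension, which separates broken resources from broken pages. A minimal sketch, assuming records are dicts with `path`, `status` (as a string), and `bot` (`None` for humans) fields:

```python
from collections import Counter
from pathlib import PurePosixPath
from urllib.parse import urlsplit


def error_hits_by_type(records):
    """Count bot hits answered with 404 or 410, grouped by file extension.

    URLs without an extension are grouped under "(page)".
    """
    counts = Counter()
    for rec in records:
        if rec["bot"] is None or rec["status"] not in ("404", "410"):
            continue
        path = urlsplit(rec["path"]).path  # drop any query string
        ext = PurePosixPath(path).suffix.lower() or "(page)"
        counts[ext] += 1
    return counts
```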

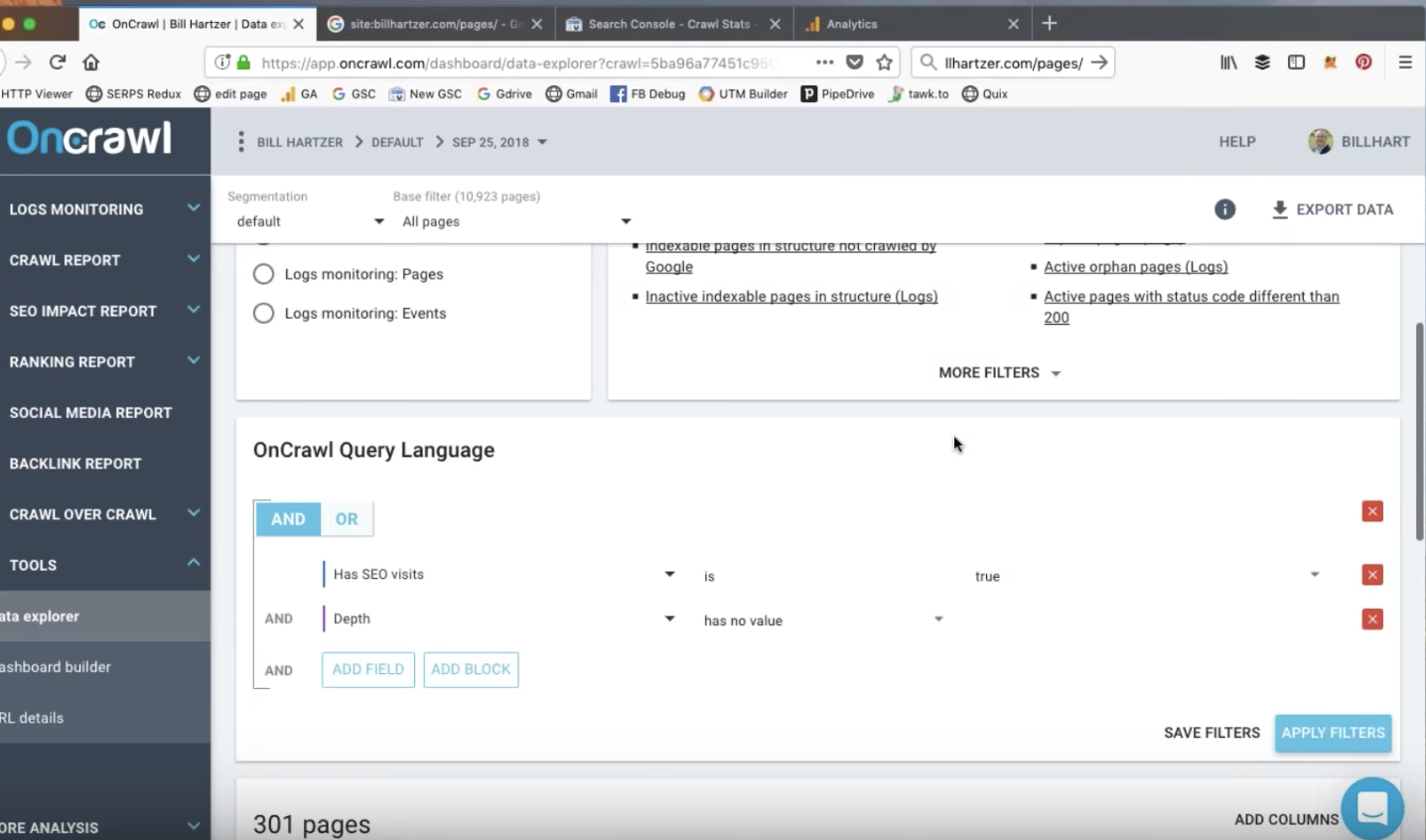

Data Explorer Reports: Custom reports

The Oncrawl Data Explorer offers quickfilters for commonly used reports, but you can also build your own reports from any criteria you choose. For example, you might want to survey SEO active pages with bounces and a high load time.
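Outside the Data Explorer, the same kind of report is a simple filter over per-page data. In this sketch, the `PageStats` fields and the 3,000 ms threshold are hypothetical; map them onto whatever columns your export actually provides.

```python
from dataclasses import dataclass


@dataclass
class PageStats:
    url: str
    seo_visits: int
    bounces: int
    load_time_ms: float


def slow_bouncing_active_pages(pages, max_load_ms=3000):
    """SEO active pages with at least one bounce and a load time above
    the threshold, slowest first."""
    matches = [
        p for p in pages
        if p.seo_visits > 0 and p.bounces > 0 and p.load_time_ms > max_load_ms
    ]
    return sorted(matches, key=lambda p: p.load_time_ms, reverse=True)
```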

Data Explorer Reports: Active Orphan Pages

By combining analytics, crawl, and log file data, Oncrawl can help you discover pages with organic, human visits that don’t always bring value to your site. The advantage of using data from log files is that you can discover every page on your site that has been visited, including pages that might not have Google Analytics code on them.

Bill was able to identify organic SEO visits on RSS feed pages, likely arriving through links from external sources. These are orphan pages on his site: no “parent” page links to them. They don’t add any value to his SEO strategy, but they still receive a few visits from organic traffic.

These pages are great candidates to start optimizing.
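The underlying logic is a set difference: URLs that receive SEO visits according to the logs, minus URLs found by following internal links in a crawl. A minimal sketch, assuming URLs are compared by path only and that a trailing slash doesn't make two URLs distinct (adjust `normalize` if it does on your site):

```python
from urllib.parse import urlsplit


def normalize(url: str) -> str:
    """Compare paths only, ignoring host, query string and trailing slash."""
    path = urlsplit(url).path or "/"
    return path.rstrip("/") or "/"


def active_orphan_pages(structure_urls, seo_visit_urls):
    """Pages with SEO visits that the crawl never reached through links."""
    linked = {normalize(u) for u in structure_urls}
    return sorted({normalize(u) for u in seo_visit_urls} - linked)
```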

Search analytics for keyword rankings

Ranking data can be drawn from Google Search Console. In the old version of Google Search Console, go to Search Traffic > Search Analytics to view Clicks, Impressions, CTR and Position for the past 90 days.

Oncrawl provides clear reports on how this information relates to the entire site, allowing you to compare the total number of pages on the site, the number of ranking pages, and the number of pages that receive clicks.

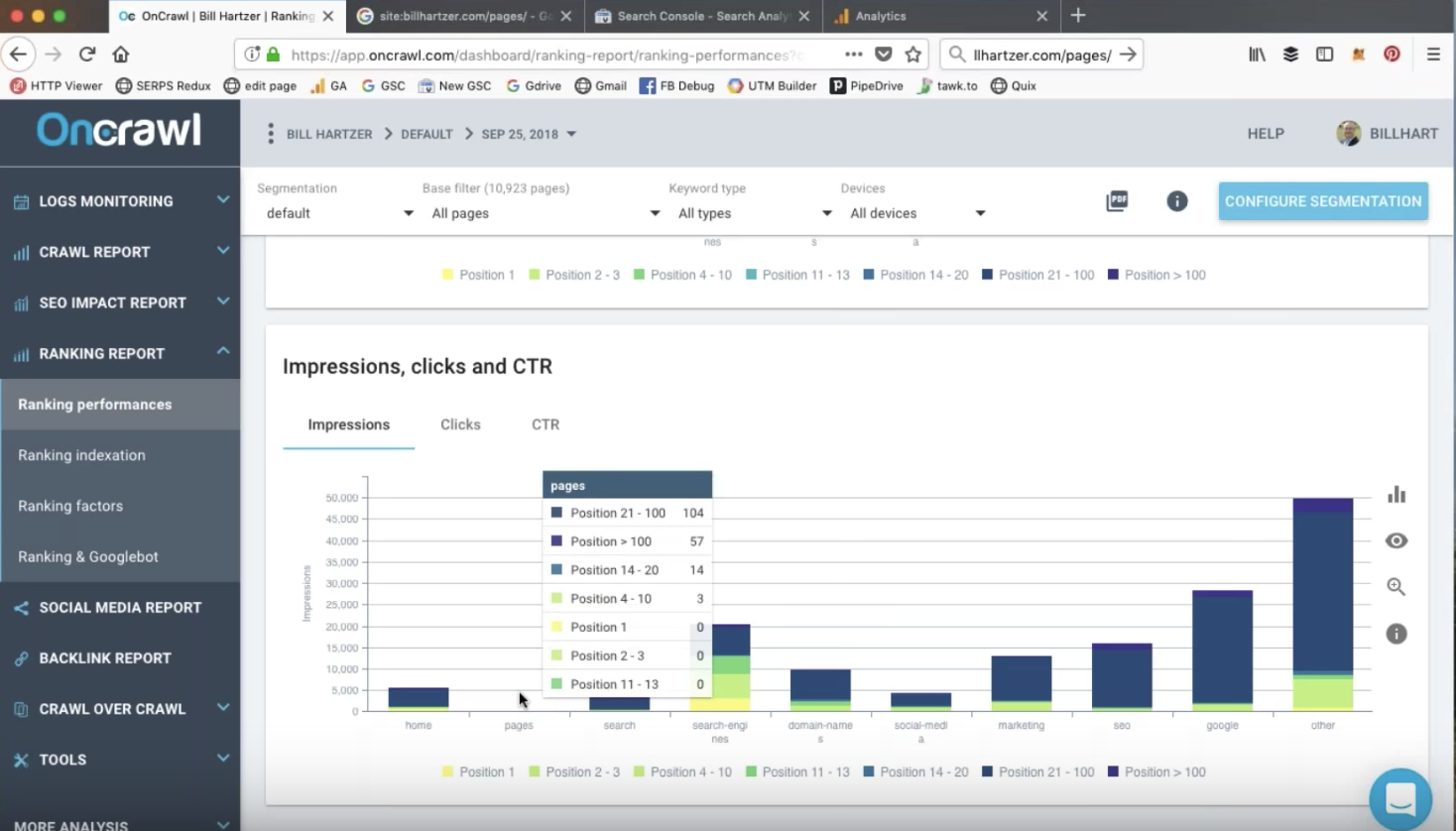

Impressions, CTRs, and Clicks

Site segmentation allows you to confirm, in a single glance, which types or groups of pages on your site are ranking, and on which page of the results.

In this audit, Bill uses Oncrawl’s metrics to spot the types of pages that tend to rank well. These are the types of pages he knows he should keep producing in order to increase traffic to the website.

Clicks on ranking pages are strongly correlated with ranking position: pages in positions beyond 10 are no longer on the first page of search results, at which point clicks drop off sharply for most keywords.
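You can check this drop-off on your own data by bucketing Search Analytics rows by results page and computing CTR (clicks divided by impressions) for each bucket. This sketch assumes rows of `(clicks, impressions, average position)` and 10 results per page, which is only an approximation of real SERP layouts.

```python
import math
from collections import defaultdict


def serp_page(position: float) -> int:
    """Results page for an average position, assuming 10 results per page."""
    return math.ceil(position / 10)


def ctr_by_serp_page(rows):
    """rows: (clicks, impressions, position) tuples.
    Returns {results page: clicks / impressions}."""
    clicks = defaultdict(int)
    impressions = defaultdict(int)
    for c, i, pos in rows:
        page = serp_page(pos)
        clicks[page] += c
        impressions[page] += i
    return {p: clicks[p] / impressions[p] for p in sorted(impressions) if impressions[p]}
```

Running the same function separately on each segment of pages shows which groups of pages earn clicks and which only collect impressions.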

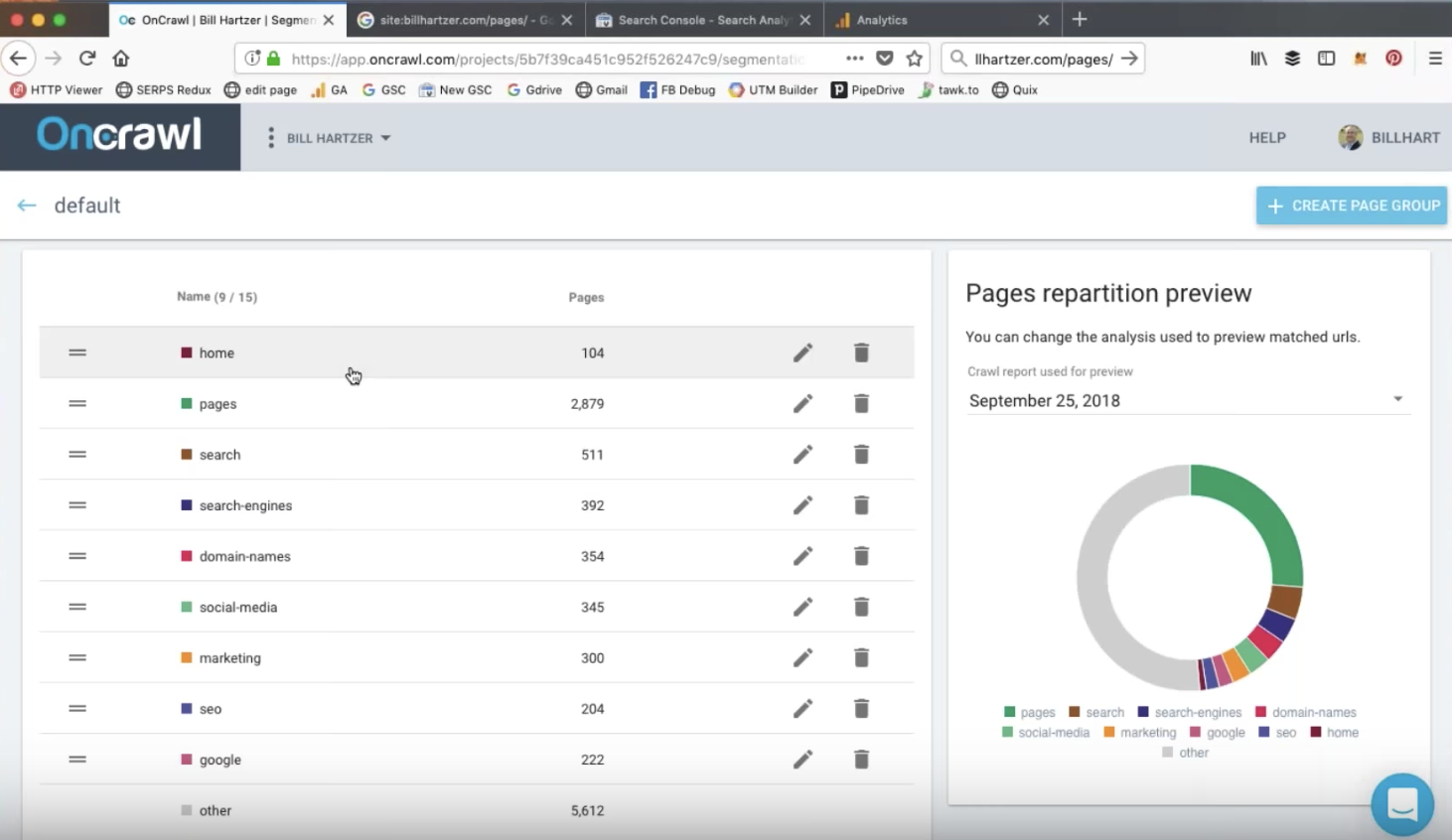

Website segmentation

Oncrawl’s segmentation is a way to group your pages into meaningful sets. While an automatic segmentation is provided, you can edit the filters, or create your own segmentations from scratch. Using the Oncrawl Query Language filters, you can include or exclude pages in a group based on many different criteria.

On the site Bill looks at in the webinar, the segmentation is based on the different directories on the website.
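A directory-based segmentation like this one boils down to grouping URLs by their first path segment. A rough equivalent outside Oncrawl, assuming the home page goes into a "(root)" group (the group name is arbitrary):

```python
from collections import Counter
from urllib.parse import urlsplit


def directory_segment(url: str) -> str:
    """First directory of the URL path, or "(root)" for the home page."""
    parts = [p for p in urlsplit(url).path.split("/") if p]
    return parts[0] if parts else "(root)"


def pages_per_segment(urls):
    """Number of URLs in each directory segment."""
    return Counter(directory_segment(u) for u in urls)
```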

Pages in structure > crawled > ranked > active

In the Oncrawl Ranking report, the “Pages in structure > crawled > ranked > active” chart can alert you to problems getting your pages ranked and visited.

This chart shows you:

- Pages in the structure: the number of pages that can be reached through the different links on your site

- Crawled: pages that Google has crawled

- Ranked: pages that have appeared in Google SERPs

- Active: pages that have received organic visits

Your audit should look at the reasons for the differences between the bars in this graph.
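To investigate those differences outside the chart, you can list which pages drop out at each step. A sketch, assuming you have four URL lists: pages found by crawling your links, pages hit by Googlebot in the logs, pages with impressions in Search Console, and pages with SEO visits.

```python
def ranking_funnel(structure, crawled, ranked, active):
    """Count pages at each stage and list pages lost between stages."""
    names = ["in structure", "crawled", "ranked", "active"]
    sets = [set(structure), set(crawled), set(ranked), set(active)]
    counts = {name: len(s) for name, s in zip(names, sets)}
    # Pages present in one stage but missing from the next.
    dropped = {
        f"{a} -> {b}": sorted(sa - sb)
        for (a, sa), (b, sb) in zip(zip(names, sets), zip(names[1:], sets[1:]))
    }
    return counts, dropped
```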

Note, however, that differences between the number of pages in the structure and the number of pages crawled may be intentional: for example, if you prevent Google from crawling certain pages with Disallow rules in your robots.txt file. This is something you want to verify during your audit.
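Python's standard library can help with that check: `urllib.robotparser` tells you which URLs a given robots.txt blocks for a given user agent. Be aware that its matching rules are simpler than Google's (for example, it applies the first matching rule rather than the most specific one), so treat the result as a first pass. The helper name below is hypothetical.

```python
from urllib.robotparser import RobotFileParser


def blocked_for_googlebot(robots_txt: str, urls):
    """Return the URLs that this robots.txt disallows for Googlebot."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [u for u in urls if not parser.can_fetch("Googlebot", u)]
```

Pages returned here that also appear in the "not crawled" gap are intentional exclusions; the remaining gap is what deserves a closer look.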

You can look into this sort of data in Oncrawl by clicking on the graph.

Key takeaways

Log files analysis helps you detect spikes in bot hits and monitor bots activity on a daily basis #oncrawlwebinar

Today’s webinar with @bhartzer pic.twitter.com/3DAC5d36j9

— Oncrawl (@Oncrawl) September 25, 2018

Key takeaways from this webinar include:

- Big changes in a website’s structure can produce big changes in crawl activity.

- Google’s free tools report data that is aggregated, averaged, or rounded in ways that can make it look inaccurate.

- Log files allow you to see real bot behavior and organic visits. Combined with crawl data and daily monitoring, they are a powerful tool for detecting spikes.

- Accurate data is necessary to understand what happened and why, and this can only be achieved by cross-analyzing analytics, crawl, ranking and, in particular, log file data in a tool like Oncrawl.

Missed it live? Watch the replay!

Even if you couldn’t make the live webinar, or if you couldn’t stay for the full session, you can still view the complete version.