Introduction to the coverage report and how to interpret the data

The Search Console Coverage Report shows which pages on your site have been indexed and lists the URLs that Googlebot had problems crawling or indexing.

The main page in the coverage report shows the URLs in your site grouped by status:

- Error: the page isn’t indexed. There are several possible reasons, including pages responding with a 404, soft 404 pages, and server errors.

- Valid with warnings: the page is indexed but has problems.

- Valid: the page is indexed.

- Excluded: the page isn’t indexed, usually because Google is following rules on the site that prevent it from being indexed, such as noindex meta tags or HTTP headers, robots.txt rules, or canonical tags.

This coverage report gives a lot more information than the old Google Search Console did. Google has really improved the data it shares, but some things still need improvement.

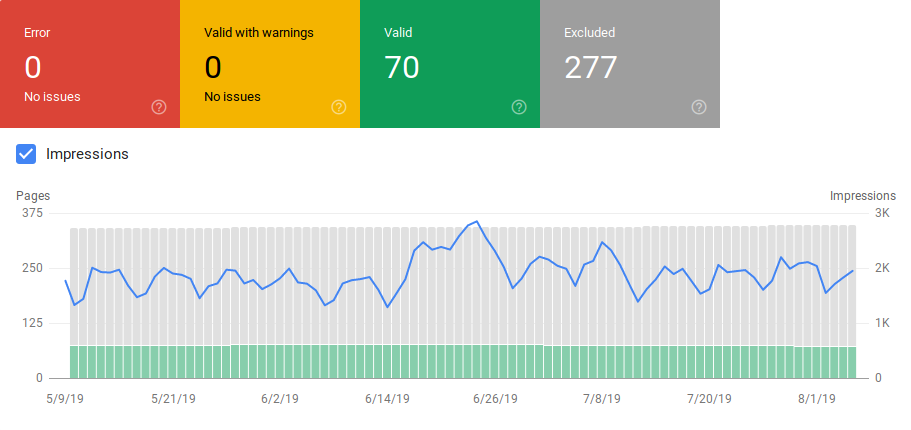

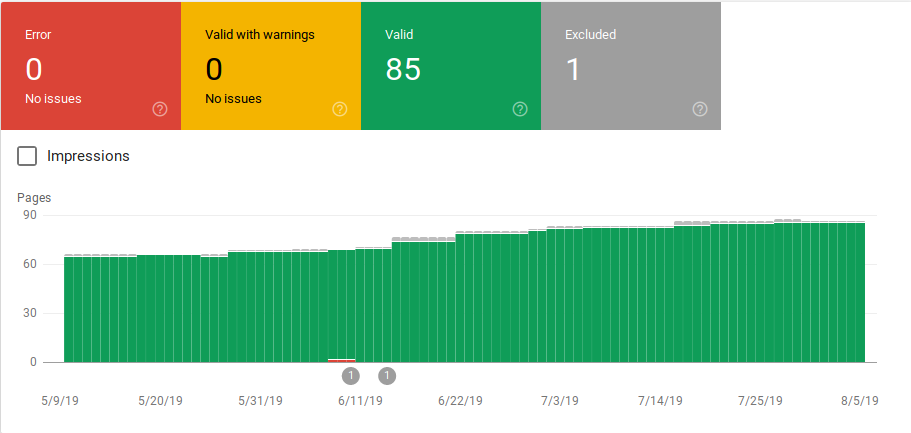

As you can see below, Google shows a graph with the number of URLs in each category. If errors increase suddenly, you can see it in the bars and even correlate it with impressions to check whether a rise in URLs with errors or warnings coincides with a drop in impressions.
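If you export the daily error counts and impressions for the same date range, a quick correlation check tells you whether the two moved together. The sketch below assumes both series are already aligned by date; the numbers are invented for illustration, and the `pearson` helper is a hypothetical name. A strong negative value is a hint worth investigating, not proof that the errors caused the drop.

```python
from math import sqrt

# Illustrative (made-up) daily values for the same seven dates:
# URLs in the Error category and search impressions from the Performance report.
error_counts = [12, 14, 13, 40, 55, 60, 58]
impressions = [5200, 5150, 5230, 4700, 4300, 4100, 4150]

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two series of the same length (at least 2 points)")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("a constant series has no defined correlation")
    return cov / (spread_x * spread_y)

r = pearson(error_counts, impressions)
# A value close to -1 means impressions tended to fall on the days errors rose.
print(f"r = {r:.2f}")
```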

After a site launches or you create new sections, you want to see the count of valid indexed pages increase. It can take a few days for Google to index new pages, but you can use the URL Inspection tool to request indexing and shorten the time it takes Google to find your new page.

However, if the number of valid URLs declines or errors spike suddenly, it is important to identify the URLs in the Error section and fix the issues listed in the report. Google provides a good summary of action items to carry out when errors or warnings increase.

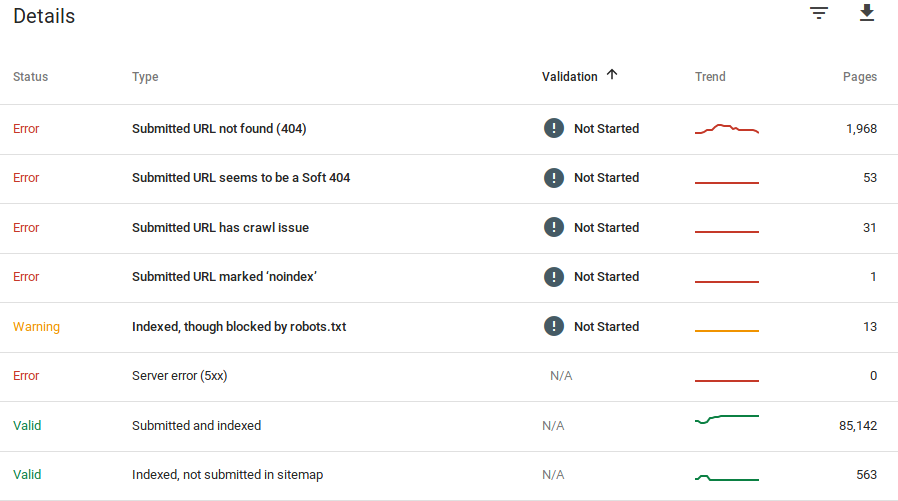

Google provides information about what the errors are and how many URLs have that problem:

Remember that Google Search Console doesn’t show 100% accurate information. In fact, there have been several reports of bugs and data anomalies. Furthermore, Google Search Console takes time to update; the data has been known to lag 16 to 20 days behind. Also, as you can see in the image above, the report sometimes lists more than 1,000 pages in the Error or Warning categories, but it only lets you view and download a sample of 1,000 URLs to audit and check.

Nevertheless, this is a great tool for finding indexation issues on your site:

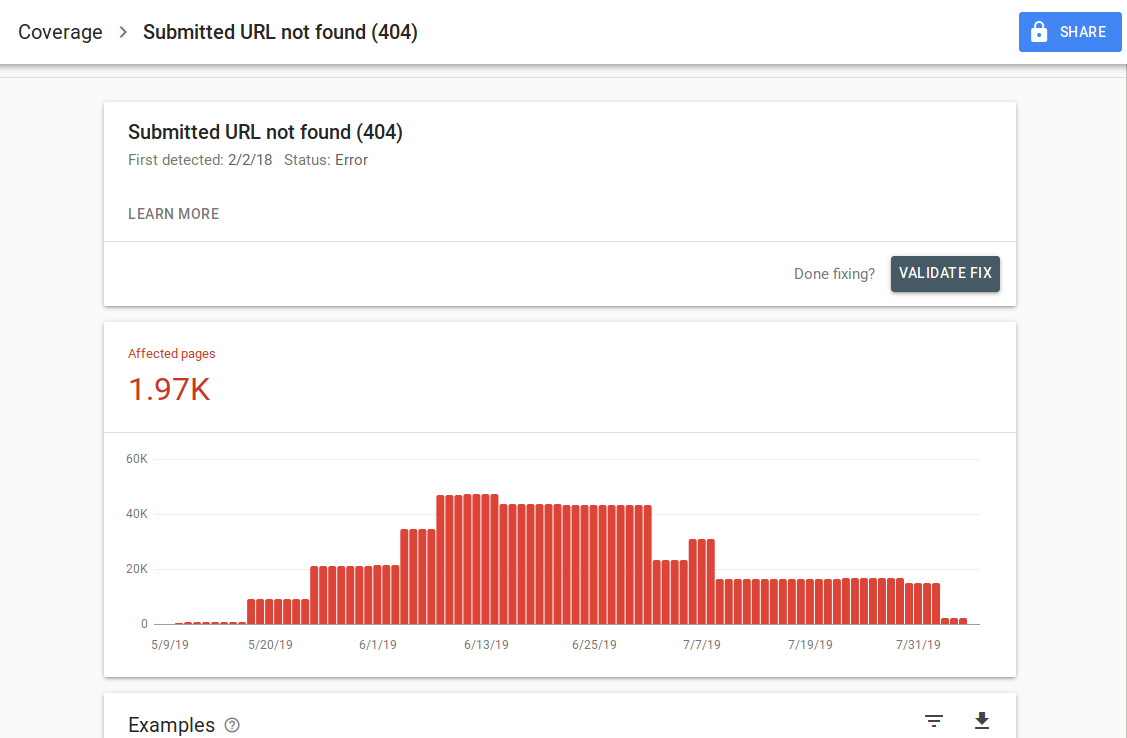

When you click on a specific error, you’ll be able to see the details page that lists examples of URLs:

As you can see in the image above, this is the details page for all of the URLs responding with 404. Each report has a “Learn More” link that takes you to a Google documentation page with details about that specific error. Google also provides a graph that shows the count of affected pages over time.

You can click on each URL to inspect it, which is similar to the “Fetch as Googlebot” feature in the old Google Search Console. You can also test whether the page is blocked by your robots.txt file.
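For a quick bulk check of many URLs outside Search Console, Python's standard `urllib.robotparser` can approximate the same test. This is a minimal sketch: the robots.txt rules and URLs are hypothetical, and the standard-library parser uses simple prefix matching in file order, so it does not implement Google's `*`/`$` wildcards or longest-match precedence. Treat Search Console's own test as the authority.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; in practice, paste or fetch your own file.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
Disallow: /cart/
"""

def is_crawl_allowed(robots_txt, url, user_agent="Googlebot"):
    """Approximate whether user_agent may crawl url under these rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse() also marks the rules as loaded
    return parser.can_fetch(user_agent, url)

for url in ["https://www.example.com/blog/post-1",
            "https://www.example.com/search?q=shoes",
            "https://www.example.com/cart/checkout"]:
    print(url, "allowed" if is_crawl_allowed(ROBOTS_TXT, url) else "blocked")
```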

After you fix the URLs, you can ask Google to validate the fix so that the error disappears from your report. Prioritize fixing issues whose validation state is “failed” or “not started”.

It is important to mention that you should not expect all of the URLs on your site to be indexed. Google states that the webmaster’s goal should be to get all of the canonical URLs indexed. Duplicate or alternate pages will be categorized as Excluded since their content is similar to that of the canonical page.

It is normal for sites to have a number of pages in the Excluded category. Most websites have several pages with noindex meta tags or pages blocked through robots.txt. When Google identifies a duplicate or alternate page, make sure that page has a canonical tag pointing to the correct URL, and check that the canonical equivalent appears in the Valid category.

Google has included a dropdown filter at the top left of the report so you can filter it by all known pages, all submitted pages, or the URLs in a specific sitemap. The default view, “All known pages”, includes every URL Google has discovered. “All submitted pages” includes the URLs you’ve submitted through a sitemap. If you’ve submitted several sitemaps, you can filter by the URLs in each one.


Errors, Warnings, Valid and Excluded URLs

Error

- Server error (5xx): The server returned a 5xx error when Googlebot tried to crawl the page.

- Redirect error: Googlebot encountered a redirect error when crawling the URL, either because the redirect chain was too long, there was a redirect loop, the URL exceeded the maximum URL length, or there was a bad or empty URL in the redirect chain.

- Submitted URL blocked by robots.txt: The URLs in this list are blocked by your robots.txt file.

- Submitted URL marked ‘noindex’: The URLs in this list have a ‘noindex’ directive in a meta robots tag or an X-Robots-Tag HTTP header.

- Submitted URL seems to be a Soft 404: A soft 404 happens when a page that doesn’t exist (for example, one that has been removed) displays a ‘page not found’ message to the user but fails to return an HTTP 404 status code. Soft 404s also happen when pages are redirected to irrelevant pages, for example when a removed page redirects to the home page instead of returning a 404 status code or redirecting to a relevant page.

- Submitted URL returns unauthorized request (401): The page submitted for indexing is returning a 401 unauthorized HTTP response.

- Submitted URL not found (404): The page responded with a 404 Not Found error when Googlebot tried to crawl the page.

- Submitted URL has crawl issue: Googlebot experienced a crawl error on these pages that doesn’t fall into any of the other categories. You’ll have to check each URL to determine what the problem could have been.
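The redirect errors above can be pre-screened before you request validation. The sketch below follows chains through a hypothetical redirect map (as you might build from a crawler export) and reports the first problem it finds. `MAX_HOPS` and `MAX_URL_LENGTH` are assumed thresholds for flagging, not official Google limits, and `diagnose_redirect` is a hypothetical helper.

```python
# Hypothetical redirect map (URL -> Location target), e.g. built from a crawl export.
REDIRECTS = {
    "/old-a": "/old-b",
    "/old-b": "/new",
    "/loop-1": "/loop-2",
    "/loop-2": "/loop-1",
    "/empty": "",
}

MAX_HOPS = 10          # assumed hop limit to flag; adjust as needed
MAX_URL_LENGTH = 2048  # assumed length limit; not an official Google figure

def diagnose_redirect(url, redirects=REDIRECTS, max_hops=MAX_HOPS,
                      max_url_length=MAX_URL_LENGTH):
    """Follow a redirect chain and report the first redirect error found."""
    chain = [url]
    current = url
    while current in redirects:
        target = redirects[current]
        if not target:
            return "bad or empty URL in chain", chain
        if len(target) > max_url_length:
            return "URL exceeds max length", chain + [target]
        if target in chain:
            return "redirect loop", chain + [target]
        chain.append(target)
        if len(chain) - 1 > max_hops:
            return "chain too long", chain
        current = target
    return "ok", chain

for start in ["/old-a", "/loop-1", "/empty"]:
    print(start, diagnose_redirect(start))
```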

Warning

- Indexed, though blocked by robots.txt: Google indexed the page even though it is blocked by your robots.txt file, typically because external links point to it. Google marks these URLs as warnings because it isn’t sure whether the page should actually be blocked from showing up in search results. If you want to keep a page out of search results, use a ‘noindex’ meta tag or a noindex HTTP response header instead, and remove the robots.txt block so that Googlebot can crawl the page and see the directive.

If Google is correct and the URL was incorrectly blocked, you should update your robots.txt file to allow Google to crawl the page.
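Before lifting a robots.txt block in favor of noindex, it helps to confirm the directive is actually present in the header or the HTML. This is a minimal standard-library sketch; it assumes you already have each page's response headers and HTML (for example, from a crawler export), and `RobotsMetaParser` and `has_noindex` are hypothetical names. It only looks for the literal `noindex` value.

```python
from html.parser import HTMLParser

class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attrs = dict(attrs)
        if (attrs.get("name") or "").lower() in ("robots", "googlebot"):
            content = attrs.get("content") or ""
            self.directives.extend(d.strip().lower() for d in content.split(","))

def has_noindex(headers, html):
    """True if an X-Robots-Tag header or a robots meta tag contains noindex."""
    header_values = [v.lower() for k, v in headers.items() if k.lower() == "x-robots-tag"]
    if any("noindex" in value for value in header_values):
        return True
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives

print(has_noindex({"X-Robots-Tag": "noindex, nofollow"}, "<html></html>"))
print(has_noindex({}, '<meta name="robots" content="noindex, follow">'))
```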

Valid

- Submitted and indexed: URLs that you submitted to Google for indexing through your sitemap.xml and that were indexed.

- Indexed, not submitted in sitemap: The URL was discovered by Google and indexed, but it wasn’t included in your sitemap. It is recommended to update your sitemap and include every page that you want Google to crawl and index.
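To find the URLs that belong in the “Indexed, not submitted in sitemap” bucket, you can compare an exported list of indexed URLs with the `<loc>` entries in your sitemap. A minimal sketch, assuming a standard sitemaps.org `urlset` file; the sitemap content and URL list are hypothetical. Review the result before adding anything: not every indexed URL (parameter variants, for instance) belongs in a sitemap.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# Hypothetical sitemap content and exported list of indexed URLs.
SITEMAP_XML = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/</loc></url>
  <url><loc>https://www.example.com/services/</loc></url>
</urlset>"""

indexed_urls = [
    "https://www.example.com/",
    "https://www.example.com/services/",
    "https://www.example.com/blog/new-post/",
]

def sitemap_urls(xml_text):
    """Return the set of <loc> values in a sitemap urlset."""
    root = ET.fromstring(xml_text)
    return {loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)}

# Indexed URLs that are missing from the sitemap
missing = sorted(set(indexed_urls) - sitemap_urls(SITEMAP_XML))
print(missing)
```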

Excluded

- Excluded by ‘noindex’ tag: When Google tried to index the page it found a ‘noindex’ meta robots tag or HTTP header.

- Blocked by page removal tool: Someone has submitted a request to Google to not index this page by using the URL removal request in Google Search Console. If you want this page to be indexed, log into Google’s Search Console and remove it from the list of removed pages.

- Blocked by robots.txt: The robots.txt file has a line that excludes the URL from being crawled. You can check which line is doing this by using the robots.txt tester.

- Blocked due to unauthorized request (401): As in the Error category, the pages here return a 401 HTTP status code.

- Crawl anomaly: This is a catch-all category. URLs here responded with 4xx- or 5xx-level response codes, which prevent the page from being indexed.

- Crawled – currently not indexed: Google doesn’t provide a reason why the URL was not indexed and suggests resubmitting it for indexing. However, it is important to check whether the page has thin or duplicate content, is canonicalized to a different page, has a noindex directive, shows signs of a bad user experience in your metrics, loads slowly, etc. There are several reasons Google may not want to index a page.

- Discovered – currently not indexed: The page was found but Google has not included it in its index. You can submit the URL for indexation to speed up the process like we mentioned above. Google states that the typical reason that this happens is that the site was overloaded and Google rescheduled the crawl.

- Alternate page with proper canonical tag: Google didn’t index this page because it has a canonical tag pointing to a different URL. Google has followed the canonical rule and has correctly indexed the canonical URL. If you meant for this page not to be indexed, then there is nothing to fix here.

- Duplicate without user-selected canonical: Google has found duplicates for the pages listed in this category, and none of them use canonical tags. Google has selected a different version as the canonical. Review these pages and add a canonical tag pointing to the correct URL.

- Duplicate, Google chose different canonical than user: URLs in this category have been discovered by Google without an explicit crawl request. Google found them through external links and has determined that another page makes a better canonical, so it hasn’t indexed these pages. Google recommends marking these URLs as duplicates of the canonical.

- Not found (404): These pages respond with a 404 error when Googlebot tries to access them. Google states that these URLs were not submitted; they were found through external links pointing to them. It is a good idea to redirect these URLs to similar pages to take advantage of the link equity and to make sure users land on a relevant page.

- Page removed because of legal complaint: Someone has complained about these pages due to legal issues, such as a copyright violation. You can file an appeal against the legal complaint.

- Page with redirect: These URLs are redirecting, therefore they are excluded.

- Soft 404: As explained above, these URLs are excluded because they should be responding with a 404. Check the pages and make sure that any page showing a ‘not found’ message returns a 404 HTTP status code.

- Duplicate, submitted URL not selected as canonical: Similar to “Google chose different canonical than user” however, the URLs in this category were submitted by you. It is a good idea to check your sitemaps and make sure there are no duplicate pages being included.
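Several of these Excluded reasons come down to the canonical tag, so when auditing a sample of URLs it helps to see what each page declares. A minimal sketch with the standard library: it assumes you have each page's HTML, uses hypothetical names, and compares URLs as exact strings after resolving relative hrefs, so differences such as trailing slashes or letter case are not normalized.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin

class CanonicalParser(HTMLParser):
    """Records the href of the first <link rel="canonical"> tag."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        rel = (attrs.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel and self.canonical is None:
            self.canonical = attrs.get("href")

def canonical_status(page_url, html):
    """Classify a page as having no canonical, a self-canonical, or another canonical."""
    parser = CanonicalParser()
    parser.feed(html)
    if not parser.canonical:
        return "no canonical tag", None
    target = urljoin(page_url, parser.canonical)  # resolve relative hrefs
    if target == page_url:
        return "self-referencing canonical", target
    return "canonicalized to another URL", target

print(canonical_status("https://www.example.com/shoes?color=red",
                       '<link rel="canonical" href="/shoes">'))
```

Pages reported as “no canonical tag” are the ones to review for “Duplicate without user-selected canonical”.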

How to use the data and action items for improving the site

Working at an agency, I have access to a lot of different sites and their coverage reports. I’ve spent time analyzing the errors that Google reports in the different categories.

It has been helpful for finding issues with canonicalization and duplicate content; however, you sometimes encounter discrepancies such as the one reported by @jroakes:

Looks like Google Search Console > URL Inspection > Live Test incorrectly reports all JS and CSS files as Crawl allowed: No: blocked by robots.txt. Test about 20 files across 3 domains. pic.twitter.com/fM3WAcvK8q

— JR Oakes (@jroakes) July 16, 2019

AJ Kohn wrote a great article soon after the new Google Search Console became available, in which he explains that the real value of the data lies in using it to paint a picture of the health of each type of content on your site:

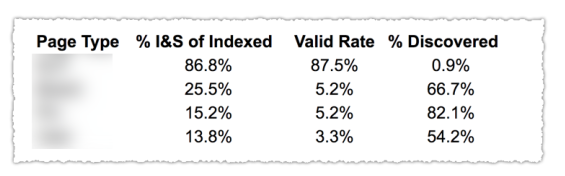

As you can see in the image above, the URLs from the different categories in the coverage report have been classified by page template, such as blog, service page, etc. Using separate sitemaps for different types of URLs can help with this task, since Google lets you filter coverage information by sitemap. He then added three columns: % of Indexed and Submitted pages, Valid Rate, and % of Discovered.
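One way to set up per-template sitemaps is to group your URLs by path prefix and write one `urlset` per group. This is a sketch under stated assumptions: `TEMPLATE_RULES` is a hypothetical mapping you would replace with your own site structure, and the output omits optional tags such as `<lastmod>`.

```python
import xml.etree.ElementTree as ET
from collections import defaultdict
from urllib.parse import urlparse

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

# Hypothetical rules mapping a path prefix to a page template.
TEMPLATE_RULES = [("/blog/", "blog"), ("/services/", "service")]

def page_template(url):
    """Return the template name for a URL, or "other" if no rule matches."""
    path = urlparse(url).path
    for prefix, template in TEMPLATE_RULES:
        if path.startswith(prefix):
            return template
    return "other"

def sitemaps_by_template(urls):
    """Return {template: sitemap XML string}, one sitemap per page template."""
    groups = defaultdict(list)
    for url in urls:
        groups[page_template(url)].append(url)
    sitemaps = {}
    for template, members in groups.items():
        urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
        for url in members:
            ET.SubElement(ET.SubElement(urlset, "url"), "loc").text = url
        sitemaps[template] = ET.tostring(urlset, encoding="unicode")
    return sitemaps

urls = ["https://www.example.com/blog/a/",
        "https://www.example.com/services/seo/",
        "https://www.example.com/about/"]
for template, xml_text in sitemaps_by_template(urls).items():
    print(template, xml_text)
```

Each string can be saved as its own file (for example, one for blog posts and one for service pages) and submitted separately, which makes the sitemap filter in the coverage report line up with your templates.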

This table gives you a great overview of your site’s health. If you want to dig into the different sections, I recommend reviewing the reports and double-checking the errors Google presents.

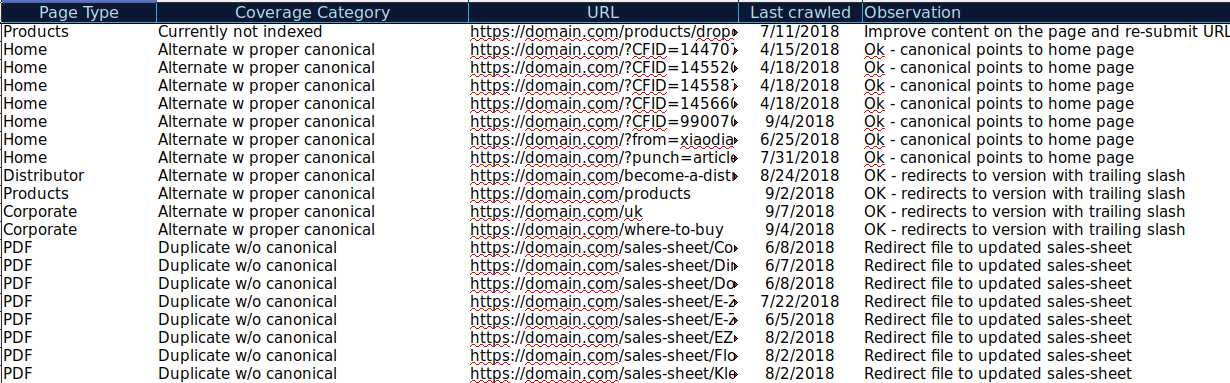

You can download all of the URLs listed in the different categories, use Oncrawl to check their HTTP status, canonical tags, etc., and create a spreadsheet such as this one:

Organizing your data like this helps you keep track of issues and add action items for URLs that need to be improved or fixed. You can also check off URLs that are correct and need no action, such as URLs with parameters that have a correct canonical tag implementation.


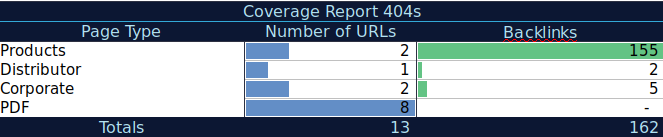

You can even add more information to this spreadsheet from other sources such as Ahrefs, Majestic, and Google Analytics using Oncrawl integrations. This lets you pull link data as well as traffic and conversion data for each URL in Google Search Console. All of this data can help you decide what to do with each page. For example, if you have a list of pages returning 404s, you can match them against backlink data to determine whether you are losing link equity from domains linking to broken pages on your site. Or you can check how much organic traffic your indexed pages get, identify indexed pages that get none, and work on optimizing them (improving content and usability) to help drive more traffic to them.
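Matching 404 pages against backlink data is essentially a join on the URL column. A minimal sketch, assuming two hypothetical exports (404 URLs from the coverage report and referring-domain counts per URL from a backlink tool) that use identically formatted URLs; `broken_pages_with_links` is a hypothetical helper.

```python
# Hypothetical exports: 404 URLs from the Coverage report, and the number of
# referring domains per URL from a backlink tool such as Ahrefs or Majestic.
not_found_urls = ["/old-guide/", "/typo-page/", "/retired-product/"]
referring_domains = {"/old-guide/": 14, "/retired-product/": 3, "/blog/": 50}

def broken_pages_with_links(not_found, backlinks):
    """404 URLs that still have referring domains, most-linked first."""
    linked = [(url, backlinks.get(url, 0)) for url in not_found]
    linked = [(url, count) for url, count in linked if count > 0]
    return sorted(linked, key=lambda pair: pair[1], reverse=True)

for url, domains in broken_pages_with_links(not_found_urls, referring_domains):
    print(f"{url}: {domains} referring domains, consider a redirect")
```

The pages at the top of this list are the best candidates for a redirect to a similar, live page.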

With this extra data, you can create a summary table on another sheet. You can use the formula =COUNTIF(range, criteria) to count the URLs of each page type (this table can complement the one AJ Kohn suggested above). You could also use =SUMIF(range, criteria, [sum_range]) to total the backlinks, visits, or conversions you extracted for each URL and show them in your summary table. You would get something like this:

I really like working with summary tables that can give me a summarized view of the data and can help me identify the sections I need to focus on fixing first.
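If you prefer to build the COUNTIF/SUMIF summary table described above in a script rather than a spreadsheet, the same aggregation takes a few lines of Python. The rows below are hypothetical stand-ins for the audit spreadsheet (URL, page type, coverage status, visits); the `Counter` over page type plays the role of COUNTIF, the running totals play the role of SUMIF, and counting (page type, status) pairs is the equivalent of a two-criteria COUNTIFS.

```python
from collections import Counter, defaultdict

# Hypothetical rows from the audit spreadsheet: (URL, page type, status, visits)
rows = [
    ("https://www.example.com/blog/a/", "blog", "Valid", 120),
    ("https://www.example.com/blog/b/", "blog", "Excluded", 0),
    ("https://www.example.com/services/seo/", "service", "Valid", 300),
    ("https://www.example.com/services/ppc/", "service", "Error", 15),
]

# Like =COUNTIF(page_type_range, "blog"), computed for every page type at once
url_counts = Counter(page_type for _, page_type, _, _ in rows)

# Like =SUMIF(page_type_range, "blog", visits_range) for every page type
visit_totals = defaultdict(int)
for _, page_type, _, visits in rows:
    visit_totals[page_type] += visits

# Like =COUNTIFS with two criteria: page type and coverage status
status_counts = Counter((page_type, status) for _, page_type, status, _ in rows)

for page_type in sorted(url_counts):
    print(page_type, url_counts[page_type], visit_totals[page_type])
```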

Final thoughts

What you need to ask yourself when fixing issues and looking at the data in this report is: Is my site optimized for crawling? Are my indexed and valid pages increasing or decreasing? Are pages with errors increasing or decreasing? Am I letting Google spend time on the URLs that bring the most value to my users, or is it finding a lot of worthless pages? With the answers to these questions, you can start making improvements so that Googlebot spends its crawl budget on pages that provide value to your users instead of worthless pages. You can use your robots.txt to improve crawl efficiency, remove worthless URLs when possible, or use canonical or noindex tags to handle duplicate content.

Google keeps adding features and improving data accuracy across the reports in Google Search Console, so hopefully we will continue to see more data in each category of the coverage report, as well as in the other reports.