Your content marketing team has one main responsibility: create content that drives results. Their job requires a mix of creativity and logic: finding the right words to educate and persuade your audience, and using the right data to optimize the content for performance.

As a marketing manager, it would be hard to ask your content creators to also be responsible for your technical SEO. Technical SEO requires a deep understanding of web development, a complex skill to master on its own.

While you can’t ask your content creators to optimize your technical SEO, they should understand its basics. This makes it much easier for your content team to communicate with your web developers and increase the performance of the content they produce.

Let’s take a look at the basics of technical SEO your content team should grasp in order to understand how their perfectly written content can gain even more organic readers.

What’s Technical SEO?

In the simplest terms, technical SEO is the technical side of SEO—it encompasses every task that requires knowledge of web development, of how websites work, and of how Google crawls and indexes them.

To be more specific, technical SEO includes any optimization you make to help Google crawl and index your web pages correctly.

Crawling and Indexing

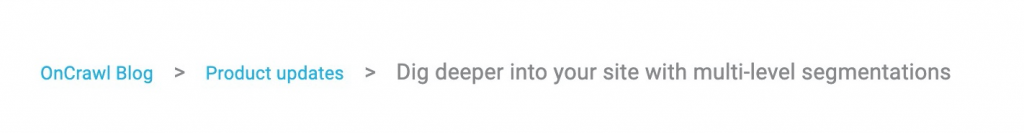

All web pages that show up in Google results (also known as SERPs, or search engine results pages) first have to exist in Google’s index. This index is the “directory” where Google lists all the pages they analyze when ranking a list of pages for a query that gets typed in the search box.

Before Google can add a page to its index, Google’s bots (the algorithms it uses to scan a web page) must be able to access it. As Google’s support documentation explains:

“There isn’t a central registry of all web pages, so Google must constantly search for new pages and add them to its list of known pages. Once Google discovers a page URL, it visits, or crawls, the page to find out what’s on it.”

In other words, crawling is when Google’s bots scan a page. Note that I said “scan” and not “index”: crawling only refers to the bots’ ability to scan a page; indexing comes later.

The first step of a correct technical SEO implementation is to make sure your website is “crawlable;” that is, Google should be able to crawl all your site’s pages.

There are many reasons why a website or page wouldn’t be crawlable, including:

- The server is down

- The URL is broken

- There’s a problem loading the site/page

- No other pages link to it

Some types of crawling issues tend to be rare as they are relatively easy to spot. Still, you should never discount crawling in your analysis: other issues can be hard to find, such as orphan pages that you created but Google can’t find, pages that are redirected in circles, or parts of a site that can potentially create an infinite number of pages for Google to crawl, such as archives for years with no content.
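To make "redirected in circles" concrete, here is a minimal sketch of how a redirect loop could be detected. The `redirects` mapping and its URLs are hypothetical; in practice the data would come from a crawl export, which this sketch does not fetch.

```python
def find_redirect_loop(start, redirects):
    """Follow redirects from `start`; return the loop as a list of URLs, or None."""
    seen = []
    url = start
    while url in redirects:
        if url in seen:
            # We are back at a URL we already visited: report the cycle.
            return seen[seen.index(url):] + [url]
        seen.append(url)
        url = redirects[url]
    return None  # The chain ended on a page that does not redirect.


# Hypothetical redirect map: source URL -> target URL.
redirects = {
    "/old-post": "/new-post",  # a normal, single redirect
    "/a": "/b",
    "/b": "/c",
    "/c": "/a",                # closes a loop: /a -> /b -> /c -> /a
}

print(find_redirect_loop("/old-post", redirects))
print(find_redirect_loop("/a", redirects))
```

A bot caught in a loop like the second one never reaches a real page, which is why these URLs end up uncrawled.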

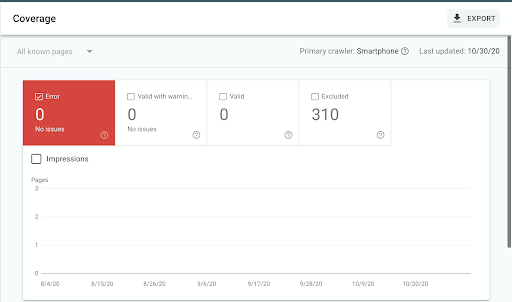

To find crawling issues, you can use Google Search Console (GSC), which works like Google’s representative for your website: it reports the technical issues Google runs into on your site.

In GSC, go to “Coverage Report” and check the Errors column.

You can double-check any crawling issues by using an SEO tool that includes an SEO crawler, like Oncrawl. SEO crawlers flag pages that return 4xx or 5xx HTTP status codes. Codes in the 400s indicate a problem with the request (for example, a 404 means the page couldn’t be found), while codes in the 500s indicate a server error.
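As a rough illustration of how status codes group into families, the sketch below sorts a handful of crawl results. The `crawl_results` data is made up for the example, and the labels are my own shorthand rather than the wording any particular tool uses.

```python
def describe_status(code):
    """Map an HTTP status code to the family it belongs to."""
    if 200 <= code < 300:
        return "ok"
    if 300 <= code < 400:
        return "redirect"
    if 400 <= code < 500:
        return "client error (4xx)"
    if 500 <= code < 600:
        return "server error (5xx)"
    return "other"


# Hypothetical crawl output: URL -> status code returned by the server.
crawl_results = {
    "/blog/technical-seo": 200,
    "/blog/old-url": 301,
    "/blog/deleted-post": 404,
    "/checkout": 503,
}

# Keep only the pages a crawler would report as errors.
errors = {
    url: describe_status(code)
    for url, code in crawl_results.items()
    if code >= 400
}
print(errors)
```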

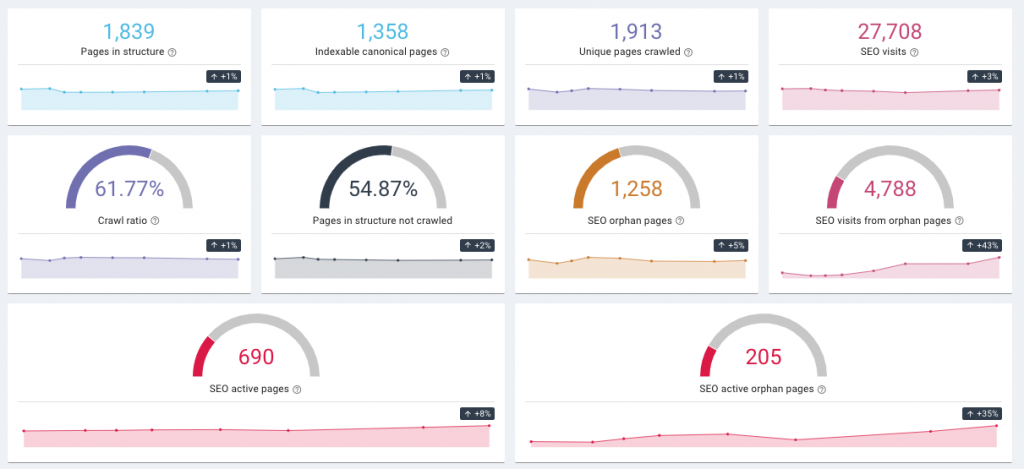

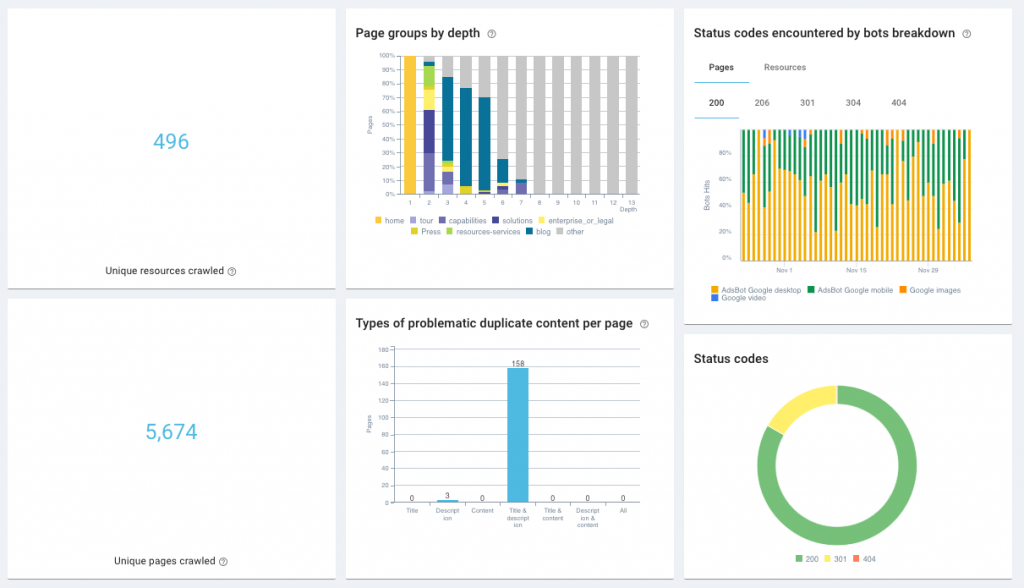

Oncrawl SEO Impact Report

Oncrawl Health Dashboard

After Google crawls a page, it doesn’t index it immediately. Indexing happens when Google decides to add a page to their index, the same index they consult when they rank a page for the SERPs. As they state:

“Google analyzes the content of the page, catalogs images and video files embedded on the page, and otherwise tries to understand the page. This information is stored in the Google index, a huge database stored in many, many (many!) computers.”

To be clear: any page that Google’s bots can crawl will be crawled, but we can’t say the same about indexing. To get a page indexed, you need to make it clear that Google should index it. Here’s how:

- Avoid any server errors. The best way to have a page indexed is to make sure it is crawled in the first place, and avoiding server errors is the best way to do so. Check GSC’s Coverage report, as shown previously.

- Add internal links. All your pages should link to each other at least once. Use relevant anchor text that describes the page’s content properly (e.g., if I were to link to this post, I’d use the anchor text technical SEO).

- Create and upload an XML sitemap. A sitemap is like a brochure that presents your site’s pages to Google’s bots. According to a Google engineer, XML sitemaps are the “second most important source” for finding URLs; internal links seem to be the first.

- Avoid indications that Google should stay away from the page. These can include instructions not to crawl the page in the site’s guide for bots, the robots.txt file, or “nofollow” attributes on links pointing to the page.

- Make sure that you’re not telling Google to index a different page. Pages can suggest to Google that another page would be a better choice to index the same content, as we’ll see later. It can send Google to a different page with a redirect, or it can also directly tell Google not to index it with a “noindex” tag. Pages that do any of this are not considered indexable.
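The last two checks in the list above can be sketched in a few lines of Python using only the standard library. This is a simplified illustration, not how Google evaluates pages: the example.com URLs, the `ROBOTS_TXT` rules, and the HTML snippets are all made up, and the `indexability` helper is a hypothetical name.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


class IndexSignals(HTMLParser):
    """Collects the robots meta tag and canonical link from a page's HTML."""

    def __init__(self):
        super().__init__()
        self.noindex = False
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            if "noindex" in (attrs.get("content") or "").lower():
                self.noindex = True
        elif tag == "link" and (attrs.get("rel") or "").lower() == "canonical":
            self.canonical = attrs.get("href")


def indexability(url, html, robots_txt):
    """Return a short label describing why a page is or is not indexable."""
    robots = RobotFileParser()
    robots.parse(robots_txt.splitlines())
    if not robots.can_fetch("Googlebot", url):
        return "blocked by robots.txt"
    signals = IndexSignals()
    signals.feed(html)
    if signals.noindex:
        return "noindex tag"
    if signals.canonical and signals.canonical != url:
        return "canonical points elsewhere"
    return "indexable"


# Made-up inputs for illustration only.
ROBOTS_TXT = "User-agent: *\nDisallow: /private/\n"
POST = ('<html><head><link rel="canonical" '
        'href="https://www.example.com/blog/technical-seo"></head></html>')
THANKS = '<html><head><meta name="robots" content="noindex, follow"></head></html>'

print(indexability("https://www.example.com/blog/technical-seo", POST, ROBOTS_TXT))
print(indexability("https://www.example.com/private/report", POST, ROBOTS_TXT))
print(indexability("https://www.example.com/thanks", THANKS, ROBOTS_TXT))
```

A content writer would rarely run something like this, but it shows what a developer or an SEO crawler is looking for when a page is reported as "not indexable."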

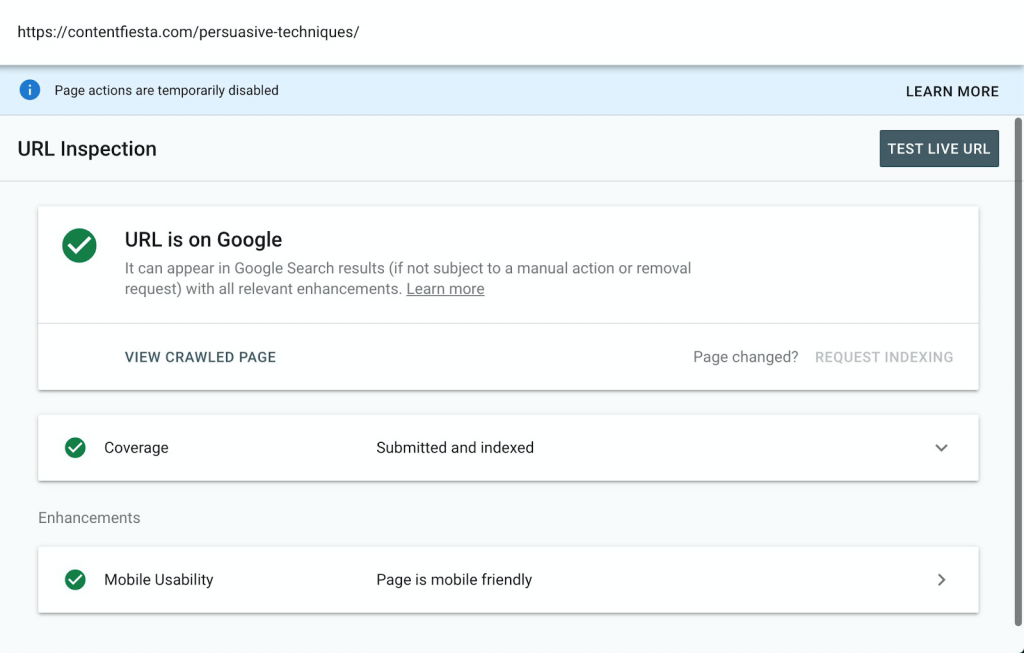

To check whether a page is indexable, use GSC’s URL Inspection tool.

Once Google indexes a page that you actively want to rank, you are good to go. But there are many cases in which you wouldn’t want Google to index your pages, for example:

- You have sensitive information no one outside your company should see

- You have several versions of a page you want Google to ignore (later, you will see what this looks like when we talk about “canonical URLs”)

- You don’t want to dilute your domain authority by indexing irrelevant pages—e.g., your privacy terms

When you de-index a page on purpose, you are using Google’s mighty power for good. Do it unintentionally, and you will hurt your site’s performance significantly.

The rest of this guide will cover three topics that directly or indirectly affect your site’s crawling and indexing.

Site Structure

Your site structure refers to the way you plan and organize your pages. However, we’re not talking about your menus, but rather the structure created by links from one page to another. SEOs often call site structure “site architecture” because you define the layout of your site’s different parts, how they relate to each other, and how they all sustain your site.

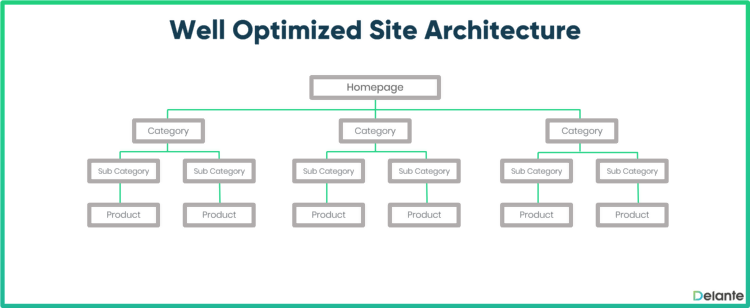

Many crawling and indexing problems happen when Google can’t access or index a page due to an inefficient site structure: a site whose different parts are tangled in incoherent, complicated ways. To fix this issue, one theory of SEO site architecture uses the following rule of thumb: a site should have no more than three or four layers.

Such a simple site structure would mean that you could click from your homepage to a blog page, and then to a blog post written by your content team. You could click through to other posts or pages (e.g., your contact page), but all of them would be reachable in no more than three or four clicks from the homepage. Similarly, an ecommerce site could have a homepage, a category page, and a product page (checkout carts aren’t indexed, so they don’t count).

Such simple site structures are called “flat” due to their appearance as you saw in the image above. Whether your site has two, three, or four layers, you want to have a website that’s easy to navigate, both for your visitors and Google’s bot.
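Click depth is easy to compute once you have a list of which page links to which. The sketch below uses a breadth-first search over a small, invented link map; the URLs are placeholders, and a real audit tool would build this map by crawling your site.

```python
from collections import deque


def click_depths(links, home):
    """Return the minimum number of clicks needed to reach each page from `home`."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical internal links: page -> pages it links to.
links = {
    "/": ["/blog", "/contact"],
    "/blog": ["/blog/technical-seo", "/blog/page-2"],
    "/blog/page-2": ["/blog/old-post"],
    "/blog/orphan": [],  # exists, but nothing links to it
}

depths = click_depths(links, "/")
too_deep = [page for page, depth in depths.items() if depth > 3]
orphans = sorted(set(links) - set(depths))

print(depths)
print("Deeper than three clicks:", too_deep)
print("Orphan pages:", orphans)
```

Pages that never appear in `depths` are the orphan pages mentioned earlier: they exist, but no path of links leads to them from the homepage.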

According to Google, “The navigation of a website is important in helping visitors quickly find the content they want. It can also help search engines understand what content the webmaster thinks is important.”

To get an idea of your site structure, carry out a site audit with Screaming Frog or Oncrawl.

If you find your site structure has too many layers and confusing sections, you need to rethink your structure with the help of your dev team and professional SEO experts. Your content creators may not need to participate in the discussion, but it’s important they know why this topic matters: if the content they create is too far down in the architecture, or isn’t part of it at all, it won’t be indexed.

Speed Optimization

Speed is one of the most critical factors that affect user experience (UX) and rankings. By “speed,” I mean the time it takes for a browser to retrieve a website’s data from a server, create the page, and display it.

Every page has dozens, if not hundreds, of elements a browser must retrieve to load a page. The way a browser loads these elements will define your site’s speed; the longer it takes for the browser to load your site’s elements, the slower your page’s speed.

Optimizing your site’s speed is one of the most important technical SEO changes you can make. The most common—and, most often, effective—speed optimization changes you can make are:

- Compressing and minifying your HTML, CSS, and JS files

- Optimizing the size and compression quality of your images

- Setting up browser caching

- Setting up a CDN

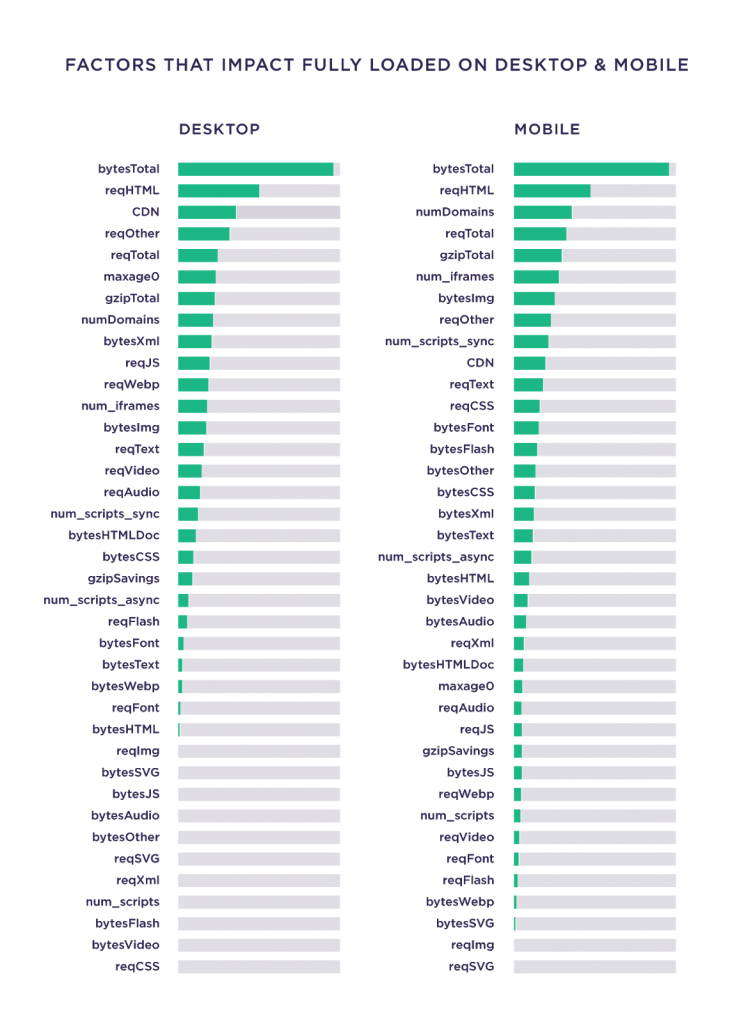

According to a Backlinko study, a page’s total size correlates with load time more strongly than any other factor.
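To see why minifying and compressing matter for page size, here is a small sketch that measures the same HTML before and after each step. The `minify_html` function is a deliberately crude stand-in for a real minifier (it would mangle whitespace-sensitive content such as `<pre>` blocks), and the sample HTML is generated just for the demonstration.

```python
import gzip
import re


def minify_html(html):
    """Very rough minifier: drop whitespace between tags and collapse long runs."""
    html = re.sub(r">\s+<", "><", html)
    return re.sub(r"\s{2,}", " ", html).strip()


# Generated sample markup: an indented list with 200 items.
html = "<ul>\n" + "\n".join(f"    <li>Item {i}</li>" for i in range(200)) + "\n</ul>"

minified = minify_html(html)
raw_size = len(html.encode("utf-8"))
minified_size = len(minified.encode("utf-8"))
gzipped_size = len(gzip.compress(minified.encode("utf-8")))

print(f"Original: {raw_size} bytes")
print(f"Minified: {minified_size} bytes")
print(f"Minified + gzip: {gzipped_size} bytes")
```

On a real site, the server or CDN typically applies compression automatically once a developer enables it; the point here is only that the number of bytes sent over the wire drops at each step.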

For a content creator, speed optimization is often outside their scope, as it requires server-side and highly technical skills—e.g., using RegEx, Apache HTTP Server, etc. Still, there are two aspects that any content marketer can be responsible for:

- Plugin use: Installing WordPress plugins is not only easy but useful. The problem is that they can lower a site’s speed, so whenever a content marketer decides to install a plugin, they should discuss the speed implication properly with an SEO and a web developer.

- Image optimization: Images tend to be the heaviest elements a page has. To overcome this issue, use the JPG format, compress your images, and, whenever possible, decrease their dimensions.

If you are optimizing a page that already ranks high but not in the top positions, and you have confirmed that its on-page SEO is already in good shape, check its speed. Run the page through Google’s PageSpeed Insights tool (or, alternatively, Oncrawl’s Payload report) and optimize it based on the suggestions. If these suggestions are outside your scope, discuss them with your SEO team and the developers responsible for managing your site.

Content Optimization

When a content writer creates a new content piece, their primary goal is to educate the reader. Soon after the content is published, however, a technical issue can arise that undermines the whole content operation.

I’m talking about duplicate content, a problem that happens when Google indexes multiple versions of a page. Ecommerce sites tend to have duplicate content, leading to the indexation of variant pages such as:

- http://www.domain.com/product-list.html

- http://www.domain.com/product-list.html?sort=color

- http://www.domain.com/product-list.html?sort=price

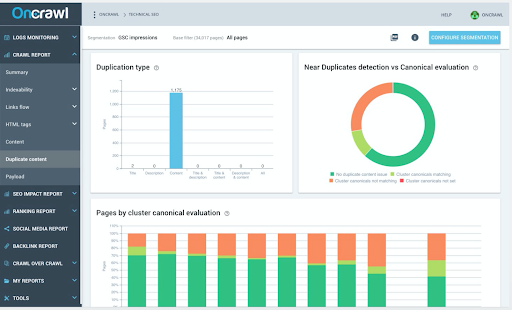

These mistakes happen unintentionally and inconspicuously. Your goal is to avoid the indexing of your duplicate pages. To start, audit your site with the help of Oncrawl’s Near Duplicate Detector.

There are several solutions to fix duplicate content:

- Deindex it: Add the “noindex” tag to your duplicate page, so Google removes it from their index.

- Add the canonical tag: Add the rel=“canonical” tag to the duplicate page and indicate the real (or “canonical”) version of the page. This won’t deindex the duplicate, but it tells Google which page to use in the SERPs.

- Redirect it: Use a 301 redirect (a permanent, HTTP-level redirect) to take visitors and bots from the duplicate page (http://www.domain.com/product-list.html?sort=color from the example above) to the correct one.

- Remove it: In some cases, the duplicate page exists as a separate file on your server or in your CMS. In that case, remove the duplicate page and redirect its URL.

- Rewrite it: If you happen to have multiple pages similar to one another, you can change their content to ensure that they are recognized as separate pages.
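For the product-list URLs shown earlier, the canonical version can be derived by dropping the parameters that only change presentation. The sketch below assumes that `sort` is the only such parameter, which is an assumption made for this example; your developers would know which parameters actually apply on your site.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumption for this example: these parameters reorder the page, not its content.
IGNORED_PARAMS = {"sort"}


def canonical_url(url):
    """Strip presentation-only query parameters and any fragment from a URL."""
    parts = urlsplit(url)
    kept = [(k, v) for k, v in parse_qsl(parts.query) if k not in IGNORED_PARAMS]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


def canonical_tag(url):
    """Build the tag a duplicate page would carry in its <head>."""
    return f'<link rel="canonical" href="{canonical_url(url)}">'


duplicates = [
    "http://www.domain.com/product-list.html",
    "http://www.domain.com/product-list.html?sort=color",
    "http://www.domain.com/product-list.html?sort=price",
]

for url in duplicates:
    print(url, "->", canonical_tag(url))
```

All three variants end up pointing at the same canonical page, which is the signal Google needs to treat them as one.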

Duplicate content isn’t a small issue to ignore, so make sure to audit your site properly and fix any instances of this problem.

In Summary

The advice laid out here is only the tip of the iceberg. Technical SEO can be an overwhelming task for any non-technical content writer, so a developer should make the most complex changes.

Still, no content creator should ignore the basics of technical SEO, as they are essential in making sure content performs as expected. Any issue that causes Google to crawl or index your site incorrectly can end up causing severe problems for the entire content marketing operation.

What else do you think a content marketer should know about technical SEO?