Don’t be Joey Donner.

In the middle of a crowded high school hallway, Joey Donner appears before Bianca (who has been seriously crushing on him for about 15 movie minutes of 10 Things I Hate About You), pulls out two nearly identical photos, and forces her to choose which one she prefers.

Joey: [holding up headshots] “Which one do you like better?”

Bianca: “Umm, I think I like the white shirt better.”

Joey: “Yeah, it’s-it’s more…”

Bianca: “Pensive?”

Joey: “Damn, I was going for thoughtful.”

Like Bianca, search engines must make choices – black tee or white tee, to rank or not to rank (#ShakespearePuns!). And now, with the explosion of AI-generated content, the choice has become even more complex.

According to Google, “60% of the internet is duplicate” – which accounts for a tremendous amount of wasted storage and overhead resources (for little return #AintNoBotGotTimeForThat)!

On the surface, the solution is simple: Don’t be Joey Donner. Don’t make search engine bots pick between two identical results.

However, dig a little deeper into Joey’s psychological state and it’s clear he doesn’t know he’s being redundant. He doesn’t realize that he’s presenting the same photo (and a sticky situation) to Bianca. He is simply unaware.

Similarly, duplicate content can sprout from a multitude of unexpected places, and webmasters must be vigilant to ensure that it does not interfere with the experience of users and bots alike. We must be mindful and purposeful about the content we produce and want indexed.

What is duplicate content?

As outlined in Google Search Central guidelines, duplicate content is:

“Substantive blocks of content within or across domains that either completely match other content or are appreciably similar.”

It’s important to understand that pages that appear virtually the same to a user won’t necessarily hurt their experience of the site; however, pages with highly similar content will affect how a search engine bot evaluates those pages.

This definition extends beyond exact matches; it also includes AI-generated variations and content that serves the same intent without adding unique value.

Why should webmasters care about duplicate content?

Duplicate content causes a few issues, primarily around rankings, due to the confusing signals that are sent to the search engine. These issues include:

- Indexing challenges: Search engines don’t know which version(s) to include/exclude from their indices.

- Lower link impact: As different internet denizens across the web link to different versions of the same page, the link equity is spread between those multiple versions.

- Internal competition: When content is very closely related, search engines struggle to decide which version of the page to rank for a query (because they look so similar. How’s a bot to know?!).

- Poor crawl bandwidth: Forcing search engines into crawling pages that don’t add value eats away at a site’s crawl budget and can be a huge detriment for larger sites.

What counts as duplicate content?

Duplicate content is often created unintentionally (most times we don’t aim to be Joey Donner).

Below is a list of common sources from which duplicate content may unintentionally arise.

It is important to note that although all of these elements should be checked, not all of them will necessarily be causing issues (prioritizing your top duplicate content challenges is vital).

Common sources of duplication:

- Repeated pages (e.g., sizing pages for every product with the same specifications, paid search landing pages with repeated copy)

- Indexed staging sites

- URLs with different protocol (HTTP vs. HTTPS URLs)

- URLs on different subdomains (e.g., www vs. non-www)

- Trailing slash or no slash (see Google Search Central’s guidance on slash vs. no-slash URLs)

- Index pages (/index.html, /index.asp, etc.)

- URLs with parameters (e.g., click tracking, navigation filtering, session IDs, analytics code)

- Facets and sorts

- Printer-friendly versions

- Syndicated content

- PR releases across domains

- Localized content (pages without appropriate hreflang annotations, especially pages in the same language)

- AI-generated content variations

- Dynamic rendering differences across devices

- JavaScript framework-generated duplicates
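Many of the URL-variant sources above (protocol, www vs. non-www, trailing slashes, index pages, tracking parameters) can be collapsed programmatically when auditing a crawl export. The Python sketch below is a minimal illustration, not a drop-in tool: it assumes your preferred version is HTTPS, non-www and slash-free, and `IGNORED_PARAMS` and `INDEX_FILES` are hypothetical placeholders for whatever your site actually uses. Facet, sort and filter parameters are deliberately kept, since whether they create duplicates depends on your site.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical placeholders: replace with the tracking/session parameters
# and index filenames your own site actually uses.
IGNORED_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "gclid", "sessionid"}
INDEX_FILES = ("index.html", "index.htm", "index.asp", "index.php")


def normalize_url(url: str) -> str:
    """Map common duplicate-URL variants onto one assumed preferred form:
    HTTPS, no "www.", no index filename, no trailing slash (except the root),
    ignored parameters removed, remaining parameters sorted, no fragment."""
    parts = urlsplit(url)

    host = parts.netloc.lower()
    if host.startswith("www."):
        host = host[len("www."):]

    path = parts.path or "/"
    for index_file in INDEX_FILES:
        if path.endswith("/" + index_file):
            path = path[: -len(index_file)]  # keep the slash before the filename
            break
    if len(path) > 1:
        path = path.rstrip("/") or "/"

    params = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
    ]
    query = urlencode(sorted(params))

    return urlunsplit(("https", host, path, query, ""))


# Usage: group crawled URLs that collapse to the same normalized form.
crawled = [
    "http://example.com/shoes",
    "https://www.example.com/shoes/",
    "https://example.com/shoes/index.html?utm_source=newsletter",
    "https://example.com/boots",
]
clusters: dict[str, list[str]] = {}
for url in crawled:
    clusters.setdefault(normalize_url(url), []).append(url)

for preferred, variants in clusters.items():
    if len(variants) > 1:
        print(preferred, "<-", variants)
```

Any cluster with more than one member is a candidate for a canonical tag or 301 redirect. Sorting parameters assumes their order never changes the page, so confirm that for your own site before relying on it.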

Does duplicate content rank?

Rankings used to be based on a sort of “auction,” which hypothetically made duplicate content within the SERPs a winner-take-all situation.

However, the process has evolved. When dealing with cross-domain duplicate content, Google now evaluates a number of factors beyond just who published the content first.

While there is still a type of “auction” that takes place, it is integrated in a dynamic process that takes into consideration:

- Content freshness and update frequency

- Whether expertise and first-hand experience have been demonstrated

- User engagement and helpfulness signals

- Site authority and E-E-A-T signals

- Unique insights and value-add beyond the original source

Google’s systems continuously evaluate which version best serves user intent, meaning rankings can shift as the different criteria above change.

The first publisher may have an initial advantage, but maintaining content relevance, demonstrating expertise, and providing unique value are now more important for long-term ranking success.

This, however, is not to say that duplicate content, whether on the same domain or across domains, will not rank. There are cases where Google determines that the original site is less suited to answer a query and selects a competing site to rank instead.

Should duplicate content rank?

Theoretically, no. If content doesn’t add value to the users within the SERPs, it shouldn’t rank.


Should I be worried if my site must have duplicate content?

Focus on what’s best for the user. A grounding question: is this answering your users’ questions in a way that is meaningful to your site’s overall experience?

If a site must have duplicate content (whether for political, legal, or time-constraint reasons) and it cannot be consolidated, signal to search engine bots how they should proceed with one of the following methods: canonical tags, noindex/nofollow meta robots tags, or a robots.txt disallow rule.

It’s also important to note that duplicate content, in and of itself, does NOT merit a penalty.

How does one go about identifying duplicate content?

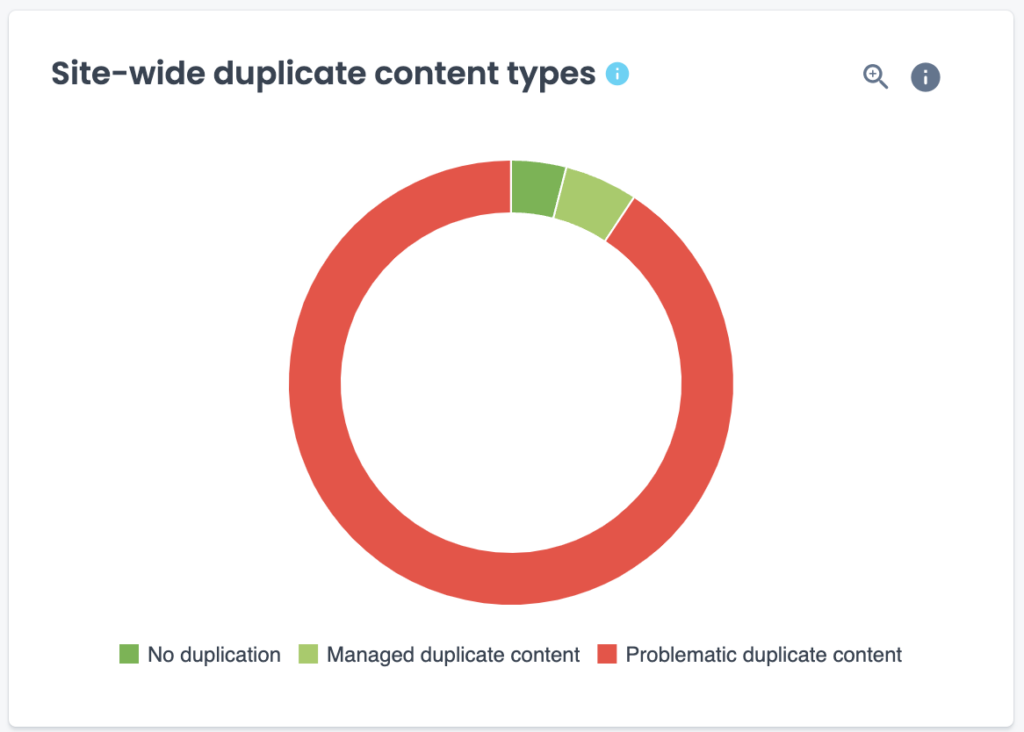

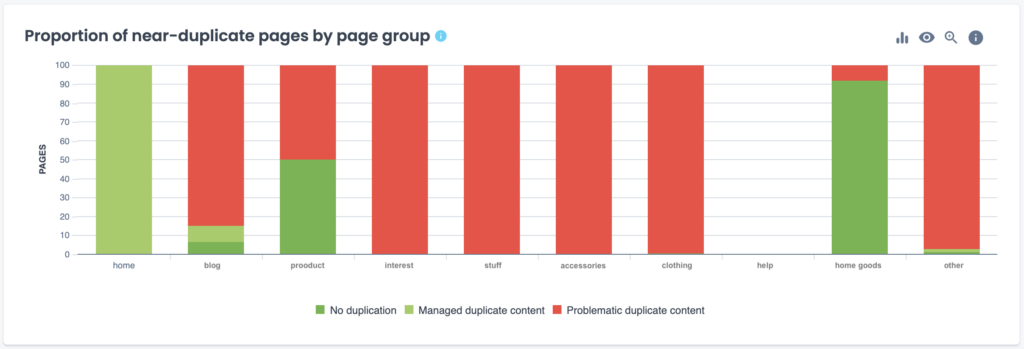

Oncrawl – I’d be remiss if I didn’t point out Oncrawl’s duplicate content visualization, because they’re the baddest in the biz (and by this I mean the best).

One of my favorite aspects is how Oncrawl evaluates the duplicate content against canonicals. If the content is within a specific canonical cluster/group then the issues can typically be dismissed as resolved.

Their reports also take this one step further and can show data segmented by subfolder. This can help to identify specific areas with duplicate content issues.

Source: Oncrawl

Source: Oncrawl
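Tools like Oncrawl handle near-duplicate detection at scale, but the underlying idea can be sketched simply. The example below is a hypothetical illustration, not Oncrawl’s method: it breaks each page’s text into overlapping five-word “shingles” and compares pages with Jaccard similarity (shared shingles divided by all unique shingles). The 0.8 threshold and the sample pages are assumptions.

```python
import re
from itertools import combinations


def shingles(text: str, k: int = 5) -> set[tuple[str, ...]]:
    """Return the set of overlapping k-word sequences ("shingles") in text."""
    words = re.findall(r"\w+", text.lower())
    if len(words) < k:
        return {tuple(words)} if words else set()
    return {tuple(words[i : i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: set, b: set) -> float:
    """Shared shingles divided by all unique shingles (0.0 if both are empty)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def near_duplicates(pages: dict[str, str], threshold: float = 0.8, k: int = 5):
    """Return (url_a, url_b, score) for every page pair at or above threshold.

    Compares every pair, so this is only practical for small page sets."""
    signatures = {url: shingles(body, k) for url, body in pages.items()}
    results = []
    for (url_a, sig_a), (url_b, sig_b) in combinations(signatures.items(), 2):
        score = jaccard(sig_a, sig_b)
        if score >= threshold:
            results.append((url_a, url_b, round(score, 3)))
    return results


pages = {
    "/sizing/shoe-a": "Our sizing chart covers every width and length for this running shoe model",
    "/sizing/shoe-b": "Our sizing chart covers every width and length for this running shoe model",
    "/blog/trail-tips": "Five tips for choosing trail shoes before your first mountain race",
}
print(near_duplicates(pages))  # → [('/sizing/shoe-a', '/sizing/shoe-b', 1.0)]
```

Comparing every pair grows quadratically with page count; for larger sites, approximations such as MinHash are typically used to estimate the same similarity score.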

Solving for duplicate content

Solutions for duplicate content are highly contingent upon the cause; however, here are some tips and tricks.

Duplicate content resolution requires a beautiful balance between technical SEO and content strategy.

Know your user journey

Understanding where users are in the marketing funnel, what content they’re interacting with, and why they’re interacting with it can help shape your site’s overarching information architecture and content, creating experiences with purpose.

See sample content mapping outlines below (print and fill in!).

Create a strong hierarchical URL taxonomy and information architecture

If you have a ton of similar topics, make sure you have a clear keyword alignment map.

At the core, you want to make sure that you aren’t cannibalizing your own traffic.
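A keyword alignment map can be as simple as a spreadsheet of URLs and their target keywords. Assuming that structure (the `keyword_map` format below is hypothetical), a few lines of Python can flag keywords that more than one page is targeting. Those are candidates for a cannibalization review, not automatic proof of a problem.

```python
from collections import defaultdict


def find_cannibalization(keyword_map: dict[str, list[str]]) -> dict[str, list[str]]:
    """Flag target keywords assigned to more than one URL.

    keyword_map is a hypothetical {url: [target keyword, ...]} structure.
    Keywords are matched case-insensitively after trimming whitespace."""
    urls_by_keyword: dict[str, list[str]] = defaultdict(list)
    for url, keywords in keyword_map.items():
        # A set avoids counting the same URL twice for a repeated keyword.
        for keyword in {k.strip().lower() for k in keywords}:
            urls_by_keyword[keyword].append(url)
    return {kw: sorted(urls) for kw, urls in urls_by_keyword.items() if len(urls) > 1}


keyword_map = {
    "/shoes/running": ["running shoes", "trail shoes"],
    "/blog/best-running-shoes": ["Running Shoes "],
    "/shoes/trail": ["trail shoes"],
}
for keyword, urls in find_cannibalization(keyword_map).items():
    print(f"{keyword!r} is targeted by: {', '.join(urls)}")
```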

Prioritize duplicate content issues

When identifying duplicate content, it is vital to prioritize duplicate content issues that are affecting performance and integrate this into the overall organic search strategy.

If the pages are 100 percent duplicate and one version doesn’t need to be live, pick one and consolidate with a 301 redirect.
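When consolidating with 301s over time, redirects can stack into chains (A → B → C). A minimal sketch, assuming your redirects live in a simple source-to-target mapping (a hypothetical format; your server or CMS configuration will differ), that points every duplicate straight at its final destination and refuses to continue if it finds a loop:

```python
def flatten_redirects(redirects: dict[str, str]) -> dict[str, str]:
    """Rewrite a source -> target 301 map so every source points at its final
    destination in a single hop. Raises ValueError if a redirect loop exists."""
    flattened = {}
    for source, target in redirects.items():
        seen = {source}
        while target in redirects:  # the target itself redirects: keep following
            if target in seen:
                raise ValueError(f"redirect loop detected starting at {source}")
            seen.add(target)
            target = redirects[target]
        flattened[source] = target
    return flattened


# Hypothetical map after two rounds of consolidation.
redirects = {
    "/shoes/red-runner-v1": "/shoes/red-runner-v2",
    "/shoes/red-runner-v2": "/shoes/red-runner",
    "/print/shoes/red-runner": "/shoes/red-runner",
}
print(flatten_redirects(redirects))
```

The flattened map can then be exported to whatever redirect configuration your server uses.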

Make sure all content on pages that should be indexed is indexable

This process should be based on your users’ journey.

As an illustrative example, my team once identified an issue where comments from Facebook, which were a highlight of this website’s product pages, were not being indexed.

Getting those Facebook comments crawled and indexed would turn the pages from thin content into unique forums about the product.

Leverage HTML tags, robots.txt, and appropriate HTTP status codes

This will indicate to search engines what they should do with a particular piece of content.

- <link> tag attributes to indicate document relationships:

  - Canonical tag (rel="canonical"): I’m the same as this version!

  - rel="prev" / rel="next": I’m part of a paginated series. (Google no longer uses these as an indexing signal, though they remain valid HTML.)

- Blocking URLs:

  - Meta robots "noindex": Don’t index me. Note: Don’t just noindex pages with link authority. Be strategic with the link authority.

  - Meta robots "nofollow": Don’t follow my links.

  - Robots.txt disallow: You’re not allowed to be here.

  - 403 HTTP status code: No bots allowed.

  - Server password protection: Give me a password to get in.
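To confirm which of these signals a page is actually sending, the Python standard library is enough for a quick spot check. This sketch uses `html.parser.HTMLParser` to read `rel="canonical"` and meta robots directives, and `urllib.robotparser.RobotFileParser` to test a URL against robots.txt rules. The class and function names (`IndexingSignals`, `audit_page`, `allowed_by_robots`) are hypothetical, and Python’s robots.txt parser may not reproduce every Google-specific matching rule (such as wildcards), so treat results as a sanity check rather than a verdict on how Googlebot behaves.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


class IndexingSignals(HTMLParser):
    """Collect rel="canonical" and meta robots directives from one HTML page."""

    def __init__(self):
        super().__init__()
        self.canonical = None  # href of the rel="canonical" link, if any
        self.robots = set()    # lowercased meta robots directives

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names; values may be None.
        values = {name: (value or "") for name, value in attrs}
        if tag == "link" and "canonical" in values.get("rel", "").lower().split():
            self.canonical = values.get("href")
        elif tag == "meta" and values.get("name", "").lower() == "robots":
            for directive in values.get("content", "").split(","):
                if directive.strip():
                    self.robots.add(directive.strip().lower())


def audit_page(html: str) -> dict:
    parser = IndexingSignals()
    parser.feed(html)
    return {
        "canonical": parser.canonical,
        "noindex": "noindex" in parser.robots,
        "nofollow": "nofollow" in parser.robots,
    }


def allowed_by_robots(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


page = """<head>
  <link rel="canonical" href="https://example.com/shoes">
  <meta name="robots" content="noindex, follow">
</head>"""
robots_txt = "User-agent: *\nDisallow: /staging/\n"

print(audit_page(page))
print(allowed_by_robots(robots_txt, "https://example.com/staging/shoes"))
```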

Move forward strategically: consolidate, optimize, create, and delete

- Consolidate – Consolidate duplicate content where applicable with 301 redirects and canonicalization (when both experiences must remain live).

- Optimize – Can you have a unique perspective? Can you target or align keywords better? How can you reframe this content to be unique and valuable?

- Create – Occasionally, it makes sense to break out content and create a separate, universal experience.

- Delete – Pruning content can conserve crawl bandwidth, since search engines won’t be forced to repeatedly crawl content that adds no value to your site.

If your content is stolen, there are two primary avenues to pursue:

1. Request a canonical tag pointing back to your site.

2. File a DMCA request with Google.

Content mapping strategy templates

Illustrative user journey

Print out and label a top user journey to ground yourself in the example.

Label each type of content the user could interact with in their journey and the estimated duration of each step. Note that there may be additional steps and that the path may not always be linear.

Add arrows and expand; the goal is to ground yourself in a basic example before diving into more complex mappings.

Simple marketing funnel content map


Print out and write out goals, types of content, common psychological traits, content location, and what it would take to push the user to the next stage in their journey.

The idea is to identify when users are interacting with certain content, what’s going through their minds, and how to move them along in their journey.

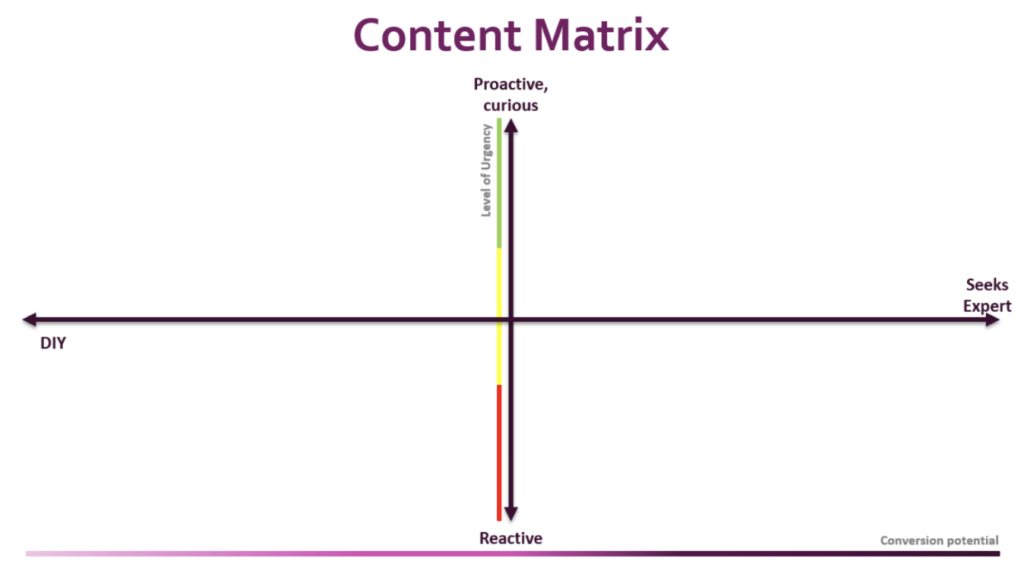

Content prioritization matrix

Print this out and plot the types of content you have available along two key binary axes.

Once you’ve mapped out all of your content, step back and see if there are any areas missing.

Leverage this matrix to prioritize the most important content, whether it’s by conversion potential or by need.

Retail Content Matrix

Start by mapping informational versus transactional intent on the y-axis. Then map non-brand versus brand on the other axis, as relevant.
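If your content inventory lives in a spreadsheet, the “step back and see if there are any areas missing” exercise can also be automated. A minimal sketch using the retail axes (informational vs. transactional intent, non-brand vs. brand); the field names, labels and the “acme” URLs are hypothetical:

```python
from collections import Counter
from itertools import product

# Retail matrix axes; the field names and labels are hypothetical.
INTENTS = ("informational", "transactional")
BRANDING = ("non-brand", "brand")


def matrix_gaps(content: list[dict]) -> tuple[Counter, list[tuple[str, str]]]:
    """Count content per (intent, branding) quadrant and list empty quadrants."""
    counts = Counter((item["intent"], item["branding"]) for item in content)
    gaps = [quadrant for quadrant in product(INTENTS, BRANDING) if counts[quadrant] == 0]
    return counts, gaps


inventory = [
    {"url": "/guides/how-to-choose-running-shoes", "intent": "informational", "branding": "non-brand"},
    {"url": "/shoes/acme-runner", "intent": "transactional", "branding": "brand"},
    {"url": "/shoes/acme-runner-wide", "intent": "transactional", "branding": "brand"},
]
counts, gaps = matrix_gaps(inventory)
print(gaps)  # → [('informational', 'brand'), ('transactional', 'non-brand')]
```

Empty quadrants point to content you may want to create, while crowded ones are worth checking for the duplication and cannibalization issues covered earlier.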

Service line content matrix

Start by mapping proactive versus reactive users on the y-axis. Then map conversion potential on the other axis.

For services, this may look like “Seeks Expert” versus “DIY”.

Conclusion

In today’s climate of continuously advancing search algorithms, when we talk about managing our duplicate content issues, we are no longer just talking about making sure our page content isn’t too similar to something else on our site or somewhere else on the internet.

We also have to make sure that each page adds genuine value to the users’ experience. As Joey Donner’s photoshoot dilemma shows, the key is to make each piece of content truly unique, valuable, and relevant.

And remember, while some content will inevitably age or lose its relevance, the same does not apply to 10 Things I Hate About You – watch it again, some classics never get old!
