Site structure is one of the most effective SEO levers for large sites because it scales well and you have full control over it. But it’s also one of the most loosely used terms in SEO. How to evaluate and optimize it is often fuzzy, and there’s no consensus on what site structure actually means.

Let me attempt to change that in this article.

When we talk about “site structure”, we talk about how a site’s pages and content are organized – but that touches on many areas! Site structure is often confused with “click depth”, which is how many clicks it takes from the homepage to reach a certain page, or with “URL structure”. But these are just individual parts of it.

The full body of site structure optimization contains:

- Internal linking

- Taxonomy

- Click depth

- URL structure

The goal is to optimize those four factors in such a way that they indicate to Google and users what the most important pages are, how they’re related, and in what context.

The importance of site structure optimization

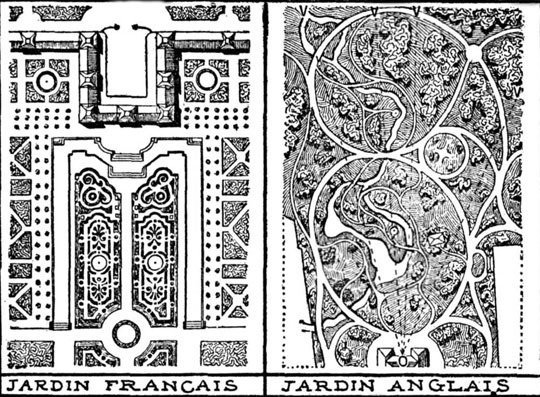

The difference between French and English gardens is a great metaphor for things that grow orderly vs. wildly.

In the 17th century, the hottest thing was French Formal Gardens. The most famous one was the Garden of Versailles (at the Château de Versailles). It was completely symmetrical and organized, trimmed down to the inch.

In the 18th century, the English took over and came up with the English Landscape Garden, which was the exact opposite: asymmetrical and human. The French came back in the 19th century with the French Landscape Garden, which was similar to and inspired by the English Landscape Garden.

Most site structures grow like English gardens: wildly. This is a downside for users and search engines, who like structure to quickly understand what a site is about and where to find what. Structure gives us and bots orientation and context.

Things tend to grow “naturally”. Plants do have their own structure when they grow, but a whole garden grows wildly and almost randomly if it’s not pruned and organized. Taken to an extreme, a naturally grown garden is a jungle. You can’t get through (without a machete). It’s the same thing with websites.

(Site structure should be like French gardens)

That’s why you should aim for a French garden for your site structure: planned, organized, pruned.

The driver of that need is scale. Wild things don’t scale well, but organized, optimized structures benefit from growth. More pages in a good site structure mean stronger signals for search engines and a broader selection for users without losing orientation. So, the larger the site, the higher the impact of its structure on growth.

Site structure areas: Internal Linking, Taxonomy, Click Depth, URL Structure

Let me start by saying that I won’t go all the way down the rabbit hole on those four topics. They’re simply too deep to cover everything in one article. Instead, I’ll explain what they are and how they’re relevant within site structure optimization.

Internal Linking has the biggest impact on site structure and consists of links between pages on a site. It has such a great influence because it drives Click Depth, crawl frequency, and “link power”* of a page.

*I’ll use the term link power to describe how well a page is linked internally and externally. Other known terms are “Authority”, “Page Strength”, or “Page Authority”. I try to avoid the confusing term “PageRank” as Google doesn’t use it in its original form anymore.

John Mueller mentioned that internal linking is important because it provides context:

“So we should be able to crawl from one URL to any other URL on your website just by following the links on the page. […] If that’s not possible then we lose a lot of context. So if we’re only seeing these URLs through your sitemap file then we don’t really know how these URLs are related to each other and it makes it really hard for us to be able to understand how relevant is this piece of content in the context of your website. So that’s one thing to… watch out for.”

The Taxonomy of a site describes how information is organized, i.e. categories, sub-categories, tags, and other classifications. The way you organize your content helps users find things quicker but also provides context for search engines.

The relationship between pages plays an important role for entity optimization. If each page is an entity, the more information you can provide about that relationship, the better. Taxonomy helps with that because it creates the context of those relationships.

Click Depth represents the number of clicks it takes to go from the homepage to a subpage on the same site. Does it even matter? You bet! John Mueller mentioned that Click Depth, also sometimes called page depth, is more important than URL structure to indicate the importance of URLs. For Google, the fewer clicks from the homepage it takes to get to a subpage, the more important it is assumed to be.
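
To make the definition concrete, here is a minimal sketch of how Click Depth can be computed from a crawl: a breadth-first search over the internal link graph, starting at the homepage. The `links` dictionary and its URLs are made up for illustration; in practice the graph would come from your crawler’s export.

```python
from collections import deque

def click_depths(links, homepage):
    """Return {url: minimum number of clicks from the homepage}."""
    depths = {homepage: 0}
    queue = deque([homepage])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit in a BFS is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths

# Hypothetical internal link graph: page -> pages it links to
links = {
    "/": ["/category/", "/about/"],
    "/category/": ["/category/page-2/", "/product-a/"],
    "/category/page-2/": ["/product-b/"],
    "/product-b/": ["/product-a/"],
}

depths = click_depths(links, "/")
for url, depth in sorted(depths.items(), key=lambda item: item[1]):
    print(depth, url)
```

Pages that never show up in the result can’t be reached by following links from the homepage at all, which is worth checking on its own.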

URL Structure is a label for the structural elements of a URL, i.e. subdomains, directories, and sub-directories. It helps Google with indexing and plays a semantic role, i.e. it makes the relationship between pages clearer.

I’ve seen that happen when a site has a hard-to-understand URL structure, with many URLs leading to the same content, making our systems assume that a part of the URL is irrelevant.

– John (@JohnMu), December 28, 2018

By now, you should understand how entangled those four areas are and why they’re so often mixed up. A change in taxonomy, for example, leads to changes in the URL and internal linking structure, which potentially causes a change in Click Depth. However, they are not the same. It’s important to know the difference to be effective.

How to audit site structure for weaknesses

We got the why down, so let’s tackle the how and what.

You’re probably monitoring your site and checking crawls and log files at least once a week (or once a day, if you’re OCD like me). But a full-blown site structure audit takes time. So, the best frequency is once a quarter, unless you migrate/relaunch your site or traffic drops all of a sudden.

To audit your site structure, you need at least a crawler. Optimally, you have a tool to analyze log files and pair it with data from Search Console and/or your web analytics tool of choice.

The goal is to get the best understanding possible of how users use your site and how search engines crawl it. Log files are the best insights into what search engines do on your site, web analytics shows you what users do. Combining the two is a powerful monitoring tool to assess the effect of site structure changes.

The big question is how to audit your site. To begin, you first need to understand what to look for. We can split problems up into the four use-cases I mentioned earlier.

Internal Linking

Let me preface this section with the caution that your optimal internal linking structure depends on your business model. Not everyone should optimize internal linking the same way. Instead, take into account whether you have one point of conversion or many.

That being said, I see four common issues with internal linking:

Pages with too many outgoing links. Look for pages – or better, page templates – that have too many outgoing internal links and few incoming ones. In my experience, those pages often suffer from high CheiRank and low PageRank, meaning they give away too much link power and don’t get enough.

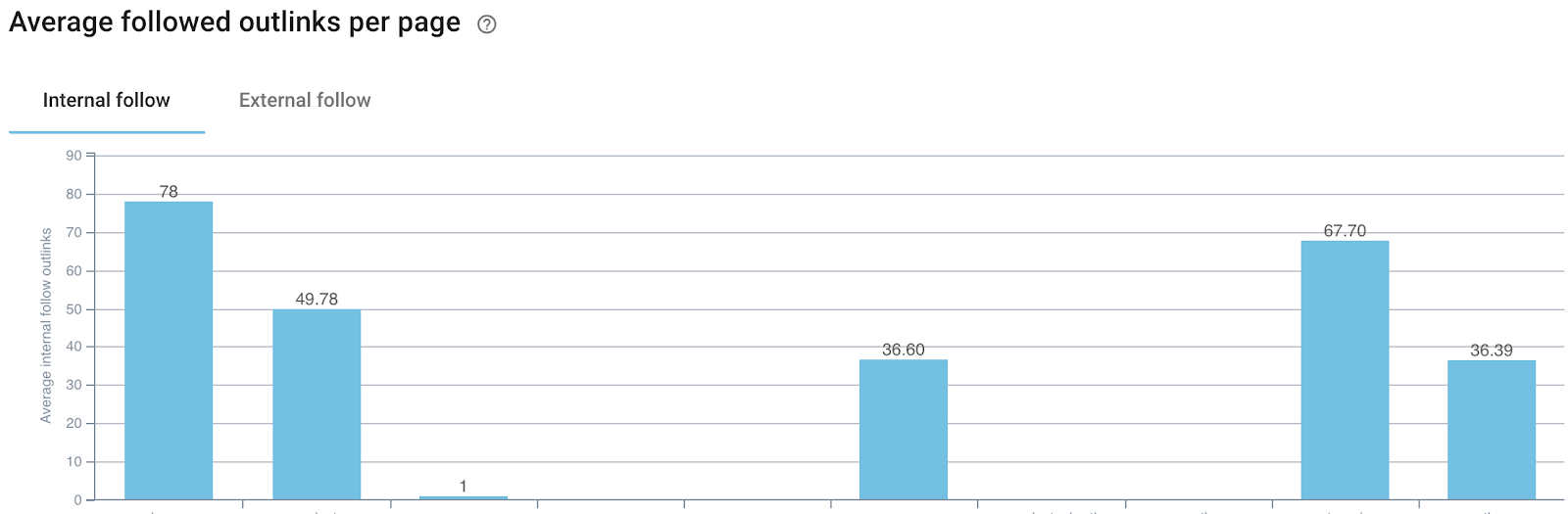

How many is too many? There is no magic number. Measure against your average! Pull the average number of outgoing links per page template and see if one stands out significantly. If so, critically assess those outgoing links and see if you can trim some.
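
As a rough sketch of that comparison, the snippet below averages outgoing internal links per page template and flags templates that sit well above the rest. The `pages` data is invented, and the `factor=1.5` cut-off is an arbitrary assumption on my part, not a recommended threshold.

```python
from statistics import mean

# Hypothetical crawl export: (url, page template, outgoing internal links)
pages = [
    ("/", "home", 120),
    ("/shoes/", "category", 80),
    ("/shirts/", "category", 90),
    ("/shoes/runner/", "product", 35),
    ("/shirts/polo/", "product", 25),
    ("/blog/fit-guide/", "article", 300),
    ("/blog/care-tips/", "article", 280),
]

def average_outlinks_by_template(pages):
    """Average number of outgoing internal links for each page template."""
    by_template = {}
    for _url, template, outlinks in pages:
        by_template.setdefault(template, []).append(outlinks)
    return {template: mean(values) for template, values in by_template.items()}

def templates_above_average(averages, factor=1.5):
    """Templates whose average exceeds `factor` times the mean of all template averages."""
    overall = mean(averages.values())
    return {t: avg for t, avg in averages.items() if avg > factor * overall}

averages = average_outlinks_by_template(pages)
flagged = templates_above_average(averages)
print(averages)
print(flagged)
```

A flagged template is only a candidate for a closer look; whether its links can actually be trimmed is still a judgment call.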

Also, measure outgoing against incoming links in relation to the importance and performance of the page. If it’s important (for the business) and doesn’t perform well, incoming vs. outgoing links might be a factor.

Important pages have too few incoming links. In the same manner, use a crawl to check whether the pages that matter most to your business receive enough incoming internal links.

A true representation of internal link power only comes from factoring backlinks in when calculating your internal link graph. That’s the idea I promote with the T.I.P.R. Model. But for starters, looking at incoming links should suffice.
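
To illustrate the general idea of factoring backlinks into an internal link graph, here is a simplified sketch. It is a plain PageRank-style power iteration in which the “teleport” probability of each page is weighted by its external backlink count. This is my own stand-in for illustration, not the actual T.I.P.R. calculation; the function name `link_power`, the damping value, and the sample data are all assumptions.

```python
def link_power(links, backlinks, damping=0.85, iterations=50):
    """PageRank-style scores where pages with more backlinks receive more base weight."""
    pages = set(links) | set(backlinks)
    for targets in links.values():
        pages.update(targets)
    pages = sorted(pages)

    # Base weight per page: backlink count plus 1, so no page starts at zero
    weights = {page: backlinks.get(page, 0) + 1 for page in pages}
    total = sum(weights.values())
    teleport = {page: weight / total for page, weight in weights.items()}

    rank = dict(teleport)
    for _ in range(iterations):
        new_rank = {page: (1 - damping) * teleport[page] for page in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # Pages without outgoing links hand their score back via the base weights
                for other in pages:
                    new_rank[other] += damping * rank[page] * teleport[other]
        rank = new_rank
    return rank

# Hypothetical data: /a/ and /b/ are linked identically, but /a/ has 50 backlinks
links = {"/": ["/a/", "/b/"], "/a/": ["/"], "/b/": ["/"]}
backlinks = {"/a/": 50}

power = link_power(links, backlinks)
for page, score in sorted(power.items(), key=lambda item: -item[1]):
    print(f"{score:.3f} {page}")
```

Even though `/a/` and `/b/` have the same internal links, `/a/` ends up with the higher score because of its backlinks, which is the effect a purely internal count would miss.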

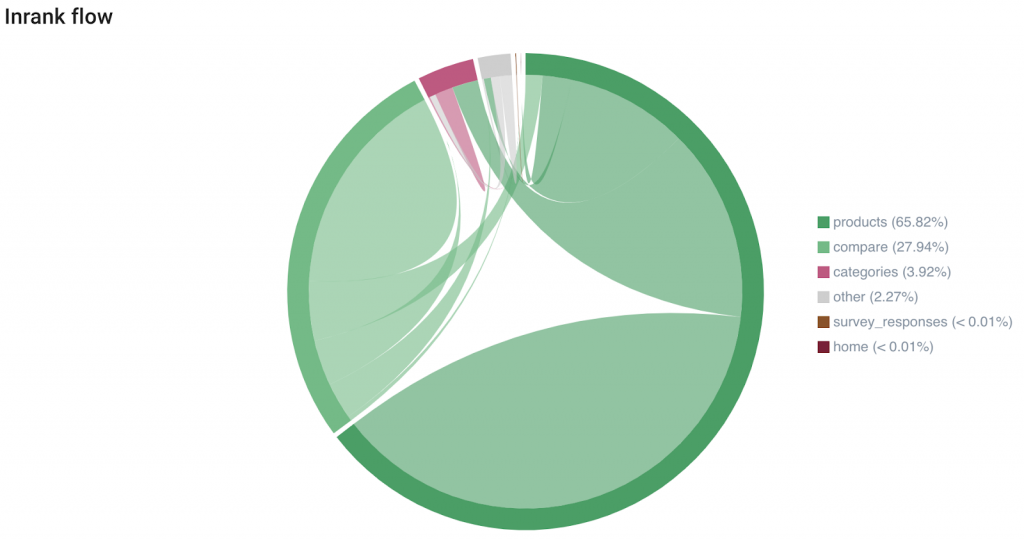

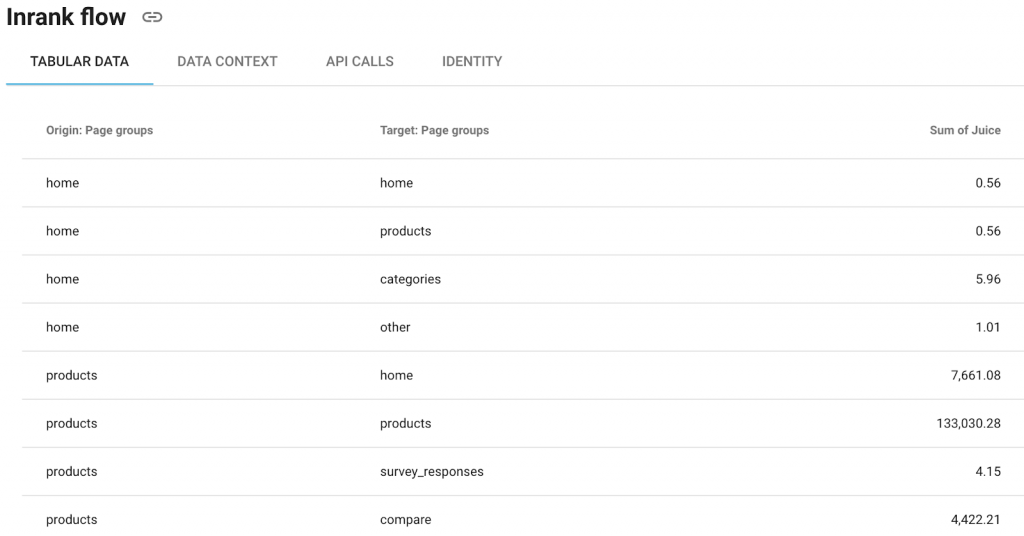

(Oncrawl’s Inrank Flow chart helps you understand which page types link to each other the most)

(You can also get the data in raw form from Oncrawl and copy/paste it into a spreadsheet or download it as CSV)


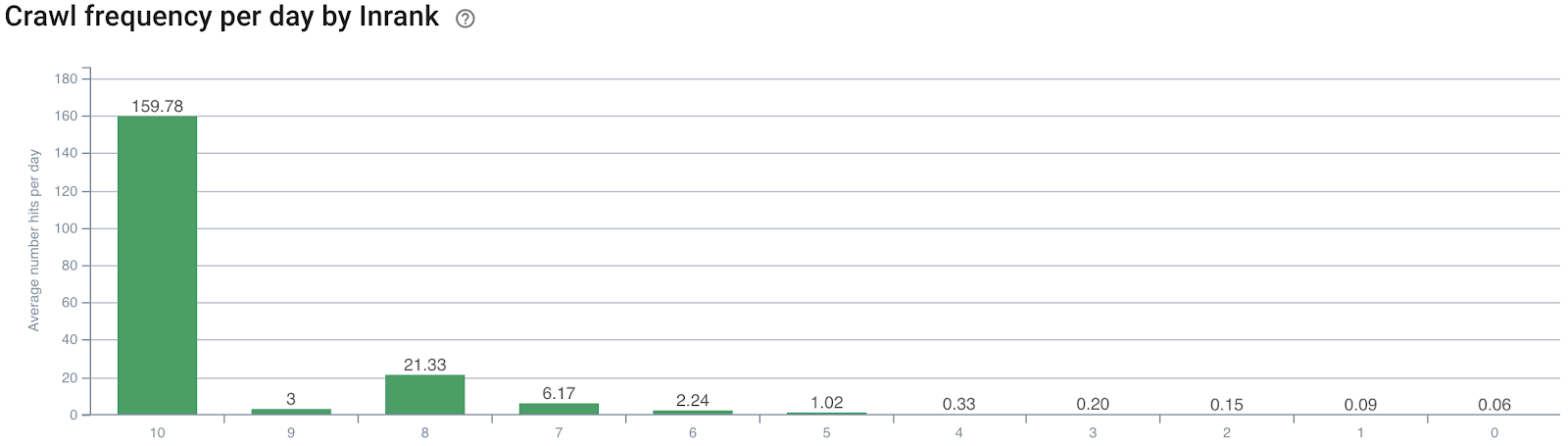

Important pages aren’t crawled often enough. Crawl rate, i.e. the frequency with which Google crawls a page, is a significant indicator of Google’s perceived importance of the page. Thus, when analyzing site structure, factor in log files and see how often each page is crawled in relation to its incoming and outgoing links. Google has been factoring in PageRank (or whatever they use nowadays) to decide what to crawl how often for a long time, so there is a noticeable correlation between crawl frequency and rankings. If you find important pages to be crawled less often than others, you want to think about increasing the number of internal links to them.
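
If you don’t have a log analysis tool at hand, a first approximation could look like the sketch below. It counts Googlebot requests per URL from access log lines and prints them next to incoming internal link counts. It assumes the common “combined” log format; the sample lines and the `inlinks` numbers are invented, and matching on the user agent string alone is a simplification because user agents can be spoofed.

```python
import re
from collections import Counter

# Matches the request, status, size, referrer, and user agent of a combined-format log line
LOG_LINE = re.compile(
    r'"(?:GET|HEAD) (?P<path>\S+) [^"]*" \d{3} \S+ "[^"]*" "(?P<agent>[^"]*)"'
)

def googlebot_hits(log_lines):
    """Count requests per path whose user agent mentions Googlebot."""
    hits = Counter()
    for line in log_lines:
        match = LOG_LINE.search(line)
        if match and "Googlebot" in match.group("agent"):
            hits[match.group("path")] += 1
    return hits

GOOGLEBOT = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
BROWSER = "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"

# Hypothetical log lines
log_lines = [
    f'66.249.66.1 - - [10/Jan/2024:10:00:00 +0000] "GET /shoes/ HTTP/1.1" 200 5120 "-" "{GOOGLEBOT}"',
    f'66.249.66.1 - - [11/Jan/2024:09:30:00 +0000] "GET /shoes/ HTTP/1.1" 200 5120 "-" "{GOOGLEBOT}"',
    f'66.249.66.1 - - [11/Jan/2024:09:31:00 +0000] "GET /shoes/runner/ HTTP/1.1" 200 4096 "-" "{GOOGLEBOT}"',
    f'203.0.113.7 - - [11/Jan/2024:09:32:00 +0000] "GET /about/ HTTP/1.1" 200 2048 "-" "{BROWSER}"',
]

# Hypothetical incoming internal link counts from a crawl
inlinks = {"/shoes/": 40, "/shoes/runner/": 3, "/about/": 1}

hits = googlebot_hits(log_lines)
for path, link_count in inlinks.items():
    print(path, "inlinks:", link_count, "googlebot hits:", hits[path])
```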

(Oncrawl’s crawl frequency by inrank report clearly shows that pages with more incoming links are crawled more often)

Pages hoarding link power. Just as there are pages that are linking out too much, you also have pages that get a lot of link power but don’t give enough away. Mind you, I’m not promoting link sculpting in the classic sense or the idea that you should not link out. When I’m saying that pages are “hoarding link power”, I suggest that they don’t link out enough.

Identify the top 20 or 50 most internally linked pages and assess them for potential to link out more, unless they already have an above average number of outgoing links.
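
A quick way to shortlist such pages is sketched below: take the most internally linked pages and keep only those with a below-average number of outgoing links. The edge list is invented, and `hoarding_candidates` is just a name I picked for the example.

```python
from collections import Counter
from statistics import mean

def hoarding_candidates(edges, top_n=20):
    """Most-linked pages whose outgoing link count is below the site average."""
    incoming = Counter(target for _source, target in edges)
    outgoing = Counter(source for source, _target in edges)
    pages = set(incoming) | set(outgoing)
    average_out = mean(outgoing[page] for page in pages)
    most_linked = [page for page, _count in incoming.most_common(top_n)]
    return [
        (page, incoming[page], outgoing[page])
        for page in most_linked
        if outgoing[page] < average_out
    ]

# Hypothetical internal links as (source, target) pairs
edges = [
    ("/", "/guide/"), ("/a/", "/guide/"), ("/b/", "/guide/"),
    ("/", "/a/"), ("/", "/b/"),
    ("/guide/", "/"), ("/a/", "/"),
]

candidates = hoarding_candidates(edges, top_n=2)
print(candidates)  # (page, incoming links, outgoing links)
```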

(Oncrawl’s average number of outgoing links per page type helps you find pages with potentially too many or too few outgoing links)

Optimizing internal linking often follows a barbell distribution with lots of pages with high incoming/low outgoing links and the opposite on the other side of the spectrum. Sites with a few points of conversion (think: landing pages) should concentrate link power around money maker pages. Sites with many points of conversion (think: product pages for ecommerce sites or articles for publishers) should strive for an equal distribution of incoming and outgoing links.

That prescription is very general and exceptions apply, but it’s important to keep in mind that not every site should be optimized the same way.

The most effective ways to scale internal link changes are modifications to link modules on category pages or the top navigation. However, such changes need to be tested before shipping because their impact is too complex to estimate and is subject to Google’s evaluation of boilerplate content. Making the modifications on a staging environment and comparing the internal linking structure to the live version with a crawl is the smartest way to go here.

Click Depth

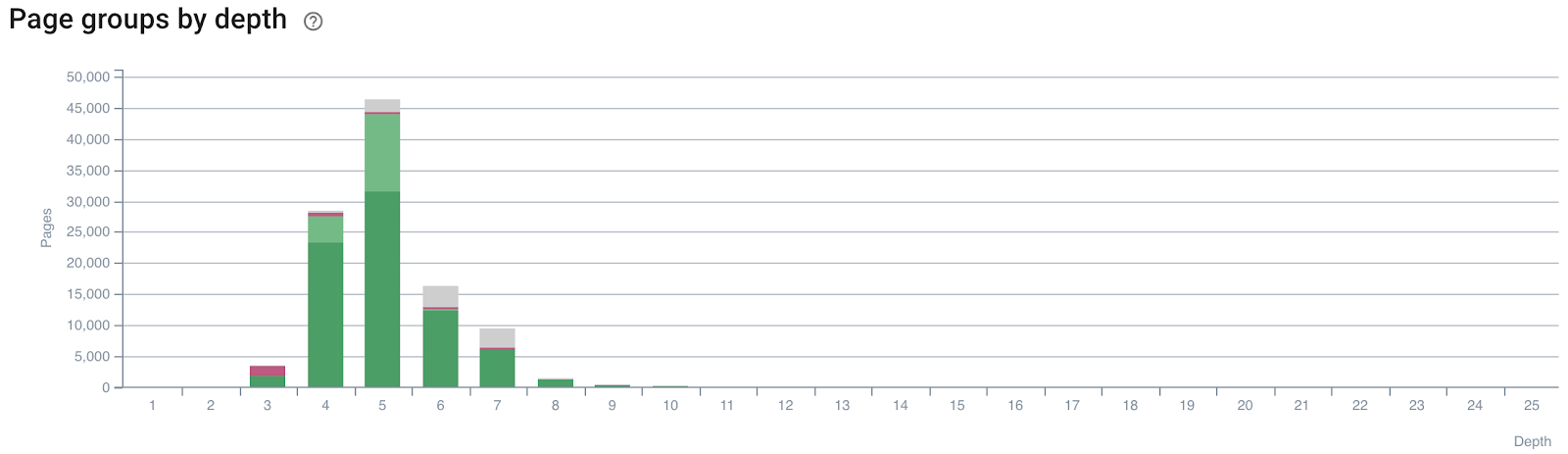

With Click Depth, the problem you want to look out for is important pages with too high a click depth, meaning it takes too many clicks from the homepage to reach them. Paginations and breadcrumbs are both classic offenders and classic solutions.

(Page types or groups by depth shows you if important pages are too far away from the homepage)

Not every page will have a low click depth once a site reaches a certain size. Practically speaking, it’s not feasible, unless you want to end up with thousands of links on your homepage. So, while the old ideal of every page being reachable within 3 clicks from the homepage is a nice target to shoot for, don’t lose sleep if (unimportant) pages end up deeper than that.

Changes in Click Depth should be evaluated on a staging environment and compared to the live version as well. It’s simple to segment by page type and see how adding a breadcrumb or pagination would change the Click Depth.
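
Here is a small sketch of that comparison, assuming you already have a click depth per URL from a live crawl and a staging crawl. The `page_type` rule (first directory of the path) and the depth values are placeholders; your own segmentation will likely differ.

```python
from collections import defaultdict
from statistics import mean

def page_type(url):
    """Hypothetical segmentation: use the first directory as the page type."""
    parts = [part for part in url.split("/") if part]
    return parts[0] if parts else "home"

def average_depth_by_type(depths):
    """Average click depth for each page type."""
    grouped = defaultdict(list)
    for url, depth in depths.items():
        grouped[page_type(url)].append(depth)
    return {kind: mean(values) for kind, values in grouped.items()}

# Hypothetical click depths from two crawls of the same URLs
live = {"/": 0, "/category/shoes/": 1, "/product/runner/": 4, "/product/loafer/": 6}
staging = {"/": 0, "/category/shoes/": 1, "/product/runner/": 2, "/product/loafer/": 3}

live_avg = average_depth_by_type(live)
staging_avg = average_depth_by_type(staging)

# Negative values mean the page type moved closer to the homepage on staging
change = {kind: staging_avg[kind] - live_avg[kind] for kind in live_avg if kind in staging_avg}
print(change)
```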

URL Structure

An optimal URL structure scales with the size of the site just as much as the other factors mentioned in this post. The larger the site, the more I recommend reflecting a certain hierarchy in the URL structure with directories and subdirectories to give users and search engines context.

The biggest issue I see is sites providing either too much or too little hierarchy in their URL structure. Let me be clear, it’s not the highest priority for driving organic traffic. But when you’re dealing with a large site and have a chance to change your URL structure, adding some hierarchy is not a bad idea.
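
To get a feel for how much hierarchy your URLs carry, you could split each URL into its hostname and path segments and look at how the segment counts are distributed. The sketch below does that with Python’s standard library; the example URLs are invented, and what counts as “too much” or “too little” remains a judgment call.

```python
from collections import Counter
from urllib.parse import urlsplit

def url_parts(url):
    """Split a URL into its hostname and the non-empty segments of its path."""
    parsed = urlsplit(url)
    segments = [segment for segment in parsed.path.split("/") if segment]
    return parsed.hostname, segments

# Hypothetical URLs from a crawl
urls = [
    "https://www.example.com/",
    "https://www.example.com/recipes/",
    "https://www.example.com/recipes/dinner/pasta/",
    "https://blog.example.com/2019/01/05/news/site-structure/",
]

# How many URLs sit at each path depth
distribution = Counter(len(url_parts(url)[1]) for url in urls)

for url in urls:
    host, segments = url_parts(url)
    print(host, len(segments), segments)
print(sorted(distribution.items()))
```

A distribution that piles up at zero or one segment hints at a flat structure, while a long tail of very deep paths hints at the opposite.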

Taxonomy

Taxonomy is one of those topics that contain enough information for a whole book. I’ll try to wrap it up in a few sentences but keep in mind that it’s a deep topic.

The approach to finding the optimum is very much tied to the specific site and there are often several options. So, I cannot provide you with a number that indicates whether your taxonomy is good or bad. If you never sat down to play through different ways to organize content on your site, you probably haven’t invested enough thought into your taxonomy.

A valuable exercise is card sorting for taxonomy. Write all of your categories, subcategories, topics (and tags) on index cards. Then, summon your marketing department and discuss the optimal structure of information on your site.

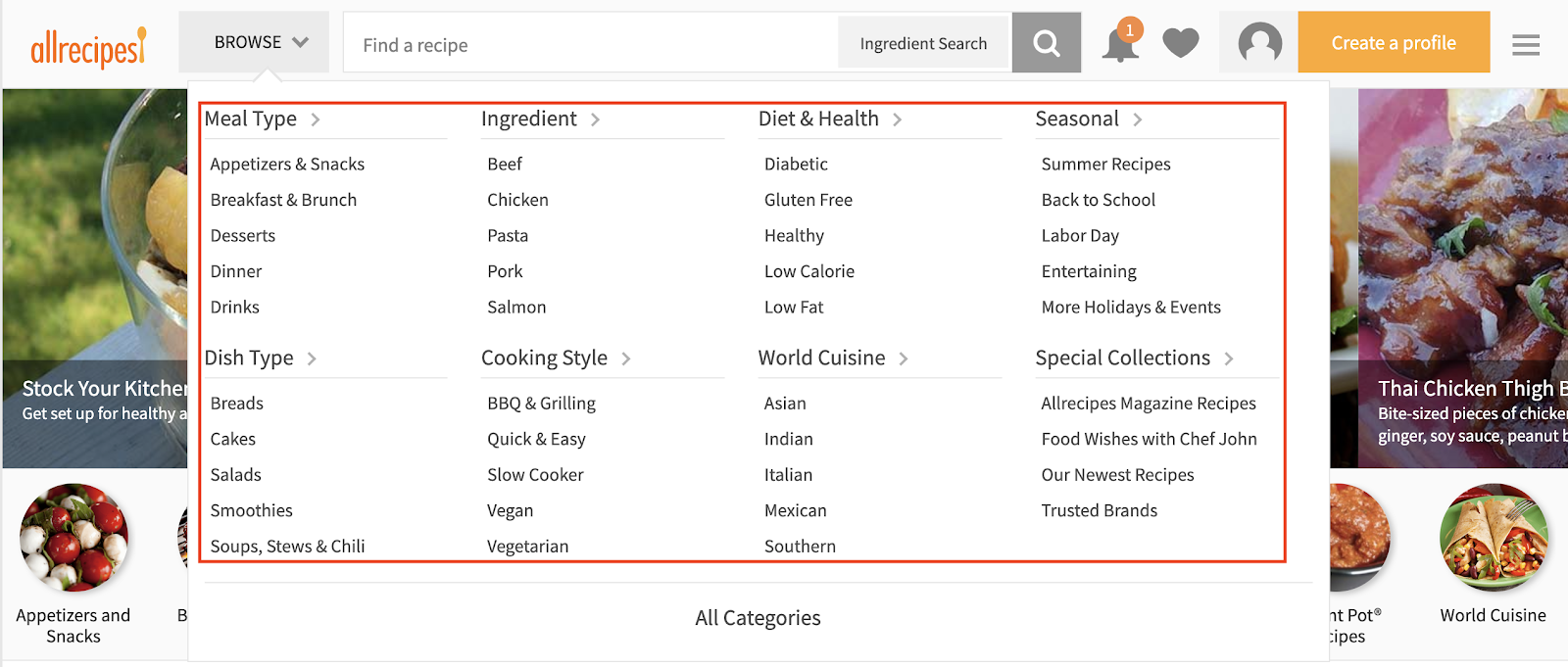

Allrecipes.com is an example of a site with a well-structured taxonomy. I don’t know if the taxonomy alone is driving the success of the site, but it certainly reflects the internal linking and content structure.

(Top navigation of allrecipes.com)

Focus on indexable content

Indexability is not part of site structure but it’s important to differentiate whether content is actually open to being indexed or not. If you block big parts of a site from being indexed, say a certain template like forum profiles, the site structure implications only apply to humans.

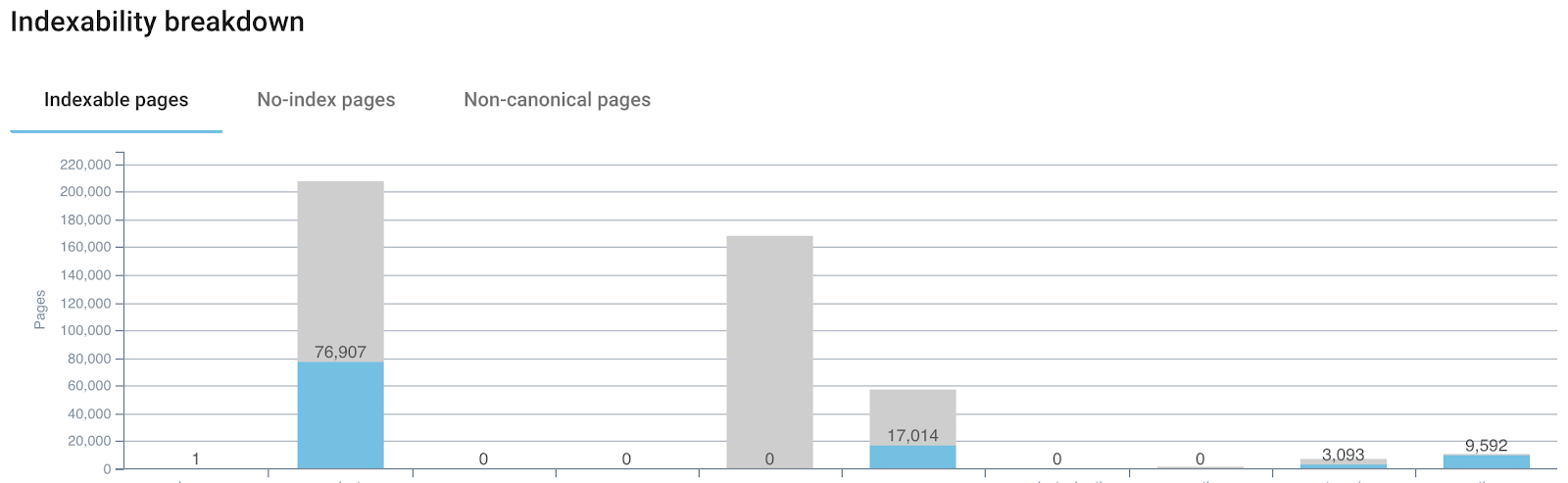

(Oncrawl’s indexability report – it’s important to keep indexability in mind when optimizing site structure)

In that sense, you have to consider two points. First, unindexed parts of a site don’t provide context for search engines. So, be mindful about what your exclusion logic is. Second, instead of using noindex meta-tags, consider preventing crawling using robots.txt to also spare crawl budget. You could use rel=nofollow but robots.txt allows for more flexibility, quicker changes, and better control. Keeping low quality content out of Google’s index is always a good idea and I’ve seen good results from sparing crawl budget along the way. So, when looking at internal linking, click depth, taxonomy, and URL structure, keep in mind what’s supposed to be indexed and what not.
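
Before shipping new exclusion rules, it can help to test them against a list of URLs. The sketch below uses Python’s built-in `urllib.robotparser` for that. The robots.txt rules and URLs are made up, and the parser may not mirror Google’s own matching in every detail (wildcard patterns, for example), so treat the result as a sanity check only.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that keeps crawlers out of forum profiles and internal search
robots_txt = """\
User-agent: *
Disallow: /profiles/
Disallow: /search
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

# Hypothetical URLs to check
urls = [
    "https://www.example.com/forum/thread-1",
    "https://www.example.com/profiles/jane",
    "https://www.example.com/search?q=shoes",
]

crawlable = {url: parser.can_fetch("Googlebot", url) for url in urls}
for url, allowed in crawlable.items():
    print("crawlable" if allowed else "blocked", url)
```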

Site structure changes over time

Site structure optimization is an ongoing process. Websites are “living” ecosystems: they grow and shrink and change over time. Just like humans need to go to the doctor for a check-up from time to time, we need to reassess site structure regularly.

Think of the social graph of a city. There’s a defined number of people living in that city and most of them know each other. Some of them are very popular, e.g. the mayor. Some may know only one person. The city is optimally connected when the right people are known, say because they’re the city council.

One challenge is flux. New people move to the city, others leave. The social graph constantly changes and so does the link graph of a site.

In the metaphor, people are nodes and the relationships between them are edges. We can transfer that to how pages are related within a website. Sites grow, some faster, some slower, and the internal link graph changes. That’s the challenge behind site structure optimization: it’s a constant reassessment of how well nodes are connected to each other and making sure the right ones are popular enough.

Run a weekly or bi-weekly crawl and look at log files in relation to crawl frequency about once a month. I suggest a quarterly site structure audit factoring in internal linking, URL structure, taxonomy, and Click Depth. Overhauling a taxonomy is only necessary when adding a new section, relaunching/migrating, or rolling out a new product. It probably won’t happen that often, maybe once a year if at all.

The cleaner you keep your site structure, the better it will scale as your site grows.