Latent Semantic Indexing (LSI) has long been a cause for debate amongst search marketers. Google the term ‘latent semantic indexing’ and you will encounter advocates and sceptics in equal measure. There is no clear consensus on the benefits of considering LSI in the context of search engine marketing. If you’re unfamiliar with the concept, this article summarises the debate on LSI so you can judge what it means for your SEO strategy.

What is Latent Semantic Indexing?

LSI is a process found in Natural Language Processing (NLP). NLP is a subset of linguistics and information engineering, with a focus on how machines interpret human language. A key part of this study is distributional semantics. This model helps us understand and classify words with similar contextual meanings within large data sets.

Developed in the 1980s, LSI uses a mathematical method that makes information retrieval more accurate. This method works by identifying the hidden contextual relationships between words. It may help you to break it down like this:

- Latent → Hidden

- Semantic → Relationships Between Words

- Indexing → Information Retrieval

How does Latent Semantic Indexing work?

LSI relies on a partial (truncated) application of Singular Value Decomposition (SVD). SVD is a mathematical operation that factorises a matrix into its constituent parts; keeping only the most significant of those parts makes calculations simpler and more efficient.

When analysing a string of words, LSI removes conjunctions, pronouns, and common verbs, also known as stop words. This isolates the words which comprise the main ‘content’ of a phrase. Here’s a quick example of how this might look:

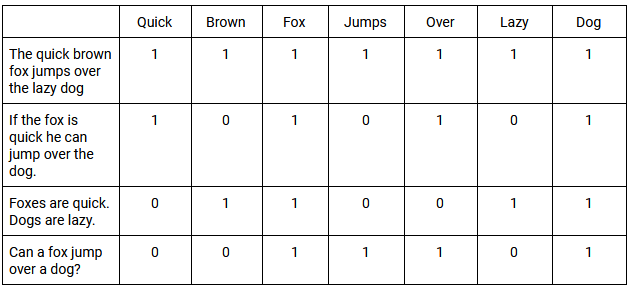
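The same filtering step can be sketched in a few lines of Python. This is only an illustration: the `STOP_WORDS` set and the `content_words` helper are hypothetical names, and the stop-word list is a small assumption rather than the list any real system uses.

```python
# Illustrative stop-word list (an assumption); real lists are far longer.
STOP_WORDS = {
    "a", "an", "the", "and", "or", "but", "is", "are", "was",
    "to", "of", "in", "on", "for", "i", "you", "it", "my",
}

def content_words(phrase: str) -> list[str]:
    """Lower-case a phrase, strip punctuation, and drop stop words."""
    tokens = [word.strip(".,!?;:'\"").lower() for word in phrase.split()]
    return [token for token in tokens if token and token not in STOP_WORDS]

print(content_words("The dog is chasing a ball in the park."))
# ['dog', 'chasing', 'ball', 'park']
```

Only the words that carry the ‘content’ of the phrase survive the filter.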

These words are then placed in a Term Document Matrix (TDM). A TDM is a 2D grid that lists the frequency that each specific word (or term) occurs in the documents within a data set.
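As a rough sketch, a TDM can be built by counting each term in each document. The three documents below are hypothetical and assumed to have had their stop words removed already; `build_tdm` is an illustrative helper, not part of any library.

```python
from collections import Counter

# Hypothetical documents, already stripped of stop words.
documents = {
    "doc1": ["dog", "chasing", "ball", "park"],
    "doc2": ["dog", "ball", "ball"],
    "doc3": ["cat", "sleeping", "park"],
}

def build_tdm(docs):
    """Return (terms, doc_ids, matrix); matrix[i][j] counts term i in document j."""
    doc_ids = list(docs)
    counts = {doc_id: Counter(tokens) for doc_id, tokens in docs.items()}
    terms = sorted({token for tokens in docs.values() for token in tokens})
    matrix = [[counts[doc_id][term] for doc_id in doc_ids] for term in terms]
    return terms, doc_ids, matrix

terms, doc_ids, tdm = build_tdm(documents)
for term, row in zip(terms, tdm):
    print(f"{term:<10}{row}")
```

Each row is a term and each column a document, so the row for ‘ball’ reads `[1, 2, 0]`: once in the first document, twice in the second, and never in the third.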

Weighting functions are then applied to the TDM. A simple example is assigning a value of 1 to every document that contains a given word and a value of 0 to every document that doesn’t. When words occur with the same general frequency in these documents, it is called co-occurrence. Below you will find a basic example of a TDM, and how it assesses co-occurrence across multiple phrases:
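A minimal sketch of binary weighting and a simple co-occurrence count might look like this. The counts are hypothetical, and `binary_weight` and `shared_documents` are illustrative names; practical systems tend to use more refined weighting schemes.

```python
# Hypothetical term-document counts: one row per term, one column per document.
tdm = {
    "ball": [1, 2, 0],
    "dog": [1, 1, 0],
    "park": [1, 0, 1],
    "cat": [0, 0, 1],
}

def binary_weight(row):
    """Replace each count with 1 if the term appears in the document, else 0."""
    return [1 if count > 0 else 0 for count in row]

def shared_documents(row_a, row_b):
    """Count the documents in which both terms appear."""
    return sum(a * b for a, b in zip(row_a, row_b))

weighted = {term: binary_weight(row) for term, row in tdm.items()}

print(weighted["ball"])                                      # [1, 1, 0]
print(shared_documents(weighted["ball"], weighted["dog"]))   # 2
print(shared_documents(weighted["ball"], weighted["cat"]))   # 0
```

In this toy data ‘ball’ and ‘dog’ appear in the same two documents, whereas ‘ball’ and ‘cat’ never appear together.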

Using SVD allows us to approximate the patterns in word usage across all documents. The SVD vectors produced by LSI predict meaning more accurately than analysing individual terms. Ultimately, LSI can use the relationships between words to better understand their sense, or meaning, in a specific context.
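To make the SVD step more concrete, here is a small sketch that assumes NumPy is available. The matrix is hypothetical, and keeping `k = 2` singular values is an arbitrary choice for illustration. It is not a description of how any search engine is implemented.

```python
import numpy as np

# Hypothetical term-document counts: rows are terms, columns are documents.
tdm = np.array([
    [1, 2, 0],  # ball
    [1, 1, 0],  # dog
    [1, 0, 1],  # park
    [0, 0, 1],  # cat
], dtype=float)

# Full SVD: tdm equals U @ diag(s) @ Vt, singular values in descending order.
U, s, Vt = np.linalg.svd(tdm, full_matrices=False)

# Keep only the k largest singular values (the truncated, or partial, step).
k = 2
approx = U[:, :k] @ np.diag(s[:k]) @ Vt[:k, :]

# Each document becomes a k-dimensional vector in the reduced space.
doc_vectors = (np.diag(s[:k]) @ Vt[:k, :]).T

def cosine(a, b):
    """Cosine similarity between two vectors."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

print(np.round(approx, 2))
print(cosine(doc_vectors[0], doc_vectors[1]))  # documents 1 and 2
print(cosine(doc_vectors[1], doc_vectors[2]))  # documents 2 and 3
```

Comparing documents in the reduced space, rather than term by term, is what lets LSI pick up on patterns of word usage instead of exact keyword matches.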

How did Latent Semantic Indexing become involved with SEO?

In its formative years, Google found that search engines were ranking websites based on the frequency of a particular keyword. Keyword frequency alone, however, does not guarantee the most relevant search result. Google instead began ranking websites it considered trusted arbiters of information.

Over time, Google’s algorithms would filter out low-quality and irrelevant websites with greater accuracy. Therefore, marketers must understand the meaning behind a search, instead of relying on the exact words being used. This is why Roger Montti described LSI as “training wheels for search engines” in an article about outdated SEO beliefs, adding that LSI has “little to zero relevance to how search engines rank websites today”.

The meaning of a search query is closely linked to the intent behind it. Google maintains a document called Search Quality Evaluator Guidelines. In these guidelines, they introduce four helpful categories for user intent:

- Know Query – This represents seeking information about a topic. A variant on this is the ‘Know Simple’ query, which is when users are searching with a particular answer in mind.

- Do Query – This reflects a desire to engage in a particular activity, such as an online purchase or download. All of these queries can be defined by a sense of ‘interaction.’

- Website Query – This is when users are looking for a specific website or page. These searches indicate a prior awareness of a particular website or brand.

- Visit-in-Person Query – The user is searching for a physical location, such as a brick-and-mortar store or a restaurant.

The theory behind LSI – defining a word’s contextual meaning within a phrase – gave Google a competitive edge. However, the idea began to spread that ‘LSI keywords’ were suddenly a golden ticket to SEO success.

Do ‘LSI Keywords’ actually exist?

Many notable publications remain firm advocates of LSI keywords. Yet several sources, such as Google’s Webmaster Trends Analyst John Mueller, state that LSI keywords are a myth. These sources raise the following points:

- LSI was developed before the World Wide Web and wasn’t intended to be applied to such a large and dynamic dataset.

- The U.S. patent on Latent Semantic Indexing, granted to an organisation named Bell Communications Research Inc. in 1989, would have expired in 2008. Therefore, according to Bill Slawski, Google using LSI would be akin to ‘using a smart telegraph device to connect to the mobile web.’

- Google uses RankBrain, a machine learning method that transforms volumes of text into ‘vectors’ – mathematical entities that help computers understand written language. RankBrain accommodates the web as a constantly expanding dataset, making it usable by Google, unlike LSI.

Ultimately, LSI reveals a truth marketers should adhere to: exploring a word’s unique context helps us understand user intent better than keywords stuffed into content. However, this does not necessarily confirm that Google ranks based on LSI. Therefore, could it be safe to say that LSI works in SEO as a philosophy, rather than an exact science?

Let’s return to the Roger Montti quote about LSI as “training wheels for search engines.” Once you learn to ride a bike, you tend to take the training wheels off. Can we assume that in 2020, Google no longer uses training wheels?

We can consider Google’s recent algorithm update. In October 2019, Pandu Nayak, Vice President of Search, announced that Google had begun using an AI system named BERT (Bidirectional Encoder Representations from Transformers). Expected at launch to affect roughly one in ten English-language search queries in the U.S., this is one of the biggest Google updates in recent years.

When analysing a search query, BERT considers a single word in relation to all of the other words in that particular phrase. This analysis is bidirectional, in that it considers the words both before and after a specific word. The removal of a single word could drastically impact how BERT understands a phrase’s unique context.

This marks a contrast with LSI, which omits any stop words from its analysis. The example below shows how removing stop words can alter how we understand a phrase:

Despite being a stop word, ‘find’ is the crux of the search, which we would define as a ‘visit-in-person’ query.
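The effect is easy to reproduce with two made-up queries. In this sketch the queries, the `STOP_WORDS` set and the `strip_stop_words` helper are all hypothetical; the only detail taken from the discussion above is that ‘find’ is treated as a stop word.

```python
# Illustrative stop-word list (an assumption). It includes "find",
# which is treated as a stop word in the discussion above.
STOP_WORDS = {"where", "can", "i", "find", "a", "what", "is"}

def strip_stop_words(query: str) -> list[str]:
    """Lower-case a query and drop every stop word."""
    return [word for word in query.lower().split() if word not in STOP_WORDS]

visit_query = "where can I find a bakery"  # hypothetical visit-in-person query
know_query = "what is a bakery"            # hypothetical know query

print(strip_stop_words(visit_query))  # ['bakery']
print(strip_stop_words(know_query))   # ['bakery']
```

Once the stop words are gone, two queries with very different intent collapse into the same single term.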

So what should marketers do?

Initially, LSI was thought to help Google match content with relevant queries. However, the marketing debate over LSI has yet to reach a single conclusion. Despite this, marketers can still take several steps to ensure their work remains strategically relevant.

Firstly, articles, web copy and paid campaigns should be optimised to include synonyms and variants. This accounts for the ways people with similar intent use language differently.

Secondly, marketers must continue to write with authority and clarity. This is an absolute must if they want their content to solve a specific problem, whether that problem is a lack of information or the need for a certain product or service. Doing so shows that they truly understand user intent.

Finally, they should also make frequent use of structured data. Whether it’s a website, a recipe or an FAQ, structured data provides the context for Google to make sense of what it is crawling.
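As an illustration, the snippet below generates FAQ markup in the JSON-LD format using schema.org’s `FAQPage`, `Question` and `Answer` types. The `faq_jsonld` helper and the sample question are hypothetical; treat it as a sketch and check the markup against Google’s current structured data guidance before relying on it.

```python
import json

def faq_jsonld(question: str, answer: str) -> str:
    """Serialise one question and answer as schema.org FAQPage JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
        ],
    }
    return json.dumps(data, indent=2)

markup = faq_jsonld(
    "What is Latent Semantic Indexing?",
    "A retrieval method that identifies hidden contextual relationships between words.",
)

# JSON-LD is embedded in a page inside a script tag of this type.
print(f'<script type="application/ld+json">\n{markup}\n</script>')
```

The resulting script tag sits in the page’s HTML and gives a crawler explicit context about the content it describes.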