What’s the most important thing you can do to make sure your site can show up in search results?

A bot-friendly website makes it easy for search engines to discover its content and make it available to users.

Crawling, or a visit from a bot to collect information, is the first step in the (long) process that ends with your site listed first in the search engine results pages. This step is so important that Google representative Gary Illyes believes it needs all caps:

We [Google] don’t do a good enough job getting people to focus on the important things. Like CREATING A DAMN CRAWLABLE SITE

— Gary Illyes (Google’s Chief of Sunshine and Happiness & Trends Analyst) / Feb. 08 2019 Reddit AMA on r/TechSEO

A crawlable site lets search engine bots carry out their basic tasks:

- Discover that a page exists through links pointing to it

- Reach a page from main site entry points, such as the home page

- Examine the contents of the page

- Find links to other pages

The steps you can take to make your website crawlable must address all of these aspects.

Give the right instructions to bots

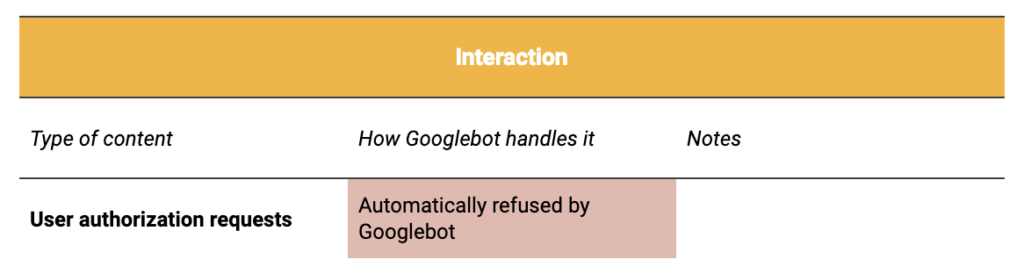

Googlebots follow the instructions that websites give to bots. These instructions can appear in different locations:

- The robots meta tag in a page’s HTML

- The X-Robots-Tag HTTP response header, particularly for URLs that are not HTML pages

- The website’s robots.txt file

- The domain’s server settings in its .htaccess file

Pages or site sections that are forbidden to bots are not crawlable because they are not accessible to Google.
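
To make this concrete, here is a minimal sketch of how a robots.txt file decides what a given bot may fetch. It uses Python's standard `urllib.robotparser` module. The robots.txt content, the `example.com` URLs, and the `is_crawlable` helper are assumptions made up for illustration, and the standard-library parser does not necessarily match every detail of how Google interprets robots.txt.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, for illustration only.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /private/

User-agent: *
Disallow: /
"""


def is_crawlable(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if robots_txt allows user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Googlebot has its own group, so only /private/ is closed to it.
googlebot_public = is_crawlable(
    ROBOTS_TXT, "Googlebot", "https://example.com/blog/post"
)
googlebot_private = is_crawlable(
    ROBOTS_TXT, "Googlebot", "https://example.com/private/report"
)
# Any other bot falls back to the "*" group, which forbids everything.
other_bot_public = is_crawlable(
    ROBOTS_TXT, "SomeOtherBot", "https://example.com/blog/post"
)

print(googlebot_public, googlebot_private, other_bot_public)
```

In this sketch, only the `/private/` section is off limits to Googlebot, while every other bot is shut out of the whole site.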

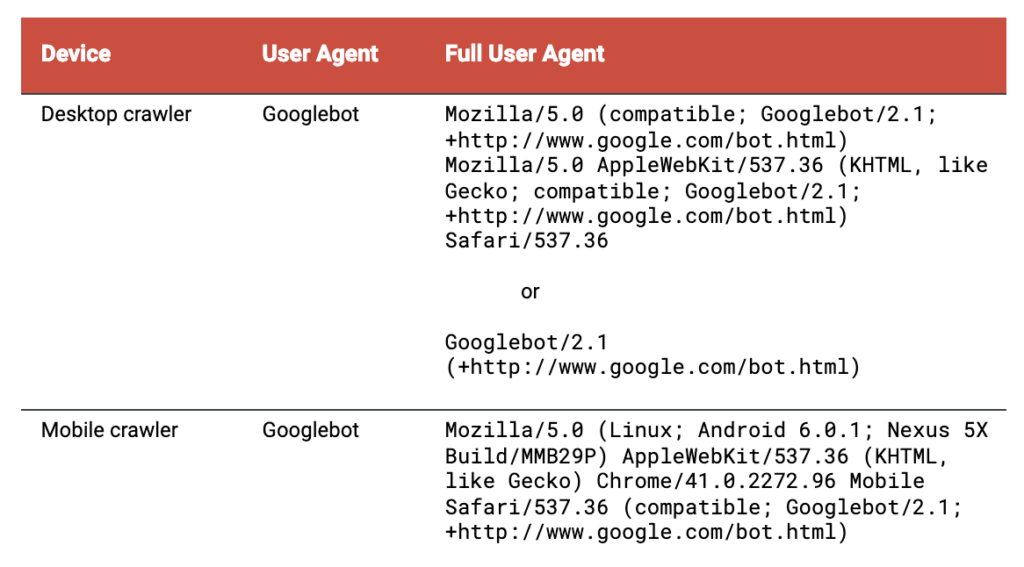

When providing instructions for bots, keep in mind that Google regularly crawls with the following bots:

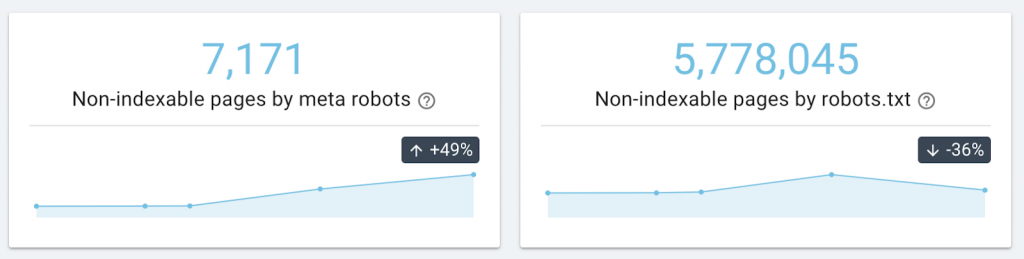

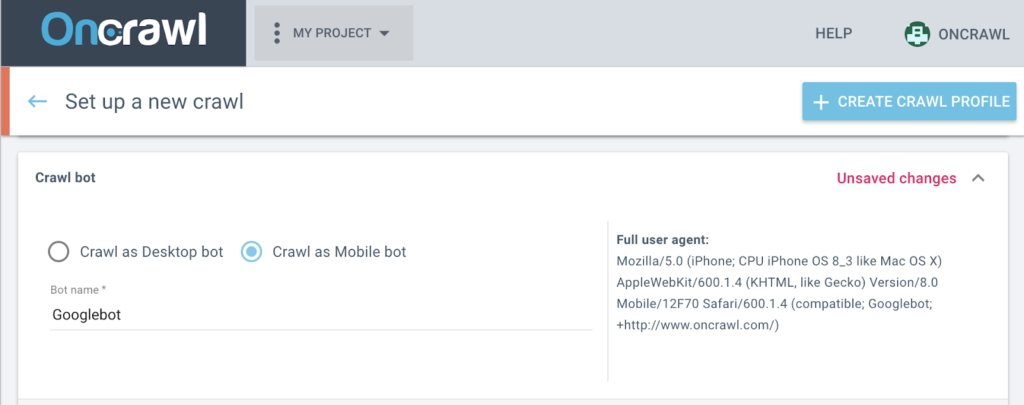

You can see how your site’s robots.txt file targets these bots by using an SEO crawler that respects directives to bots and running a crawl with “Googlebot” as the bot user agent name.

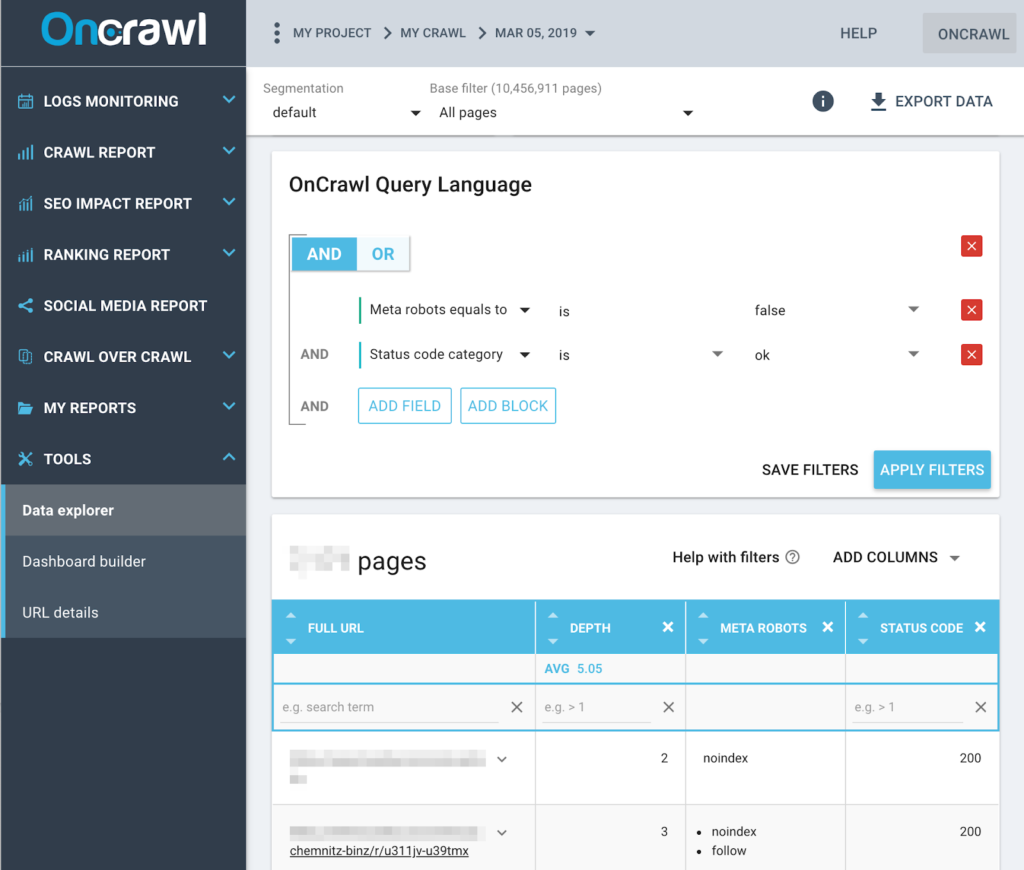

Auditing your site for each page’s meta robots instructions is also straightforward: pages that should appear on Google must allow Googlebots to crawl them.
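
As a rough illustration of such an audit, the sketch below reads the robots meta tag from a page's HTML, combines it with the value of an `X-Robots-Tag` header, and reports whether the page asks to be kept out of the index. The class and function names are hypothetical, and the sketch makes simplifying assumptions: it only looks for `noindex` and `none`, and it ignores header values that are scoped to a specific user agent.

```python
from html.parser import HTMLParser


class MetaRobotsParser(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        name = (attributes.get("name") or "").lower()
        if name in ("robots", "googlebot"):
            content = attributes.get("content") or ""
            self.directives.update(
                part.strip().lower() for part in content.split(",") if part.strip()
            )


def blocks_indexing(html: str, x_robots_tag: str = "") -> bool:
    """Return True if the page or its header carries noindex (or none)."""
    parser = MetaRobotsParser()
    parser.feed(html)
    parser.close()
    header_directives = {
        part.strip().lower() for part in x_robots_tag.split(",") if part.strip()
    }
    directives = parser.directives | header_directives
    return "noindex" in directives or "none" in directives


# Hypothetical pages, for illustration only.
BLOCKED_PAGE = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
OPEN_PAGE = '<html><head><meta name="robots" content="index, follow"></head></html>'

print(blocks_indexing(BLOCKED_PAGE))          # meta tag says noindex
print(blocks_indexing(OPEN_PAGE))             # nothing blocks this page
print(blocks_indexing(OPEN_PAGE, "noindex"))  # the header blocks it instead
```

Running a check like this over the pages you expect to rank is one way to catch a stray `noindex` before it costs you traffic.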

Monitor server performance

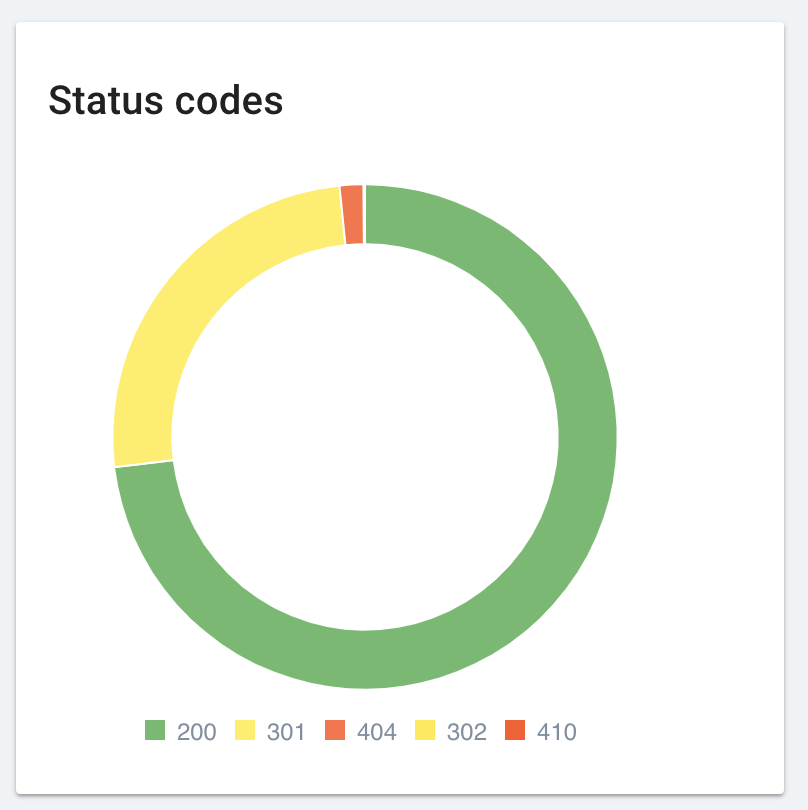

Sites with page and server errors are effectively unavailable to bots (and users!) while the error is present. Recurring or systematic errors can also have a negative effect on SEO in general.

You can monitor your website’s errors through regular crawls, then correct the pages that return an HTTP status in the 400s or 500s.
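
If your crawler exports a list of URLs with their HTTP status codes, sorting out the pages to fix takes only a few lines. The sketch below assumes a hypothetical export in the form of `(url, status)` pairs; the URLs and the `group_errors` helper are made up for illustration.

```python
from collections import defaultdict


def group_errors(crawl_results):
    """Group URLs by error class: 4xx (client error) and 5xx (server error)."""
    errors = defaultdict(list)
    for url, status in crawl_results:
        if 400 <= status < 500:
            errors["client_error"].append(url)
        elif 500 <= status < 600:
            errors["server_error"].append(url)
    return dict(errors)


# Hypothetical crawl export, for illustration only.
CRAWL_RESULTS = [
    ("https://example.com/", 200),
    ("https://example.com/old-page", 404),
    ("https://example.com/checkout", 500),
    ("https://example.com/moved", 301),
    ("https://example.com/members", 403),
]

errors_by_class = group_errors(CRAWL_RESULTS)
for error_class, urls in errors_by_class.items():
    print(error_class, urls)
```

Pages with a 200 or a redirect status are left out of the report, so what remains is the list of pages that need attention.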

Design site architecture carefully

Site architecture designates the way a visitor, whether a user or a bot, can move from page to page using links on a website. This includes not only the links in your navigational menu, but also the links in the content of the page and all of the links in the footer.

Good site architecture design considers the following standards:

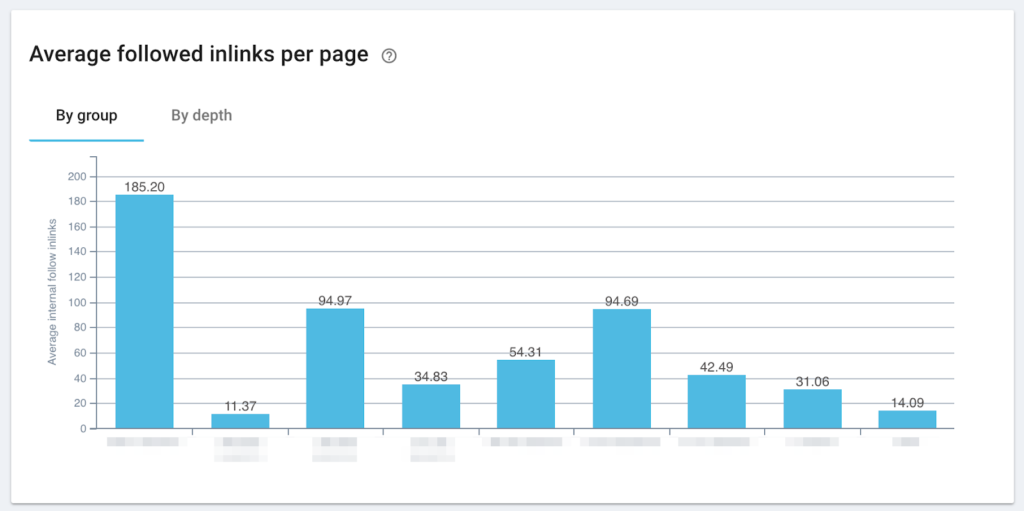

- Create more links to the most important pages

- Make sure every page that exists has at least one link pointing to it

- Reduce the number of clicks required to get from the home page to other pages

These three standards are based on how search engine crawlers behave:

Links from other pages on the same website help search engines establish the relative importance of a page on the website. This helps establish content as good content. In short, a page with more links pointing to it is often more important than a page with very few links pointing to it.

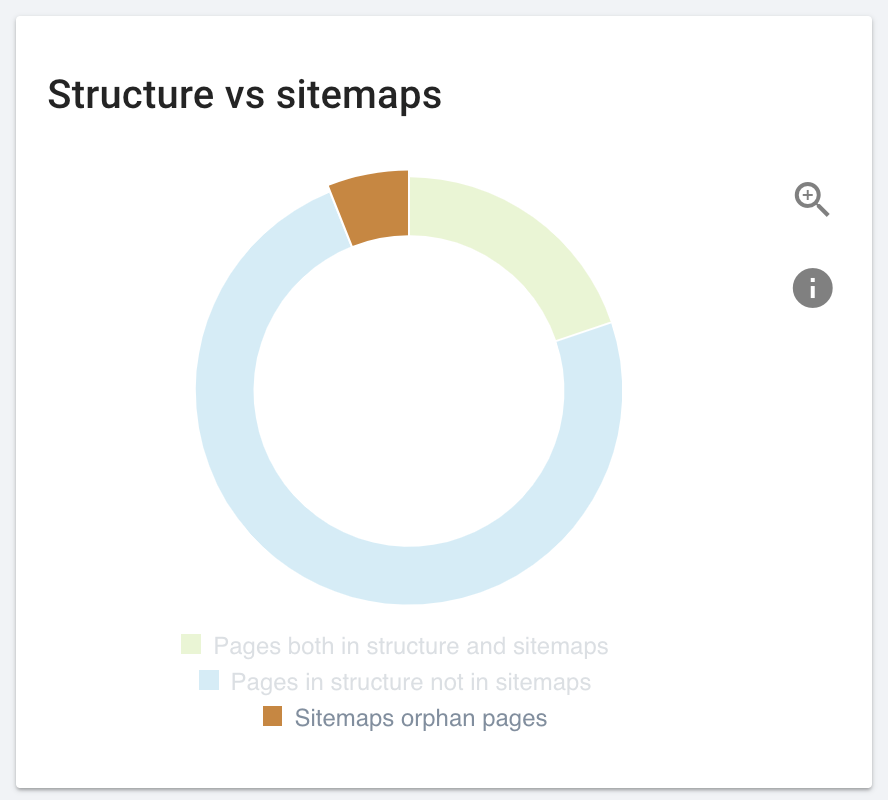

At the extreme end of the scale of unimportance is the orphan page, or a page with no links to it. Unless there are outside links to orphan pages, these pages are invisible to search engines. The key to making them crawlable is adding links to them from pages that are already part of your site’s architecture.
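
Both ideas, counting internal links and spotting orphan pages, can be sketched with a simple link map. The example below assumes you have two hypothetical inputs: a dictionary of which pages link to which (for instance from a crawl) and a list of pages known to exist (for instance from a sitemap). All names and URLs are made up for illustration.

```python
from collections import Counter


def count_inlinks(links):
    """Count, for each page, how many other pages link to it.

    links maps a page URL to the list of URLs it links to.
    """
    counts = Counter()
    for source, targets in links.items():
        for target in set(targets):  # count each linking page once
            if target != source:     # ignore self-links
                counts[target] += 1
    return counts


def find_orphans(known_pages, links, entry_points=("/",)):
    """Return known pages that no other page links to, excluding entry points."""
    inlinks = count_inlinks(links)
    return sorted(
        page
        for page in known_pages
        if page not in entry_points and inlinks[page] == 0
    )


# Hypothetical site, for illustration only.
LINKS = {
    "/": ["/products", "/blog"],
    "/products": ["/", "/products/widget"],
    "/blog": ["/", "/products/widget"],
    "/products/widget": ["/"],
}
KNOWN_PAGES = ["/", "/products", "/blog", "/products/widget", "/old-landing-page"]

inlink_counts = count_inlinks(LINKS)
orphans = find_orphans(KNOWN_PAGES, LINKS)
print(inlink_counts.most_common())
print(orphans)
```

Here `/old-landing-page` exists but receives no internal links, so it shows up as an orphan until another page links to it.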

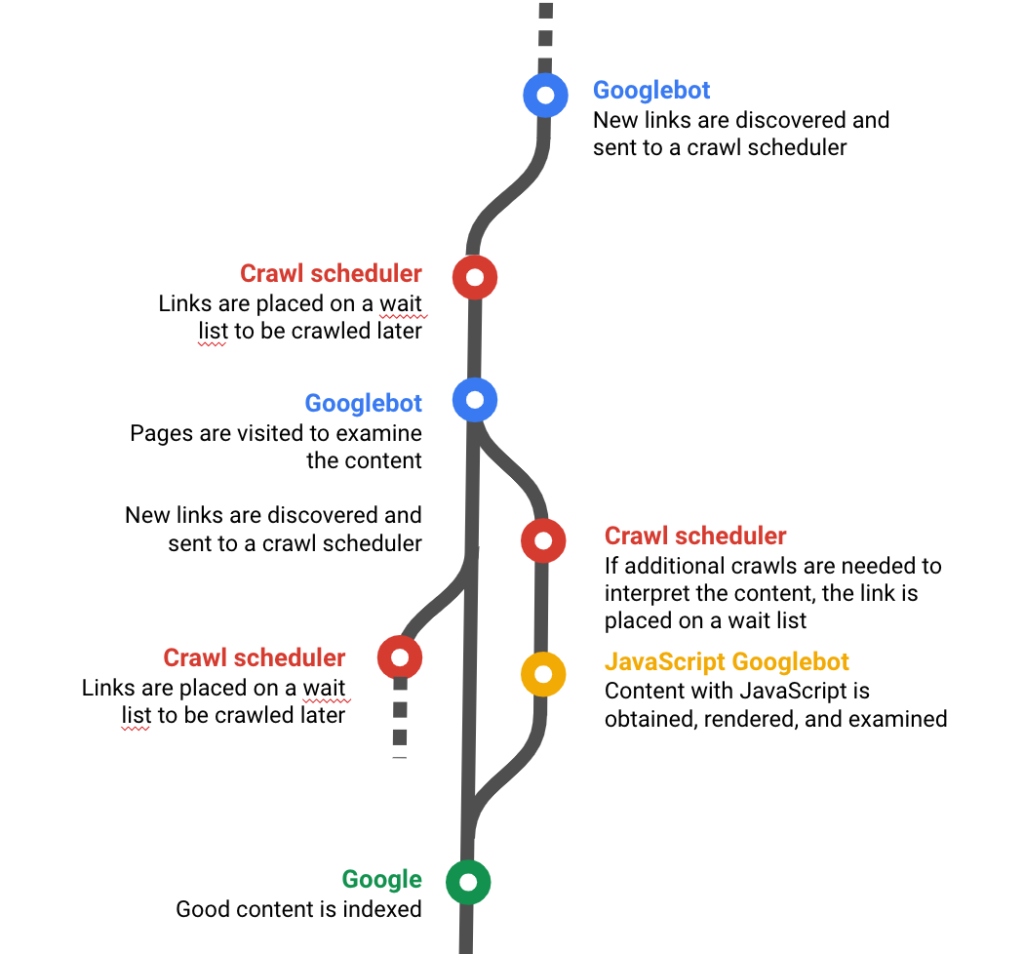

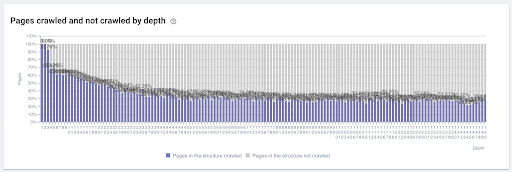

Because of the gaps between when a page is discovered, when it is crawled and when links on the new page are crawled, pages that are far from key site entry points (notably: the home page) can take a long time to be crawled by search engines. The distance from the home page is referred to as page depth and can be reduced by adding links to deep pages from category pages and other pages that are close to the home page.
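
Page depth can be computed as the smallest number of clicks needed to reach each page from the home page, which is a breadth-first walk over your internal links. The sketch below assumes a hypothetical link map of the same shape a crawl might produce; the `page_depths` helper and the URLs are made up for illustration.

```python
from collections import deque


def page_depths(links, home="/"):
    """Return the minimum number of clicks from home to each reachable page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical site where a product is buried behind pagination.
LINKS_BEFORE = {
    "/": ["/category"],
    "/category": ["/category/page-2"],
    "/category/page-2": ["/product-a"],
}

# Same site after adding a link to the product from the category page.
LINKS_AFTER = {
    "/": ["/category"],
    "/category": ["/category/page-2", "/product-a"],
    "/category/page-2": ["/product-a"],
}

depth_before = page_depths(LINKS_BEFORE)["/product-a"]
depth_after = page_depths(LINKS_AFTER)["/product-a"]
print(depth_before, depth_after)
```

In this example a single extra link from the category page brings the product one click closer to the home page. Pages that are missing from the result entirely cannot be reached from the home page at all.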

Use web technology that is accessible to search engine bots

There’s a gap between what modern browsers do and what Google does right now.

— Martin Splitt (Google Webmaster Trends Analyst) / Oct. 30 2018 Google Webmaster hangout

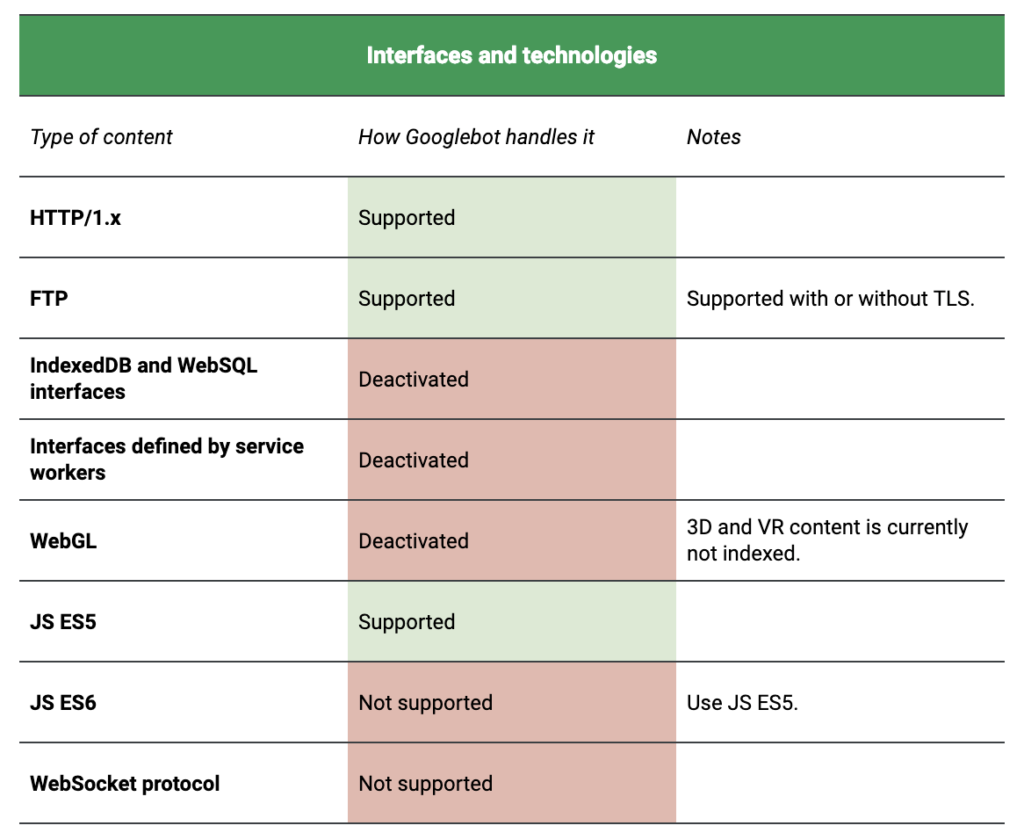

The technology used by web crawlers to access page content is currently based on Chrome 41 (M41). If you’re using an up-to-date version of Chrome in March 2019, you’re probably on a version of Chrome 72 or Chrome 73. While that’s a big gap, Google says they’re working on closing it.

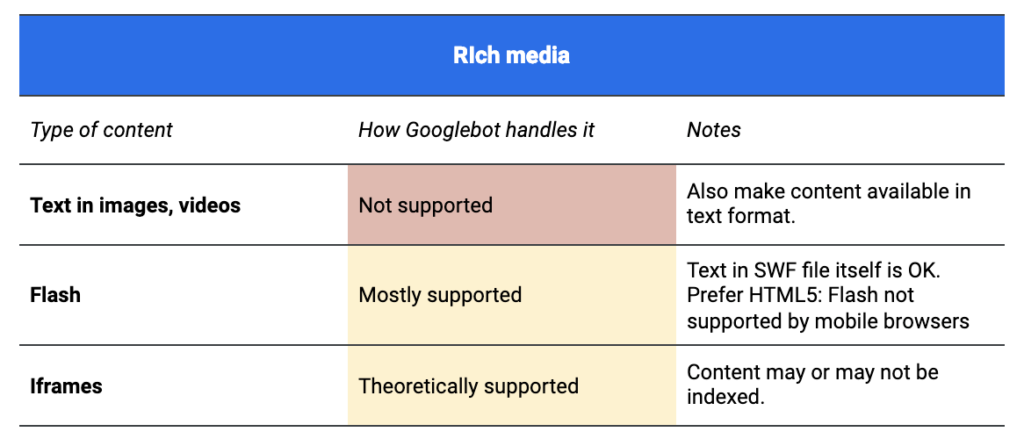

The main differences concern support for rich media and additional technologies. Google provides details through the Chrome documentation and on the documentation page for its web rendering service.

This doesn’t mean that you can’t include rich media content such as Flash, Silverlight, or videos on your site; it just means that any content you embed in these files should also be available in text format or it might not be accessible to all search engines.

— Google Webmaster Support

If you are particularly concerned about JavaScript, you may be interested in Google’s new Webmasters video series, or in Maria Cieslak’s suggestions of how to work with JavaScript without it becoming a hassle.

Make sure key information is rendered ___ and ___

The key words missing from this section title are “first” and “by the server”.

Google recently published an article recommending dynamic rendering, a workaround for getting JavaScript content crawled faster. With dynamic rendering, you provide crawlers with content that has already been prepared by a server (server-side rendering, or SSR), even if you provide users with raw HTML and JavaScript that is then interpreted by their browser (client-side rendering, or CSR).

Dynamic rendering requires your web server to detect crawlers (for example, by checking the user agent). Requests from crawlers are routed to a renderer, requests from users are served normally. Where needed, the dynamic renderer serves a version of the content that’s suitable to the crawler, for example, it may serve a static HTML version. You can choose to enable the dynamic renderer for all pages or on a per-page basis.

— Google Developers’ Guide / last updated Feb. 4, 2019
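
The routing step described in this quote can be sketched in a few lines. This is a simplified illustration, not Google's implementation: the list of crawler tokens is a short, non-exhaustive assumption, the function names are hypothetical, and a production setup would hand crawler requests to a real renderer rather than a prepared string. Keep in mind that user agent strings can be spoofed.

```python
import re

# Illustrative, non-exhaustive list of crawler tokens (an assumption).
CRAWLER_PATTERN = re.compile(r"googlebot|bingbot", re.IGNORECASE)


def is_crawler(user_agent: str) -> bool:
    """Return True if the user agent string looks like a known crawler."""
    return bool(CRAWLER_PATTERN.search(user_agent or ""))


def choose_response(user_agent: str, prerendered_html: str, client_side_shell: str) -> str:
    """Serve static, pre-rendered HTML to crawlers and the normal app to users."""
    return prerendered_html if is_crawler(user_agent) else client_side_shell


# Hypothetical page versions, for illustration only.
PRERENDERED = "<html><body><h1>Blue widget</h1></body></html>"
APP_SHELL = '<html><body><div id="app"></div><script src="/app.js"></script></body></html>'

GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
BROWSER_UA = "Mozilla/5.0 (Windows NT 10.0; Win64; x64) Chrome/72.0 Safari/537.36"

served_to_bot = choose_response(GOOGLEBOT_UA, PRERENDERED, APP_SHELL)
served_to_user = choose_response(BROWSER_UA, PRERENDERED, APP_SHELL)
print(served_to_bot == PRERENDERED, served_to_user == APP_SHELL)
```

The important design point is that both versions carry the same content; only the way it is delivered differs.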

There has been some discussion as to whether this amounts to cloaking, which is subject to penalties: it’s a way of providing non-identical content to users and to bots.

The objective is to ensure that your content can be viewed and interpreted by all visitors, whether users or bots. Some SEOs, such as Jan-Willem Bobbink, argue instead for full SSR for SEO purposes.

If you provide unrendered content, ensure that your main content is available in the basic HTML of the page. Delay rendering for elements that can block content if they are absent, in error, or incomplete, such as CSS and JavaScript.
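
One quick way to test this is to look at the HTML your server sends before any JavaScript runs and check that your key phrases are already in it. The sketch below is a rough approximation under stated assumptions: it extracts text outside of `<script>` and `<style>` elements and compares it against a hypothetical list of phrases. It does not execute JavaScript, and the names and sample pages are made up for illustration.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collect text that appears outside <script> and <style> elements."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self.chunks.append(data.strip())


def missing_from_raw_html(raw_html, key_phrases):
    """Return the key phrases that are absent from the unrendered HTML text."""
    extractor = TextExtractor()
    extractor.feed(raw_html)
    extractor.close()
    text = " ".join(extractor.chunks).lower()
    return [phrase for phrase in key_phrases if phrase.lower() not in text]


# Hypothetical pages, for illustration only.
SERVER_HTML = (
    "<html><body><h1>Blue widget</h1>"
    "<p>Free shipping on all orders.</p>"
    "<script>render()</script></body></html>"
)
CLIENT_SHELL = (
    '<html><body><div id="app"></div>'
    '<script>document.title = "Blue widget"</script></body></html>'
)
KEY_PHRASES = ["Blue widget", "Free shipping"]

missing_on_server_page = missing_from_raw_html(SERVER_HTML, KEY_PHRASES)
missing_on_client_page = missing_from_raw_html(CLIENT_SHELL, KEY_PHRASES)
print(missing_on_server_page)
print(missing_on_client_page)
```

If a phrase only shows up after JavaScript runs, as on the second page here, a bot that does not render the page will not see it.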

You can verify how Google sees your page using the Inspect URL tool, available since January 2019 in the new Google Search Console:

Yay! A new feature is now available in Search Console!

You can now see the HTTP response, page resources, JS logs and a rendered screenshot for a crawled page right from within the Inspect URL tool! Go check it out and let us know what you think! pic.twitter.com/qihtueIbsF

— Google Webmasters (@googlewmc) January 16, 2019

Influencing search engines with a bot-friendly site

If your website is crawl-friendly, you’ve won the first battle in SEO. Crawlable sites can be indexed, and indexed sites can be ranked. And ranked sites bring in leads.

A crawlable site considers bots’ nature and their limitations from its design through its monitoring. It addresses issues such as:

- How bots move from page to page

- How search engines schedule crawls

- How bots access content on a web page

- How sites communicate with bots

- How servers provide content to bot visitors

If you’re interested in attracting visitors, gaining leads through digital marketing, or promoting products and services online, a crawlable site is the first step to success.