Having carried out SEO work across a range of industries, I’ve learned to pick up on common issues, especially on popular CMSs like WordPress, Shopify or Squarespace.

Here I’ve outlined 10 pretty common technical SEO issues you might bump into when optimising a website.

I’m not saying these issues will definitely be problematic for you or your client; context is still very important. There isn’t always a one-size-fits-all solution, but it’s worth being wary of the scenarios outlined below.

1 – Robots.txt file blocking access to Googlebot

This is nothing new to most technical SEOs, but it’s still very easy to neglect checking the robots.txt file, and not just when running a technical audit: it should be a recurring check.

You can use a tool like Search Console (the old version) to review whether Google has access problems, or you can crawl your site as Googlebot with a tool like Oncrawl (just select the Googlebot user agent). Oncrawl will obey the robots.txt file unless you tell it otherwise.
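If you want a scripted sanity check alongside the crawl, Python’s standard-library `urllib.robotparser` can tell you whether a user agent may fetch a given URL. This is a minimal sketch, assuming you already have the robots.txt text and a list of URLs to test. Be aware that this parser uses simple prefix matching and doesn’t implement Google’s `*` and `$` wildcards or longest-match precedence, so treat it as a first pass rather than an exact replica of Googlebot.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt text disallows for user_agent."""
    parser = RobotFileParser()
    # parse() takes an iterable of lines and marks the rules as loaded,
    # so can_fetch() gives real answers instead of defaulting to "blocked".
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


robots = """\
User-agent: *
Disallow: /checkout/
"""
pages = ["https://example.com/", "https://example.com/checkout/basket"]
print(blocked_urls(robots, pages))  # ['https://example.com/checkout/basket']
```

To test the live file instead of pasted text, `RobotFileParser` also provides `set_url()` and `read()`.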

Export the crawl results and compare them against a known list of pages on your site to check there are no crawler blind spots.
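The comparison itself is just a set difference. A minimal sketch, assuming you’ve already loaded both lists (for example from a CMS export and the crawler’s CSV export) as plain URL strings:

```python
def crawler_blind_spots(known_urls, crawled_urls):
    """Return known URLs that never appeared in the crawl export."""

    def normalise(url):
        # Trailing slashes and stray whitespace are the usual mismatch between
        # a CMS page list and a crawler export; adjust to suit your data.
        return url.strip().rstrip("/")

    crawled = {normalise(url) for url in crawled_urls}
    return sorted(url for url in known_urls if normalise(url) not in crawled)


known = ["https://example.com/", "https://example.com/rooms/", "https://example.com/offers"]
crawled = ["https://example.com", "https://example.com/rooms"]
print(crawler_blind_spots(known, crawled))  # ['https://example.com/offers']
```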

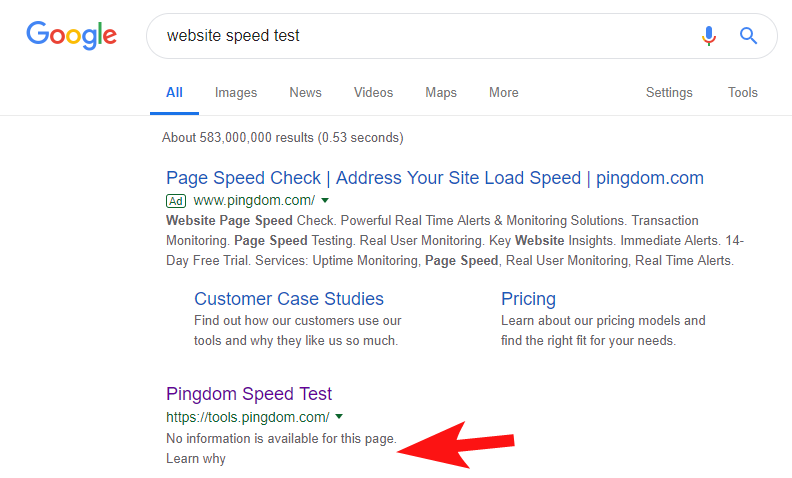

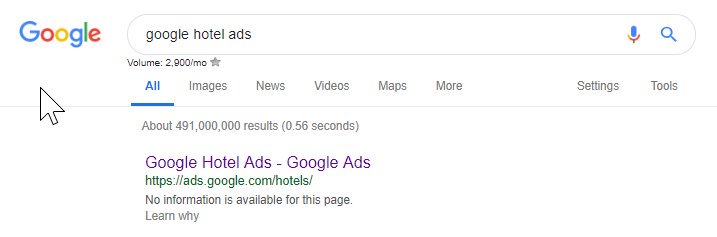

To show that this does still happen quite often, and to some fairly big sites, a few weeks ago I noticed Pingdom’s Speed Test tool was blocked within Google.

Looking at their robots file (and then trying to crawl their page with Oncrawl as Googlebot) confirmed my suspicion that they were blocking access to their site.

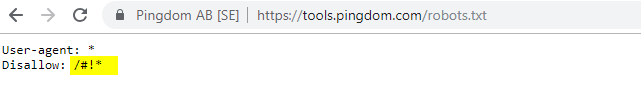

The guilty robots.txt file is shown below:

I reached out to them with an “FYI” and had no response, but a few days later I saw that all was back to normal. Phew – I could sleep easily again!

In their case, it seemed that whenever you scanned your site as part of their speed audit, it created a URL containing the hash character highlighted in the robots file above.

Perhaps these URLs were getting crawled and even indexed somehow, and they wanted to control that (which would be very understandable). They probably didn’t fully test the potential impact, though it was likely minimal in the end.

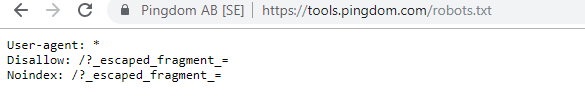

Here’s their current robots.txt file for anyone who’s interested.

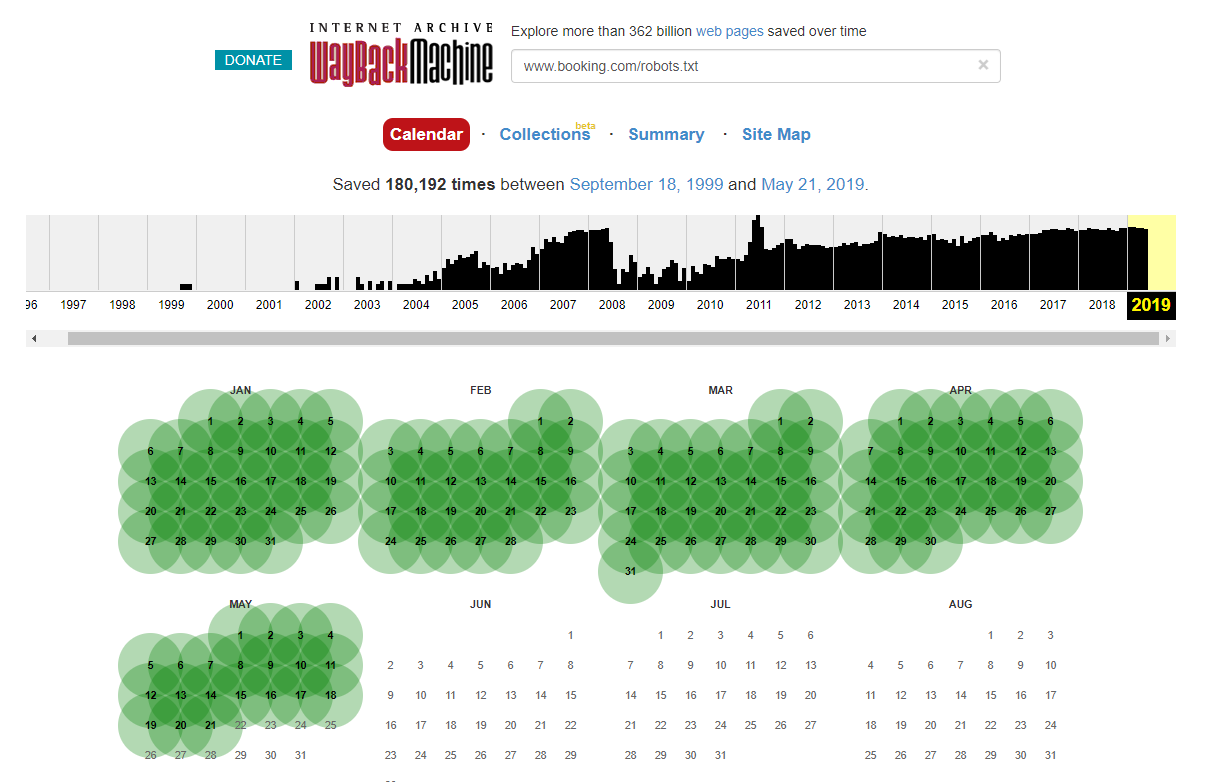

It’s worth noting that in some cases you can view historic robots.txt changes using the Internet Archive’s Wayback Machine. In my experience this works best on bigger sites, as you can imagine: they’re crawled far more often by the Wayback Machine’s archiver.
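The Wayback Machine also exposes a CDX query API, which is handy for listing every archived version of a robots.txt file rather than clicking through the calendar. This is a minimal sketch; the endpoint and the `url`, `output`, `fl` and `collapse` parameters are the public CDX server API as I understand it, so check the Internet Archive’s current documentation before relying on them.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

CDX_ENDPOINT = "https://web.archive.org/cdx/search/cdx"


def robots_history_query(domain):
    """Build a CDX query listing archived captures of a domain's robots.txt."""
    params = {
        "url": f"{domain}/robots.txt",
        "output": "json",
        "fl": "timestamp,statuscode,digest",
        # Collapsing on the content digest skips consecutive identical captures,
        # so each remaining row is (roughly) a version where the file changed.
        "collapse": "digest",
    }
    return f"{CDX_ENDPOINT}?{urlencode(params)}"


def snapshot_links(rows, domain):
    """Turn CDX JSON rows (the first row is the header) into raw-snapshot URLs."""
    if not rows:
        return []
    header, *records = rows
    ts_index = header.index("timestamp")
    # The "id_" suffix asks the Wayback Machine for the original, unmodified file.
    return [f"https://web.archive.org/web/{row[ts_index]}id_/{domain}/robots.txt" for row in records]


def fetch_robots_history(domain):
    """Network call: query the CDX API and return snapshot URLs for the domain."""
    with urlopen(robots_history_query(domain), timeout=30) as response:
        body = response.read().decode("utf-8")
    return snapshot_links(json.loads(body) if body.strip() else [], domain)
```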

It’s not the first time I’ve seen a live robots.txt file out in the wild causing havoc in the SERPs, and it definitely won’t be the last. It’s such a simple thing to neglect (it’s literally one file, after all), but checking it should be part of every SEO’s ongoing work schedule.

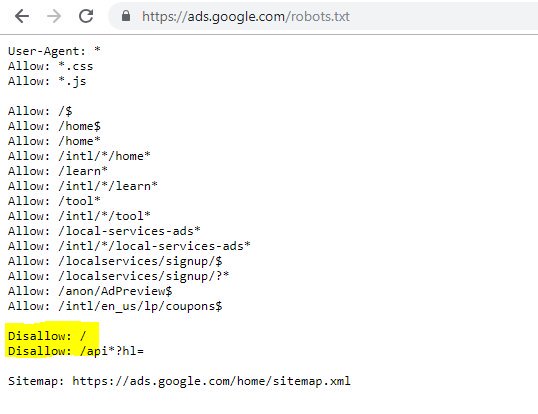

From the above you can see that even Google sometimes messes up its robots file, blocking itself from accessing its own content. This could have been intentional, but judging by the language of the robots file below, I somehow doubt it.

The highlighted Disallow: / in this case blocked every URL path on the site; it would have been safer to list only the specific sections that shouldn’t be crawled.
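The difference between a blanket rule and a targeted one is easy to demonstrate. A minimal sketch, again using Python’s `urllib.robotparser` (with its prefix-only matching); the `/admin/` and `/internal-search/` paths are hypothetical stand-ins for the sections you’d actually want to keep out of the crawl:

```python
from urllib.robotparser import RobotFileParser


def can_googlebot_fetch(robots_txt, url):
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch("Googlebot", url)


blanket = "User-agent: *\nDisallow: /\n"  # blocks every path
# Hypothetical targeted rules: only the sections that genuinely shouldn't be crawled.
targeted = "User-agent: *\nDisallow: /admin/\nDisallow: /internal-search/\n"

for url in ["https://example.com/", "https://example.com/blog/post", "https://example.com/admin/login"]:
    # Prints the URL, then whether each rule set lets Googlebot fetch it.
    print(url, can_googlebot_fetch(blanket, url), can_googlebot_fetch(targeted, url))
```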

2 – Domain Configuration Issues at the DNS Level

This is a surprisingly common one, but usually it’s a quick fix: one of those low-cost, *potentially* high-impact SEO changes that technical SEOs love.

With SSL implementations I often find that the non-WWW version of the domain hasn’t been configured correctly: it 302 redirects to the next URL, forming a chain, or in the worst case doesn’t load at all.

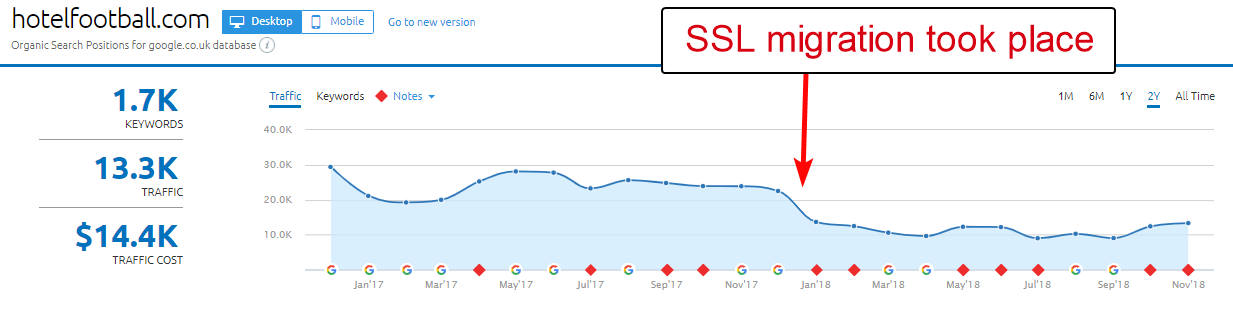

A good case in point here is that of the Hotel Football website.

They underwent an SSL migration early last year, which didn’t turn out well for them, judging from the SEMrush domain overview report above.

I’d noticed this one a while back, as I’ve worked a lot in the travel and hospitality industry, and with a keen love of football I was interested to see what their website was like (and how it was doing organically, of course!).

This was actually very easy to diagnose – the site had a ton of extremely good backlinks, all pointing to the non-SSL, WWW domain at http://www.hotelfootball.com/

If you try accessing that URL, though, it doesn’t load. Oops. And it’s been like this for at least 18 months now. I reached out to the agency that manages the site via Twitter to let them know, but had no response.

All they need to do here is make sure the DNS zone settings are correct, with an “A” record for the “WWW” version of the domain pointing to the correct IP address (a CNAME would also work). That way the WWW hostname will resolve.
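You can confirm whether both hostnames resolve, before and after a DNS change, with a few lines of Python. A minimal sketch using the standard library’s `socket.getaddrinfo` (Python 3.9+ for `str.removeprefix`); an empty list for a hostname means it didn’t resolve from wherever you run the script.

```python
import socket


def hostname_variants(domain):
    """Return the bare and www hostnames for a domain."""
    bare = domain.lower().removeprefix("www.")
    return [bare, f"www.{bare}"]


def check_resolution(domain):
    """Map each hostname variant to the IP addresses it resolves to ([] if none)."""
    results = {}
    for host in hostname_variants(domain):
        try:
            infos = socket.getaddrinfo(host, 80, proto=socket.IPPROTO_TCP)
            # Each entry's sockaddr tuple starts with the IP address.
            results[host] = sorted({info[4][0] for info in infos})
        except socket.gaierror:
            # The name didn't resolve: no A/AAAA record (or CNAME target) was found.
            results[host] = []
    return results
```

Running `check_resolution("hotelfootball.com")` would show at a glance whether the WWW variant is the one coming back empty.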

The main reason this one can take so long to fix is that it can be tricky to get access to a site’s domain management panel: passwords may have been lost, or the fix simply isn’t seen as a high priority.

Sending instructions to fix to a non-techy person who holds the keys to the domain name isn’t always a great idea either.

I’d be very keen to see the organic impact if and when they make the above adjustment, especially considering all the backlinks the non-SSL WWW domain has built up since the hotel was launched by former Manchester United footballers Gary Neville, Ryan Giggs and company.

Whilst they do rank #1 in Google for their hotel name (as you’d imagine), they don’t appear to rank strongly for any of their more competitive non-branded search terms (they’re currently in position 10 on Google for “hotel near Old Trafford”).

They scored a bit of an own goal here, but fixing this issue might go at least some way towards solving that.


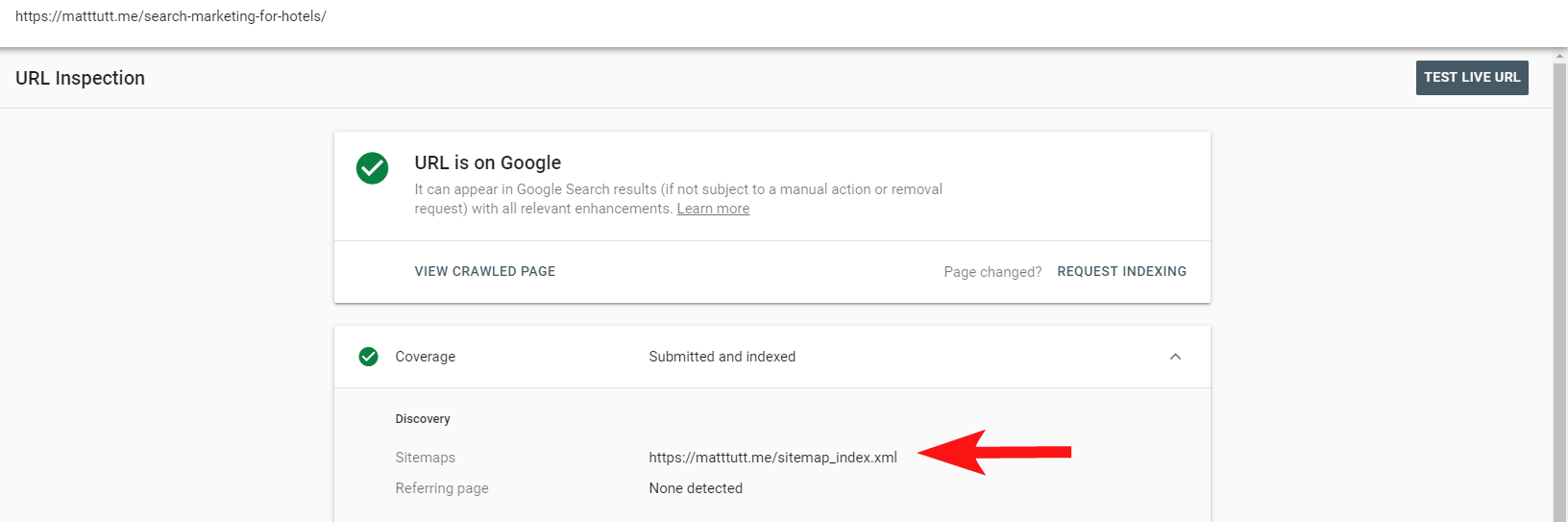

3 – Rogue Pages within the XML Sitemap

Again, this is a pretty basic one but it’s strangely common: when reviewing a site’s XML sitemap (which is nearly always at either domain.com/sitemap.xml or domain.com/sitemap_index.xml), you may find pages listed that really have no need to be indexed.

Typical culprits include hidden thank you pages (thanks for submitting a contact form), PPC landing pages which may be causing duplicate content issues, or other forms of pages/posts/taxonomies that you’ve already noindexed elsewhere.

Including them again in the XML sitemap can send conflicting signals to search engines. You should only list the pages you want them to find and index; that’s the main point of the sitemap.

You can now use the URL Inspection tool in Search Console to find out whether a page has or hasn’t been included in a site’s XML sitemap.

If you’ve got a fairly small site you can probably just review your XML sitemap manually in your browser; otherwise, download it and compare it against a full crawl of your indexable URLs.
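For bigger sites the comparison is easy to script. A minimal sketch using Python’s built-in XML parser, assuming the standard sitemaps.org namespace and a list of indexable URLs from your crawler export. For a sitemap index file it returns the child sitemap URLs, which you’d then fetch and parse in turn.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "{http://www.sitemaps.org/schemas/sitemap/0.9}"


def parse_sitemap(xml_text):
    """Return ("urlset" or "sitemapindex", [loc, ...]) for a sitemap document."""
    root = ET.fromstring(xml_text)
    kind = root.tag.replace(SITEMAP_NS, "")
    locs = [loc.text.strip() for loc in root.iter(f"{SITEMAP_NS}loc") if loc.text]
    return kind, locs


def rogue_sitemap_urls(sitemap_urls, indexable_urls):
    """URLs listed in the sitemap that the crawl didn't mark as indexable."""
    indexable = set(indexable_urls)
    return [url for url in sitemap_urls if url not in indexable]
```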

You can often catch this kind of low-quality, low-value content by doing a site:domain.com search in Google to return everything that’s been indexed.

It’s worth noting that these results can include old content and shouldn’t be relied on to be 100% up to date, but it’s an easy check to make sure there aren’t boatloads of pages bloating your site and eating up crawl budget.

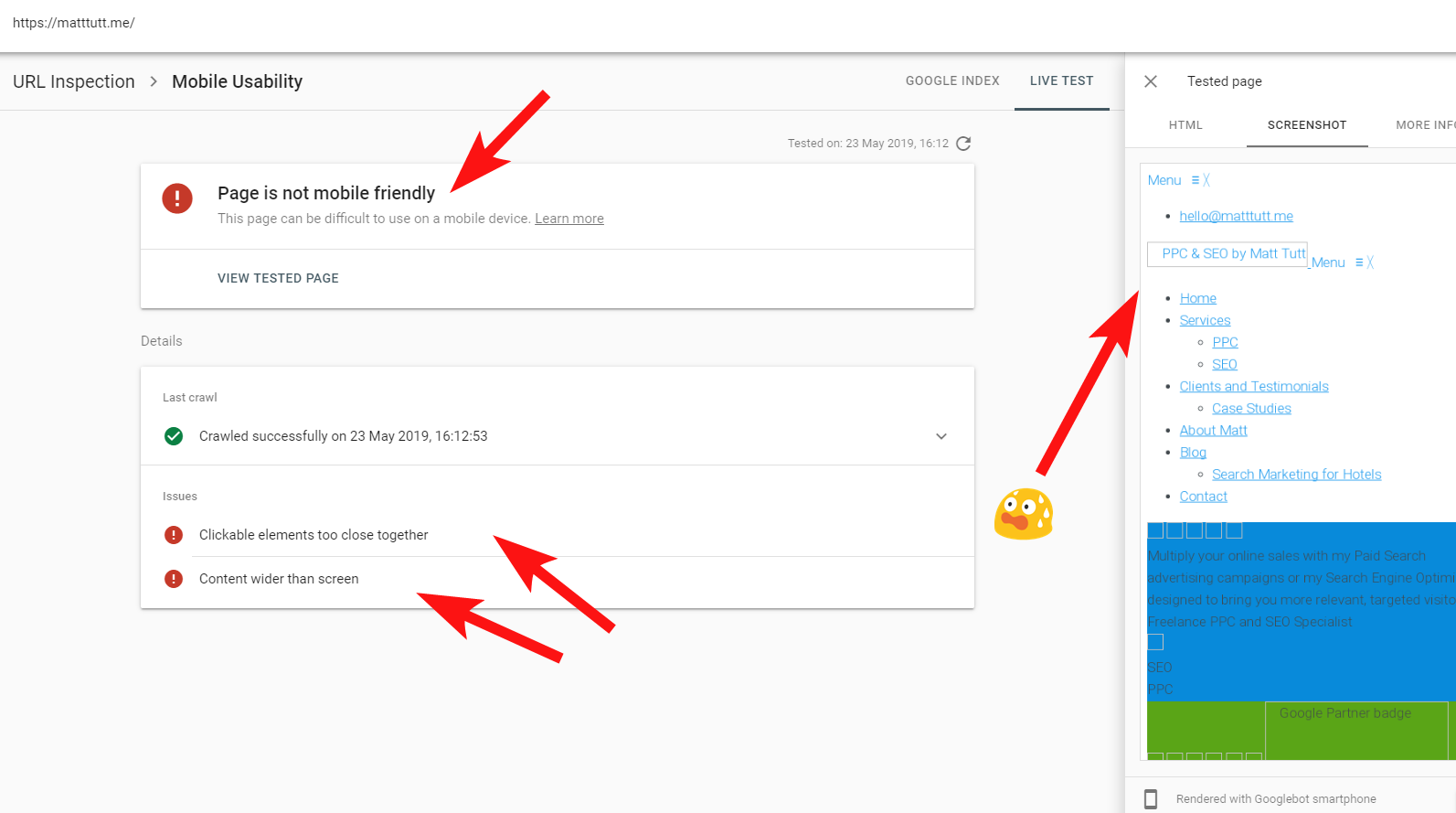

4 – Issues with Googlebot Rendering your Content

This one is worthy of an entire article in itself, and I personally feel like I’ve spent a lifetime playing with Google’s Fetch and Render tool.

A lot has already been said about this (and about JavaScript) by some very capable SEOs, so I won’t delve into it too deeply, but checking how Googlebot renders your site is always worth your time.

Running a few checks via online tools can help uncover Googlebot blind spots (areas of the site it can’t access), issues with your hosting environment, problematic JavaScript burning up resources, and even screen-scaling problems.

These tools are normally quite helpful at diagnosing the issue (Google even tells you when a resource is blocked by your robots file, for example), but sometimes you may find yourself going around in circles.

To show a live example of a problematic site, I’m going to shoot myself in the foot and reference my own personal website, and a particularly frustrating WordPress theme I’m using.

Sometimes when running a URL Inspection from Search Console, I get the “Page is not mobile friendly” warning (see below).

Clicking the More Info tab (top right) shows a list of resources that Googlebot couldn’t access, mainly CSS and image files.

This is likely because Googlebot can’t always put its full “energy” into rendering the page: sometimes Google is wary of crashing my site (which is kind of them), and other times the test may be limited because Google has already used a lot of resources fetching and rendering my site.

Because of this, it’s worth running these tests a few times at spread-out intervals to get a truer picture. I also recommend checking server logs, if you can, to see how Googlebot accessed (or didn’t access) your site content.

404s or other error statuses for these resources would clearly be a bad sign, especially if they’re consistent.
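A minimal sketch of that log check, assuming your server writes the Apache/Nginx “combined” log format. It counts CSS, JavaScript, image and font requests from user agents containing “Googlebot” that returned a 4xx or 5xx status. User-agent strings can be spoofed, so for anything important, verify that the IPs genuinely belong to Google (Google documents a reverse DNS check for this).

```python
import re
from collections import Counter

# Apache/Nginx "combined" log format (an assumption: adjust to your server's format).
LOG_PATTERN = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+)[^"]*" (?P<status>\d{3}) \S+ '
    r'"(?P<referrer>[^"]*)" "(?P<agent>[^"]*)"'
)
RESOURCE_EXTENSIONS = (".css", ".js", ".png", ".jpg", ".jpeg", ".gif", ".svg", ".webp", ".woff", ".woff2")


def failed_googlebot_resources(lines):
    """Count (path, status) pairs for resource requests by Googlebot that failed."""
    failures = Counter()
    for line in lines:
        match = LOG_PATTERN.match(line)
        if not match or "Googlebot" not in match["agent"]:
            continue
        path = match["path"]
        status = int(match["status"])
        # Ignore query strings when checking the file extension.
        if path.split("?", 1)[0].lower().endswith(RESOURCE_EXTENSIONS) and status >= 400:
            failures[(path, status)] += 1
    return failures
```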

In my case, Google calls the site out for not being mobile friendly, mainly because certain CSS files fail during the render, which can rightly sound alarm bells.

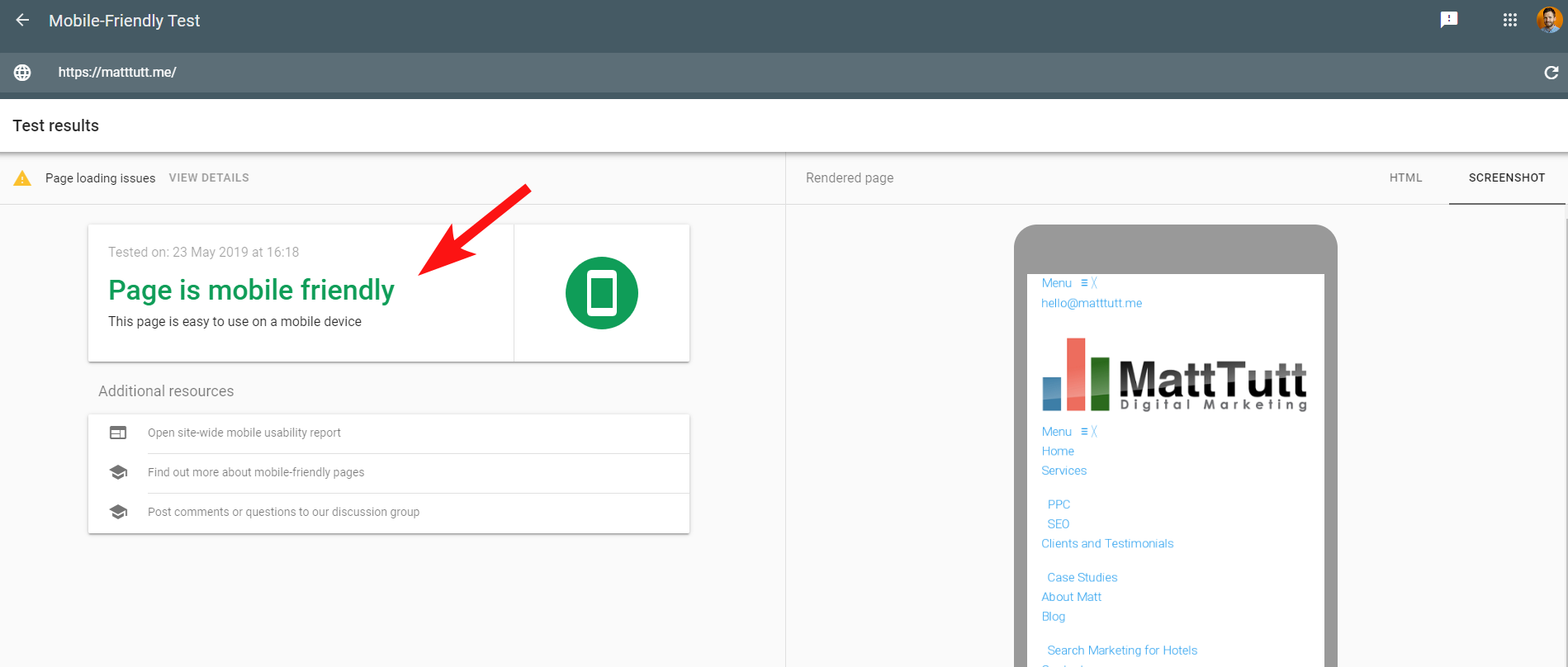

To make matters more confusing, when running Google’s Mobile-Friendly Test, or any other third-party tool, no issues are detected: the site is mobile friendly.

These conflicting messages from Google can be tricky for SEOs and web developers to decode. To understand more, I reached out to John Mueller, who suggested I check my web host (no issues) and noted that the CSS file can indeed be cached by Google.

Search Console uses an older Web Rendering Service (WRS) than the Mobile-Friendly Test, so nowadays I tend to give more weight to the latter.

With Google announcing a newer Googlebot with the latest rendering capabilities, this could all change, so it’s worth keeping up to date on which tools are best for rendering checks.

Another tip: if you want to see a full scrollable render of a page, switch to the HTML tab in Google’s mobile testing tool, press CTRL+A to select all the rendered HTML code, then copy and paste it into a text editor and save it as an HTML file.

Opening that in your browser (fingers crossed, sometimes it depends on the CMS used!) will give you a scrollable render. And the benefit of this is that you can check how any site renders – you don’t need Search Console access.
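If relative CSS and image paths stop the saved file from rendering properly, one workaround (my own suggestion, not part of the original tip) is to add a `<base>` element pointing at the live page so those paths resolve against the real site. A minimal sketch; scripts and CMS-specific assets may still fail, so it won’t rescue every page.

```python
import re
from html import escape
from pathlib import Path


def add_base_tag(rendered_html, page_url):
    """Insert <base href=...> so relative CSS, JS and image paths resolve against the live site."""
    if re.search(r"<base\b", rendered_html, re.IGNORECASE):
        return rendered_html  # the page already declares its own base URL
    base_tag = f'<base href="{escape(page_url, quote=True)}">'
    # Put the tag straight after the opening <head> (but not <header>).
    updated, count = re.subn(
        r"<head\b[^>]*>",
        lambda match: match.group(0) + base_tag,
        rendered_html,
        count=1,
        flags=re.IGNORECASE,
    )
    return updated if count else base_tag + rendered_html


def save_render(rendered_html, page_url, out_path="render.html"):
    """Write the patched HTML to disk so it can be opened in a browser."""
    Path(out_path).write_text(add_base_tag(rendered_html, page_url), encoding="utf-8")
    return out_path
```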

5 – Hacked Sites and Spammy Backlinks

This is quite a fun one to catch, and it can often sneak up on sites running older versions of WordPress or other CMS platforms that need regular security updates.

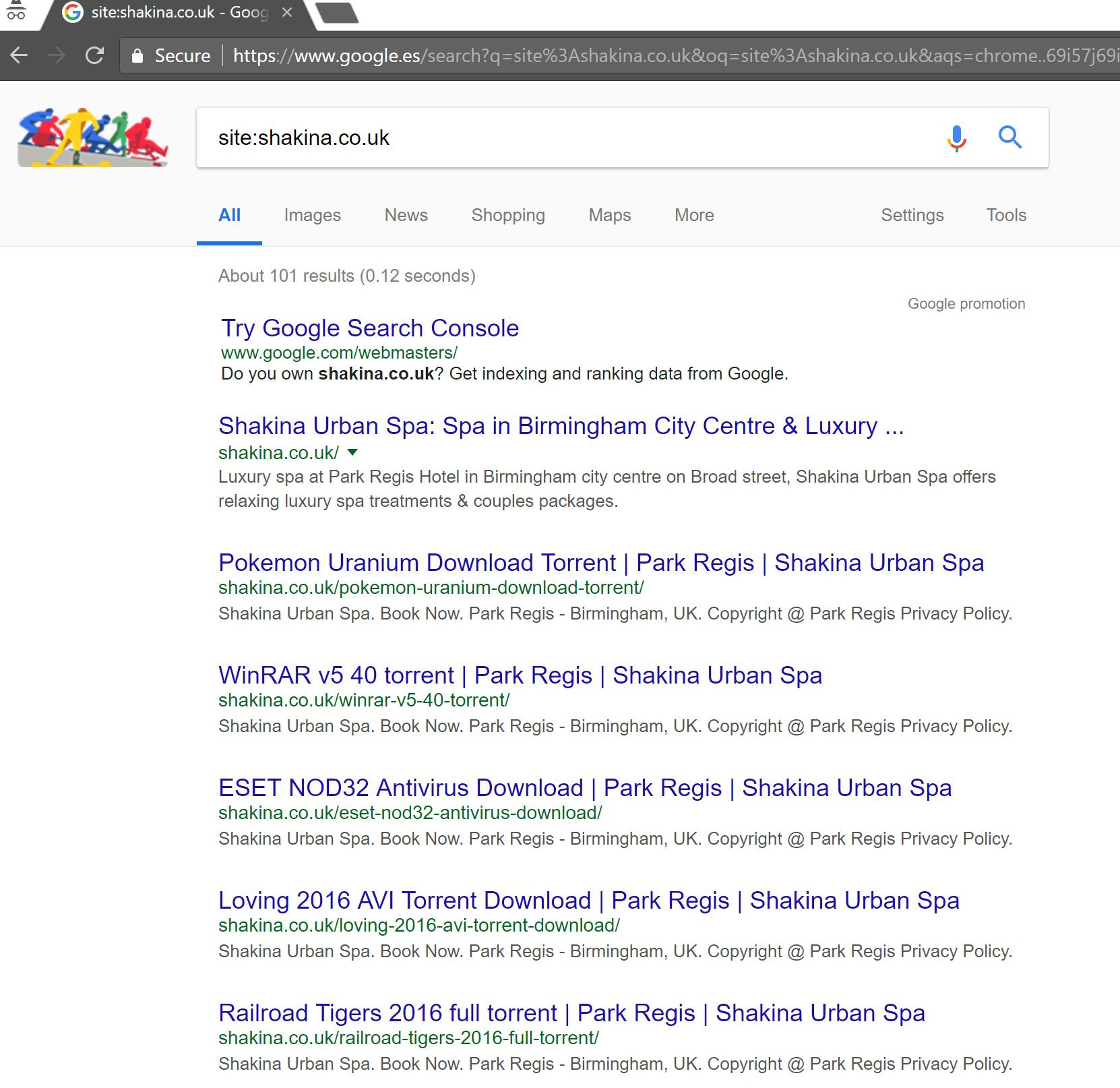

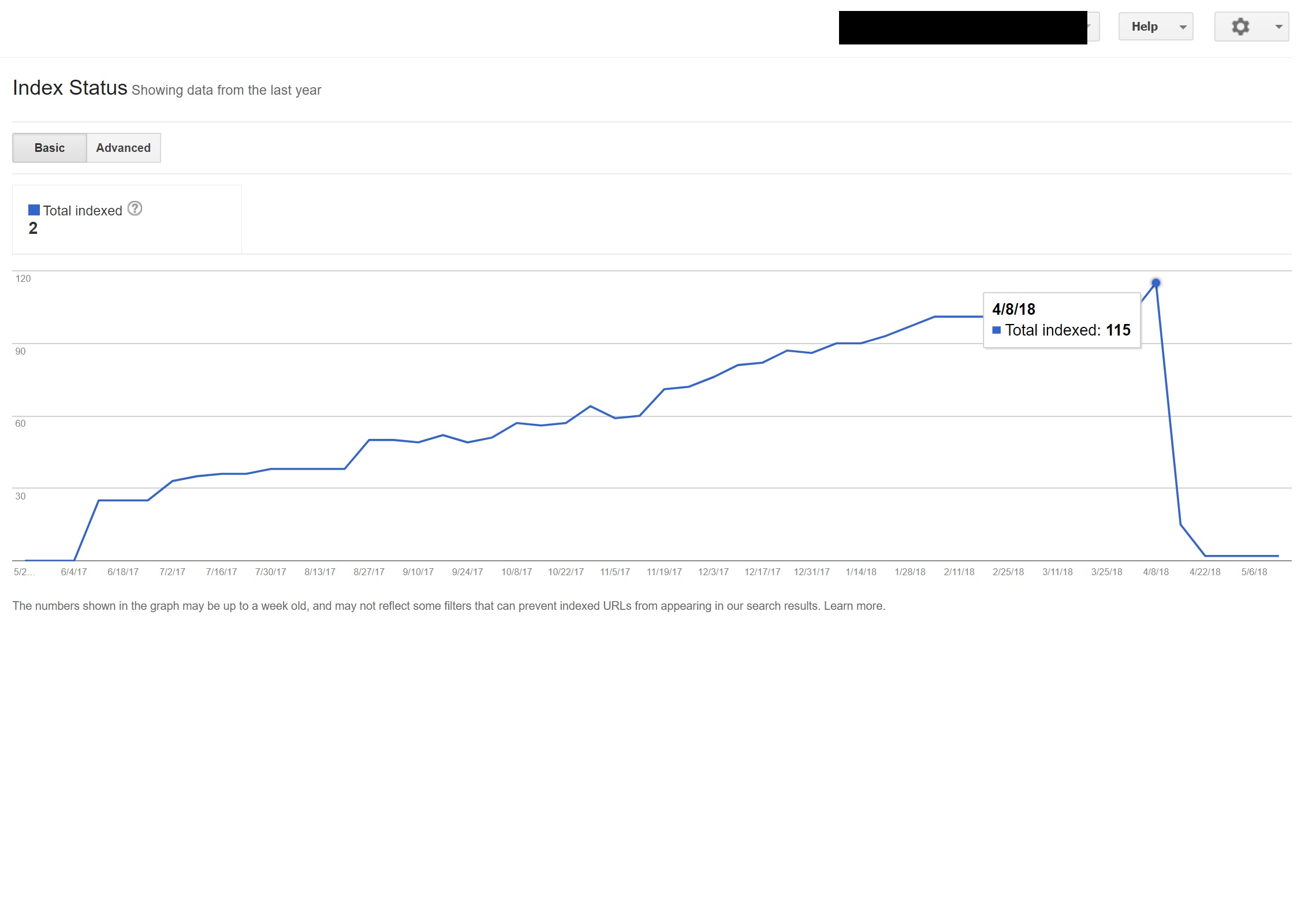

With this client (a beauty spa), I noticed some strange search terms appearing within Search Console.

Surprisingly, they had not only impressions in Search Console but clicks too, meaning something must have been indexed on the domain.

Judging by the queries it was clearly very spammy, and not something the client would want their business associated with.

A simple “site:domain.com” search in Google unearthed hundreds of pages of torrents that the client was supposedly hosting on their site.

Visiting any of those URLs actually returned a 404, yet they were still indexed (I also checked with various user agents, and they all got the same 404 error).

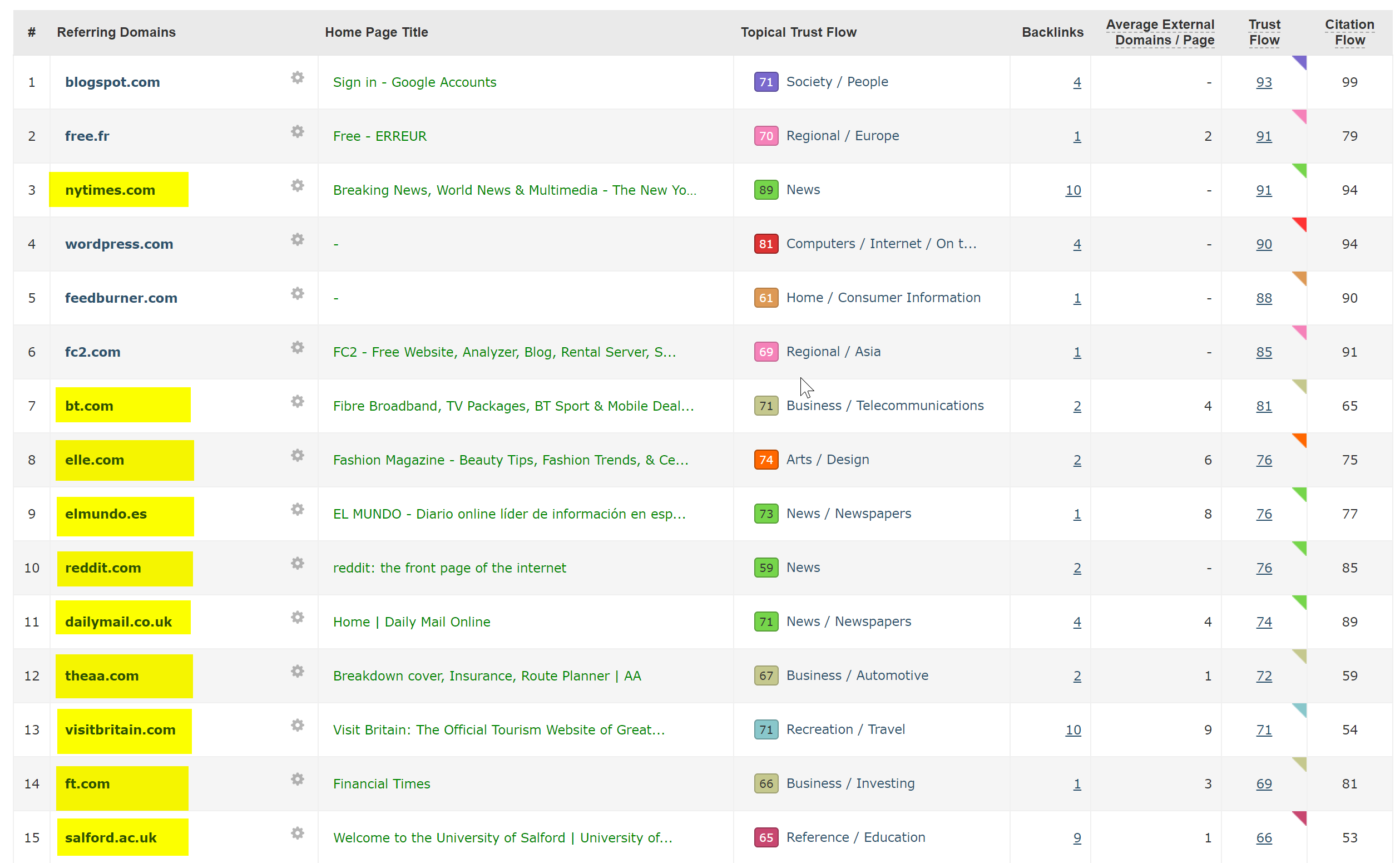

Next, I ran the domain through Majestic’s backlink checker, which returned a lengthy list of very low-quality backlinks pointing to these pages on the client’s site; these were likely helping to get them indexed.

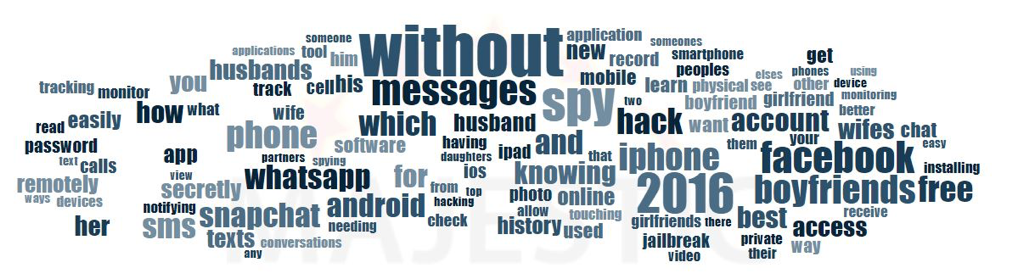

Looking at Majestic’s Anchor Cloud of the backlinks really showed the extent of the problem.

The only fix here was to disavow all those backlinks by domain, and then run a clean sweep of the WordPress installation in the hope of clearing up any code injections, or install a fresh copy of WordPress.
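Google’s disavow file is a plain-text file with one entry per line, where a `domain:` prefix disavows a whole domain and lines starting with `#` are comments. A minimal sketch that turns an exported list of spammy referring URLs (for example from Majestic) into domain-level entries; it keeps each hostname exactly as reported rather than guessing at parent domains, and the default comment text is just a placeholder.

```python
from urllib.parse import urlsplit


def build_disavow_file(source_urls, comment="Spam backlinks found in backlink audit"):
    """Return disavow-file text with one domain: line per referring hostname."""
    hostnames = set()
    for url in source_urls:
        # urlsplit lower-cases the hostname; it's None if the URL has no scheme.
        hostname = urlsplit(url.strip()).hostname
        if hostname:
            hostnames.add(hostname)
    lines = [f"# {comment}"] + [f"domain:{hostname}" for hostname in sorted(hostnames)]
    return "\n".join(lines) + "\n"
```

Write the result to a `.txt` file and upload it through Search Console’s disavow links tool.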

If you’re really concerned about indexed content in cases like this, you could also serve a 410 (Gone) status code to make things clear to search crawlers.
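On a WordPress site you’d usually set this up in the web server configuration or with a plugin. Purely to illustrate the idea, here’s a minimal Python WSGI middleware sketch that answers 410 for paths matching the spam URLs and passes everything else through; the `^/torrents?/` pattern in the usage line is hypothetical, so match it to the URLs you actually found.

```python
import re


class GoneMiddleware:
    """WSGI middleware returning 410 Gone for URL paths matching spam patterns."""

    def __init__(self, app, patterns):
        self.app = app
        self.patterns = [re.compile(pattern) for pattern in patterns]

    def __call__(self, environ, start_response):
        path = environ.get("PATH_INFO", "")
        if any(pattern.search(path) for pattern in self.patterns):
            start_response("410 Gone", [("Content-Type", "text/plain; charset=utf-8")])
            return [b"Gone"]
        # Anything else goes to the wrapped application untouched.
        return self.app(environ, start_response)


# Usage sketch: wrap an existing WSGI app with a hypothetical spam URL pattern.
# application = GoneMiddleware(application, [r"^/torrents?/"])
```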

The above would suit those sites that have been served with legal warnings due to copyright claims from film producers – which can sometimes occur in situations like this if the issue isn’t resolved quickly.

6 – Bad International SEO setups

Being based in Spain but browsing the internet in my native English, I often find myself being redirected automatically to a Spanish version of a website.

Whilst I understand the logic (I’m based in Spain therefore I want to browse the site in Spanish), it’s pretty annoying from a user experience perspective, and if not done correctly can also cause a bit of havoc with your international SEO.

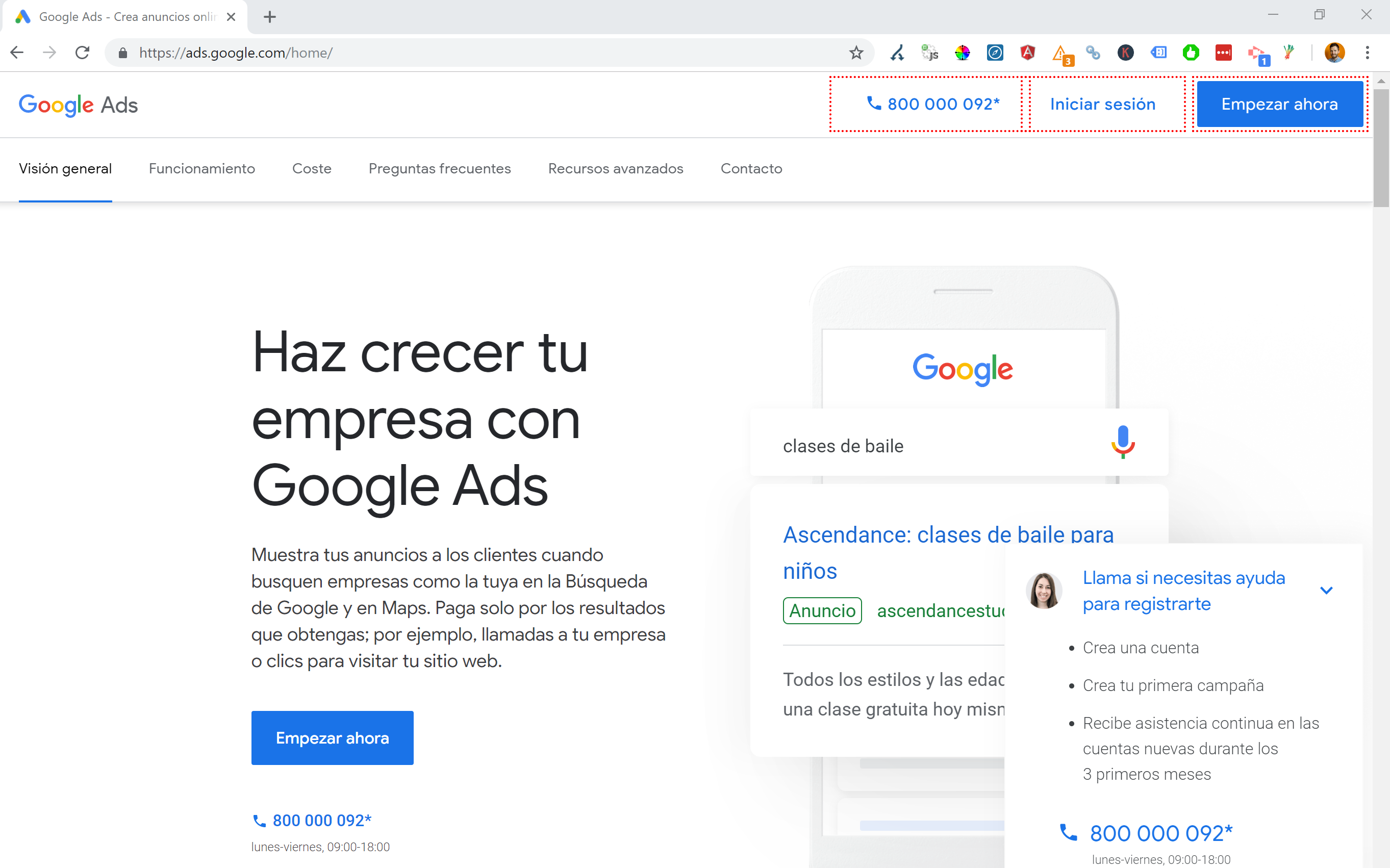

Sites like Google Ads take this to another level, using Angular JavaScript to generate content dynamically based on my location: there’s no page redirect of any kind, and the content is loaded right into the DOM.

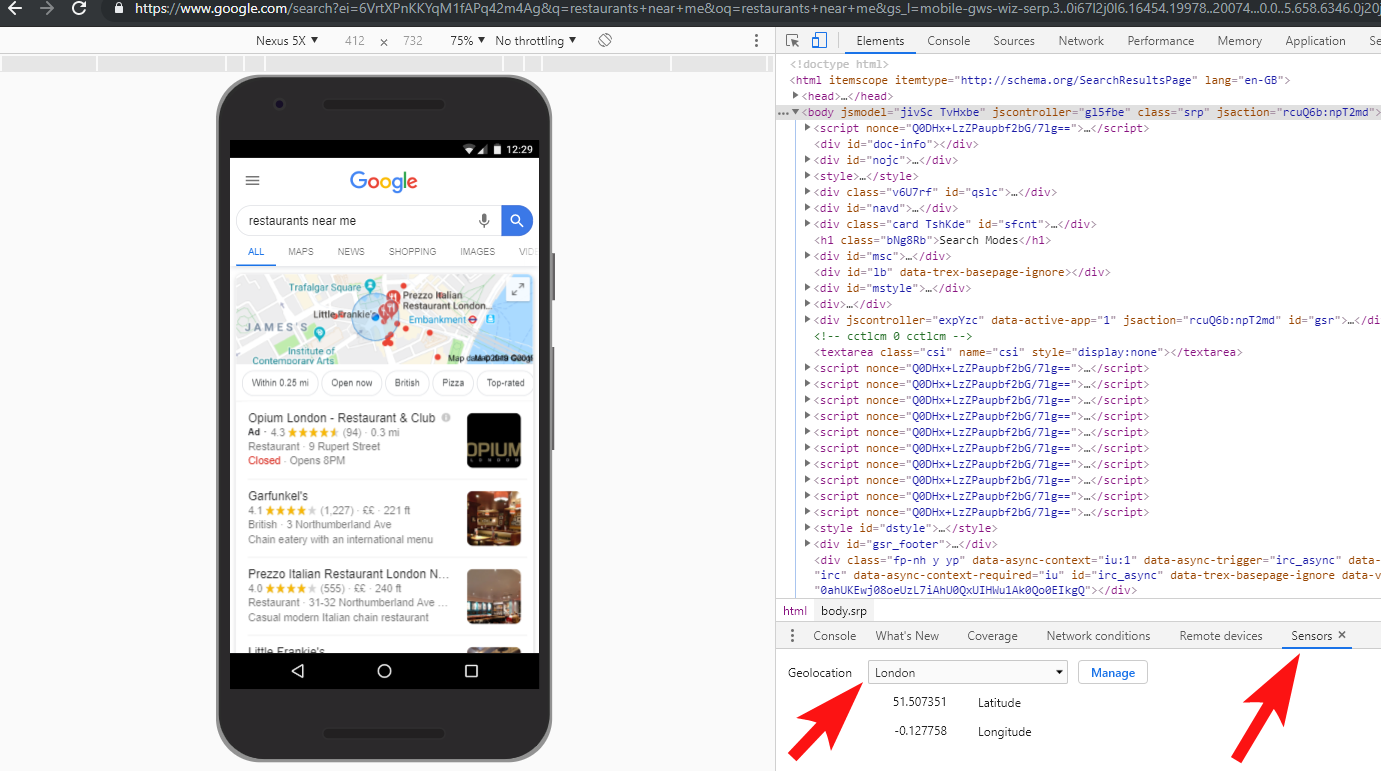

When multiple languages are available, my preferred method is to 302 redirect users to a language based on their browser’s language settings.

Therefore if someone has German as their default language in Google Chrome, they’re likely comfortable reading the site in German regardless of their physical location.

This also helps in regions where several languages are spoken, such as Switzerland, where French, Italian, German and Romansh are all used.

For usability, it’s also key to offer an option to switch languages, in case users prefer a different one.
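Browsers send their language preferences in the `Accept-Language` request header (e.g. `de-CH,de;q=0.9,en;q=0.8`), so that’s what a server-side redirect would read. This is a minimal sketch of picking a language from it, assuming a hypothetical set of supported languages and an English default; the redirect itself would then be a 302 to that language’s URL. Google’s documentation on locale-adaptive pages notes that Googlebot typically crawls without an `Accept-Language` header, so it would get the default, which is one more reason every language version needs its own crawlable URL.

```python
SUPPORTED = {"en", "es", "de", "fr"}  # hypothetical languages the site offers


def parse_accept_language(header):
    """Return language tags from an Accept-Language header, highest q-value first."""
    preferences = []
    for position, part in enumerate(header.split(",")):
        pieces = part.strip().split(";")
        tag = pieces[0].strip().lower()
        if not tag:
            continue
        quality = 1.0
        for param in pieces[1:]:
            name, _, value = param.strip().partition("=")
            if name.strip().lower() == "q":
                try:
                    quality = float(value)
                except ValueError:
                    quality = 0.0
        preferences.append((quality, position, tag))
    # Sort by quality (descending), keeping the header's order for ties.
    preferences.sort(key=lambda item: (-item[0], item[1]))
    return [tag for quality, _, tag in preferences if quality > 0]


def pick_language(header, supported=SUPPORTED, default="en"):
    """Choose the best supported language, falling back to the default."""
    for tag in parse_accept_language(header or ""):
        if tag == "*":
            return default
        primary = tag.split("-")[0]  # "de-ch" -> "de"
        if primary in supported:
            return primary
    return default
```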

In one case I worked with a hotel based in Barcelona, where a JavaScript language redirection script had been added to the site without considering the SEO impact.

This script redirected users based on their browser language setting (which isn’t too bad in itself) via a client-side JavaScript redirect.

Sadly, in this case the script wasn’t set up correctly due to an unusual configuration of the site’s permalinks, and combined with the fact that the HTML lang attribute was missing from every page on the site, Googlebot went a bit nuts…

In this example, nearly all non-English content on the site was de-indexed by Google because it was being redirected to pages that didn’t exist, which returned 404 errors.

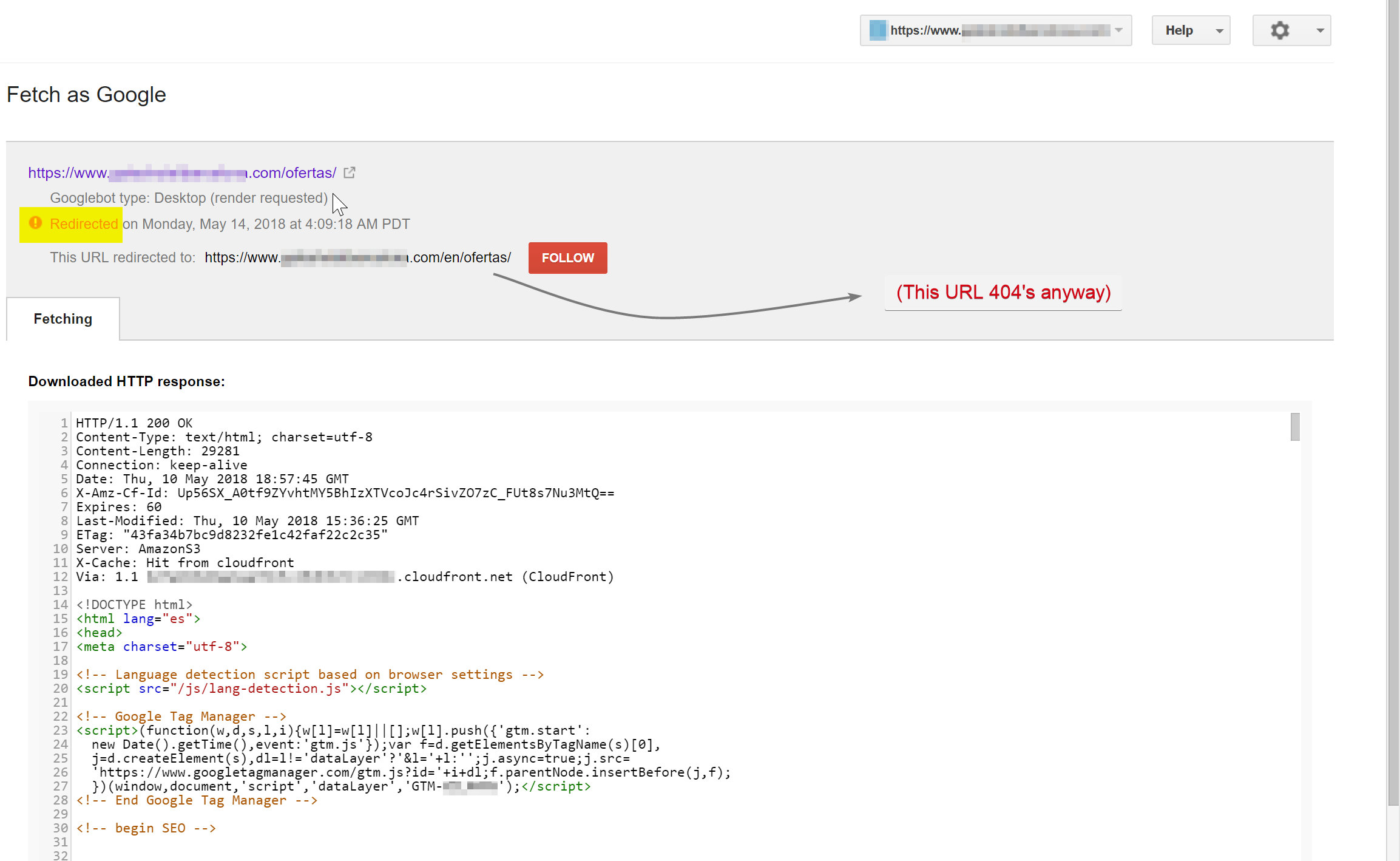

Googlebot was trying to crawl the Spanish content (which existed at hotelname.com/ofertas) and was being redirected to hotelname.com/en/ofertas – a non-existent URL.

Surprisingly, in this case Googlebot was following all of these JavaScript redirects, and as it couldn’t find these URLs, it dropped them from its index.

In this case I was able to confirm it by accessing the site’s server logs, filtering down to Googlebot and checking where it was being served 404s.

Removing the faulty JavaScript redirection script resolved the issue, and luckily the translated pages weren’t de-indexed for long.
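Judging by the URLs, the script seems to have built the English target by prefixing `/en/` to the existing path instead of looking up a page that actually exists. If you do want a language redirect, a safer pattern is to redirect only when a real translation is known. A minimal sketch, assuming a hypothetical hand-maintained translation table (the `/en/offers` path is invented for illustration):

```python
# Hypothetical translation table: each entry groups the same page in every language.
TRANSLATIONS = [
    {"es": "/ofertas", "en": "/en/offers"},
    {"es": "/", "en": "/en/"},
]


def translated_path(path, target_lang, groups=TRANSLATIONS):
    """Look up the explicit translation of path, or None if there isn't one."""
    for group in groups:
        if path in group.values():
            return group.get(target_lang)
    return None


def redirect_target(path, target_lang):
    """Only redirect when a real translated page exists; otherwise stay put."""
    target = translated_path(path, target_lang)
    if target is None or target == path:
        return None
    return target
```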

It’s always a good idea to test things out fully: investing in a VPN can help diagnose these types of scenarios, as can changing your location and/or language in the Chrome browser.
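Since a missing lang attribute was one ingredient in this case, it’s also worth checking for it across your templates. A minimal sketch using Python’s built-in HTML parser, assuming you have each page’s HTML keyed by URL:

```python
from html.parser import HTMLParser


class _HtmlLangFinder(HTMLParser):
    """Records the lang attribute of the first <html> start tag."""

    def __init__(self):
        super().__init__()
        self.lang = None
        self.seen_html = False

    def handle_starttag(self, tag, attrs):
        # HTMLParser lower-cases tag and attribute names for us.
        if tag == "html" and not self.seen_html:
            self.seen_html = True
            self.lang = dict(attrs).get("lang")


def html_lang(page_html):
    """Return the <html lang> value, or None if the attribute is missing."""
    finder = _HtmlLangFinder()
    finder.feed(page_html)
    finder.close()
    return finder.lang


def pages_missing_lang(pages):
    """pages maps URL -> HTML; return URLs whose <html> has no usable lang value."""
    return sorted(url for url, body in pages.items() if not html_lang(body))
```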


7 – Duplicate Content

Duplicate content is a common and well-discussed issue, and there are many ways to check for it on your site – Richard Baxter recently wrote a great piece on the topic.

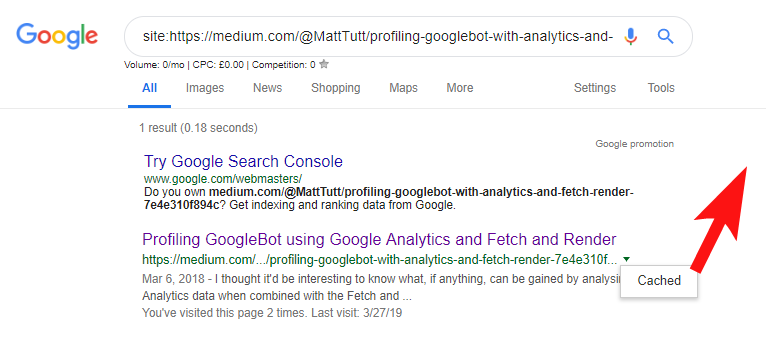

In my case the issue is probably a fair bit simpler. I’ve regularly seen sites publish great content, often as a blog post, and then almost instantly share that same content on a third-party website like Medium.com.

Medium is a great site for repurposing existing content to reach wider audiences, but care should be taken with how this is approached.

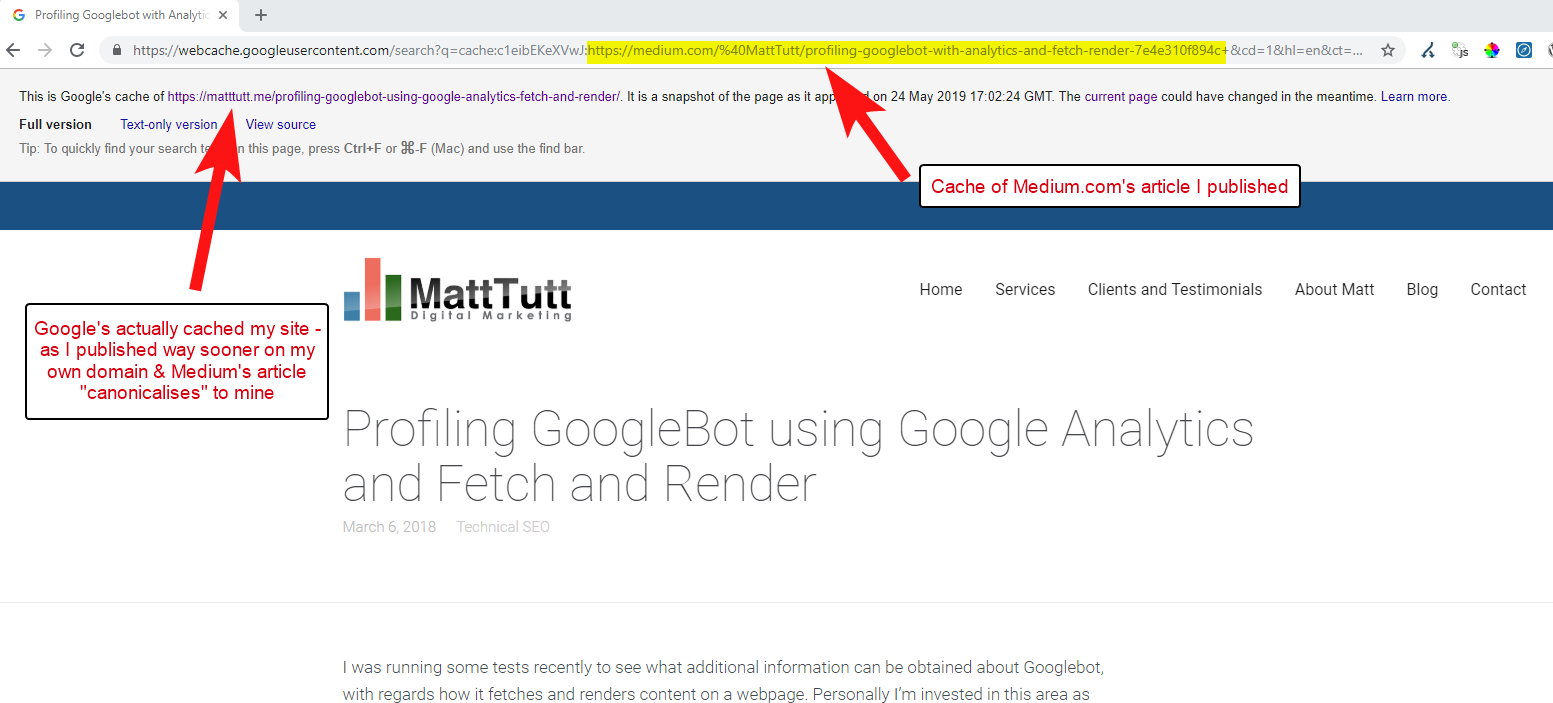

When you import content from WordPress into Medium, Medium uses your website URL as its canonical tag. So in theory this should help give your website the credit for the content as the original source.
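To confirm that a republished copy really does declare your page as canonical, you can check the `rel="canonical"` link in its HTML. A minimal sketch using only Python’s standard library, assuming you’ve already downloaded the republished page’s HTML; `example.com` stands in for your own domain.

```python
from html.parser import HTMLParser
from urllib.parse import urlsplit


class _CanonicalFinder(HTMLParser):
    """Records the href of the first <link rel="canonical">."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        rels = (attributes.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rels and self.canonical is None:
            self.canonical = attributes.get("href")


def canonical_of(page_html):
    finder = _CanonicalFinder()
    finder.feed(page_html)
    finder.close()
    return finder.canonical


def canonical_points_to(page_html, your_domain):
    """True if the page's canonical URL is on your domain (www or not)."""
    canonical = canonical_of(page_html)
    if not canonical:
        return False
    host = (urlsplit(canonical).hostname or "").removeprefix("www.")
    return host == your_domain.lower().removeprefix("www.")
```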

From some of my analysis though it doesn’t always work like this.

I believe this happens when an article is published on Medium before Google has had time to crawl and index it on your domain. If the article goes down well on Medium (which is a bit hit or miss), your content gets indexed and associated with Medium’s site, despite their canonical pointing to yours.

Once content is added to Medium (particularly if it’s popular), you can pretty much guarantee the piece will be scraped and republished elsewhere on the web almost instantly, so again your content is being duplicated.

Meanwhile, if your domain is quite small in terms of authority, chances are Google may not even have had the chance to crawl and index the content you published. The rendering stage of the crawl and index process may not have been completed yet, or heavy JavaScript may be causing a big lag between crawling, rendering and indexing that content.

I’ve seen situations where a big company publishes a great article, then the next day publishes it as a thought piece on a massive industry news blog. On top of this, their site had an issue where content was duplicated (and indexed) at both https://domain.com and https://www.domain.com.

A few days after publishing, when searching for an exact phrase of the article in quotes within Google, the company website was nowhere to be seen. Instead, the authoritative industry blog was in first place, and other re-publishers were taking up the next positions.

In that case the content has become associated with the industry blog, so any links the piece gains will benefit that website, not the original publisher.

If you’re going to republish content anywhere on the web where it’s likely to get indexed, you should wait until you’re completely sure Google has indexed it on your own domain.

You probably work hard to create and craft your content – don’t throw that all away by being too keen to re-publish elsewhere!

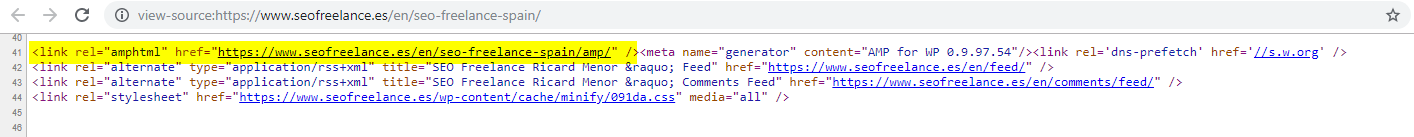

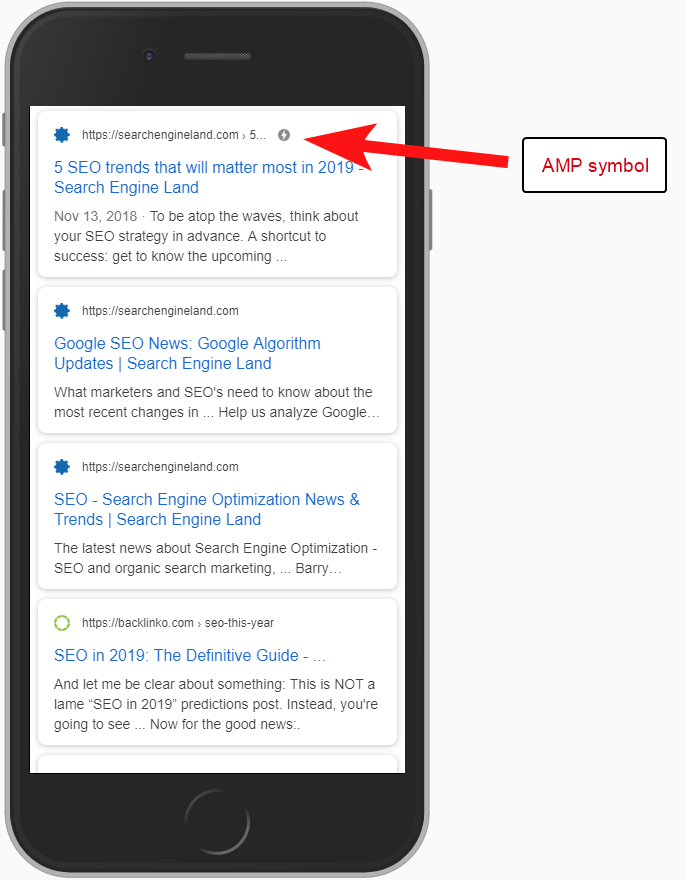

8 – Bad AMP Configuration (missing AMP URL declaration)

Only a handful of the clients I’ve assisted have opted to give AMP a go, perhaps prompted by some of the many Google-funded case studies around its use.

Sometimes I wasn’t even aware that a client had an AMP version of their site until I noticed strange traffic in the Analytics referral reports, where the AMP version of the site was linking back to the non-AMP version.

In that case the AMP pages weren’t configured correctly, as there was no reference to the AMP URL (a rel="amphtml" link) in the head of the non-AMP pages.

Without telling search engines that an AMP page exists at a particular URL, there’s not much point in having AMP set up at all: the point is for it to be indexed and returned in the SERPs for mobile users.

Adding this reference to your non-AMP page is how you tell Google about the AMP page. It’s also important to remember that, when there’s a separate non-AMP version, the canonical tag on the AMP page shouldn’t be self-referencing: it should point back to the non-AMP page.
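Both halves of the pairing can be checked mechanically: the non-AMP page should carry a `link rel="amphtml"` pointing at the AMP URL, and the AMP page’s `rel="canonical"` should point back. A minimal sketch, assuming you’ve fetched both pages’ HTML and that the links use absolute URLs (it compares strings exactly and doesn’t resolve relative hrefs).

```python
from html.parser import HTMLParser


class _LinkRelCollector(HTMLParser):
    """Maps each <link rel> value to the first href seen for it."""

    def __init__(self):
        super().__init__()
        self.links = {}

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attributes = dict(attrs)
        for rel in (attributes.get("rel") or "").lower().split():
            self.links.setdefault(rel, attributes.get("href"))


def link_rels(page_html):
    collector = _LinkRelCollector()
    collector.feed(page_html)
    collector.close()
    return collector.links


def amp_pairing_problems(canonical_url, canonical_html, amp_url, amp_html):
    """Return a list of problems with the AMP / non-AMP pairing (empty if fine)."""
    problems = []
    if link_rels(canonical_html).get("amphtml") != amp_url:
        problems.append("non-AMP page has no rel=amphtml link to the AMP URL")
    if link_rels(amp_html).get("canonical") != canonical_url:
        problems.append("AMP page's canonical doesn't point back to the non-AMP URL")
    return problems
```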

And although not really a technical SEO consideration, it’s worth noting that you still need to include tracking code on AMP pages if you want to be able to report on any traffic and user behaviour information.

As part of my SEO audits I also like to run some basic checks of the analytics implementation; otherwise the data you’ve been given may not be all that helpful, especially if the analytics setup has been bodged.

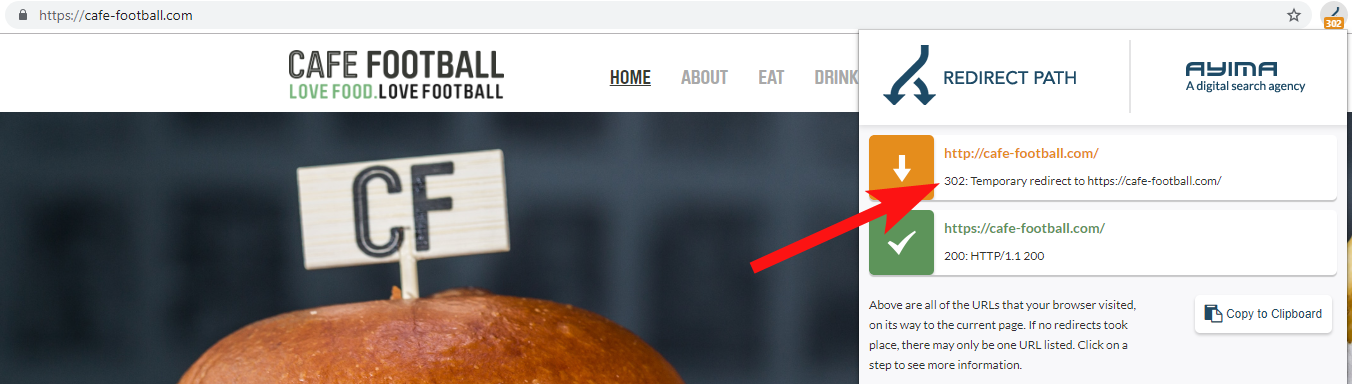

9 – Legacy Domains that 302 redirect or form a chain of redirects

When I worked with a large independent hotel brand in the US that had undergone several rebrands over the past few years (quite common in the hospitality industry), it was important to monitor how requests to previous domain names behaved.

This is easy to forget, but it can be a simple semi-regular check: try crawling their old site with a tool like Oncrawl, or even use a third-party site that checks status codes and redirects.

More often than not you’ll find the old domain 302 redirects to the final destination (a 301 is always the best bet here), or it 302s to a non-WWW version of the URL and then jumps through several more redirects before reaching the final URL.

John Mueller of Google has stated that Googlebot only follows 5 redirects before giving up, and it’s also known that some link value is lost with every redirect passed. For those reasons I prefer to stick to 301 redirects that are as clean as possible.
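A short script can do the same job as the third-party checkers for a list of legacy domains: follow redirects one hop at a time, record each status, and flag temporary redirects, chains and non-200 endpoints. This is a minimal sketch with Python’s standard library, assuming the servers answer HEAD requests sensibly. The five-hop threshold comes from the Mueller comment above, and DNS failures (like the unresolving hostname in section 2) surface as exceptions from `fetch_once`.

```python
import http.client
from urllib.parse import urljoin, urlsplit

PERMANENT = {301, 308}
TEMPORARY = {302, 303, 307}
REDIRECTS = PERMANENT | TEMPORARY


def fetch_once(url, timeout=10):
    """Request url without following redirects; return (status, Location header)."""
    parts = urlsplit(url)
    if parts.scheme == "https":
        connection = http.client.HTTPSConnection(parts.netloc, timeout=timeout)
    else:
        connection = http.client.HTTPConnection(parts.netloc, timeout=timeout)
    target = (parts.path or "/") + (f"?{parts.query}" if parts.query else "")
    try:
        # HEAD keeps requests light; switch to GET if a server mishandles HEAD.
        connection.request("HEAD", target)
        response = connection.getresponse()
        return response.status, response.getheader("Location")
    finally:
        connection.close()


def trace_redirects(url, max_hops=10):
    """Follow redirects one hop at a time; return [(url, status), ...]."""
    hops = []
    for _ in range(max_hops + 1):
        status, location = fetch_once(url)
        hops.append((url, status))
        if status not in REDIRECTS or not location:
            break
        url = urljoin(url, location)  # Location may be relative
    return hops


def audit_chain(hops, hop_limit=5):
    """Flag temporary redirects, chains, overly long chains and bad endpoints."""
    statuses = [status for _, status in hops]
    redirects = [status for status in statuses if status in REDIRECTS]
    problems = []
    if any(status in TEMPORARY for status in redirects):
        problems.append("temporary (302-style) redirect in chain")
    if len(redirects) > 1:
        problems.append(f"chain of {len(redirects)} redirects")
    if len(redirects) > hop_limit:
        problems.append(f"more than {hop_limit} redirects")
    if statuses and statuses[-1] != 200:
        problems.append(f"final status {statuses[-1]}")
    return problems


# Usage (network): audit_chain(trace_redirects("http://old-brand.example/"))
```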

Redirect Path by Ayima is a great Chrome browser extension that will show you redirect statuses as you’re browsing the web.

Another way I’ve found old domain names belonging to a client is by searching Google for their phone number or parts of their address, using exact-match quotes.

A business like a hotel doesn’t often change its address (or at least not all of it), and you may find old directories or business profiles that link to an old domain.

Using a backlink tool like Majestic or Ahrefs might also show up some old links from previous domains, so this is a good port of call too – especially if you’re not in contact with the client directly.

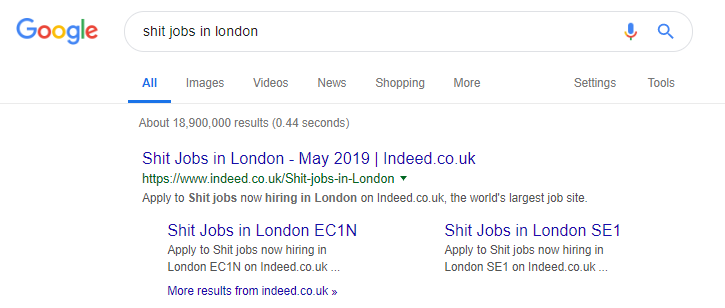

10 – Dealing with Internal Search Content Badly

This is a topic I’ve written about before here at Oncrawl, but I’m including it again because I still very often see problematic internal search content “in the wild”.

I began this piece talking about Pingdom’s robots.txt directive issue, which from the outside looked like an attempt to stop content they were outputting from being crawled and indexed.

Any site which serves internal search results to Google as content, or which outputs lots of user generated content, needs to be very careful about the way in which they do so.

If a site serves internal search results to Google very directly, this may lead to a manual penalty of some kind. Google would likely see it as a bad user experience: users search for X, then land on a site where they have to filter manually for what they want.
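Where internal search pages shouldn’t be indexed, one option (Pingdom went the robots.txt route instead) is to send a `noindex` directive, for example via the `X-Robots-Tag` response header. A minimal sketch of the decision logic; the parameter names and `/search/` path are hypothetical, so swap in whatever your platform actually generates. Google can only see a `noindex` on pages it’s allowed to crawl, so don’t also block these URLs in robots.txt.

```python
from urllib.parse import parse_qs, urlsplit

# Hypothetical markers of internal search URLs. WordPress uses "?s=", but check
# what your own platform actually generates before relying on these.
SEARCH_PARAMS = {"s", "q", "search"}
SEARCH_PATH_PREFIXES = ("/search/",)


def is_internal_search(url):
    parts = urlsplit(url)
    if parts.path.startswith(SEARCH_PATH_PREFIXES):
        return True
    query = parse_qs(parts.query, keep_blank_values=True)
    return bool(SEARCH_PARAMS.intersection(query))


def x_robots_tag_for(url):
    """Return the X-Robots-Tag value to send, or None to leave the page indexable."""
    return "noindex" if is_internal_search(url) else None
```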

In some cases I believe it can be fine to serve internal search content; it just depends on the context and circumstances. A job site, for example, may want to serve the latest job results, which update nearly daily, so it almost has to deal with this.

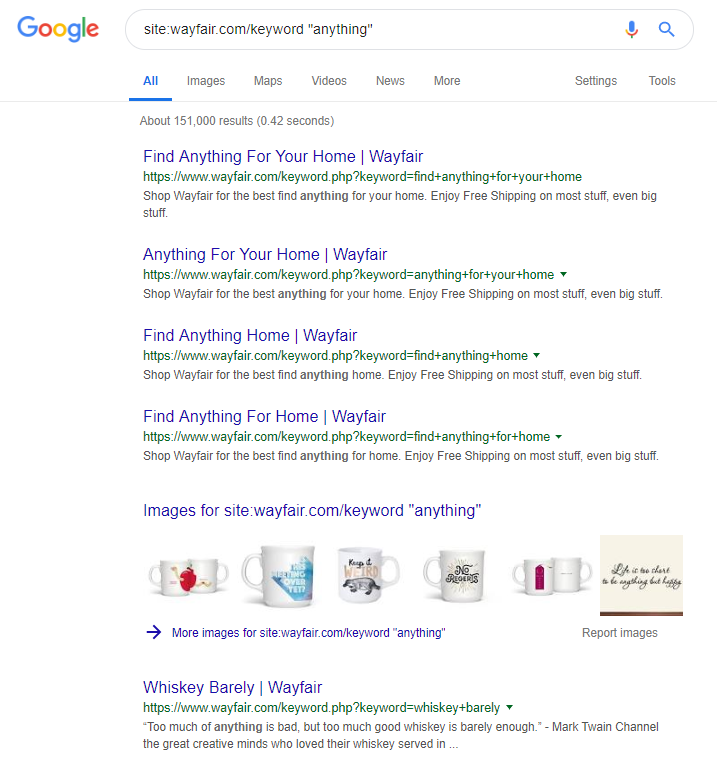

Indeed is a famous example of a job site that perhaps takes this too far, generating all kinds of content based on popular search queries (just see below for what can happen if you use this tactic).

Despite this, according to SEMrush data their organic traffic is doing great, but these are fine lines, and behaving like this puts you at high risk of a Google penalty.

Online retailer Wayfair.com is another brand that likes to sail close to the wind. With millions of indexed URLs (and plenty of auto-generated keyword URLs), they’re doing great in terms of organic traffic, but they’re at high risk of being penalised for serving content to search engines in this manner.

By implementing a proper site structure (categorising all content, building out parent/child hierarchies, and even using tags or other custom taxonomies), you can help both customers and search crawlers navigate your site.

Using tricks like the above might win in the short term but it’s unlikely to do much for you long term. This makes it key to get site structure right from the beginning, or to at least plan it properly in advance.

Wrapping up

The 10 errors discussed in this article are some of the most common technical problems I encounter during site audits.

Correcting these errors on your site is a first step to making sure your site is technically healthy. Once these issues are corrected, technical audits can concentrate on issues that are specific to your site.
