When I talk to people about what I do and what SEO is, they usually get it fairly quickly, or they act as if they do: a good website structure, good content, good backlinks that endorse the site. But sometimes it gets a bit more technical, I end up talking about search engines crawling your website, and I usually lose them…

Why crawl a website?

Web crawling started as a way to map the internet and how websites were connected to one another. Search engines also used it to discover and index new pages. Web crawlers were also used to test a website’s vulnerabilities by probing it and analyzing whether any issue was spotted.

Now you can find tools that crawl your website in order to provide you with insights. For example, Oncrawl provides data about your content and on-site SEO, and Majestic provides insights about all the links pointing to a page.

Crawlers are used to collect information which can then be used and processed to classify documents and provide insights about the data collected.

Building a crawler is within reach of anyone who knows a bit of code. Making an efficient crawler, however, is more difficult and takes time.

How does it work?

In order to crawl a website or the web, you first need an entry point. Robots need to know that your website exists so they can come and have a look at it. Back in the day, you would have submitted your website to search engines to tell them it was online. Now you can simply build a few links to your website and voilà, you are in the loop!

Once a crawler lands on your website, it analyses all your content line by line and follows each of your links, whether internal or external. It carries on until it lands on a page with no more links or encounters an error such as 404, 403, 500 or 503.

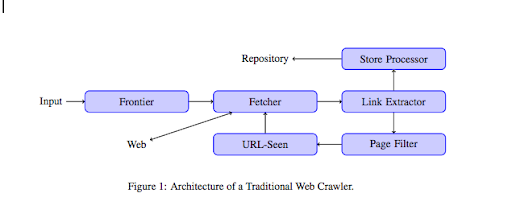

From a more technical point of view, a crawler works with a seed (or list) of URLs. This is passed on to a Fetcher, which retrieves the content of a page. This content is then passed to a Link extractor, which parses the HTML and extracts all the links. These links are sent to a Store processor which, as its name says, stores them. They also go through a Page filter, which sends all interesting links to a URL-seen module. This module detects whether the URL has already been seen. If not, the URL is sent to the Fetcher, which retrieves the content of the page, and so on.
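The loop described above can be sketched in a few lines of Python using only the standard library. This is a minimal illustration, not how any particular search engine works: the names `LinkExtractor`, `fetch` and `crawl`, the `max_pages` limit and the "stay on the seed hosts" page filter are all assumptions made for the example.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlparse
from urllib.request import urlopen


class LinkExtractor(HTMLParser):
    """Link extractor: collects the href of every <a> tag on a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def fetch(url):
    """Fetcher: download a page and return its HTML as text."""
    with urlopen(url, timeout=10) as response:
        return response.read().decode("utf-8", errors="replace")


def crawl(seeds, fetcher=fetch, max_pages=50):
    """Breadth-first crawl that stays on the hosts of the seed URLs."""
    seeds = list(seeds)
    allowed_hosts = {urlparse(seed).netloc for seed in seeds}
    frontier = deque(seeds)  # URLs waiting for the fetcher
    seen = set(seeds)        # URL-seen module
    store = {}               # store processor: page URL -> links found on it

    while frontier and len(store) < max_pages:
        url = frontier.popleft()
        try:
            html = fetcher(url)
        except OSError:
            # Network failures and HTTP errors (404, 500...) end this branch
            continue
        extractor = LinkExtractor()
        extractor.feed(html)
        # Resolve relative links against the page URL and drop #fragments
        links = [urldefrag(urljoin(url, href)).url for href in extractor.links]
        store[url] = links
        for link in links:
            # Page filter (assumed rule): only follow links on the seed hosts
            if urlparse(link).netloc in allowed_hosts and link not in seen:
                seen.add(link)
                frontier.append(link)
    return store
```

Calling `crawl(["https://example.com/"])` (a placeholder URL) would return a dictionary mapping each fetched page to the links found on it. The `fetcher` parameter is there so the network call can be swapped for a stub when experimenting.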

Keep in mind that some content, such as Flash, is impossible for spiders to crawl. JavaScript is now usually crawled properly by Googlebot, but now and then it doesn’t crawl any of it. Images aren’t content Google can technically crawl, but it has become smart enough to start understanding them!

Unless robots are told otherwise, they will crawl everything. This is where the robots.txt file becomes very useful. It tells crawlers which pages they cannot crawl, and its rules can be specific to one crawler, e.g. Googlebot or MSN Bot (find out more about bots here). Let’s say, for example, that you have faceted navigation: you might not want robots to crawl every facet, as they add little value and use up crawl budget. These simple lines prevent any robot from crawling a folder:

User-agent: *
Disallow: /folder-a/

This tells all the robots not to crawl folder A.

User-agent: GoogleBot
Disallow: /repertoire-b/

This, on the other hand, tells only Googlebot not to crawl folder B.
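The two rule groups above can be tried out with Python’s standard `urllib.robotparser` module. This is only a sketch: `ROBOTS_TXT`, the helper `allowed` and the bot name `SomeBot` are made up for the example, and each group is written without a blank line between `User-agent` and `Disallow`, because this parser treats a blank line as the end of a group.

```python
from urllib.robotparser import RobotFileParser

# The two groups from the article, combined into one file
ROBOTS_TXT = """\
User-agent: *
Disallow: /folder-a/

User-agent: GoogleBot
Disallow: /repertoire-b/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def allowed(user_agent, path):
    """Return True if this user agent may crawl the given path."""
    return parser.can_fetch(user_agent, path)


# Any robot without its own group falls back to the "*" rules
print(allowed("SomeBot", "/folder-a/page.html"))        # False
print(allowed("SomeBot", "/repertoire-b/page.html"))    # True

# Googlebot matches its own group
print(allowed("Googlebot", "/repertoire-b/page.html"))  # False
```

One detail worth knowing when both groups live in the same file: with this parser, a crawler that has its own group follows only that group, so `allowed("Googlebot", "/folder-a/page.html")` returns `True`. If Googlebot should stay out of folder A as well, the rule has to be repeated in its group.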

You can also tell robots, directly in the HTML, not to follow a specific link by adding the rel="nofollow" attribute to it. Some tests have shown that even with rel="nofollow" on a link, Googlebot may still follow it. This contradicts its purpose, but the attribute remains useful in other cases.
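From the crawler’s side, honoring rel="nofollow" simply means sorting links into two piles while parsing the page. The sketch below shows one way a well-behaved crawler could do it; the class and function names are hypothetical, and it says nothing about what Googlebot actually does.

```python
from html.parser import HTMLParser


class FollowAwareLinkExtractor(HTMLParser):
    """Separates links a polite crawler may follow from rel="nofollow" ones."""

    def __init__(self):
        super().__init__()
        self.follow = []
        self.nofollow = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        href = attributes.get("href")
        if not href:
            return
        # rel can hold several space-separated values, e.g. "nofollow noopener"
        rel_values = (attributes.get("rel") or "").lower().split()
        if "nofollow" in rel_values:
            self.nofollow.append(href)
        else:
            self.follow.append(href)


def split_links(html):
    """Return (links to follow, links marked nofollow) for an HTML string."""
    extractor = FollowAwareLinkExtractor()
    extractor.feed(html)
    return extractor.follow, extractor.nofollow
```

For example, `split_links('<a href="/a">A</a> <a rel="nofollow" href="/b">B</a>')` returns `(["/a"], ["/b"])`.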


You mentioned crawl budget but what is it?

Let’s say you have a website which has been discovered by search engines. They regularly come back to see if you have updated your website or created new pages.

Each website has its own crawl budget, which depends on several factors such as the number of pages your website has and its health (whether it has a lot of errors, for example). You can get a quick idea of your crawl budget by logging in to Search Console.

Your crawl budget determines the number of pages a robot crawls on your website each time it visits. It is proportional to the number of pages on your website and the number it has already crawled. Some pages get crawled more often than others, especially if they are updated regularly or if they are linked from important pages.

For example, your home page is your main entry point and will get crawled very often. If you have a blog or a category page, it will be crawled often if it is linked from the main navigation. A blog will also be crawled often because it gets updated regularly. A blog post might get crawled often when it is first published, but after a few months it probably won’t be updated anymore, so it will be crawled less often.

The more often a page gets crawled, the more important a robot considers it compared to other pages. This is when you need to start working on optimizing your crawl budget.

Optimizing your crawl budget

In order to optimize your budget and make sure your most important pages get the attention they deserve, you can analyse your server logs and look at how your website is being crawled:

- How frequently are your top pages being crawled?

- Can you see any less important pages being crawled more often than more important ones?

- Do robots often get a 4xx or 5xx error when crawling your website?

- Do robots encounter any spider traps? (Matthew Henry wrote a great article about them)

By analyzing your logs, you will see which of the pages you consider less important are being crawled a lot. You then need to dig deeper into your internal link structure: if a page is being crawled that often, it must have a lot of links pointing to it.

You can also work on fixing all these errors (4xx and 5xx) with Oncrawl. It will improve crawlability as well as user experience, so it’s a win-win.
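The questions above can be answered with a short script over the access log. The sketch below assumes the common "combined" log format used by default on many Apache and Nginx setups, and it identifies a bot by looking for a token in the user-agent string; the function name `summarize_bot_hits` and the `bot_token` parameter are made up for the example. Keep in mind that the user agent is self-declared, so this is a first approximation only.

```python
import re
from collections import Counter

# Assumed format: ... "GET /path HTTP/1.1" 200 1234 "referer" "user agent"
LOG_LINE = re.compile(
    r'"(?:GET|HEAD|POST) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)


def summarize_bot_hits(lines, bot_token="Googlebot"):
    """Count hits and 4xx/5xx errors per path for one bot."""
    hits_per_path = Counter()
    errors_per_path = Counter()
    for line in lines:
        match = LOG_LINE.search(line)
        # Skip lines that do not parse or that come from other visitors
        if not match or bot_token.lower() not in match["agent"].lower():
            continue
        hits_per_path[match["path"]] += 1
        if match["status"][0] in "45":
            errors_per_path[match["path"]] += 1
    return hits_per_path, errors_per_path
```

Passing an open log file to `summarize_bot_hits` and then calling `hits_per_path.most_common(20)` gives the pages the bot visits most, which you can compare with the pages you consider most important.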


Crawling vs. scraping?

Crawling and scraping are two different things which are used for different purposes. Crawling a website is landing on a page and following the links you find when you scan the content. A crawler will then move to another page and so on.

Scraping, on the other hand, is scanning a page and collecting specific data from it: the title tag, the meta description, the h1 tag or a specific area of your website such as a list of prices. Scrapers usually act as “humans”: they ignore any rules from the robots.txt file, fill in forms and use a browser user-agent in order not to be detected.

Search engine crawlers usually act as scrapers as well, since they need to collect data to feed their ranking algorithm. Unlike scrapers, they don’t look for specific data: they use all the data available on the page and even more (loading time, for example, is something you can’t get from the page content itself). Search engine crawlers always identify themselves as crawlers, so website owners can know when they last visited. This can be very helpful when you track real user activity.

So now you know a bit more about crawling, how it works and why it is important. The next step is to start analysing your server logs. This will give you deep insights into how robots interact with your website, which pages they visit often and how many errors they encounter along the way.

For more technical and historical information about web crawlers, you can read “A Brief History of Web Crawlers”.
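To make the difference concrete, here is a minimal sketch of the extraction step of a scraper, using Python’s standard `html.parser`. It follows no links; it only pulls the title tag, the meta description and the first h1 out of one page. The names `PageScraper` and `scrape` are made up for the example.

```python
from html.parser import HTMLParser


class PageScraper(HTMLParser):
    """Collects the <title>, the meta description and the first <h1>."""

    def __init__(self):
        super().__init__()
        self.data = {"title": None, "meta_description": None, "h1": None}
        self._current = None  # field whose text is currently being read

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() == "description":
            self.data["meta_description"] = attributes.get("content")
        elif tag in ("title", "h1") and self.data[tag] is None:
            # Only the first occurrence of each tag is kept
            self._current = tag
            self.data[tag] = ""

    def handle_data(self, data):
        if self._current:
            self.data[self._current] += data

    def handle_endtag(self, tag):
        if tag == self._current:
            self.data[tag] = self.data[tag].strip()
            self._current = None


def scrape(html):
    """Return the scraped fields of one HTML document as a dictionary."""
    scraper = PageScraper()
    scraper.feed(html)
    scraper.close()
    return scraper.data
```

A price list or any other specific area would be collected the same way, by watching for the tag or attribute that marks it on the site in question.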

