If you’re doing technical SEO but not running the website you’re responsible for, the web development team is essential to your success. But developers and SEOs in growth and marketing don’t always see eye to eye.

It’s been said that one of the top skills SEOs need today is the ability to communicate and to reconcile different points of view. Not an event goes by without highly technical SEOs mentioning how to talk to developers.

But beyond how to talk to developers, you also need to know what to say. If your dev team has never worked with SEO, here are some of the essential things to make sure they know, without condescending to explain what <title> tags are. After all, developers are far from web newbies.

Get the hang of the basics

Most SEOs expect website developers to have a basic grasp of the site elements that play a major role in SEO, and how they affect SEO performance:

- XML sitemaps

- Robots.txt

- Template requirements such as the placement of analytics tracking codes, the use of headings (<h1>…), schema.org markup, or semantic HTML

- Page declarations such as <link rel="canonical">

- Elements traditionally used in building a search result (<title>, <meta name="description" content="lorem ipsum…">, URLs)

- 301 redirects

- Page speed

- HTTPS, and site migrations if your site is still using HTTP

- Page importance and link-based site structure

- Server robustness and security

- Server log monitoring for SEO purposes
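
Several of the elements in this list can be checked automatically on any page template. The following is a minimal sketch, assuming Python’s standard `html.parser` module; the `HeadAudit` class, the `audit_head` function and the sample page are hypothetical names invented for illustration, not part of any SEO tool.

```python
from html.parser import HTMLParser


class HeadAudit(HTMLParser):
    """Collect the <title>, meta description and canonical URL of a page."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.description = None
        self.canonical = None
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.description = attrs.get("content")
        elif tag == "link" and "canonical" in (attrs.get("rel") or "").lower().split():
            self.canonical = attrs.get("href")

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        # Title text can arrive in several chunks, so accumulate it.
        if self._in_title:
            self.title += data


def audit_head(html):
    """Return the search-result elements found in an HTML document."""
    parser = HeadAudit()
    parser.feed(html)
    parser.close()
    return {
        "title": parser.title.strip(),
        "description": parser.description,
        "canonical": parser.canonical,
    }


# Hypothetical sample page used only to exercise the sketch.
SAMPLE_HTML = """<html><head>
<title>Blue widgets | Example Shop</title>
<meta name="description" content="Hand-made blue widgets.">
<link rel="canonical" href="https://www.example.com/widgets/blue">
</head><body><h1>Blue widgets</h1></body></html>"""

report = audit_head(SAMPLE_HTML)
```

A check like this could run in a template’s test suite, so that a missing title or canonical declaration is caught before an SEO has to ask for a fix.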

If you need a refresher course for yourself or someone else, guides for SEOs are often more detailed and more complete than guides written by SEOs for developers, making them more helpful. A good place to start is always Moz’s Beginner’s Guide to SEO, or Google’s SEO Starter Guide and their Search Console help in general.


SEO only works when search engines can crawl and render URLs

Appearing in search engine results means that the search engine was able to discover, crawl, render and parse key pages on a website. When there are technical reasons that this doesn’t happen, the entire digital marketing chain breaks down.

Bots need access to websites

Google uses different User-Agents to crawl a website. These must have access not only to pages, but also to resources (images and other media), JavaScript, and other elements required to render the content on a URL.
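
One way to see which of Google’s crawlers actually reach the site is to look at the server logs. The sketch below assumes logs in the common "combined" format and matches on the User-Agent string only; the function name and the sample lines are hypothetical. A User-Agent can be spoofed, so a real audit would also verify the requesting IP address, for example with a reverse DNS lookup.

```python
import re
from collections import Counter

# Matches the request, status and User-Agent fields of a combined-format log line.
LOG_LINE = re.compile(
    r'"(?P<method>[A-Z]+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_status_counts(lines):
    """Count HTTP status codes for requests whose User-Agent mentions Googlebot."""
    counts = Counter()
    for line in lines:
        match = LOG_LINE.search(line)
        if match and "googlebot" in match.group("agent").lower():
            counts[match.group("status")] += 1
    return counts


# Hypothetical log lines, invented for illustration.
SAMPLE_LOG = [
    '66.249.66.1 - - [10/Oct/2019:13:55:36 +0000] "GET /widgets/blue HTTP/1.1" 200 5120 '
    '"-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '66.249.66.1 - - [10/Oct/2019:13:55:40 +0000] "GET /old-page HTTP/1.1" 404 310 '
    '"-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '198.51.100.4 - - [10/Oct/2019:13:56:02 +0000] "GET / HTTP/1.1" 200 7200 '
    '"-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]

status_counts = googlebot_status_counts(SAMPLE_LOG)
```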

At the same time, crawling is prioritized: we sometimes want to promote one set of pages over another by discouraging crawling of the latter in favor of the former. Which pages fall into which category can change with the seasons, in the lead-up to big events, or even after changes to the site or to Google’s algorithms.

Many SEO tools also require access to crawl or scrape parts of a website in order to analyze performance or prepare batch corrections.

If SEOs don’t have access to the means of filtering bot access (robots.txt, .htaccess, HTTP headers…), they’re going to pass requests on to the dev team.
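
Before a robots.txt change is requested or deployed, its effect on a given bot can be checked offline. This is a minimal sketch using Python’s standard `urllib.robotparser`; the rules, URLs and the `crawlable` helper are hypothetical. Python’s parser applies the first matching rule and does not support wildcards, so it only approximates how Google interprets the file.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: block cart and internal search pages for every bot,
# and a private image folder for Google's image crawler.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /search

User-agent: Googlebot-Image
Disallow: /private-images/
"""


def crawlable(robots_txt, user_agent, urls):
    """Map each URL to True if the given bot may fetch it under these rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {url: parser.can_fetch(user_agent, url) for url in urls}


result = crawlable(
    ROBOTS_TXT,
    "Googlebot",
    ["https://www.example.com/cart/checkout", "https://www.example.com/widgets/blue"],
)
```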

Staging websites and going live

Staging websites need to be approved for SEO purposes, yet still must not be indexed by Google and other search engines. An SEO team might need to allow certain bots to access the site in order to run the checks that let them give the site a go/no-go from an SEO perspective. It’s reasonable to ask the SEO team to provide a User-Agent and IP address for the bots they need to authorize, as well as any information they have about the security protocols their SEO tools can or can’t support.
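
One possible shape for such a rule is sketched below: a staging server lets a request through only when both the User-Agent and the IP address match what the SEO team supplied, and it sends an `X-Robots-Tag: noindex` header so that any page that does get fetched stays out of the index. The crawler name and the IP range are placeholders, and the function names are hypothetical.

```python
import ipaddress

# Placeholder values: the SEO team would supply the real ones.
ALLOWED_AGENT_TOKENS = ("ExampleSEOCrawler",)
ALLOWED_NETWORKS = (ipaddress.ip_network("203.0.113.0/24"),)


def staging_allows(user_agent, remote_ip):
    """Allow a bot on staging only if its User-Agent and IP are both approved."""
    ip = ipaddress.ip_address(remote_ip)
    agent_ok = any(token.lower() in user_agent.lower() for token in ALLOWED_AGENT_TOKENS)
    ip_ok = any(ip in network for network in ALLOWED_NETWORKS)
    return agent_ok and ip_ok


def staging_headers():
    """Headers added to every staging response to keep pages out of the index."""
    return {"X-Robots-Tag": "noindex, nofollow"}
```

Checking both values matters because a User-Agent string alone is trivial to fake.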

When taking a website live, keep SEO on the checklist. If bots have been forbidden to crawl the site, those rules need to be removed as a part of the process; no SEO wants to see

User-Agent: *
Disallow: /

as the only content in a new site’s robots.txt file.
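
A go-live checklist can include an automated guard against this. The following sketch, again based on Python’s standard `urllib.robotparser`, reports whether a robots.txt file blocks the site root for a given bot; `blocks_entire_site` is a hypothetical helper, and the parser is an approximation of Google’s own behavior rather than a copy of it.

```python
from urllib.robotparser import RobotFileParser


def blocks_entire_site(robots_txt, user_agent="Googlebot"):
    """Return True if the rules forbid the given bot from fetching the site root."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, "/")


# The staging rule that should never reach production.
STAGING_RULES = "User-agent: *\nDisallow: /\n"
# An empty Disallow value allows everything.
LIVE_RULES = "User-agent: *\nDisallow:\n"

staging_blocked = blocks_entire_site(STAGING_RULES)
live_blocked = blocks_entire_site(LIVE_RULES)
```

A deployment script could refuse to continue when the production file returns True.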

The choice of technology matters

Technical SEOs should be conversant with how websites are built. Someone from the SEO team should be able to sit in on discussions on servers, CDNs, choice of a CMS, JavaScript frameworks…

Until a few months ago, Google was using Chromium M41 when crawling. Yes, that means that features that have been supported by all mainstream browsers for years could break the page for Google. While that’s been corrected, it goes to show that making assumptions about support for web technology can sometimes backfire badly.

Sometimes the means of implementation matters

Technical SEOs will want all sorts of bells and whistles in page templates and markup. While most of the time technical SEOs can and should let developers decide how to put this in place, there are cases where Google provides specifications or requirements.

Developers should know where to find these–and how to ask whether the implementation instructions that came with the request from a technical SEO are a requirement, or just wishful thinking.

Some examples of features with recommended or required implementation strategies for search engines include–but are not limited to–the following:

- JavaScript in general

- Image optimization

- Lazy loading

- Multi-language and geotargeted sites

- Preferred Schema.org markup format
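
Multi-language sites are a good example of a feature with a prescribed format: alternate versions are declared with `hreflang` annotations, which can be placed in the page head, in HTTP headers or in a sitemap. The sketch below generates the `<link>` form; the `hreflang_links` function and the URLs are hypothetical, and the real set of language and region codes would come from the SEO team.

```python
from html import escape


def hreflang_links(alternates, x_default=None):
    """Build <link rel="alternate"> tags for each language or region version."""
    links = [
        f'<link rel="alternate" hreflang="{escape(code)}" href="{escape(url)}">'
        for code, url in sorted(alternates.items())
    ]
    if x_default is not None:
        # x-default names the fallback page for users matching no listed locale.
        links.append(
            f'<link rel="alternate" hreflang="x-default" href="{escape(x_default)}">'
        )
    return links


links = hreflang_links(
    {
        "fr-fr": "https://www.example.com/fr/",
        "en-gb": "https://www.example.com/uk/",
    },
    x_default="https://www.example.com/",
)
```

Each version of a page should carry the full set of tags, including one pointing to itself.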

Alternate solutions are possible

In theory, one thing technical SEO and web development have in common is a penchant for data-based, creative problem solving that uses available technologies to achieve a desired result.

When a technical SEO request isn’t possible, look for alternate solutions. Many technical SEOs who are also developers are already proposing workarounds to complex legacy stacks that won’t support certain modifications.

- Last year, Dan Taylor introduced the term Edge SEO to refer to solutions that implement SEO fixes after the page is rendered, but before it is delivered to the client, taking advantage of service workers on CDNs, for example.

- Creative technical SEOs also work with JavaScript, Python, database management and querying, and APIs made available by search engines and SEO tools.
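
The idea behind an edge fix can be illustrated with a small transformation applied to the HTML after the origin has produced it. This is only a sketch of the logic, written in Python for consistency with the other examples here; edge workers on CDNs typically run JavaScript, and `inject_canonical` is a hypothetical function, not an API of any CDN.

```python
import re
from html import escape

HEAD_CLOSE = re.compile(r"</head\s*>", re.IGNORECASE)
CANONICAL = re.compile(r"<link\b[^>]*\brel=[\"']?canonical", re.IGNORECASE)


def inject_canonical(html, canonical_url):
    """Add a canonical declaration to a page that the origin served without one."""
    if CANONICAL.search(html):
        return html  # The origin already declares a canonical URL.
    match = HEAD_CLOSE.search(html)
    if match is None:
        return html  # No </head> found: leave the response untouched.
    tag = f'<link rel="canonical" href="{escape(canonical_url)}">'
    return html[: match.start()] + tag + html[match.start():]


page = "<html><head><title>Blue widgets</title></head><body></body></html>"
patched = inject_canonical(page, "https://www.example.com/widgets/blue?ref=a&v=2")
```

The legacy stack stays unchanged; only the response delivered to the client (and to bots) differs.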

Where no known solutions exist, running responsible tests with measurable results is always an option in SEO. Because Google doesn’t share the details of how it works, technical SEO makes reasonable assumptions based on Google patents, official Google statements, and observed site performance in search results. Running your own tests can be risky in SEO, but it’s also a respected and accepted practice.

Most technical SEO issues: iterative vs critical changes

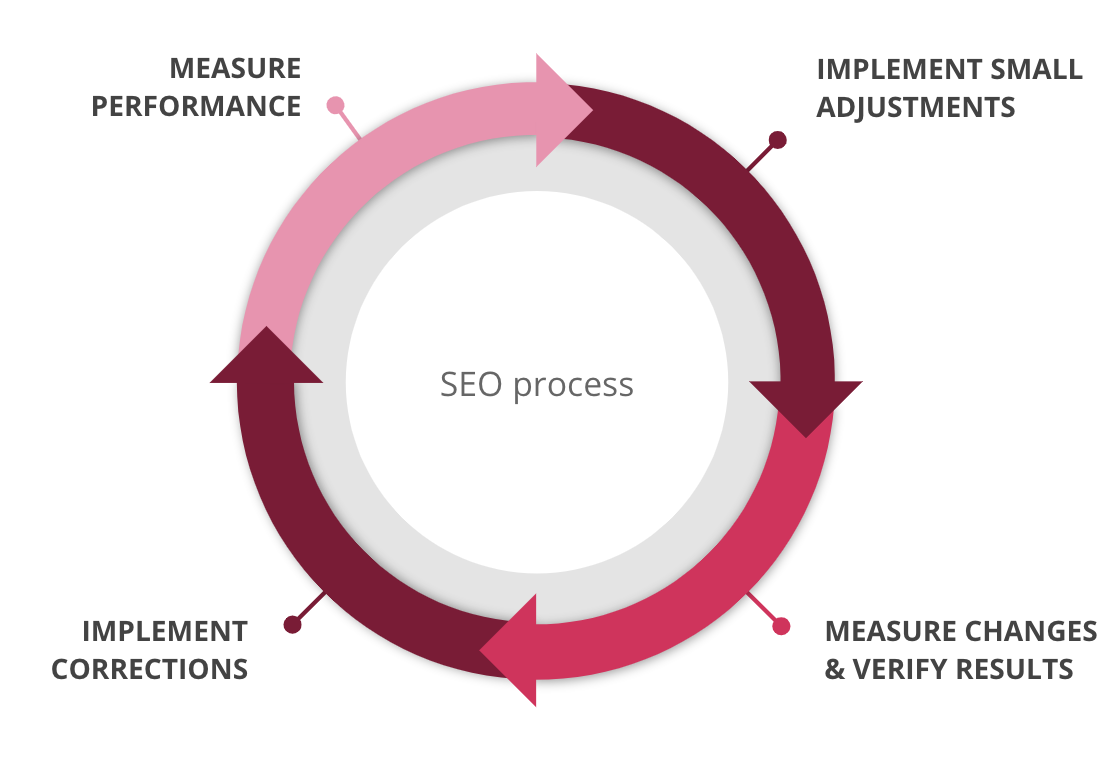

The best SEO work is iterative, and follows a procedure that looks something like this:

This means that asking SEOs to batch requests is reasonable, but failing to set aside regular time to implement SEO changes can push an SEO strategy back significantly. This also means that SEO requests might include rollbacks, or extensions of earlier tests.

SEOs and developers should work together to find a way to batch and schedule regular development requests.

However, some SEO requests really can’t wait. This might include:

- Fixes for errors that remove all or part of the site from search

- Fixes for Google penalties, known as “manual actions”

- Changes required to correct aberrant tool or tracker behavior

- Changes to address major algorithm changes with a direct impact on the site’s performance in search

Stay up to date and get excited about new search features

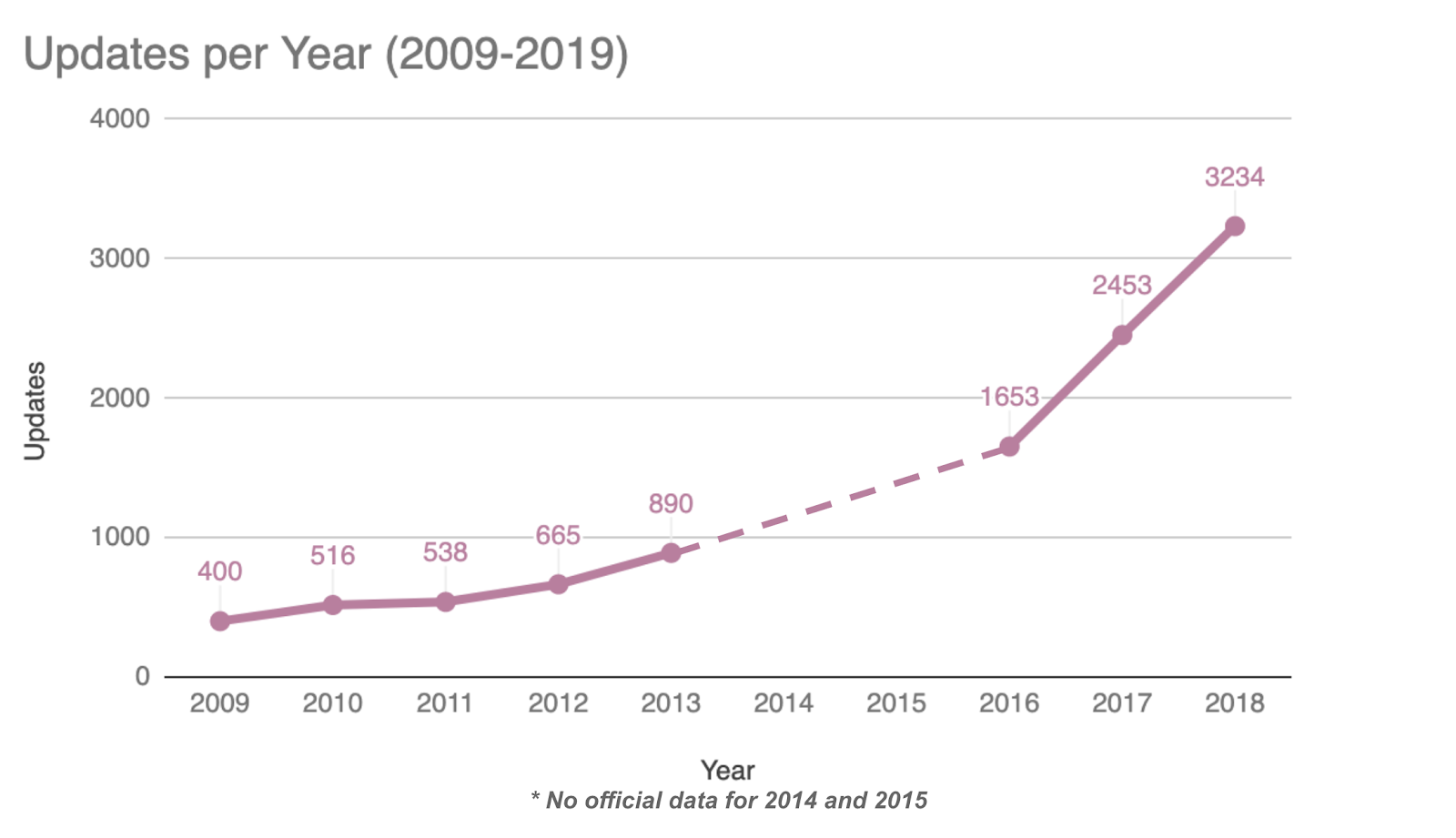

As we just suggested, search is not a static field. It evolves with new technologies, new uses, and new releases by search engines. Google also makes near-constant modifications to their indexing and ranking algorithms (they reported 3,234 changes in 2018), which often lead to changes in how websites should be optimized.

This means that even reliable information that is more than six to twelve months old might no longer be relevant. For example:

- Ranking used to lean heavily on declaring meta keywords for each URL; while on-site search engines still make use of these keywords, SEO no longer does.

- Google used to recommend using <link rel="prev"> and <link rel="next"> declarations on paginated series of URLs in order to avoid flagging these pages as identical, but no longer takes these declarations into account.

But it also means that there are frequently new elements to SEO. New and upcoming elements announced in 2019 include:

- Google’s Evergreen bot means that browser features and, perhaps most importantly, more recent JavaScript, have become accessible to Google. However, JavaScript rendering is still executed separately and at a later time, so recommendations for getting pages with JavaScript indexed are still valid.

- FAQ page markup, for pages with multiple questions and answers, can now give pages a rich result in search

- New guidelines for date usage

- Upcoming support for high-resolution images in search results
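
FAQ markup is a case where the format is specified: it uses the schema.org `FAQPage`, `Question` and `Answer` types, and Google’s documentation recommends JSON-LD for structured data. The sketch below builds that JSON-LD from a list of question and answer pairs; the `faq_jsonld` function and the sample content are hypothetical, and the output would still need to be placed in a `<script type="application/ld+json">` element by the template.

```python
import json


def faq_jsonld(pairs):
    """Serialize (question, answer) pairs as schema.org FAQPage JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, indent=2)


markup = faq_jsonld(
    [
        ("Do you ship abroad?", "Yes, to most European countries."),
        ("Can I return a widget?", "Returns are accepted within 30 days."),
    ]
)
```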

Google also fields questions from SEOs on Twitter and through live webmaster hangouts, and provides information regarding changes and major announcements on the Google Webmaster blog.

Working together towards a mutual understanding

One of the keys to bridging the gap between web development and SEO is mutual respect and communication. While the basics of SEO are important, it’s also important to recognize that developers can pick this knowledge up easily on their own using the information that is already out there.

It is far more productive to understand how SEO works in practice. This includes understanding the importance of access to websites by bots, the implications of technology on search, and how to deal with SEO problems that can’t be fixed as recommended. It also means knowing how the SEO process works, and recognizing that search is evolving at an increasingly rapid rate.

There is also a growing awareness in the SEO community of issues facing web developers. Consequently, columns like Detlef Johnson’s SEO for Developers can find a place in SEO-oriented publications like Search Engine Land, for example. This awareness also feeds the hope among SEOs that web developers will make an effort to understand key elements of SEO.
